Data drift

End-to-End Machine Learning

Joshua Stapleton

Machine Learning Engineer

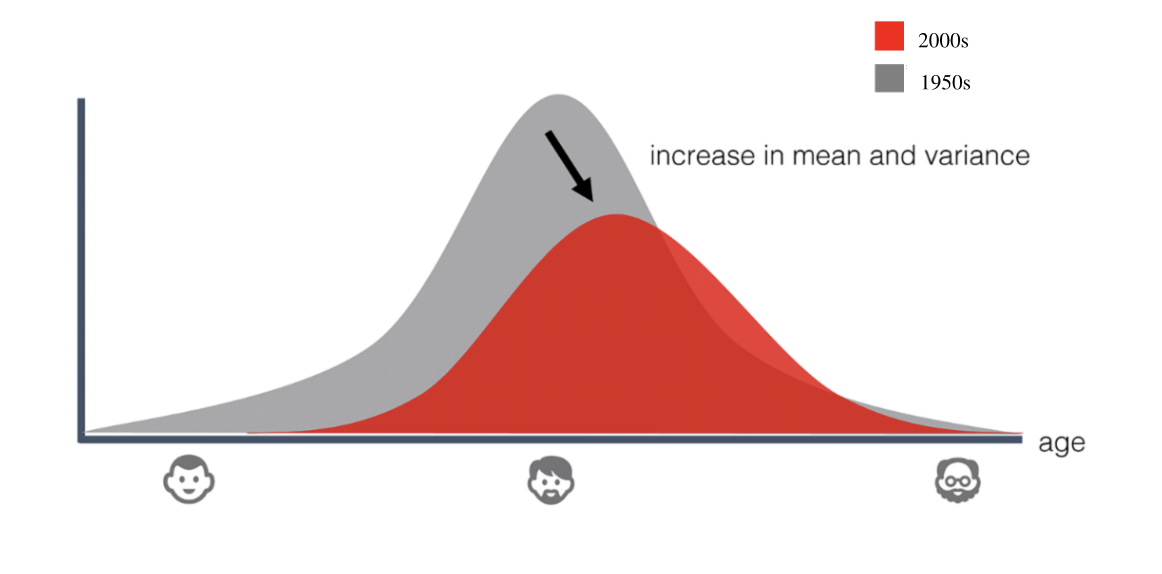

The need for data drift detection

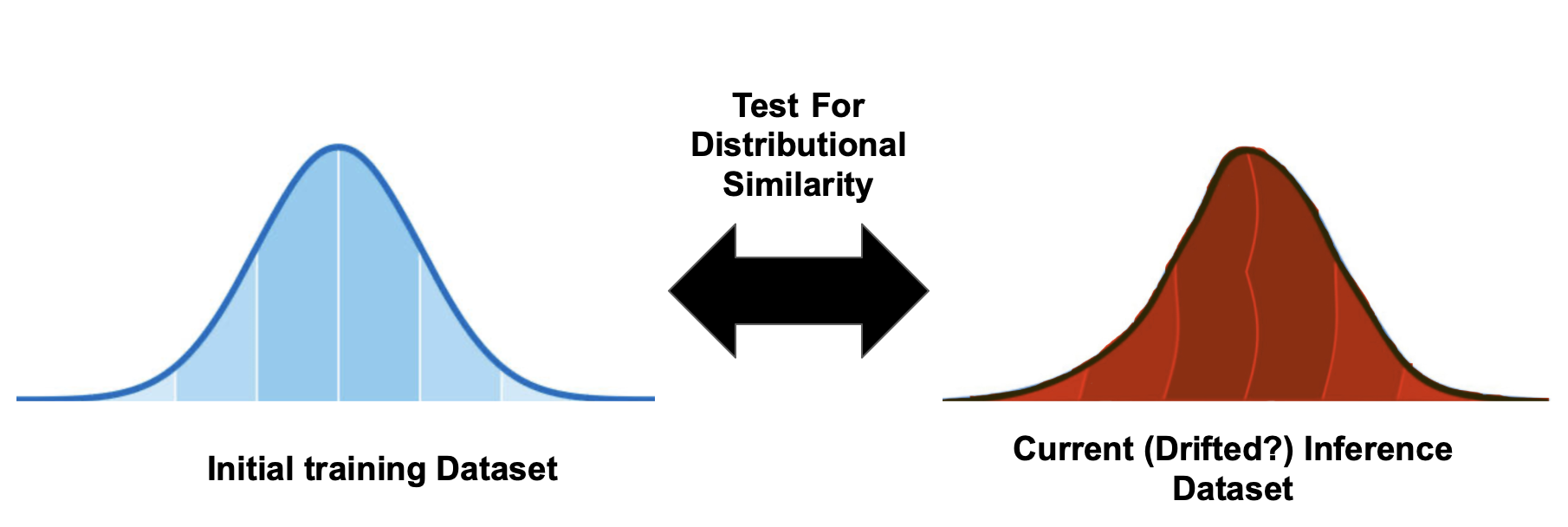

The Kolmogorov-Smirnov test

- Commonly used for detecting data drift

- Compares differences between dataset samples to determine distributional similarity
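For reference, the quantity behind the two-sample test can be written down directly. The notation below ($F_{1,n}$, $F_{2,m}$, $D_{n,m}$) is introduced here for illustration and is not taken from the slides: $F_{1,n}$ and $F_{2,m}$ are the empirical cumulative distribution functions of the two samples, of sizes $n$ and $m$.

$$
F_{1,n}(x) = \frac{1}{n}\sum_{i=1}^{n} \mathbf{1}[X_i \le x], \qquad
F_{2,m}(x) = \frac{1}{m}\sum_{j=1}^{m} \mathbf{1}[Y_j \le x]
$$

$$
D_{n,m} = \sup_{x}\,\bigl|F_{1,n}(x) - F_{2,m}(x)\bigr|
$$

A large $D_{n,m}$ means the two empirical distributions are far apart somewhere along the value range, which is evidence against the samples sharing one distribution.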

Using the ks_2samp() function

- The ks_2samp() function returns two values: the test statistic and the p-value.

- Use the p-value to decide whether to reject the null hypothesis that both samples come from the same distribution.

    from scipy.stats import ks_2samp

    # Load the 1D data distribution samples for comparison
    sample_1, sample_2 = training_dataset_sample, current_inference_sample

    # Perform the KS test; inputs should be 1D array-likes such as NumPy arrays
    test_statistic, p_value = ks_2samp(sample_1, sample_2)

    if p_value < 0.05:
        print("Reject null hypothesis - data drift might be occurring")
    else:
        print("Fail to reject null hypothesis - no significant evidence of drift")
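To see the test behave on known inputs, here is a minimal sketch that runs it on synthetic data. The sample names, the `detect_drift` helper, the 0.5 mean shift, and the sample sizes are all assumptions made up for this illustration; only `ks_2samp` and the 0.05 threshold come from the material above.

```python
import numpy as np
from scipy.stats import ks_2samp

rng = np.random.default_rng(seed=0)

# Hypothetical stand-ins for one training feature and two inference batches
training_sample = rng.normal(loc=0.0, scale=1.0, size=2000)
stable_batch = rng.normal(loc=0.0, scale=1.0, size=2000)   # same distribution
shifted_batch = rng.normal(loc=0.5, scale=1.0, size=2000)  # mean has drifted


def detect_drift(reference, current, alpha=0.05):
    """Return (drift_flag, statistic, p_value) for two 1D samples."""
    statistic, p_value = ks_2samp(reference, current)
    return bool(p_value < alpha), float(statistic), float(p_value)


for name, batch in [("stable", stable_batch), ("shifted", shifted_batch)]:
    drifted, statistic, p_value = detect_drift(training_sample, batch)
    print(f"{name}: statistic={statistic:.3f}, p-value={p_value:.3g}, drift={drifted}")
```

Note that with a 0.05 threshold, a batch drawn from the unchanged distribution is still flagged about 5% of the time, so a single rejection is a prompt to investigate rather than proof of drift.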

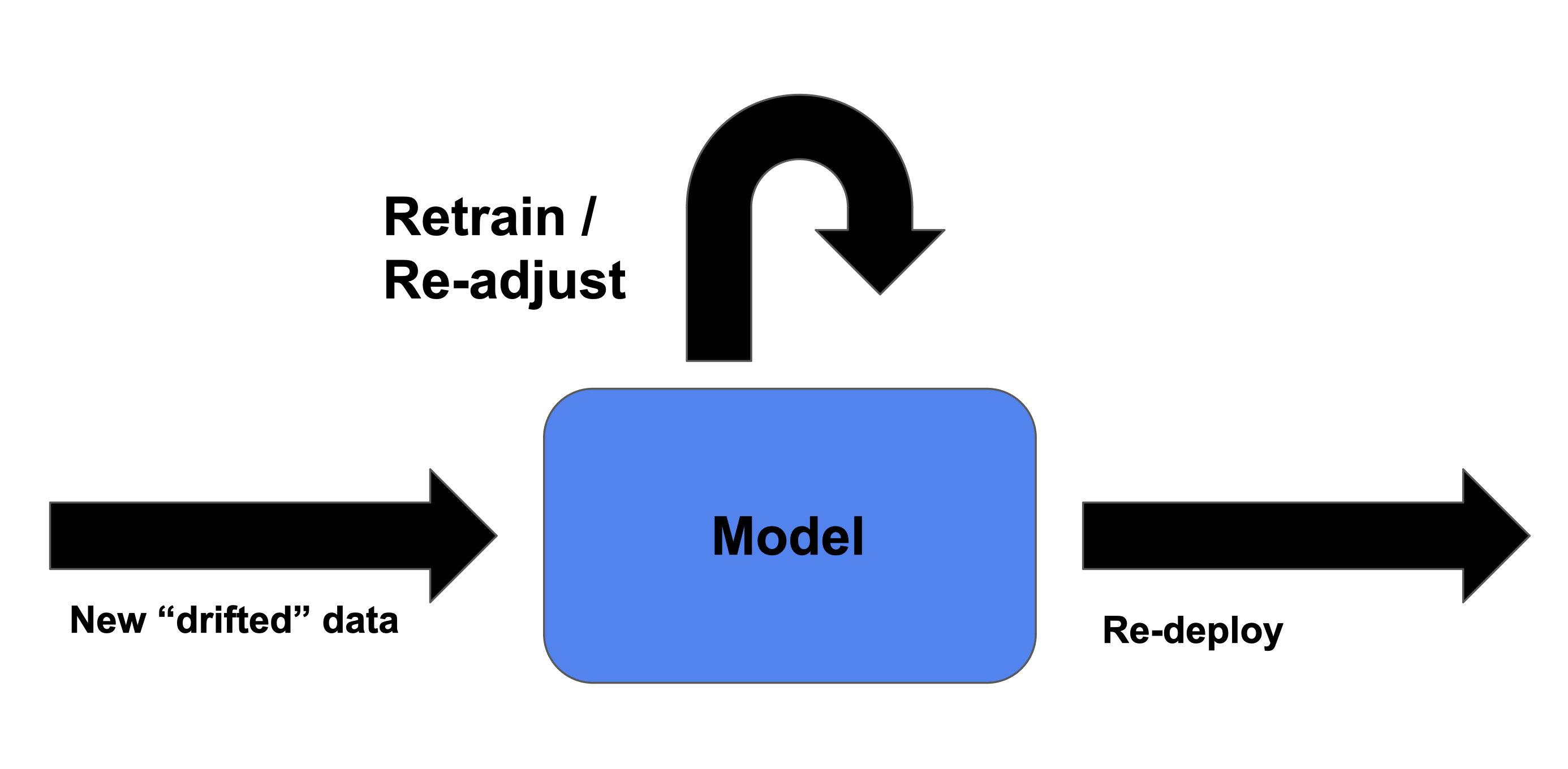

Correcting data drift

Update model to account for new data

- Retrain model

- Re-adjust / update model parameters

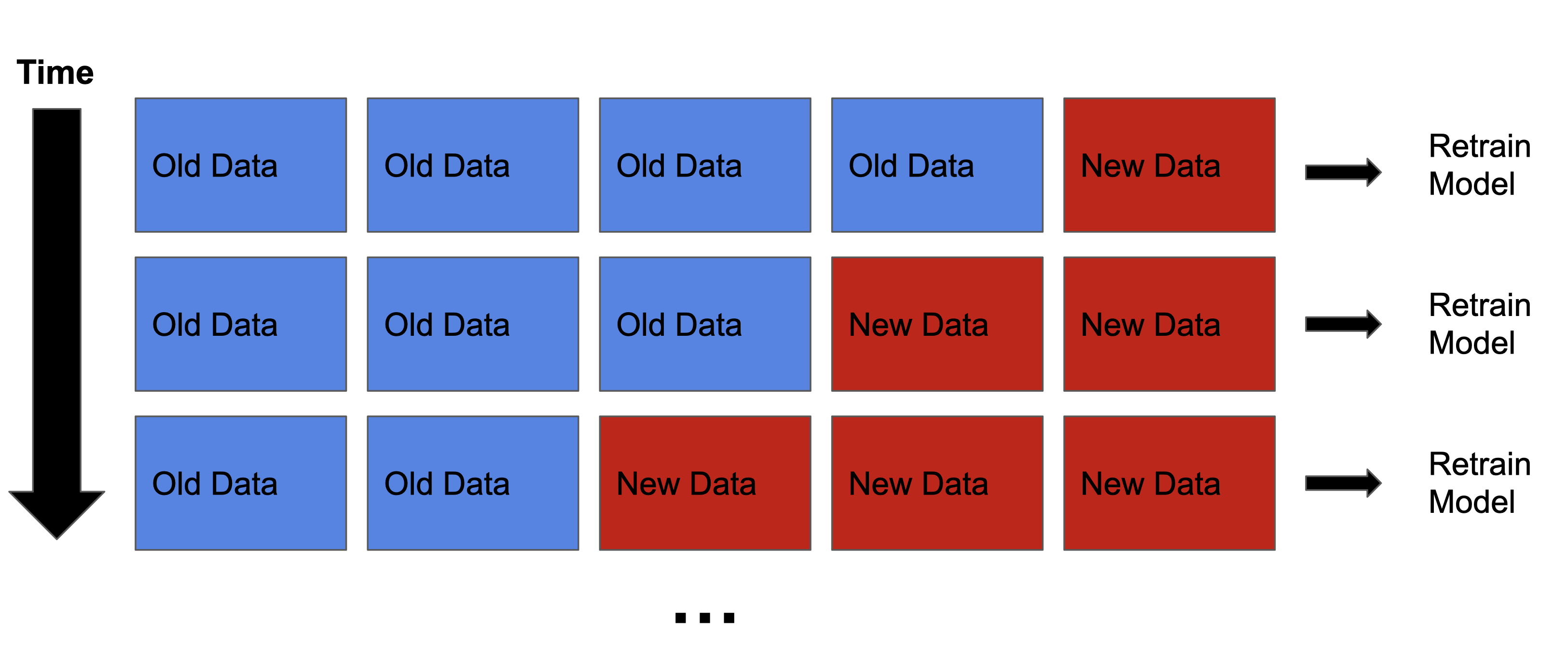

Not enough new/inference data?

- Re-train model on mixed dataset

- Collect more new data
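One way to build a mixed dataset is sketched below, assuming the old and new data are NumPy arrays with matching columns. The function name `build_mixed_dataset`, the `old_fraction` parameter, and the synthetic arrays are hypothetical choices for this illustration, not something prescribed by the course.

```python
import numpy as np


def build_mixed_dataset(X_old, y_old, X_new, y_new, old_fraction=0.5, seed=0):
    """Combine all new rows with a random subset of the old rows."""
    rng = np.random.default_rng(seed)

    # Keep a random fraction of the original training rows
    n_old_keep = int(round(old_fraction * len(X_old)))
    keep_idx = rng.choice(len(X_old), size=n_old_keep, replace=False)

    X_mixed = np.concatenate([X_old[keep_idx], X_new])
    y_mixed = np.concatenate([y_old[keep_idx], y_new])

    # Shuffle so old and new rows are interleaved
    order = rng.permutation(len(X_mixed))
    return X_mixed[order], y_mixed[order]


# Hypothetical data: plenty of old rows, only a few drifted new rows
rng = np.random.default_rng(1)
X_old, y_old = rng.normal(0.0, 1.0, size=(1000, 3)), rng.integers(0, 2, size=1000)
X_new, y_new = rng.normal(0.5, 1.0, size=(100, 3)), rng.integers(0, 2, size=100)

X_mixed, y_mixed = build_mixed_dataset(X_old, y_old, X_new, y_new, old_fraction=0.3)
print(X_mixed.shape, y_mixed.shape)
# The mixed arrays can then be passed to whatever training routine is in use.
```

Lowering `old_fraction` gives the new data more weight in the retrained model, at the cost of a smaller training set overall.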

Further resources for detecting and rectifying data drift

Population Stability Index (PSI)

- Compares the distribution of a single categorical variable (column) between two datasets
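As a rough sketch, PSI for one categorical column sums, over the categories, the difference in category share between the two datasets multiplied by the log of their ratio. The function name, the `epsilon` floor for categories missing from one sample, and the example data are assumptions made for this illustration.

```python
import math
from collections import Counter


def population_stability_index(expected, actual, epsilon=1e-6):
    """PSI between two samples of one categorical column.

    epsilon is an assumed floor that keeps the logarithm finite when a
    category is missing from one of the samples.
    """
    expected_counts = Counter(expected)
    actual_counts = Counter(actual)
    categories = set(expected_counts) | set(actual_counts)

    psi = 0.0
    for category in categories:
        expected_share = max(expected_counts[category] / len(expected), epsilon)
        actual_share = max(actual_counts[category] / len(actual), epsilon)
        psi += (actual_share - expected_share) * math.log(actual_share / expected_share)
    return psi


# Hypothetical device-type column at training time and at inference time
training_column = ["mobile"] * 60 + ["desktop"] * 30 + ["tablet"] * 10
inference_column = ["mobile"] * 40 + ["desktop"] * 40 + ["tablet"] * 20

print(f"PSI: {population_stability_index(training_column, inference_column):.3f}")
```

Identical category shares give a PSI of 0, and the value grows as the shares move apart.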

Evidently

- Open-source Python library

- Test for and report on data drift

NannyML

- Monitor deployed model performance

Let's practice!
