Model evaluation and visualization

End-to-End Machine Learning

Joshua Stapleton

Machine Learning Engineer

Accuracy

- Choosing the right accuracy metric is vital to robust model evaluation

- A poorly chosen metric makes results easy to misinterpret, or can obscure them entirely

Standard accuracy:

- Standard accuracy = num correct answers / num answers

- Standard accuracy can be misleading when the classes are imbalanced

Example:

# Always predicting 'positive' achieves 99% accuracy on an imbalanced
# dataset of 99 positive and 1 negative patients
predictions = []
for patient_datapoint in heart_disease_dataset:
    predictions.append('positive')  # ignores the patient's data entirely

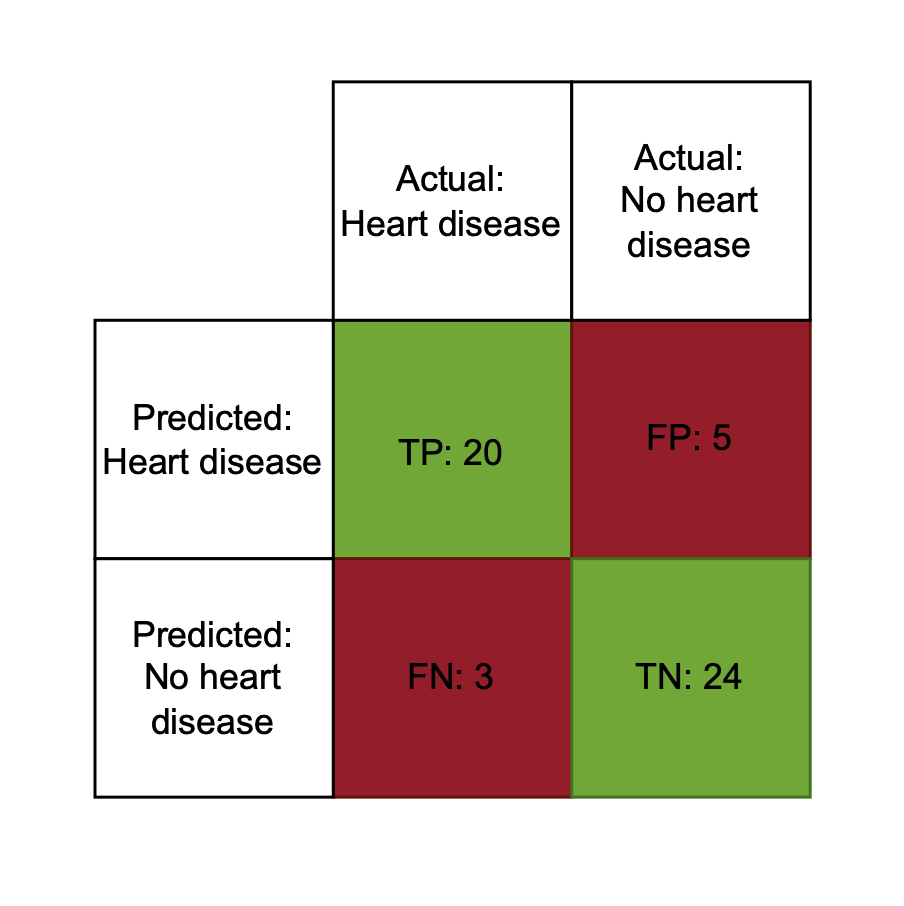
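To check the arithmetic of the example, here is a minimal runnable sketch using hypothetical labels (1 = heart disease, 0 = no heart disease) in place of heart_disease_dataset. It computes standard accuracy both by the formula above and with sklearn's accuracy_score.

```python
from sklearn.metrics import accuracy_score

# Hypothetical labels: 99 patients with heart disease (1), 1 without (0)
y_true = [1] * 99 + [0]

# A trivial "model" that predicts heart disease for every patient
y_pred = [1] * len(y_true)

# Standard accuracy = num correct answers / num answers
num_correct = sum(t == p for t, p in zip(y_true, y_pred))
manual_accuracy = num_correct / len(y_true)

print(f"Manual accuracy: {manual_accuracy:.2f}")                  # Manual accuracy: 0.99
print(f"sklearn accuracy: {accuracy_score(y_true, y_pred):.2f}")  # sklearn accuracy: 0.99
```

The score looks excellent even though the model never identifies the one healthy patient.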

Confusion matrix

True positives (TP)

- Model prediction = actual classification = positive

- The model predicted heart disease, the patient had heart disease

False positives (FP)

- Model prediction = positive, actual classification = negative

- The model predicted heart disease, the patient did not have heart disease

False negatives (FN)

- Model prediction = negative, actual classification = positive

- The model predicted no heart disease, the patient had heart disease

True negatives (TN)

- Model prediction = actual classification = negative

- The model predicted no heart disease, the patient did not have heart disease
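The four counts above can be read off with sklearn's confusion_matrix. The sketch below uses eight hypothetical patients (1 = heart disease, 0 = no heart disease); for binary labels [0, 1], flattening the matrix with ravel() yields the counts in the order TN, FP, FN, TP.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical labels for 8 patients: 1 = heart disease, 0 = no heart disease
y_true = [1, 1, 1, 0, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]

# For binary labels [0, 1], ravel() returns the counts in the order TN, FP, FN, TP
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()
print(f"TP={tp}, FP={fp}, FN={fn}, TN={tn}")  # TP=4, FP=1, FN=1, TN=2
```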

Balanced accuracy

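- Often a better metric than plain accuracy for binary classification, especially when the classes are imbalanced

- Averages the recall of each class (true positive rate and true negative rate), so both classes count equally regardless of their size

- Balanced accuracy = (TP / (TP + FN) + TN / (TN + FP)) / 2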

from sklearn.metrics import balanced_accuracy_score

# Assumes a fitted model and held-out test data (X_test, y_test)
y_pred = model.predict(X_test)

bal_accuracy = balanced_accuracy_score(y_test, y_pred)
print(f"Balanced Accuracy: {bal_accuracy:.2f}")

Balanced Accuracy: 0.85
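Applying the balanced accuracy formula to the earlier always-positive example shows why it is harder to fool. The sketch below uses the same hypothetical 99-positive, 1-negative labels and checks a manual calculation against balanced_accuracy_score.

```python
from sklearn.metrics import balanced_accuracy_score, confusion_matrix

# Hypothetical labels: 99 patients with heart disease (1), 1 without (0),
# and a "model" that always predicts heart disease
y_true = [1] * 99 + [0]
y_pred = [1] * 100

tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

# Balanced accuracy = mean of true positive rate and true negative rate
tpr = tp / (tp + fn)  # 99 / 99 = 1.0
tnr = tn / (tn + fp)  # 0 / 1 = 0.0
manual = (tpr + tnr) / 2

print(f"Manual: {manual:.2f}")                                    # Manual: 0.50
print(f"sklearn: {balanced_accuracy_score(y_true, y_pred):.2f}")  # sklearn: 0.50
```

Where standard accuracy reported 0.99, balanced accuracy drops to 0.50, which is what random guessing would score.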

Confusion matrix usage

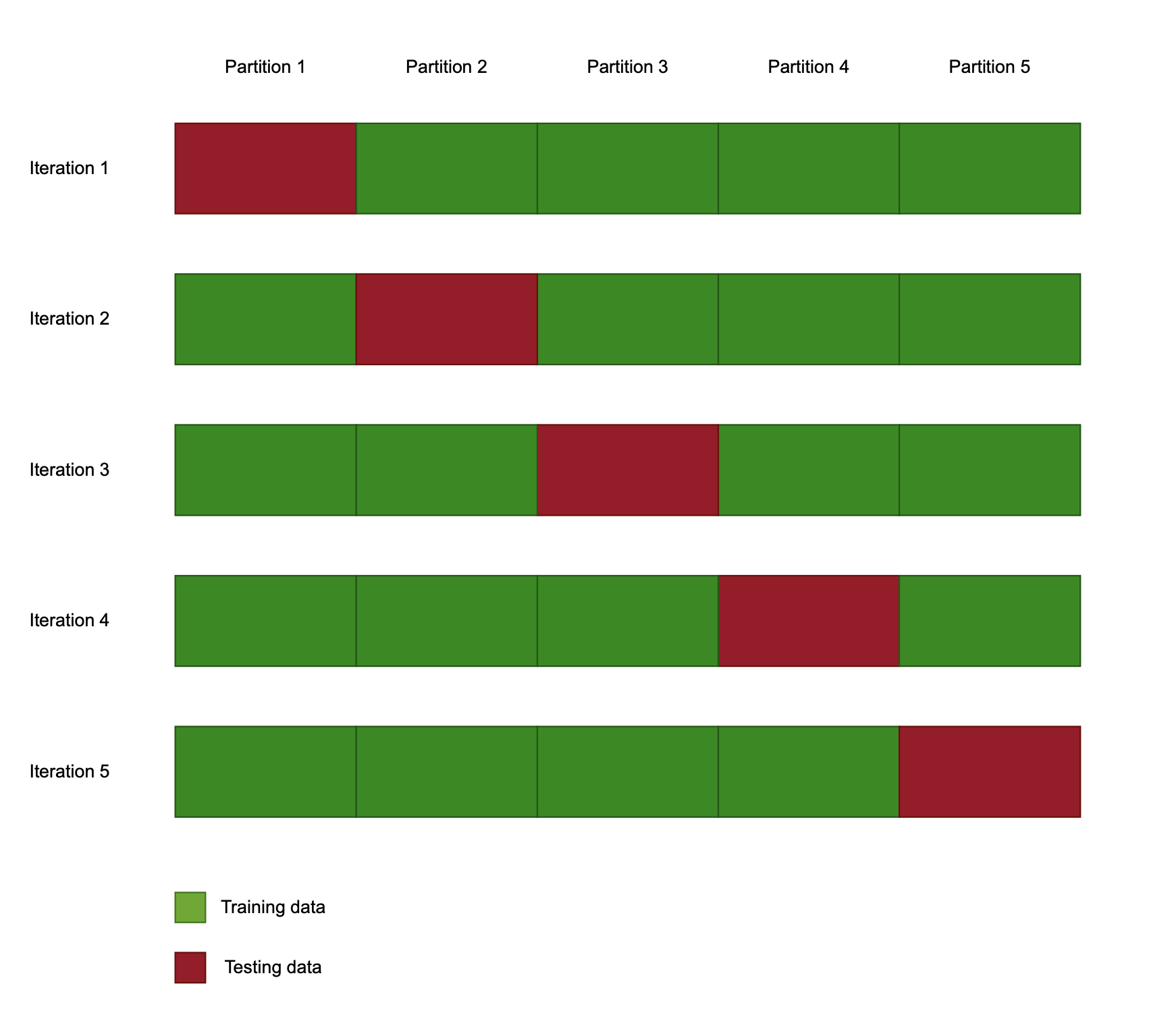

Cross-validation

Cross-validation

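- Resampling procedure: the model is trained and evaluated on several different train/test splits of the same dataset

- Averaging over the splits gives a more robust performance estimate than a single train/test split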

k-fold cross-validation

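- Parameter 'k' = number of folds (roughly equal-sized parts) the dataset is split into

- Each fold is used once as the test set while the remaining k - 1 folds form the training set, giving k modeling runs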
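A minimal sketch of how the folds rotate, using sklearn's KFold on ten toy samples (the toy array is an assumption for illustration). With k = 5, each run trains on 8 samples and tests on 2, and every sample lands in a test set exactly once.

```python
import numpy as np
from sklearn.model_selection import KFold

X = np.arange(10).reshape(-1, 1)  # 10 toy samples, one feature each
kfold = KFold(n_splits=5, shuffle=True, random_state=42)

# Count how often each sample appears in a test fold
test_counts = np.zeros(len(X), dtype=int)
for fold, (train_idx, test_idx) in enumerate(kfold.split(X)):
    print(f"Fold {fold}: train={len(train_idx)} samples, test={len(test_idx)} samples")
    test_counts[test_idx] += 1

# Every sample is used as test data exactly once across the 5 folds
print(test_counts)
```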

Cross-validation usage

- sklearn provides a straightforward implementation of k-fold cross-validation

- Model-agnostic: works with any sklearn estimator and any supported scoring metric

Usage:

from sklearn.model_selection import cross_val_score, KFold

# Split the data into 5 folds, shuffling before splitting
kfold = KFold(n_splits=5, shuffle=True, random_state=42)

# Get the cross-validated balanced accuracy for a given model
cv_results = cross_val_score(model, heart_disease_X, heart_disease_y, cv=kfold, scoring='balanced_accuracy')
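To illustrate the model-agnostic point, here is a self-contained sketch that runs the same cross_val_score call on two different estimators. The heart disease data is not available here, so a synthetic imbalanced dataset from make_classification (roughly 90% / 10% classes) stands in for heart_disease_X and heart_disease_y; the choice of models is likewise an assumption.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for heart_disease_X / heart_disease_y (~90% / ~10% classes)
heart_disease_X, heart_disease_y = make_classification(
    n_samples=500, n_features=8, weights=[0.9], random_state=42
)

kfold = KFold(n_splits=5, shuffle=True, random_state=42)

# The same cross-validation call works for any sklearn estimator
models = {
    "logistic_regression": LogisticRegression(max_iter=200),
    "decision_tree": DecisionTreeClassifier(random_state=42),
}

cv_scores = {}
for name, model in models.items():
    scores = cross_val_score(
        model, heart_disease_X, heart_disease_y, cv=kfold, scoring="balanced_accuracy"
    )
    cv_scores[name] = scores
    print(f"{name}: {scores.mean():.3f} (+/- {scores.std():.3f})")
```

cross_val_score returns one score per fold, so reporting the mean together with the standard deviation shows both typical performance and how much it varies between splits.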

Hyperparameter tuning

Hyperparameter:

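- Model setting chosen before training; unlike the model's weights, it is not learned from the data and doesn't change during training

- Adjusted between training runs to improve model performance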
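To make the distinction concrete, this sketch uses LogisticRegression on synthetic data (an assumption for illustration): the hyperparameter C is fixed in the constructor and is unchanged by fit(), while the coefficients in coef_ are learned during training.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic data for illustration
X, y = make_classification(n_samples=200, n_features=4, random_state=0)

model = LogisticRegression(C=0.1, max_iter=200)
print(model.get_params()["C"])  # 0.1, set before training

model.fit(X, y)
print(model.get_params()["C"])  # still 0.1 after training
print(model.coef_)              # learned parameters: one weight per feature
```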

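from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

# Hyperparameters to test (C is the inverse of the regularization strength)
C_values = [0.001, 0.01, 0.1, 1, 10, 100, 1000]

# Manually iterate over the hyperparameters (kfold is the splitter defined earlier)
for C in C_values:
    model = LogisticRegression(max_iter=200, C=C)
    # cross_val_score clones and refits the model on each fold,
    # so no separate model.fit() call is needed
    scores = cross_val_score(model, X, y, cv=kfold, scoring='balanced_accuracy')
    print(f"C = {C}: Bal Acc: {scores.mean():.4f} (+/- {scores.std():.4f})")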

Hyperparameter tuning example

Example output for hyperparameter tuning:

C = 0.001: Bal Acc: 0.6200 (+/- 0.0215)
C = 0.01: Bal Acc: 0.7325 (+/- 0.0234)
C = 0.1: Bal Acc: 0.7923 (+/- 0.0202)
C = 1: Bal Acc: 0.8050 (+/- 0.0191)
C = 10: Bal Acc: 0.8034 (+/- 0.0185)
C = 100: Bal Acc: 0.8021 (+/- 0.0187)
C = 1000: Bal Acc: 0.8017 (+/- 0.0188)
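A self-contained sketch of the same loop that also keeps the best-scoring value of C. Synthetic imbalanced data from make_classification stands in for the real dataset, so the printed scores will not match the example output above.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

# Synthetic stand-in data; the real heart disease dataset is not included here
X, y = make_classification(n_samples=500, n_features=8, weights=[0.8], random_state=0)
kfold = KFold(n_splits=5, shuffle=True, random_state=42)

C_values = [0.001, 0.01, 0.1, 1, 10, 100, 1000]
mean_scores = {}
for C in C_values:
    model = LogisticRegression(max_iter=200, C=C)
    scores = cross_val_score(model, X, y, cv=kfold, scoring="balanced_accuracy")
    mean_scores[C] = scores.mean()

# Keep the hyperparameter value with the highest mean balanced accuracy
best_C = max(mean_scores, key=mean_scores.get)
print(f"Best C = {best_C}: Bal Acc: {mean_scores[best_C]:.4f}")
```

Once the best C is chosen, a common next step is to refit the model with that value on all of the training data before evaluating it on a held-out test set.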

Let's practice!
