Feature engineering and selection

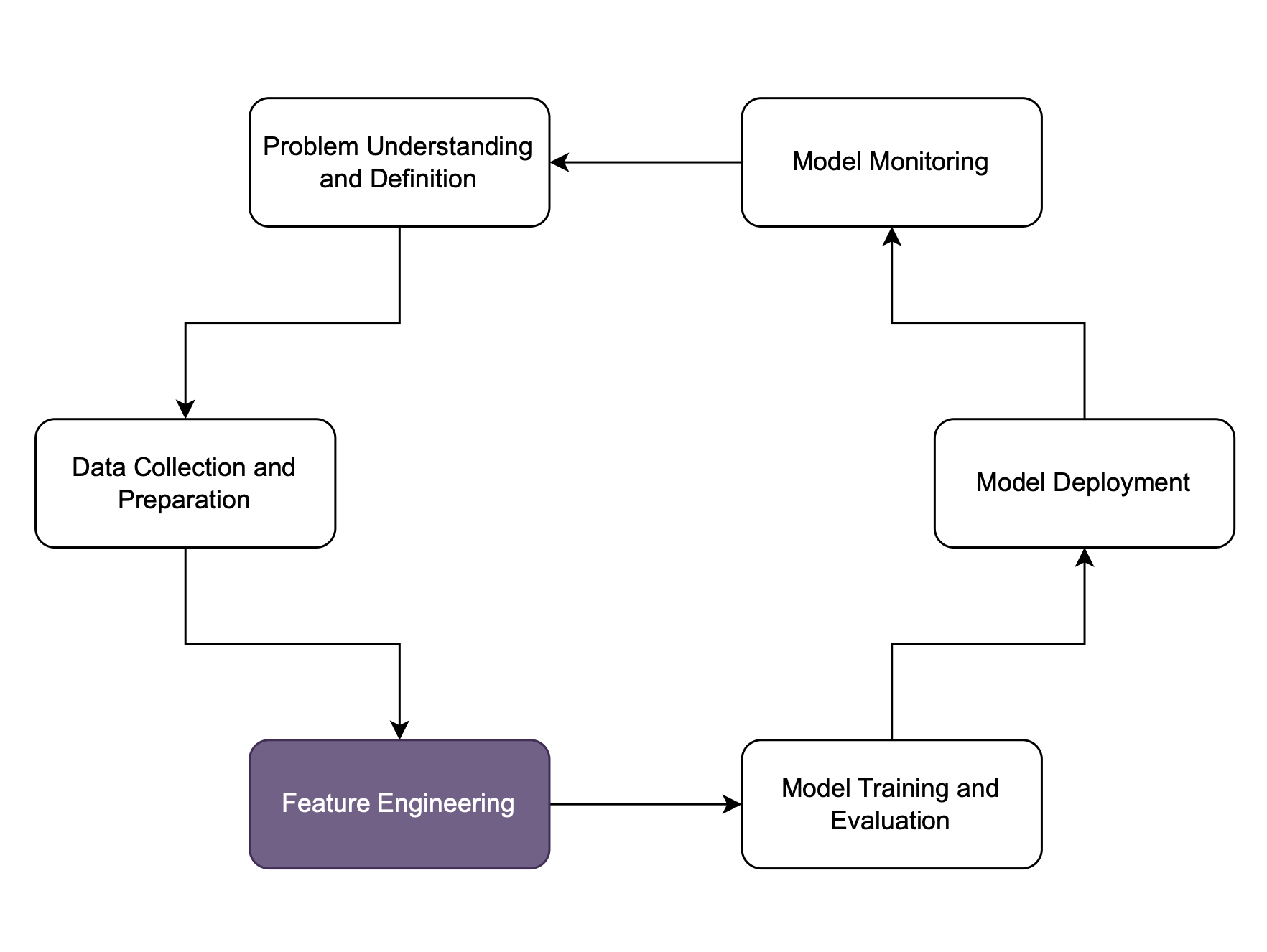

End-to-End Machine Learning

Joshua Stapleton

Machine Learning Engineer

Feature engineering

Creating features

- Simplifies problem

- Improves model efficiency

Techniques

- Modify pre-existing features

- Design new features

Benefits

- Easier deployment, maintenance, training

- Interpretability gain
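As a minimal sketch of the two techniques above, the snippet below rescales an existing feature and designs a new one with pandas. The column names (`height_cm`, `weight_kg`, `height_m`, `bmi`) and all values are hypothetical, chosen only for illustration.

```python
import pandas as pd

# Hypothetical patient data; columns and values are illustrative only
patients = pd.DataFrame({
    "height_cm": [160.0, 175.0, 182.0],
    "weight_kg": [55.0, 80.0, 95.0],
})

# Modify a pre-existing feature: express height in metres instead of centimetres
patients["height_m"] = patients["height_cm"] / 100

# Design a new feature: body mass index combines two raw columns into one
patients["bmi"] = patients["weight_kg"] / patients["height_m"] ** 2

print(patients[["height_m", "weight_kg", "bmi"]].round(2))
```

A single derived column such as `bmi` can replace two raw inputs, which is one way feature engineering simplifies the problem for the model.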

Normalization

- Scales numeric features to a common range, typically [0, 1] (min-max scaling)

- Helpful when features have different scales/ranges.

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import Normalizer

# Split the data

X_train, X_test = train_test_split(df, test_size=0.2, random_state=42)

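# Create a Normalizer object, fit it on the training data and transform it, then transform the test set

# Note: Normalizer rescales each row (sample) to unit norm; MinMaxScaler scales each feature to [0, 1]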

norm = Normalizer()

X_train_norm = norm.fit_transform(X_train)

X_test_norm = norm.transform(X_test)
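scikit-learn's `Normalizer` rescales each row (sample) to unit norm rather than each column to [0, 1]. If per-feature scaling to [0, 1] is the goal, `MinMaxScaler` is the usual choice. A minimal sketch, assuming a small made-up array in place of `df`:

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler

# Made-up data: two features on very different scales
X = np.array([[1.0, 100.0], [2.0, 300.0], [3.0, 200.0],
              [4.0, 500.0], [5.0, 400.0]])

X_train, X_test = train_test_split(X, test_size=0.2, random_state=42)

# Fit on the training data only, then reuse the fitted scaler on the test set
scaler = MinMaxScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_test_scaled = scaler.transform(X_test)

# Each training column now spans [0, 1]; test values may fall outside that range
print(X_train_scaled.min(axis=0), X_train_scaled.max(axis=0))
```

Because the minimum and maximum come from the training data, test values outside the training range map outside [0, 1]; that is expected and does not mean the scaler should be refit.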

Standardization

- Scales data to have mean = 0, variance = 1

- Beneficial for algorithms that assume features are centered with comparable variance (e.g., SVMs, regularized linear models)

from sklearn.preprocessing import StandardScaler

# Split the data

X_train, X_test = train_test_split(df, test_size=0.2, random_state=42)

# Create a scaler object, fit it on the training data, and standardize the training data

sc = StandardScaler()

X_train_stzd = sc.fit_transform(X_train)

# Only transform the test data (no refitting), reusing the training mean and variance

X_test_stzd = sc.transform(X_test)
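For intuition, standardization applies z = (x - mean) / std to each feature, with the mean and standard deviation taken from the training data. Below is a minimal pure-Python sketch for a single feature, using made-up values. It uses the population standard deviation, which is also what `StandardScaler` uses.

```python
from statistics import fmean, pstdev

# Made-up values of a single feature
train = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
test = [3.0, 10.0]

# Statistics come from the training data only
mu = fmean(train)
sigma = pstdev(train)  # population standard deviation


def standardize(values):
    """Apply z = (x - mu) / sigma using the training statistics."""
    return [(x - mu) / sigma for x in values]


train_stzd = standardize(train)
test_stzd = standardize(test)

print(fmean(train_stzd), pstdev(train_stzd))
print(test_stzd)
```

The standardized training values have mean 0 and standard deviation 1 by construction. The test values generally do not, because they are shifted and scaled with the training statistics.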

What constitutes a good feature?

- Use relevant features

  - Weather on the day of a patient's appointment should have no bearing on the diagnosis

- Use dissimilar (orthogonal) features

  - Age in months and age in years carry the same information, so including both adds nothing
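One simple way to spot redundant features like the age example is to look for pairs with very high pairwise correlation. The sketch below is illustrative only: the column names, the values, and the 0.95 threshold are all hypothetical, and linear correlation is just one possible measure of redundancy.

```python
import pandas as pd

# Hypothetical features; age_months is just age_years * 12
df = pd.DataFrame({
    "age_years": [34, 51, 47, 62, 29],
    "resting_bp": [118, 140, 122, 135, 128],
})
df["age_months"] = df["age_years"] * 12

# Absolute pairwise Pearson correlations between features
corr = df.corr().abs()

# List feature pairs whose correlation exceeds a (hypothetical) threshold
threshold = 0.95
cols = list(corr.columns)
redundant = [
    (a, b)
    for i, a in enumerate(cols)
    for b in cols[i + 1:]
    if corr.loc[a, b] > threshold
]
print(redundant)
```

For each flagged pair, one of the two columns can usually be dropped without losing information.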

sklearn.feature_selection

from sklearn.ensemble import RandomForestClassifier

from sklearn.feature_selection import SelectFromModel

from sklearn.model_selection import train_test_split

# Split the data into train and test subsets first to avoid data leakage

X_train, X_test, y_train, y_test = train_test_split(heart_disease_df_X, heart_disease_df_y, test_size=0.2, random_state=42)

sklearn.feature_selection (cont.)

# Define and fit the random forest model

rf = RandomForestClassifier(n_jobs=-1, class_weight='balanced', max_depth=5)

rf.fit(X_train, y_train)

# Define and run feature selection on the already-fitted model

model = SelectFromModel(rf, prefit=True)

features_bool = model.get_support()

# Index the columns the model was trained on, so the boolean mask lines up

features = X_train.columns[features_bool]
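To show how the selection mask might be applied afterwards, here is a minimal end-to-end sketch. It uses synthetic data from `make_classification` as a stand-in for the heart disease dataset; the sample and feature counts and the added `random_state` on the forest are assumptions made for reproducibility, not part of the original slides.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectFromModel
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the heart disease data (sizes are arbitrary)
X, y = make_classification(n_samples=300, n_features=10, n_informative=3,
                           n_redundant=0, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)

rf = RandomForestClassifier(n_jobs=-1, class_weight="balanced",
                            max_depth=5, random_state=42)
rf.fit(X_train, y_train)

# prefit=True reuses the already-fitted forest; by default, features whose
# importance is at or above the mean importance are kept
selector = SelectFromModel(rf, prefit=True)
mask = selector.get_support()

# Apply the same column mask to both subsets
X_train_sel = selector.transform(X_train)
X_test_sel = selector.transform(X_test)

print(mask.sum(), X_train_sel.shape, X_test_sel.shape)
```

Since the forest was fit on the training split only, the test set plays no part in deciding which features are kept.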

Let's practice!
