Overview of Lakehouse AI

Databricks Concepts

Kevin Barlow

Data Practitioner

Lakehouse AI

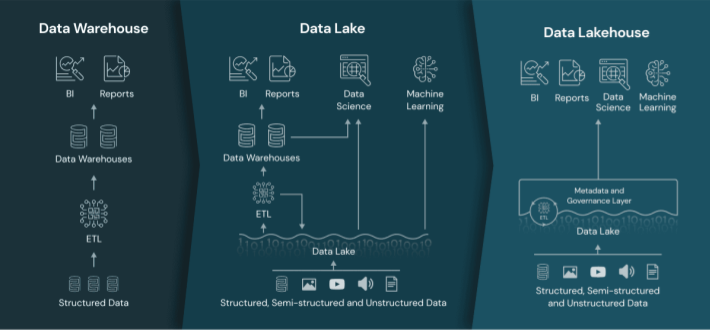

Why the Lakehouse for AI / ML?

- Reliable data and files in Delta Lake

- Highly scalable compute

- Open standards, libraries, and frameworks

- Unification with other data teams

Source: https://www.databricks.com/blog/2020/01/30/what-is-a-data-lakehouse.html

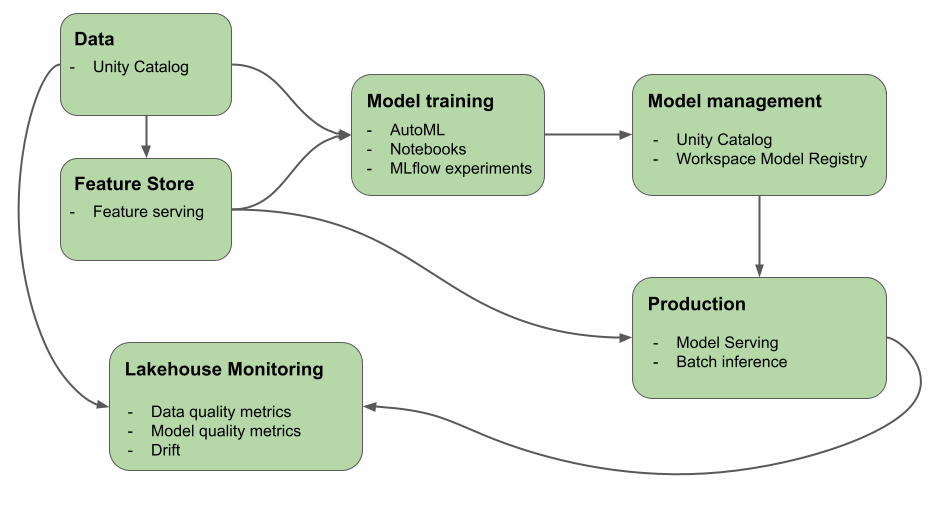

MLOps Lifecycle

MLOps in the Lakehouse

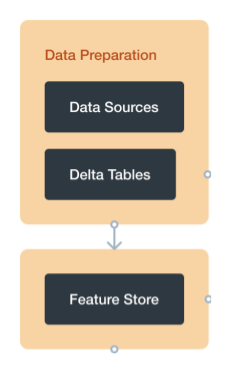

DataOps

- Integrating data across different sources (Auto Loader)

- Transforming data into a usable, clean format (Delta Live Tables)

- Creating useful features for models (Feature Store)
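
As a rough illustration of the ingestion step, the sketch below shows how incremental file ingestion with Auto Loader might look in PySpark. The function names, paths, table name, and the choice of JSON input are assumptions made for illustration. `cloudFiles` is the Auto Loader streaming source, and the code expects a Databricks `spark` session to be passed in.

```python
from typing import Dict


def auto_loader_options(file_format: str, schema_location: str) -> Dict[str, str]:
    """Build reader options for Auto Loader (the `cloudFiles` source)."""
    return {
        # Format of the incoming files, e.g. "json", "csv", "parquet".
        "cloudFiles.format": file_format,
        # Where Auto Loader stores the inferred schema between runs.
        "cloudFiles.schemaLocation": schema_location,
    }


def ingest_raw_events(spark, source_path: str, schema_location: str):
    """Return a streaming DataFrame that picks up new files incrementally.

    `spark` is assumed to be an active SparkSession on Databricks.
    """
    return (
        spark.readStream.format("cloudFiles")
        .options(**auto_loader_options("json", schema_location))
        .load(source_path)
    )


def write_to_table(stream_df, checkpoint_location: str, table_name: str):
    """Append the stream to a table, processing available files and then stopping."""
    return (
        stream_df.writeStream
        .option("checkpointLocation", checkpoint_location)
        .trigger(availableNow=True)
        .toTable(table_name)
    )
```

In a notebook this could be called as `write_to_table(ingest_raw_events(spark, "/landing/events", "/schemas/events"), "/checkpoints/events", "bronze_events")`, where every path and the table name are hypothetical.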

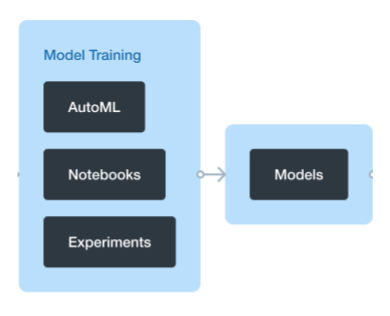

ModelOps

- Develop and train different models (Notebooks)

- Machine learning templates and automation (AutoML)

- Track parameters, metrics, and trials (MLflow)

- Centralize and consume models (Model Registry)
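
To make the tracking step more concrete, here is a minimal sketch of one training trial logged to MLflow. The toy least-squares model, the function names, and the run name are assumptions chosen to keep the example small; only `mlflow.start_run`, `mlflow.log_param`, and `mlflow.log_metric` come from MLflow itself.

```python
from typing import Dict, List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Closed-form least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def rmse(xs: List[float], ys: List[float], slope: float, intercept: float) -> float:
    """Root mean squared error of the fitted line on the given points."""
    squared = [(slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)]
    return (sum(squared) / len(squared)) ** 0.5


def run_trial(xs: List[float], ys: List[float]) -> Dict[str, float]:
    """Train the toy model and collect the values worth tracking."""
    slope, intercept = fit_line(xs, ys)
    return {
        "slope": slope,
        "intercept": intercept,
        "rmse": rmse(xs, ys, slope, intercept),
    }


def log_trial(result: Dict[str, float], run_name: str = "baseline-line-fit") -> None:
    """Record one trial as an MLflow run (requires the mlflow package)."""
    import mlflow  # imported lazily so the training code runs without MLflow

    with mlflow.start_run(run_name=run_name):
        mlflow.log_param("model_type", "least_squares_line")
        mlflow.log_metric("slope", result["slope"])
        mlflow.log_metric("intercept", result["intercept"])
        mlflow.log_metric("rmse", result["rmse"])
```

Keeping training (`run_trial`) separate from logging (`log_trial`) is a design choice for this sketch: the same result can be inspected locally or recorded as a run, and each call to `log_trial` shows up as one trial in the experiment.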

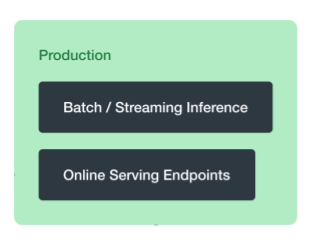

DevOps

- Govern access to different models (Unity Catalog)

- Continuous Integration and Continuous Deployment (CI/CD) for model versions (Model Registry)

- Deploy models for consumption (Serving Endpoints)
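
For the consumption step, the sketch below shows one way a client might call a model serving endpoint over REST. It assumes the endpoint is reachable at `/serving-endpoints/<name>/invocations` and accepts a `dataframe_records` JSON payload. The workspace URL, endpoint name, token, and feature names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict, List


def build_scoring_request(
    workspace_url: str,
    endpoint_name: str,
    token: str,
    records: List[Dict[str, Any]],
) -> urllib.request.Request:
    """Build a POST request that sends input rows to a serving endpoint."""
    url = f"{workspace_url.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    # Each record is one input row, keyed by feature name.
    body = json.dumps({"dataframe_records": records}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def score(request: urllib.request.Request) -> Dict[str, Any]:
    """Send the request and parse the JSON response from the endpoint."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

A call might look like `score(build_scoring_request("https://<workspace-host>", "churn-model", token, [{"tenure": 12}]))`. Access to the endpoint is governed by the permissions granted to the token's owner.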

Let's review!