RAG storage and retrieval using vector databases

Developing LLM Applications with LangChain

Jonathan Bennion

AI Engineer & LangChain Contributor

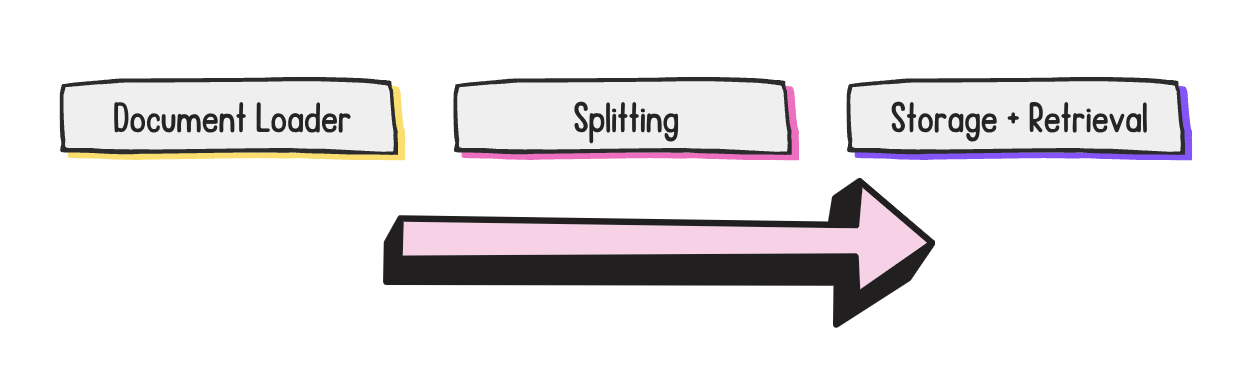

RAG development steps

- Focus of this video: storage and retrieval

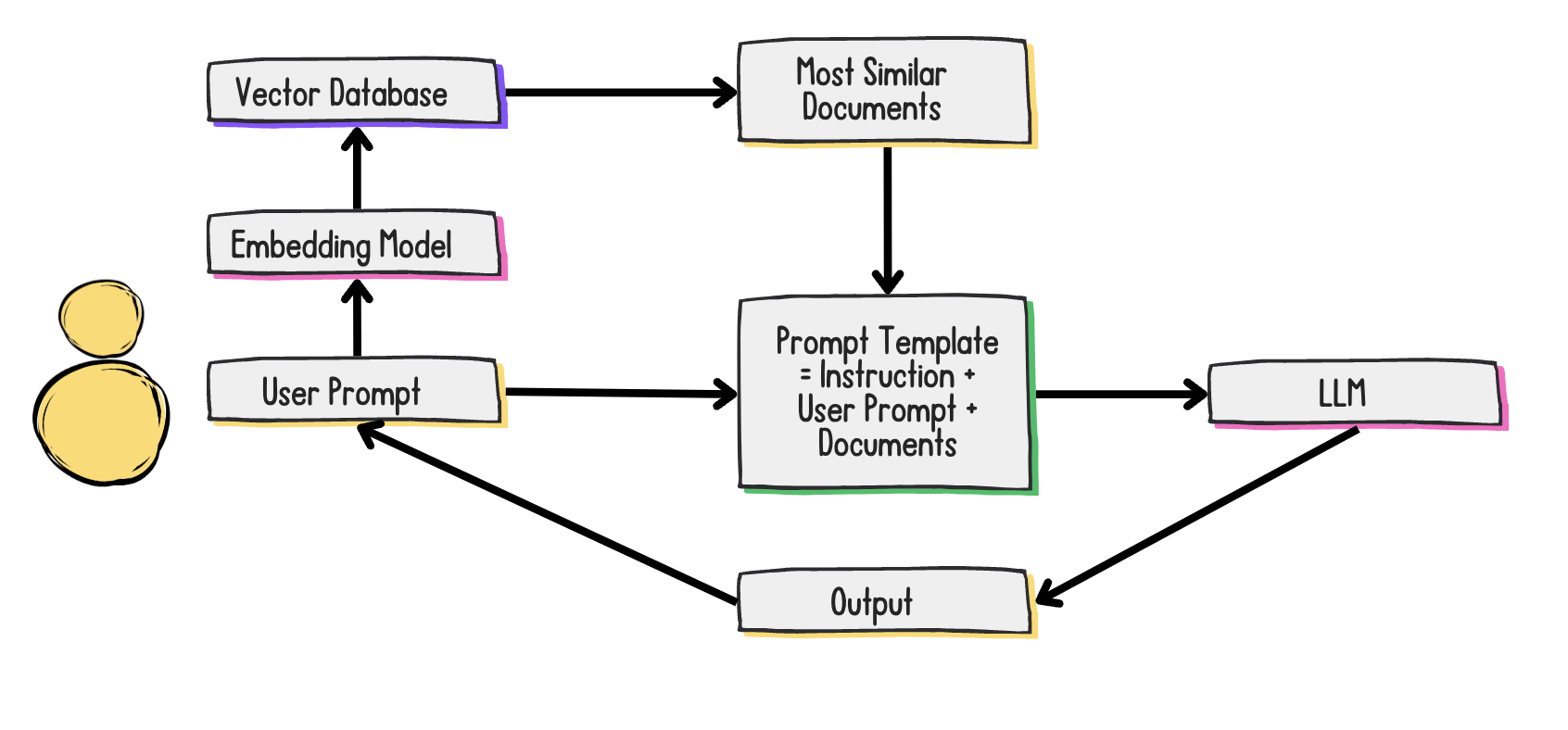

What is a vector database and why do I need it?

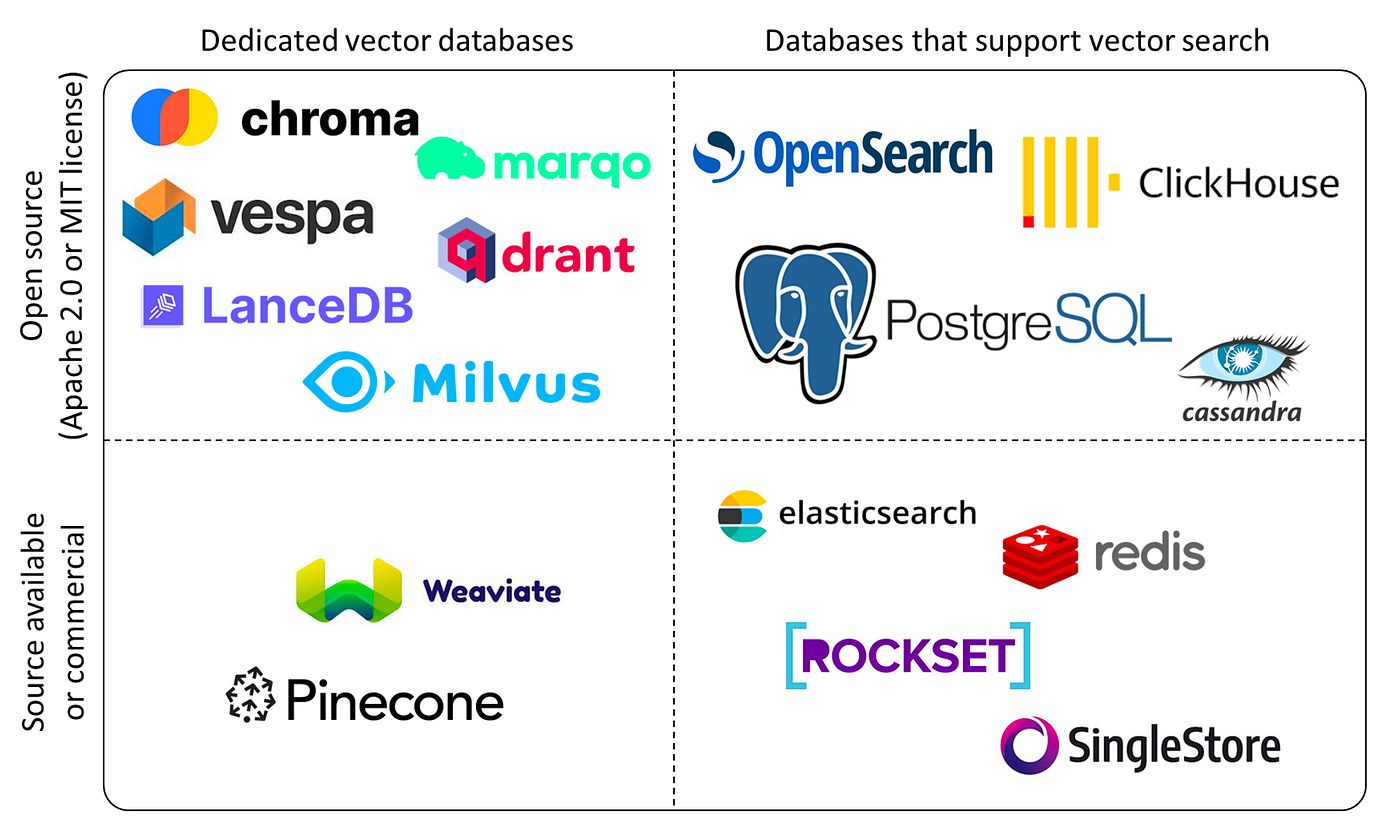

Which vector database should I use?

Need to consider:

- Open source vs. closed source (license)

- Cloud vs. on-premises

- Lightweight vs. powerful

Image credit: Yingjun Wu

Meet the documents...

docs

[
    Document(
        page_content="In all marketing copy, TechStack should always be written with the T and S capitalized. Incorrect: techstack, Techstack, etc.",
        metadata={"guideline": "brand-capitalization"}
    ),
    Document(
        page_content="Our users should be referred to as techies in both internal and external communications.",
        metadata={"guideline": "referring-to-users"}
    )
]

Setting up a Chroma vector database

from langchain_openai import OpenAIEmbeddings
from langchain_chroma import Chroma

# openai_api_key is assumed to be defined earlier (not shown on this slide)
embedding_function = OpenAIEmbeddings(api_key=openai_api_key, model='text-embedding-3-small')

# Embed the documents and persist the vectors to disk
vectorstore = Chroma.from_documents(
    docs,
    embedding=embedding_function,
    persist_directory="path/to/directory"
)

# Return the 2 documents most similar to each query
retriever = vectorstore.as_retriever(
    search_type="similarity",
    search_kwargs={"k": 2}
)
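
To make the retriever settings concrete, here is a minimal pure-Python sketch of what a similarity search with k=2 does conceptually: score every stored vector against the query vector and keep the top k. It is not how Chroma is implemented. The 3-dimensional vectors are made-up stand-ins for real embeddings, and `cosine_similarity`, `top_k`, and `toy_store` are hypothetical names used only for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vector, store, k=2):
    """Return the labels of the k stored vectors most similar to the query."""
    ranked = sorted(
        store,
        key=lambda item: cosine_similarity(query_vector, item[0]),
        reverse=True,
    )
    return [label for _, label in ranked[:k]]


# Toy "embeddings" (invented values, not output from any embedding model)
toy_store = [
    ([0.9, 0.1, 0.0], "brand-capitalization"),
    ([0.1, 0.9, 0.0], "referring-to-users"),
    ([0.0, 0.1, 0.9], "unrelated-guideline"),
]

query = [0.8, 0.3, 0.0]  # pretend this is the embedded user query
results = top_k(query, toy_store, k=2)
print(results)
```

A real vector database does the same job at scale, typically with approximate nearest-neighbor indexes rather than a full sort over every stored vector.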

Building a prompt template

from langchain_core.prompts import ChatPromptTemplate

message = """
Review and fix the following TechStack marketing copy with the following guidelines in consideration:

Guidelines:
{guidelines}

Copy:
{copy}

Fixed Copy:
"""

prompt_template = ChatPromptTemplate.from_messages([("human", message)])

Chaining it all together!

from langchain_core.runnables import RunnablePassthrough

# llm is assumed to be a chat model instantiated earlier (not shown on this slide)
rag_chain = ({"guidelines": retriever, "copy": RunnablePassthrough()}
             | prompt_template
             | llm)

response = rag_chain.invoke("Here at techstack, our users are the best in the world!")
print(response.content)

Here at TechStack, our techies are the best in the world!
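
The data flow through the chain can be sketched without LangChain. The dictionary at the start of the chain sends the same input string to both branches: the retriever turns it into guidelines, while `RunnablePassthrough()` forwards it unchanged as the copy. The sketch below is a plain-Python approximation under stated assumptions: `fake_retriever`, `format_guidelines`, and `build_prompt` are hypothetical stand-ins, the retriever returns hard-coded strings rather than `Document` objects, and joining the guidelines into a bulleted string is an illustrative choice rather than something the chain above does.

```python
TEMPLATE = """Review and fix the following TechStack marketing copy with the following guidelines in consideration:

Guidelines:
{guidelines}

Copy:
{copy}

Fixed Copy:
"""


def fake_retriever(query):
    """Hypothetical stand-in for the retriever: ignores the query and
    returns two hard-coded guideline texts."""
    return [
        "TechStack should always be written with the T and S capitalized.",
        "Our users should be referred to as techies.",
    ]


def format_guidelines(texts):
    """Join guideline texts into one bulleted string (an illustrative choice)."""
    return "\n".join(f"- {text}" for text in texts)


def build_prompt(user_copy):
    """Mimic the dict step: one input feeds both the retrieval branch
    and the passthrough branch, then fills the template."""
    inputs = {
        "guidelines": format_guidelines(fake_retriever(user_copy)),
        "copy": user_copy,  # passthrough: the input is forwarded unchanged
    }
    return TEMPLATE.format(**inputs)


prompt_text = build_prompt("Here at techstack, our users are the best in the world!")
print(prompt_text)
```

In the real chain, the filled prompt is then passed to the model, which produces the corrected copy.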

Let's practice!
