Model Registration with MLflow

Designing Forecasting Pipelines for Production

Rami Krispin

Senior Manager, Data Science and Engineering

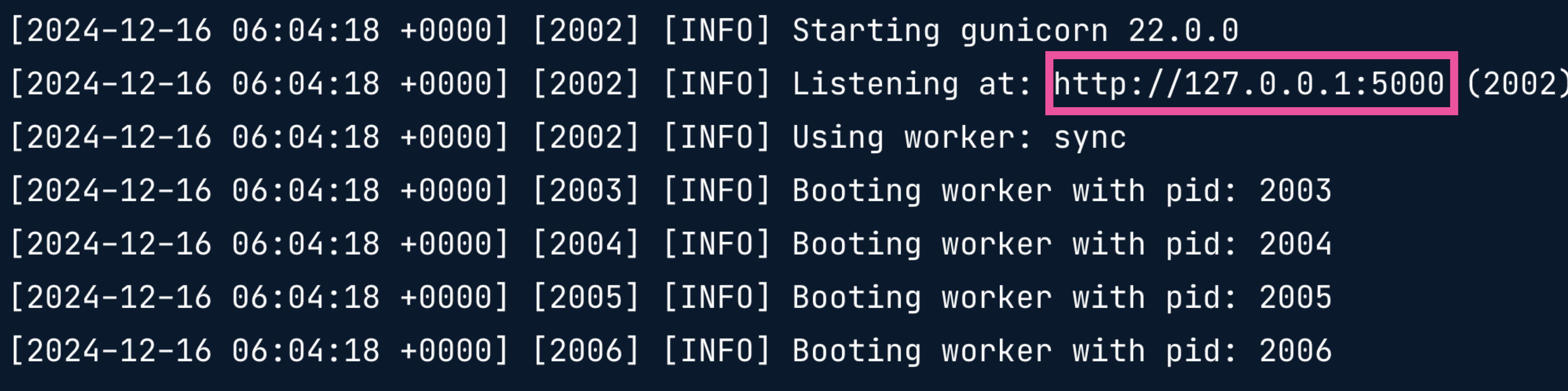

Launching the MLflow UI

mlflow ui

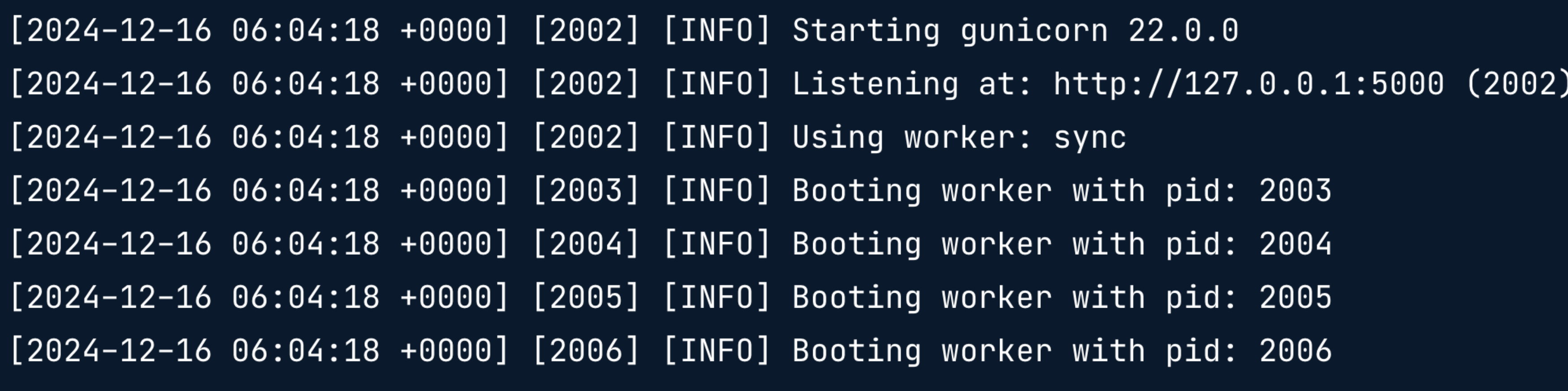

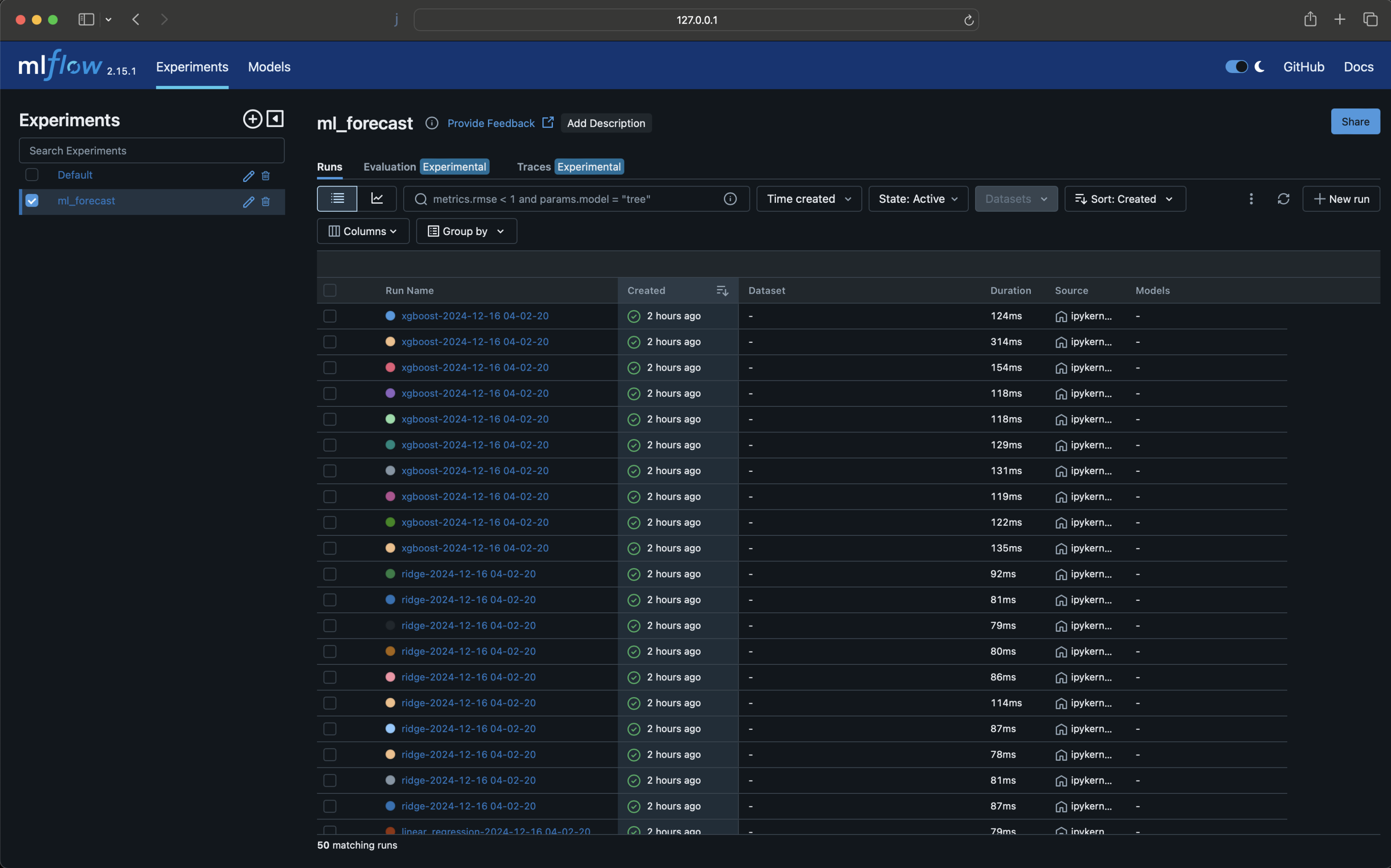

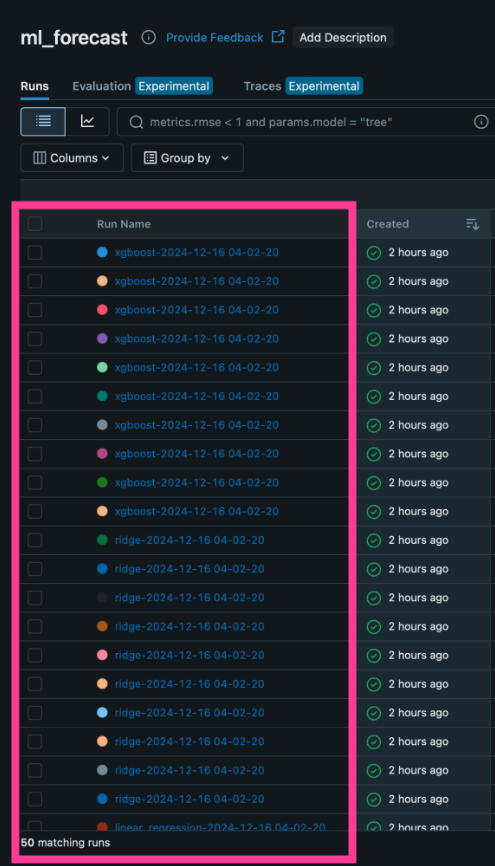

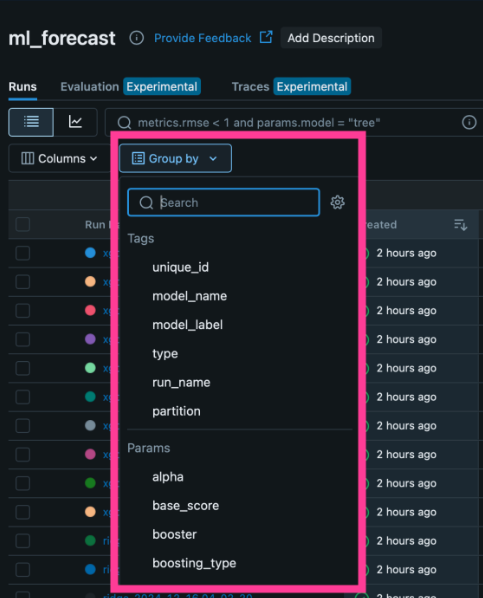

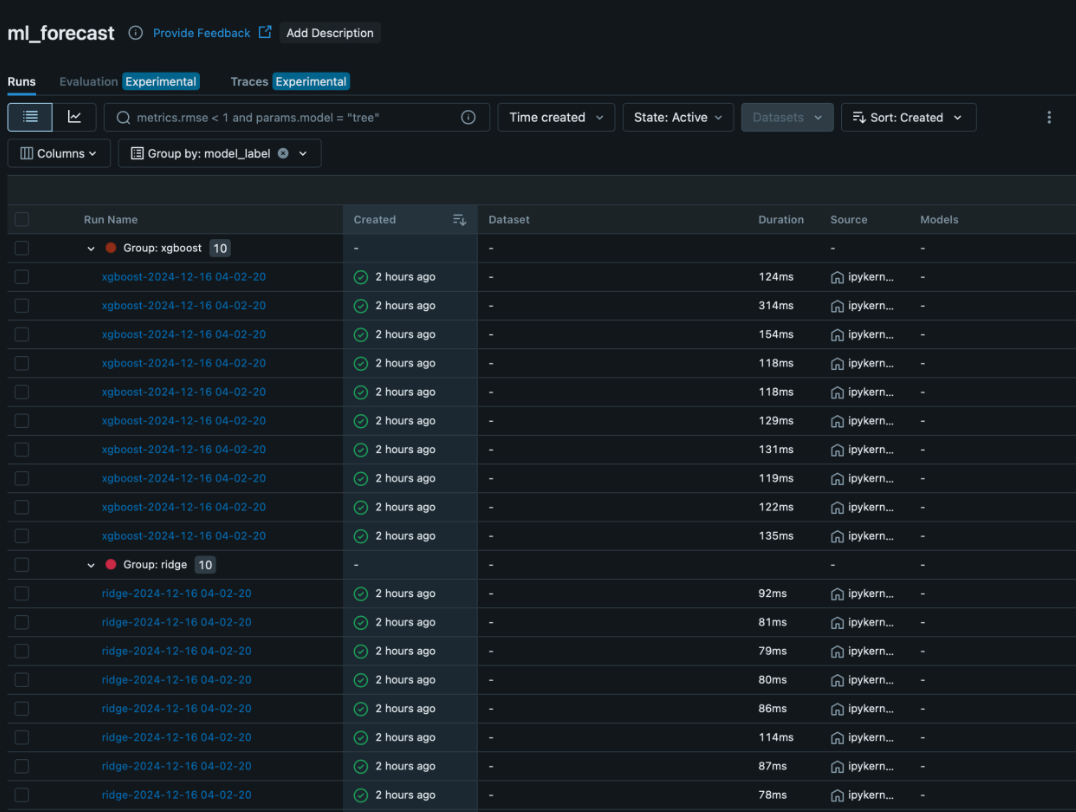

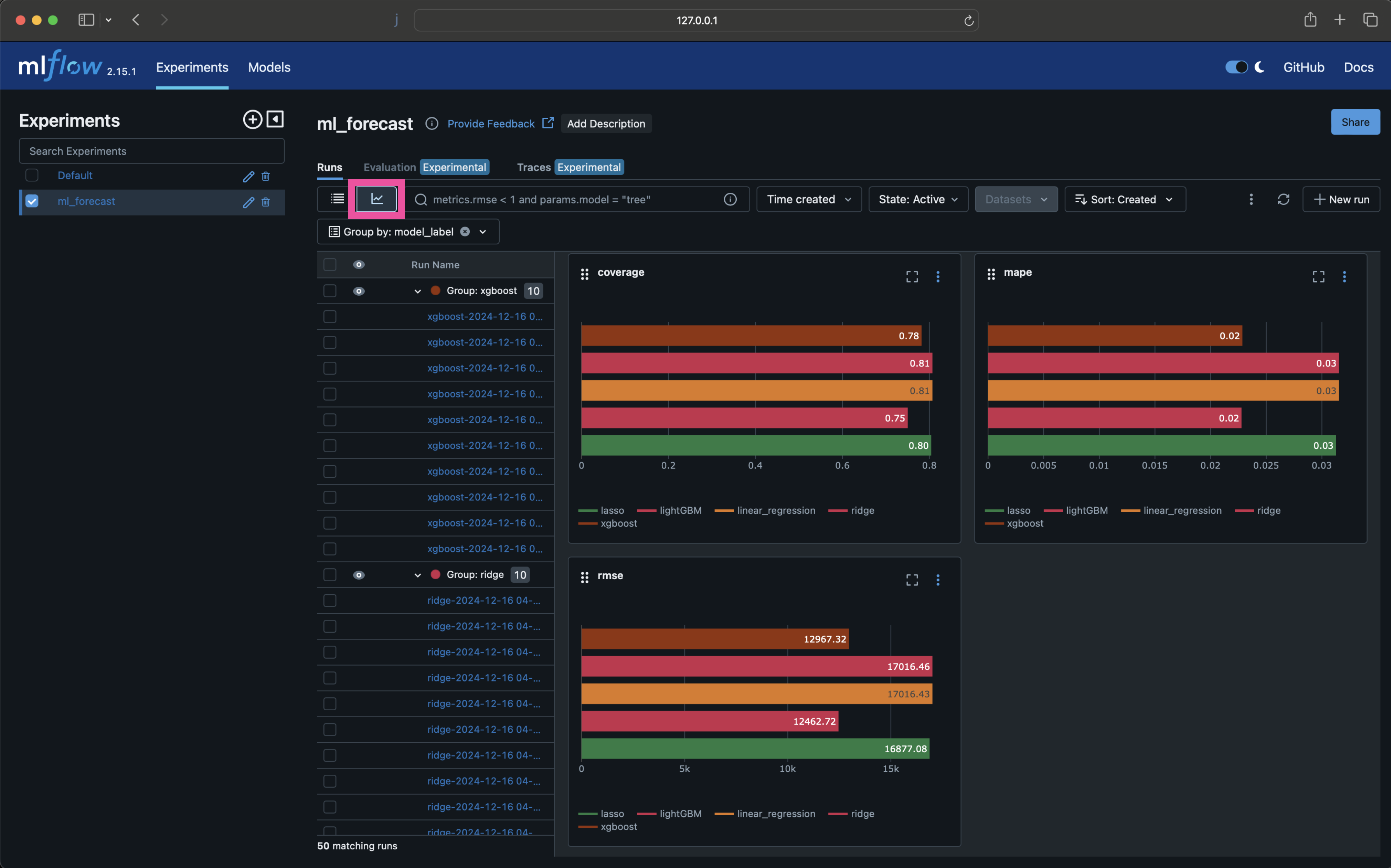

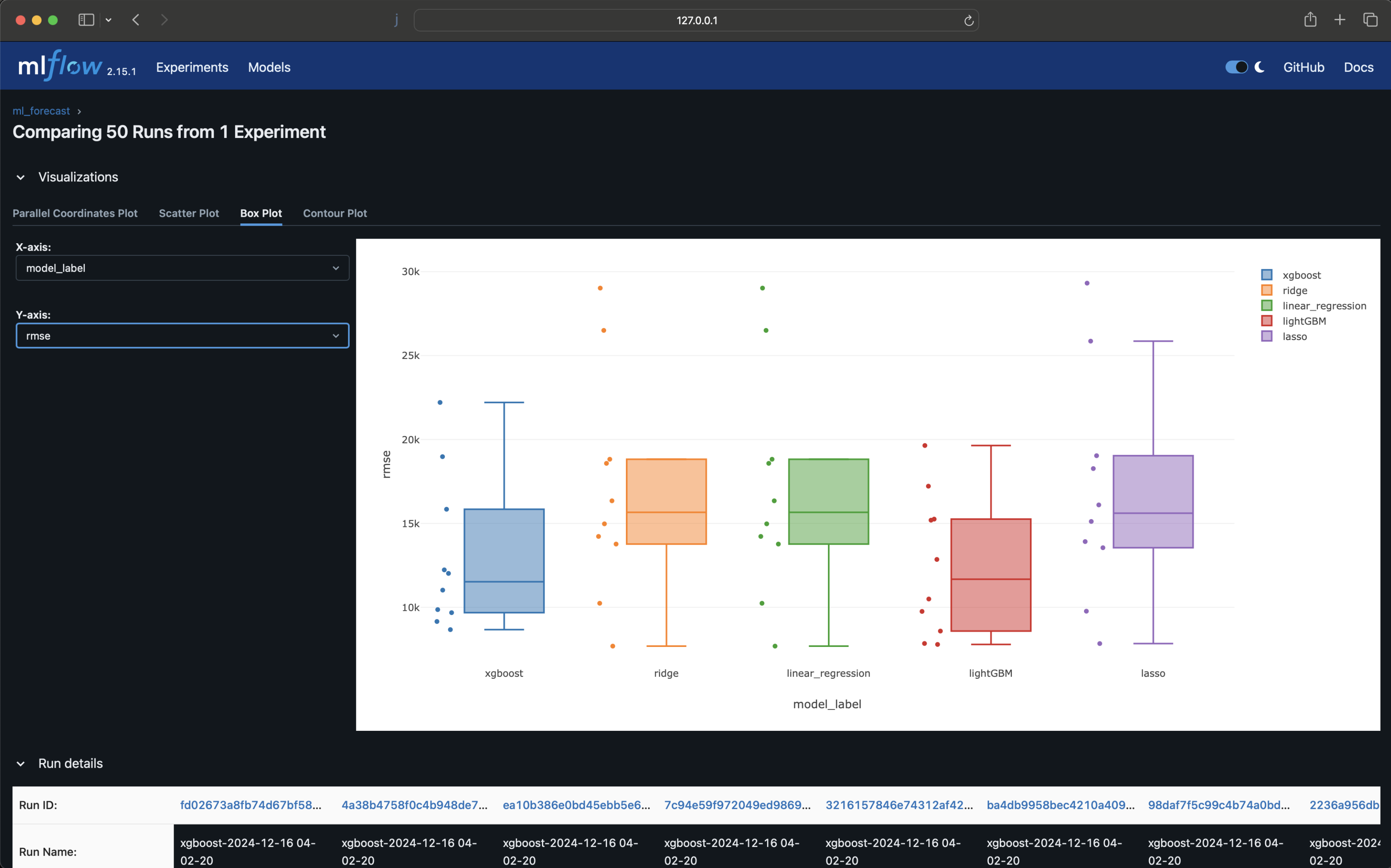

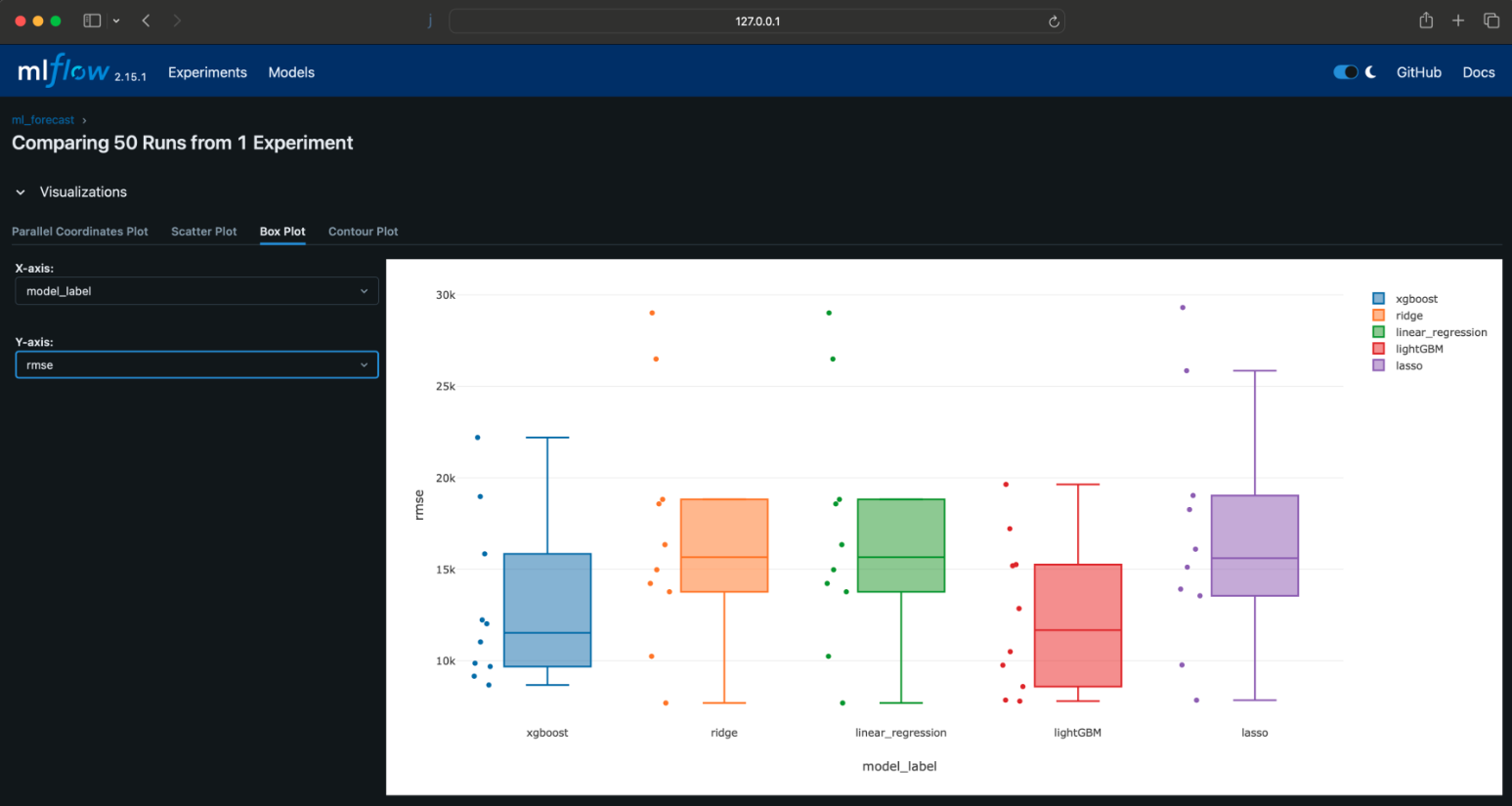

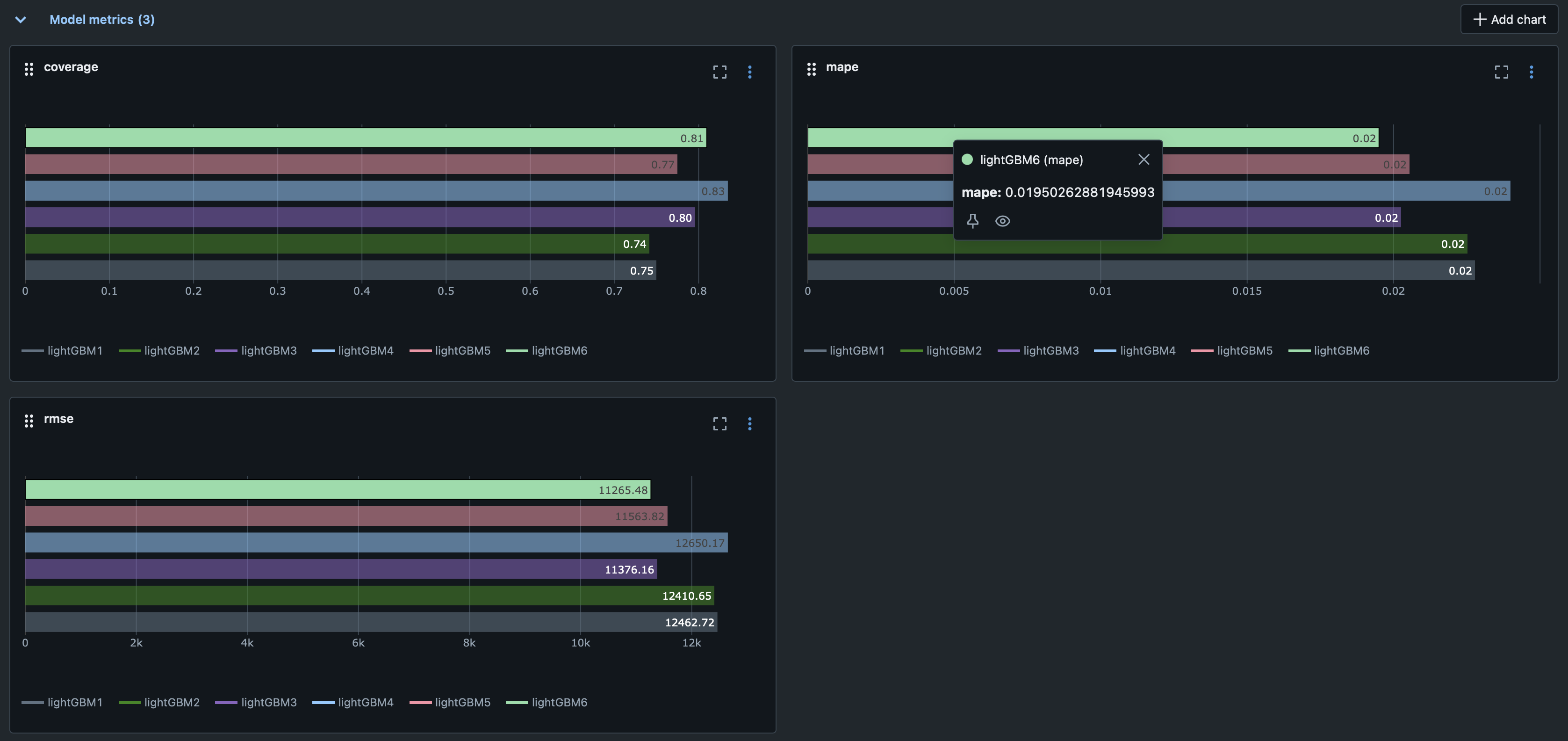

Analyze the backtesting results

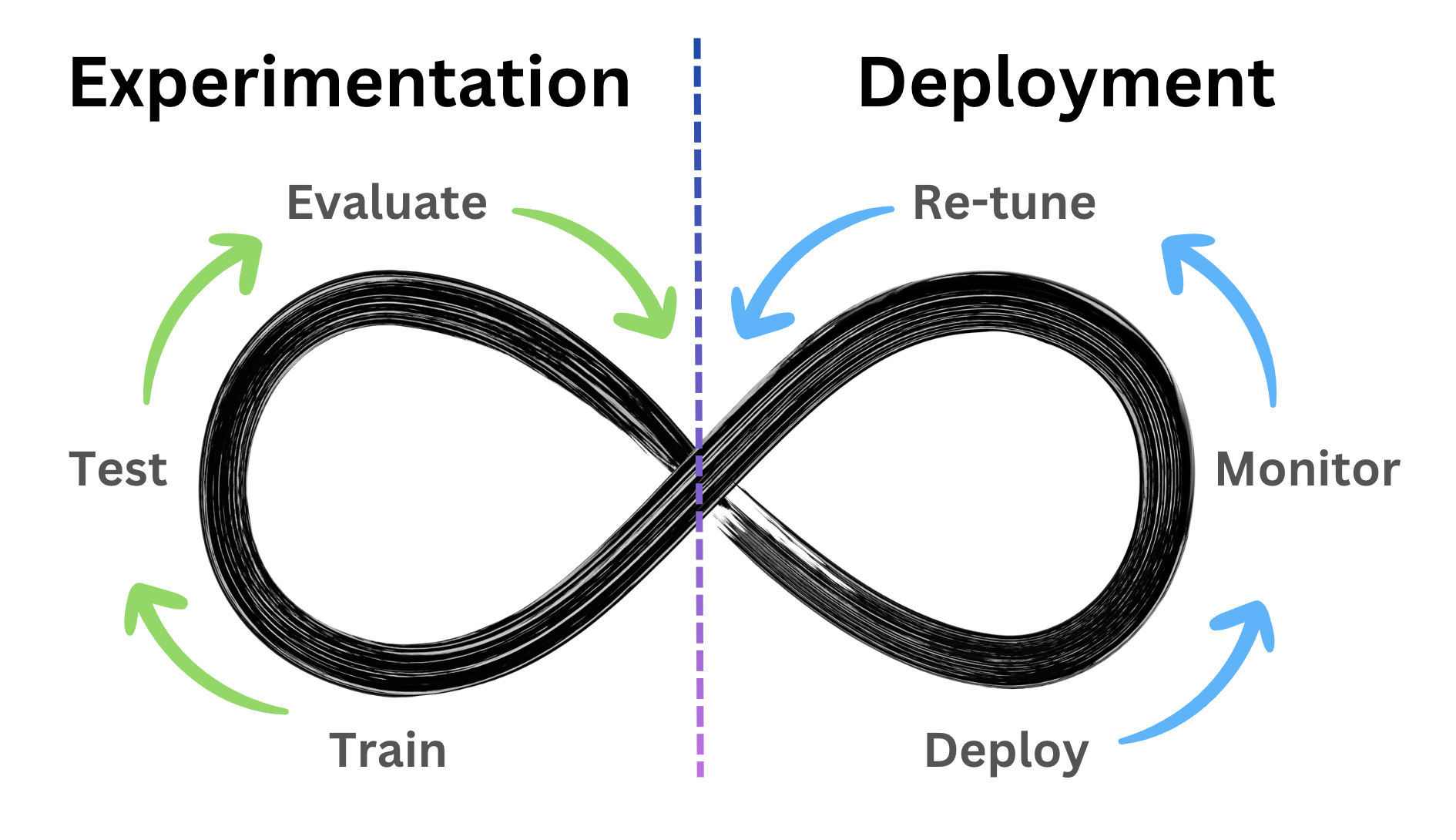
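One way to analyze the backtesting results is to average each model's error across the backtesting partitions and rank the models. The sketch below assumes the scores are available as a list of records. The field names (`model`, `partition`, `mape`), the model names, and the values are hypothetical placeholders, not results from this course.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical backtesting scores: one record per model and partition.
# Names and values are placeholders for illustration only.
scores = [
    {"model": "model_a", "partition": 1, "mape": 0.05},
    {"model": "model_a", "partition": 2, "mape": 0.07},
    {"model": "model_b", "partition": 1, "mape": 0.04},
    {"model": "model_b", "partition": 2, "mape": 0.06},
]


def summarize(records):
    """Return (model, mean MAPE) pairs sorted from lowest to highest error."""
    by_model = defaultdict(list)
    for row in records:
        by_model[row["model"]].append(row["mape"])
    summary = {name: mean(values) for name, values in by_model.items()}
    return sorted(summary.items(), key=lambda item: item[1])


leaderboard = summarize(scores)
for name, avg_mape in leaderboard:
    print(f"{name}: mean MAPE = {avg_mape:.3f}")
```

Averaging across partitions gives a single number per model, but it can hide instability, so it is worth also looking at the spread of the error between partitions.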

Can we improve the performance?

Model evaluation

- Benchmark

- Residuals analysis

- Backtesting analysis

Potential improvements

- Different models

- New features

- Tuning parameters
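The benchmark check listed under model evaluation can be sketched as a comparison between the model's error and the error of a simple baseline. The example below assumes MAPE as the error metric and a naive forecast (carrying the last training value forward) as the benchmark. Both choices, and all numbers, are illustrative assumptions.

```python
def mape(actual, forecast):
    """Mean absolute percentage error; assumes `actual` contains no zeros."""
    errors = [abs(a - f) / abs(a) for a, f in zip(actual, forecast)]
    return sum(errors) / len(errors)


# Hypothetical values for illustration only.
actual = [100.0, 110.0, 120.0, 130.0]
model_forecast = [98.0, 112.0, 118.0, 133.0]

# Naive benchmark: repeat the last observed training value over the horizon.
last_train_value = 95.0
naive_forecast = [last_train_value] * len(actual)

model_mape = mape(actual, model_forecast)
naive_mape = mape(actual, naive_forecast)
beats_benchmark = model_mape < naive_mape

print(f"model MAPE: {model_mape:.3f}, benchmark MAPE: {naive_mape:.3f}")
print("model beats benchmark:", beats_benchmark)
```

A model that cannot beat a baseline like this is a candidate for the improvements listed above before any further tuning effort is spent on it.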

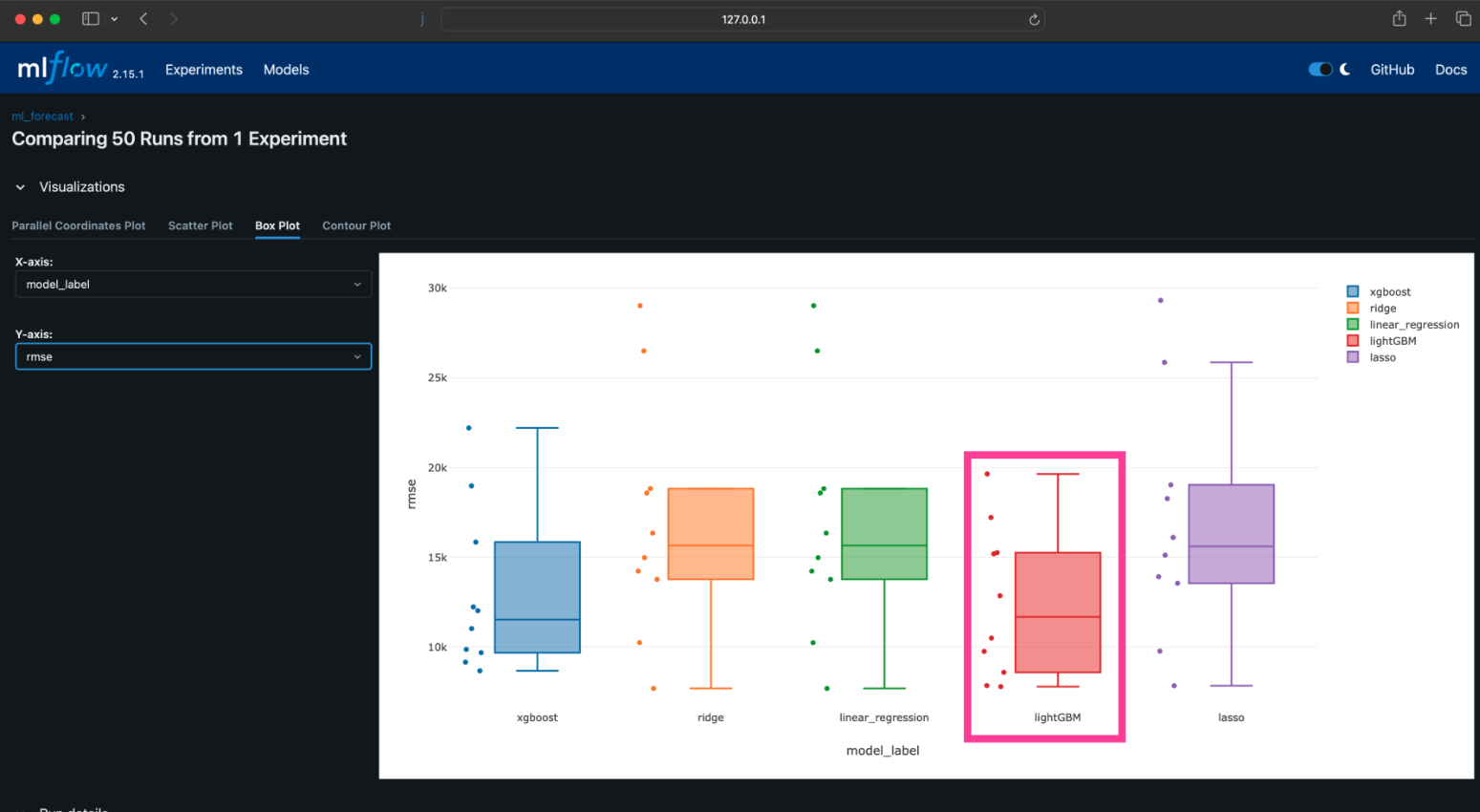

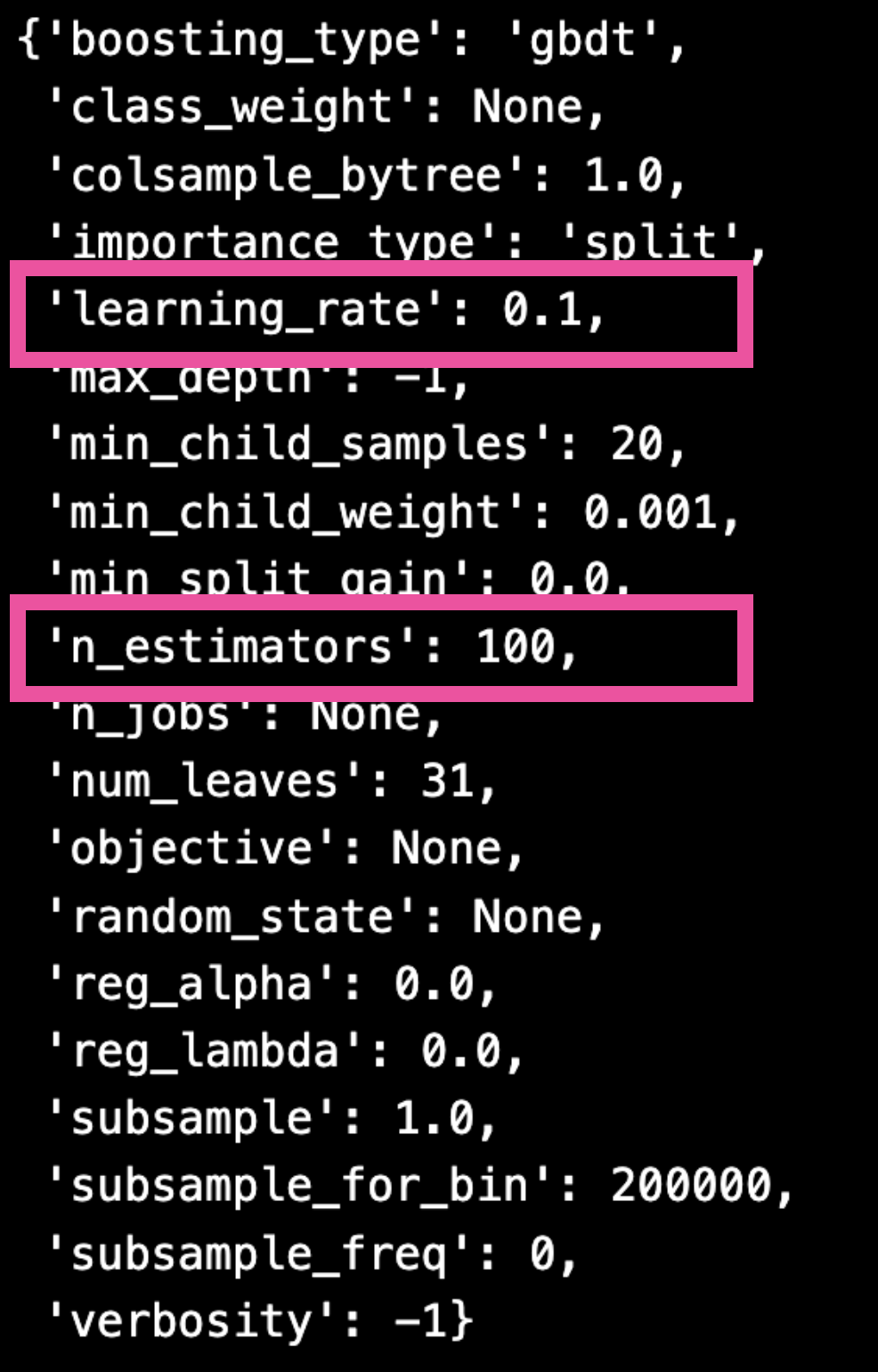

Tuning parameters

Hypothesis

- Using lower learning rate

- Training with more trees

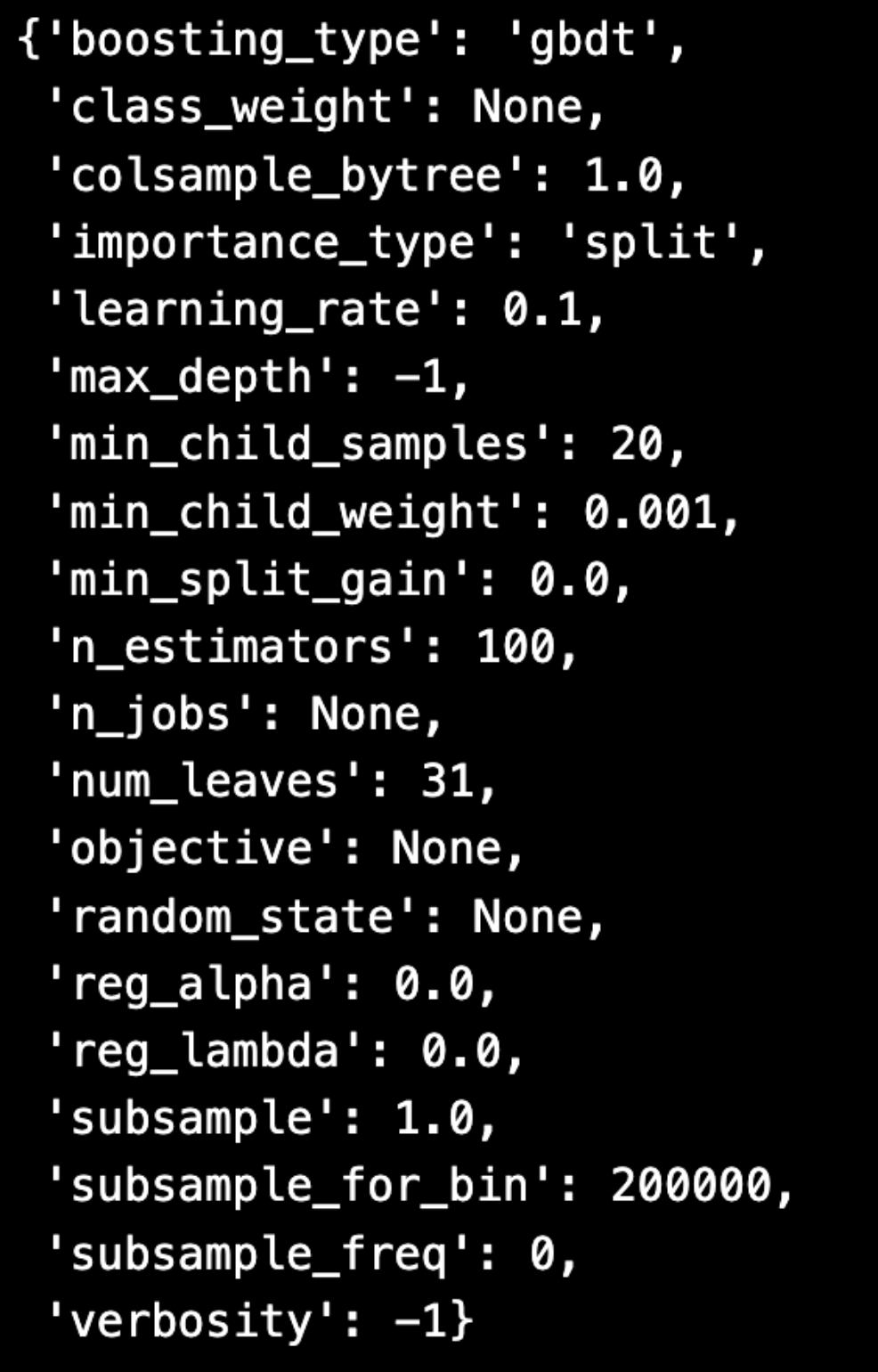

from lightgbm import LGBMRegressor

# Candidate models: three tree counts at each of two learning rates
ml_models2 = {
    "lightGBM1": LGBMRegressor(n_estimators=100, learning_rate=0.1),
    "lightGBM2": LGBMRegressor(n_estimators=250, learning_rate=0.1),
    "lightGBM3": LGBMRegressor(n_estimators=500, learning_rate=0.1),
    "lightGBM4": LGBMRegressor(n_estimators=100, learning_rate=0.05),
    "lightGBM5": LGBMRegressor(n_estimators=250, learning_rate=0.05),
    "lightGBM6": LGBMRegressor(n_estimators=500, learning_rate=0.05),
}
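The six configurations above are the full combination of three tree counts and two learning rates, so the same grid could also be generated rather than typed out. The sketch below builds only the parameter dictionaries with the standard library. The names `n_estimators_grid`, `learning_rate_grid`, and `param_grid` are hypothetical, and the final commented lines assume `lightgbm` is installed.

```python
from itertools import product

n_estimators_grid = [100, 250, 500]
learning_rate_grid = [0.1, 0.05]

# Learning rate is the outer factor so the order matches lightGBM1..lightGBM6.
param_grid = {
    f"lightGBM{i}": {"n_estimators": n, "learning_rate": lr}
    for i, (lr, n) in enumerate(
        product(learning_rate_grid, n_estimators_grid), start=1
    )
}

for name, params in param_grid.items():
    print(name, params)

# With lightgbm installed, the models could then be created as:
# from lightgbm import LGBMRegressor
# ml_models2 = {name: LGBMRegressor(**p) for name, p in param_grid.items()}
```

Generating the grid keeps the hypothesis (lower learning rate, more trees) easy to extend: adding a value to either list adds the matching experiments without editing each entry by hand.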

Analyzing the results

Experimentation constraints

Let's practice!
