Monte Carlo methods

Reinforcement Learning with Gymnasium in Python

Fouad Trad

Machine Learning Engineer

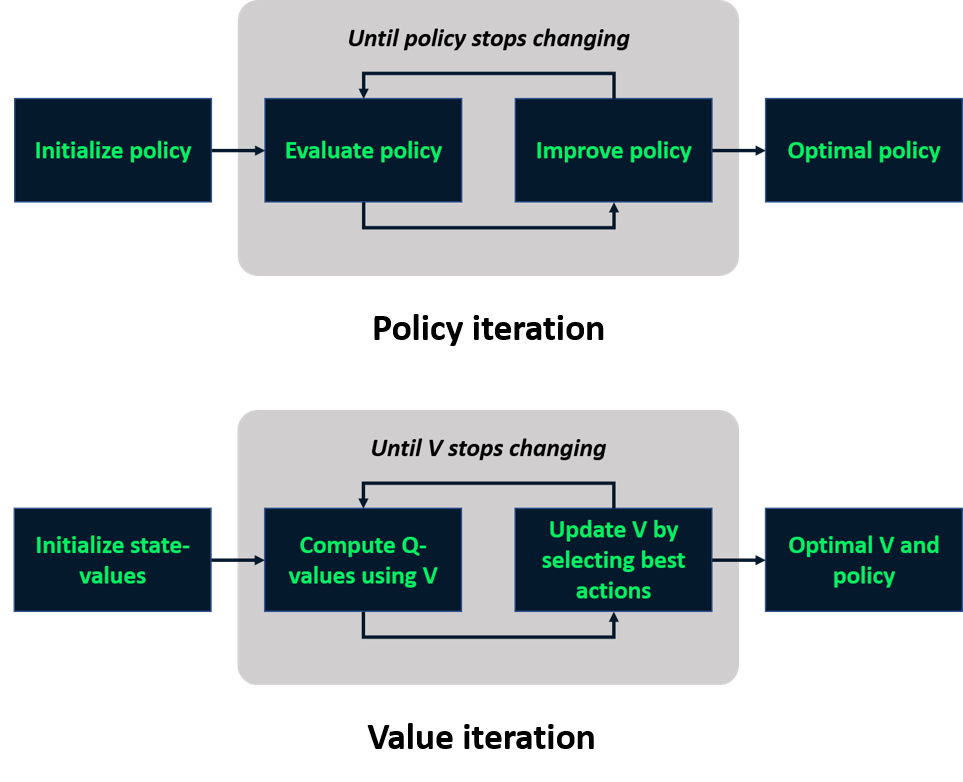

Recap: model-based learning

- Relies on knowledge of the environment dynamics (transition probabilities and rewards)

- No interaction with the environment

Model-free learning

- Doesn't rely on knowledge of the environment dynamics

- Agent interacts with the environment

- Learns a policy through trial and error

- More suitable for real-world applications, where the dynamics are rarely known in advance

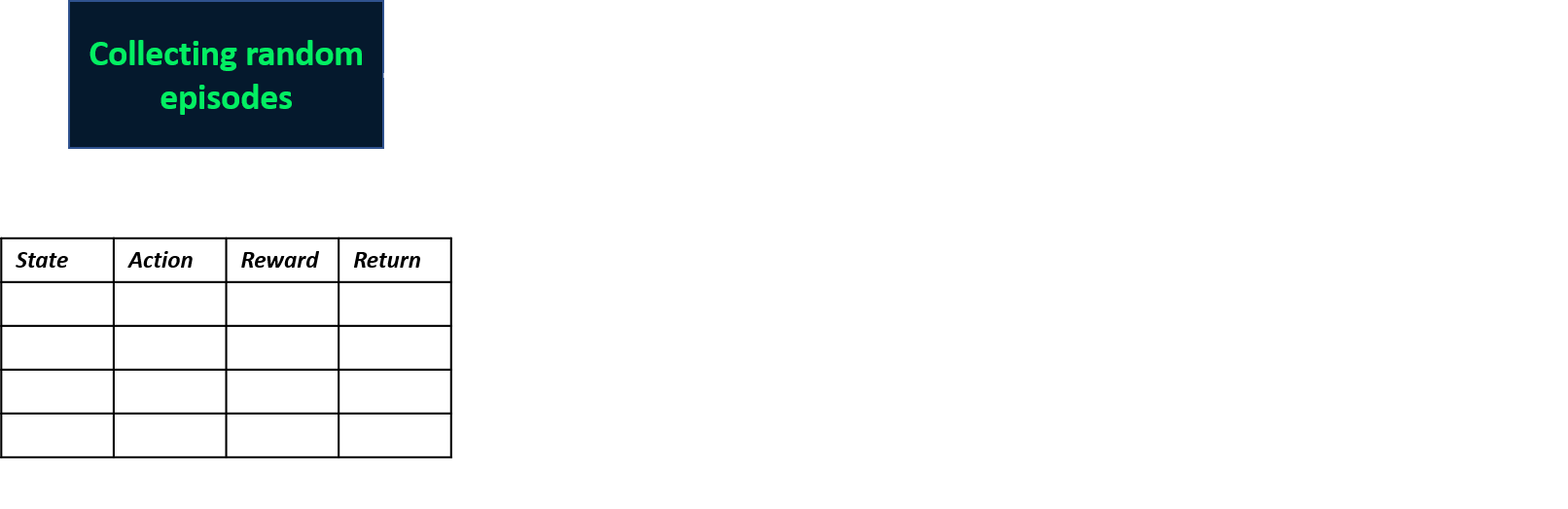

Monte Carlo methods

- Model-free techniques

- Estimate Q-values by averaging the returns observed in complete episodes

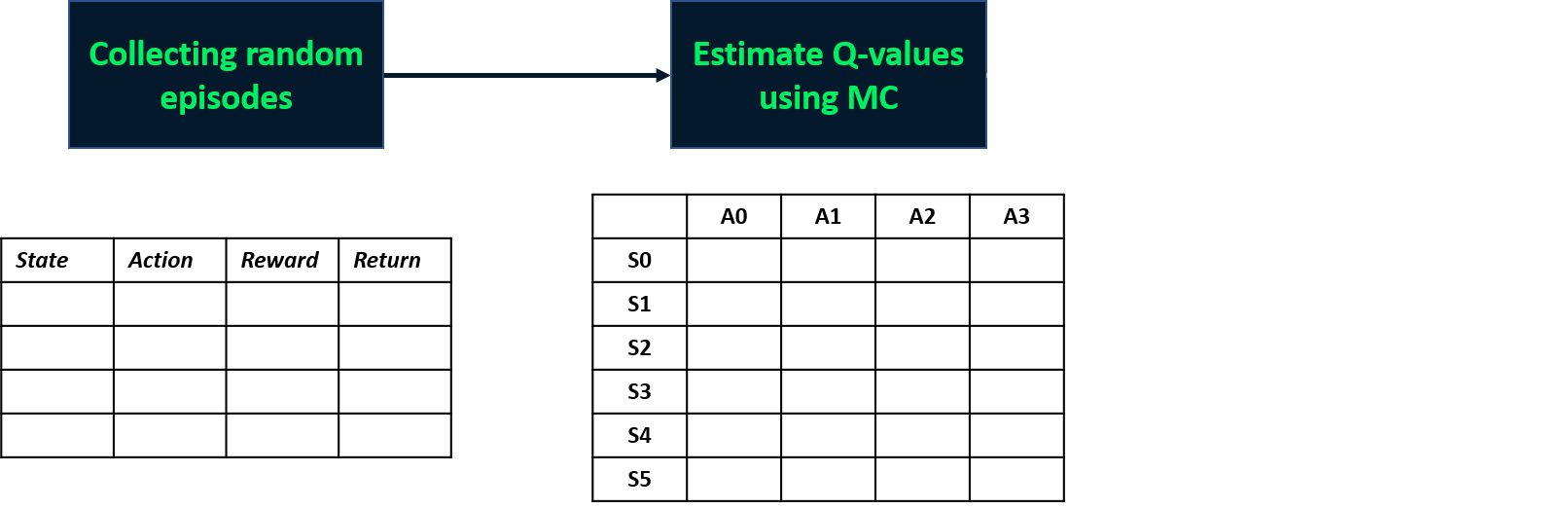

Monte Carlo methods

- Model-free techniques

- Estimate Q-values based on episodes

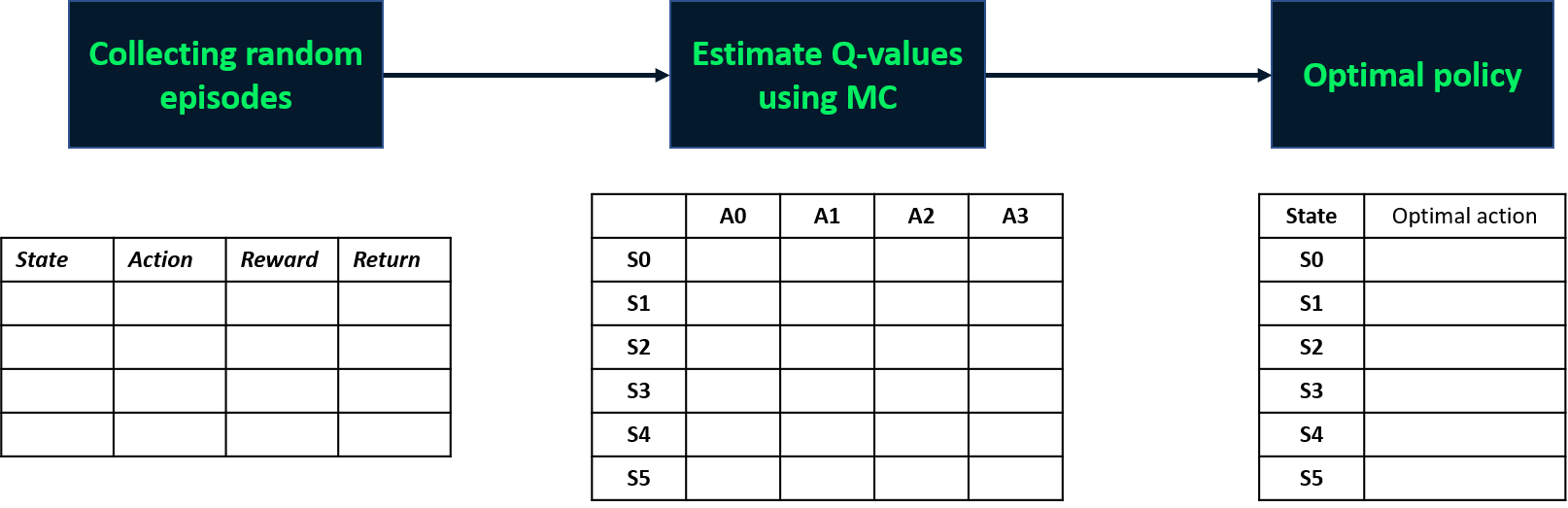

Monte Carlo methods

- Model-free techniques

- Estimate Q-values based on episodes

- Two methods: first-visit, every-visit

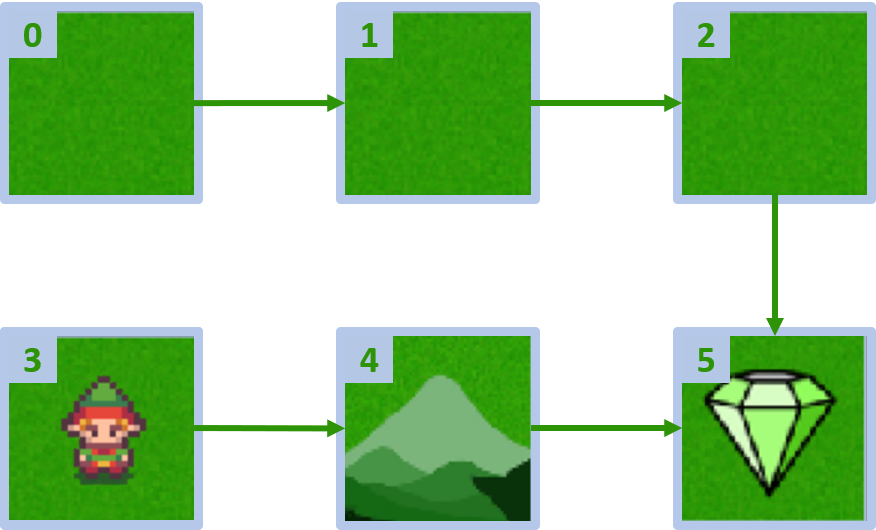
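In symbols, using the undiscounted returns that the code in this section computes (an episode of T steps, with r_k the reward stored at step k), the return following step t and the Monte Carlo estimate of a Q-value are:

```latex
G_t = \sum_{k=t}^{T-1} r_k, \qquad
Q(s, a) \approx \frac{1}{N(s, a)} \sum_{i=1}^{N(s, a)} G_i(s, a)
```

Here N(s, a) counts the visits to (s, a) that are averaged (only the first visit in each episode for first-visit Monte Carlo, every visit for every-visit Monte Carlo), and G_i(s, a) is the return that followed the i-th such visit.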

Custom grid world

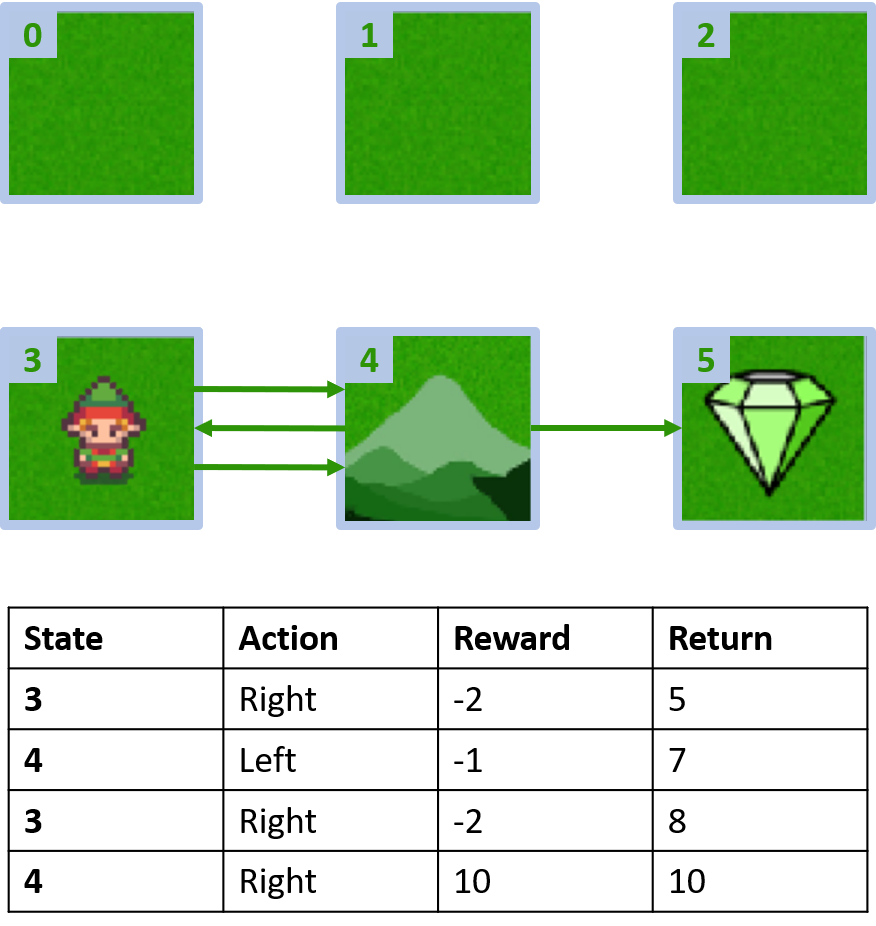

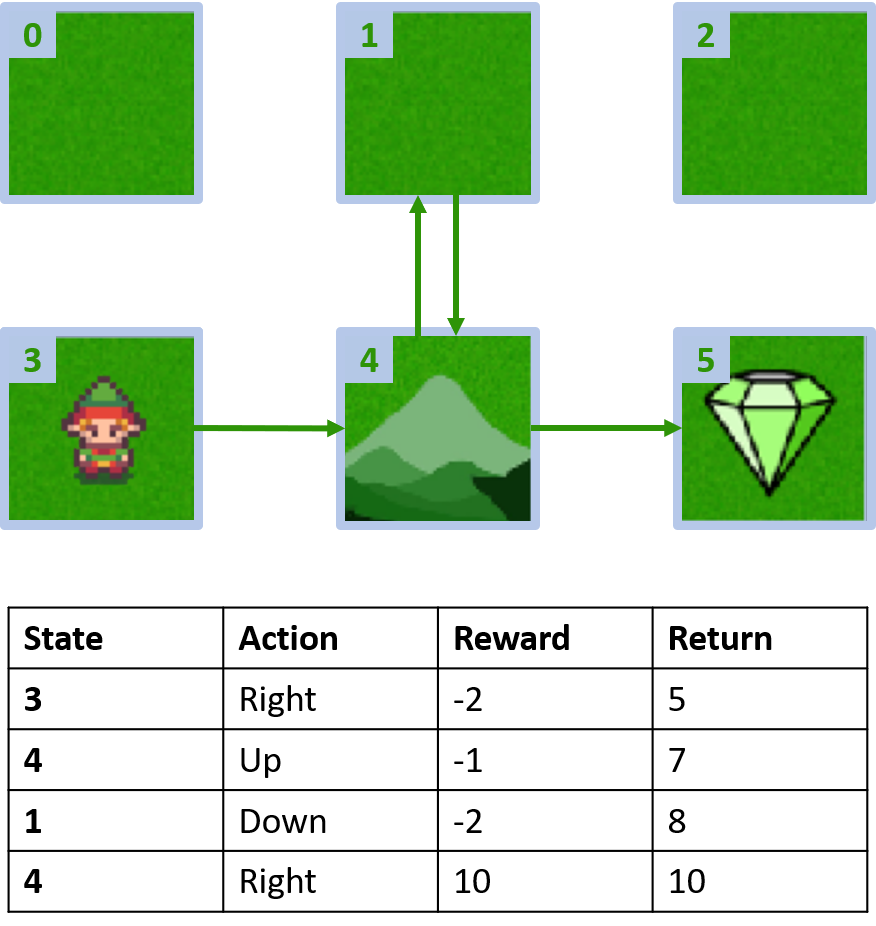

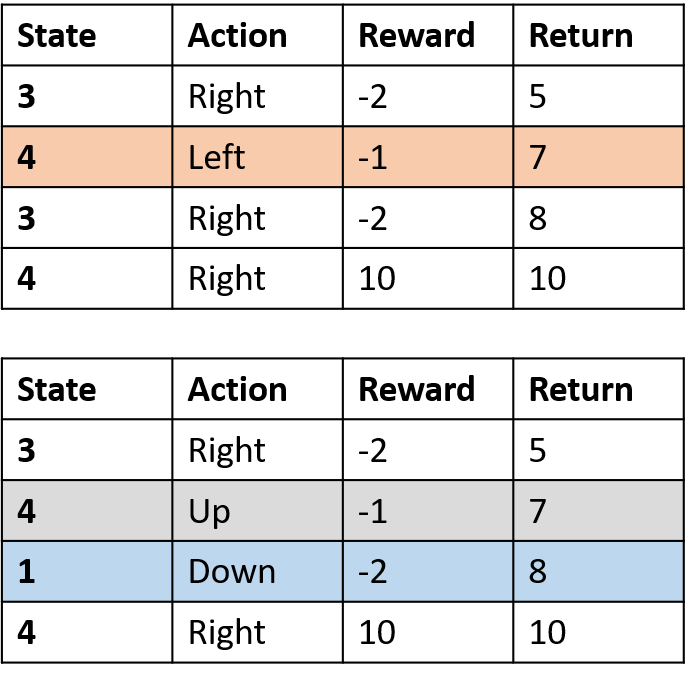

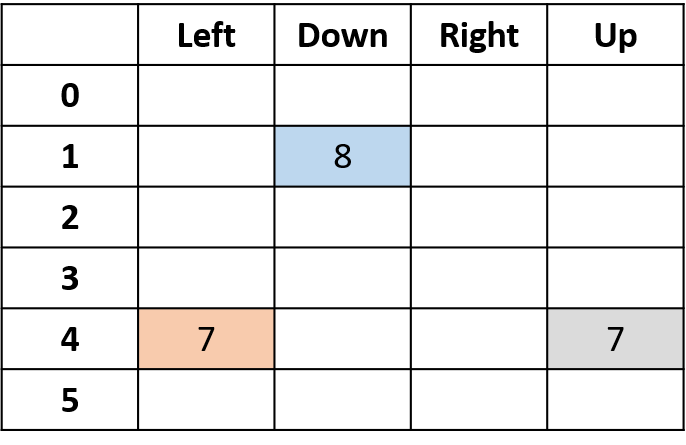

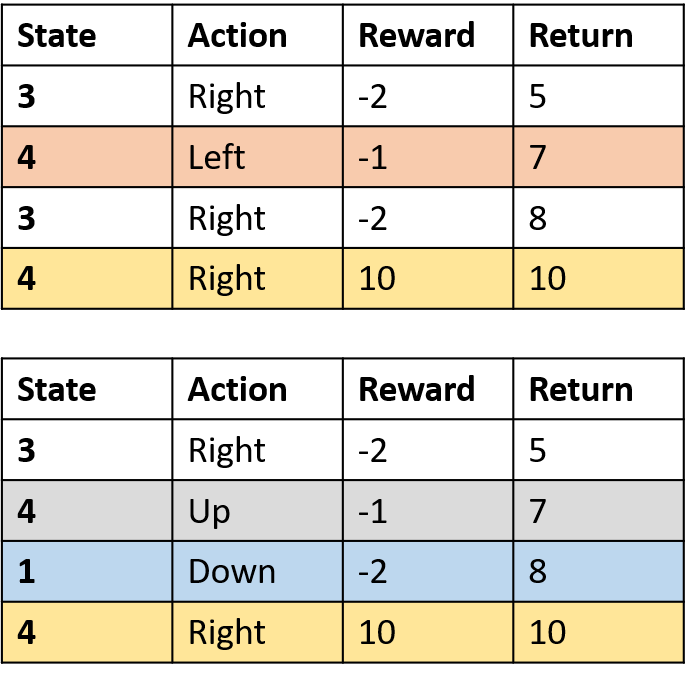

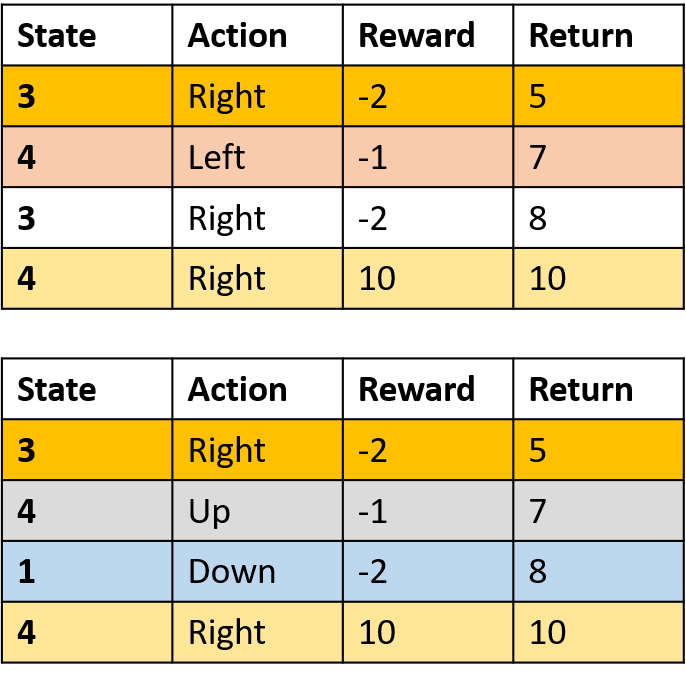

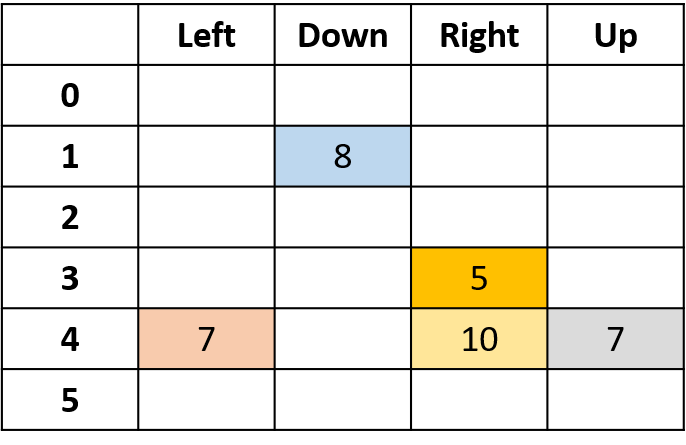

Collecting two episodes

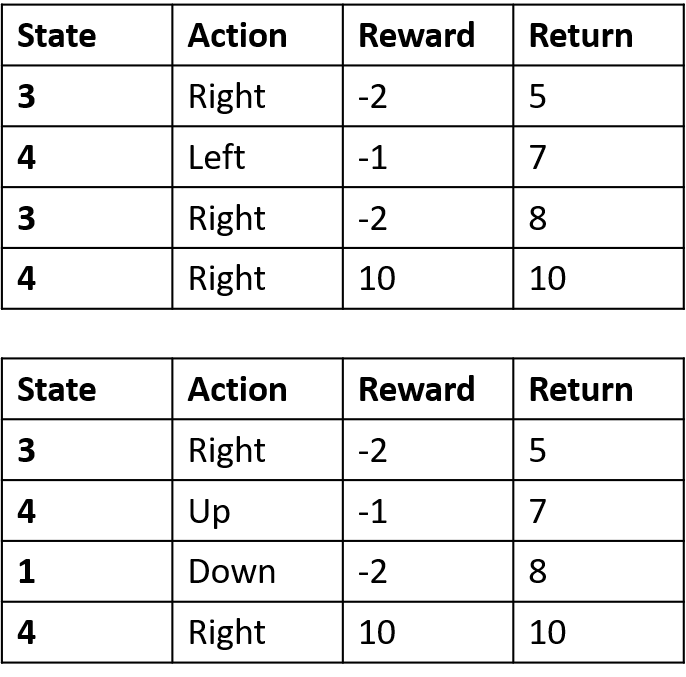

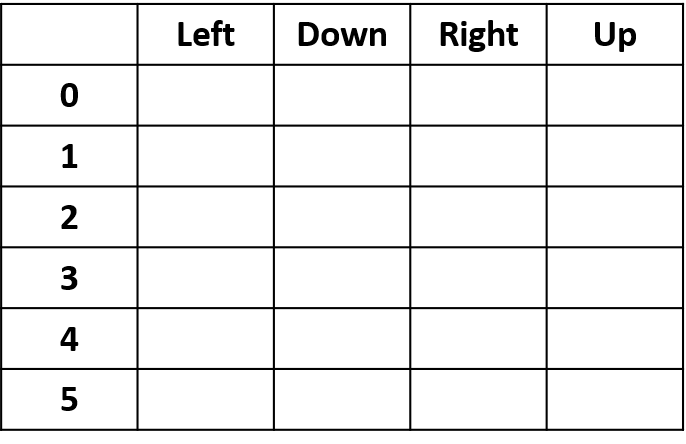

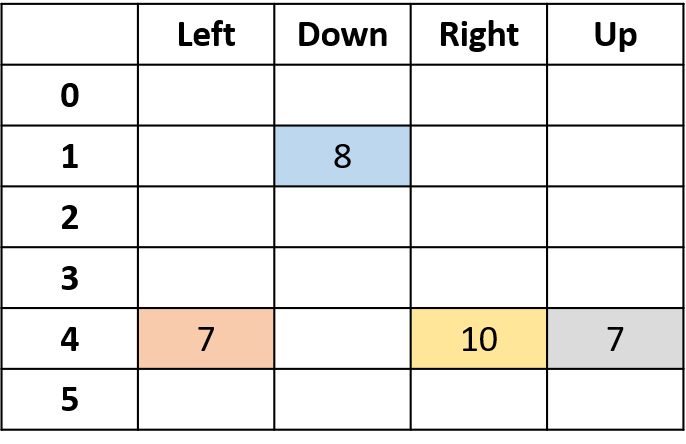

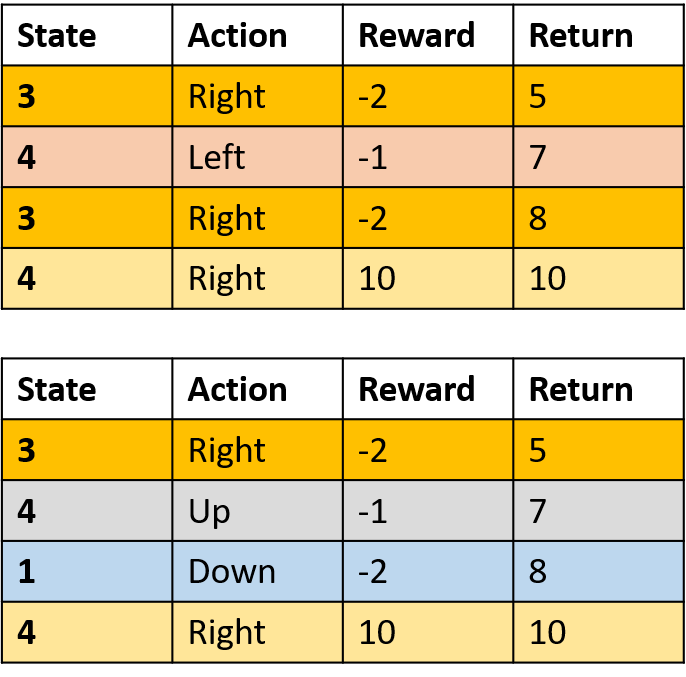

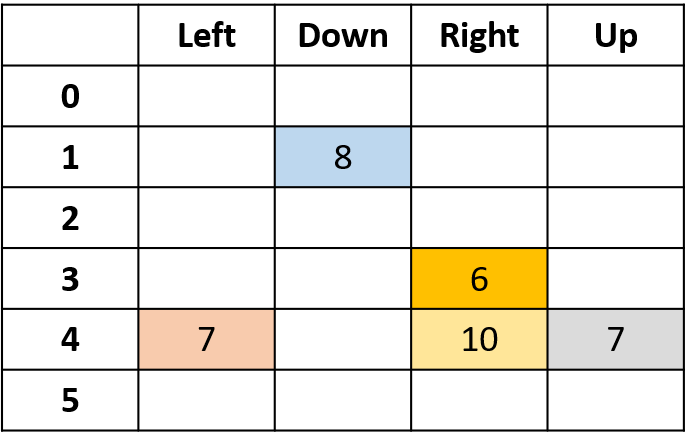

Estimating Q-values

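- Q-table: a table holding the Q-value of every (state, action) pair

Q(4, left), Q(4, up), and Q(1, down)

- (s, a) appears only once across the collected episodes -> fill in the return that followed it

Q(4, right)

- (s, a) occurs once in each episode -> average the two returns

Q(3, right) - first-visit Monte Carlo

- Average only the returns following the first visit to (s, a) in each episode

Q(3, right) - every-visit Monte Carlo

- Average the returns following every visit to (s, a) in each episode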
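To make the first-visit/every-visit difference concrete, here is a minimal sketch on two hypothetical episodes (not the ones in the figures), stored as (state, action, reward) tuples and, like the code in this section, without discounting. The action numbers (0 = left, 2 = right) are an assumption for illustration only.

```python
from collections import defaultdict

def mc_estimates(episodes, first_visit=True):
    """Average the undiscounted returns observed for each (state, action) pair."""
    sums, counts = defaultdict(float), defaultdict(int)
    for episode in episodes:
        seen = set()
        for t, (state, action, _) in enumerate(episode):
            if first_visit and (state, action) in seen:
                continue  # first-visit: ignore repeats within the same episode
            seen.add((state, action))
            g = sum(r for _, _, r in episode[t:])  # return following step t
            sums[(state, action)] += g
            counts[(state, action)] += 1
    return {sa: sums[sa] / counts[sa] for sa in sums}

# Hypothetical episodes of (state, action, reward); action codes are assumed
ep1 = [(3, 2, -1), (4, 0, -1), (3, 2, -1), (4, 2, 10)]  # visits (3, right) twice
ep2 = [(3, 2, -1), (4, 2, 10)]

first = mc_estimates([ep1, ep2], first_visit=True)
every = mc_estimates([ep1, ep2], first_visit=False)
print(first[(3, 2)])            # 8.0   = (7 + 9) / 2
print(round(every[(3, 2)], 3))  # 8.333 = (7 + 9 + 9) / 3
```

Pairs that occur at most once per episode, such as (4, right) here, get the same estimate under both methods; the two only diverge when a pair repeats inside an episode.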

Generating an episode

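```python
def generate_episode():
    # Roll out one episode with a uniformly random policy;
    # `env` is the custom grid world environment created earlier
    episode = []
    state, info = env.reset()
    terminated = truncated = False
    # Stop when the episode ends, whether by termination or truncation
    while not (terminated or truncated):
        action = env.action_space.sample()
        next_state, reward, terminated, truncated, info = env.step(action)
        # Record the state the action was taken in, the action, and the reward received
        episode.append((state, action, reward))
        state = next_state
    return episode
```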
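The loop above stops only when the episode ends. Below is a sketch of a more defensive variant that relies only on the reset()/step() interface used above: it takes the environment as an argument and also stops after a hypothetical `max_steps` limit, so a random policy cannot run indefinitely in an environment that never truncates. `CorridorEnv` is a made-up four-state corridor that exists only to make the example runnable; it is not the course's grid world.

```python
import random

class _TwoActions:
    """Hypothetical stand-in for a discrete action space: 0 = left, 1 = right."""
    n = 2

    def sample(self):
        return random.randrange(self.n)

class CorridorEnv:
    """Hypothetical corridor: states 0..3, start at 0, reaching 3 ends the episode."""

    def __init__(self):
        self.action_space = _TwoActions()
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state, {}

    def step(self, action):
        # Move one cell left or right, clipped to the corridor ends
        self.state = max(0, min(3, self.state + (1 if action == 1 else -1)))
        terminated = self.state == 3
        return self.state, (1 if terminated else 0), terminated, False, {}

def generate_episode(env, max_steps=100):
    """Random-policy rollout that also stops after max_steps (a manual cap)."""
    episode = []
    state, info = env.reset()
    for _ in range(max_steps):
        action = env.action_space.sample()
        next_state, reward, terminated, truncated, info = env.step(action)
        episode.append((state, action, reward))
        state = next_state
        if terminated or truncated:
            break
    return episode

random.seed(0)
episode = generate_episode(CorridorEnv())
print(len(episode), episode[-1])
```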

First-visit Monte Carlo

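```python
import numpy as np

def first_visit_mc(num_episodes):
    # num_states and num_actions come from the environment
    # (e.g. env.observation_space.n and env.action_space.n)
    Q = np.zeros((num_states, num_actions))
    returns_sum = np.zeros((num_states, num_actions))
    returns_count = np.zeros((num_states, num_actions))
    for i in range(num_episodes):
        episode = generate_episode()
        visited_states_actions = set()
        for j, (state, action, reward) in enumerate(episode):
            # Only the first occurrence of (state, action) in this episode counts
            if (state, action) not in visited_states_actions:
                # Return from step j: sum of the rewards until the episode ends
                returns_sum[state, action] += sum([x[2] for x in episode[j:]])
                returns_count[state, action] += 1
                visited_states_actions.add((state, action))
    # Average the returns, leaving never-visited pairs at 0
    nonzero_counts = returns_count != 0
    Q[nonzero_counts] = returns_sum[nonzero_counts] / returns_count[nonzero_counts]
    return Q
```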
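first_visit_mc keeps separate sum and count tables and divides them at the end. An equivalent formulation, shown here as an alternative rather than the course's code, updates a running mean after each first visit, Q <- Q + (G - Q) / N, and computes returns in a single backward pass. The episodes are hypothetical, and the function takes them as an argument instead of calling generate_episode.

```python
import numpy as np

def first_visit_incremental(episodes, num_states, num_actions):
    """First-visit MC using an incremental mean instead of sum/count tables."""
    Q = np.zeros((num_states, num_actions))
    N = np.zeros((num_states, num_actions))
    for episode in episodes:
        # Index of the first occurrence of each (state, action) pair in this episode
        first_index = {}
        for t, (state, action, _) in enumerate(episode):
            first_index.setdefault((state, action), t)
        g = 0.0
        # Backward pass: the return at step t is r_t plus the return at step t + 1
        for t in range(len(episode) - 1, -1, -1):
            state, action, reward = episode[t]
            g += reward
            if first_index[(state, action)] == t:
                N[state, action] += 1
                # Running average of the first-visit returns
                Q[state, action] += (g - Q[state, action]) / N[state, action]
    return Q

# Hypothetical episodes of (state, action, reward)
ep1 = [(3, 2, -1), (4, 0, -1), (3, 2, -1), (4, 2, 10)]
ep2 = [(3, 2, -1), (4, 2, 10)]
Q = first_visit_incremental([ep1, ep2], num_states=6, num_actions=4)
print(Q[3, 2])  # 8.0
```

The running mean avoids storing a separate count-weighted sum, and the same update form reappears in methods that replace 1 / N with a constant step size.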

Every-visit Monte Carlo

```python
import numpy as np

def every_visit_mc(num_episodes):
    Q = np.zeros((num_states, num_actions))
    returns_sum = np.zeros((num_states, num_actions))
    returns_count = np.zeros((num_states, num_actions))
    for i in range(num_episodes):
        episode = generate_episode()
        # Every occurrence of (state, action) contributes the return that followed it
        for j, (state, action, reward) in enumerate(episode):
            returns_sum[state, action] += sum([x[2] for x in episode[j:]])
            returns_count[state, action] += 1
    # Average the returns, leaving never-visited pairs at 0
    nonzero_counts = returns_count != 0
    Q[nonzero_counts] = returns_sum[nonzero_counts] / returns_count[nonzero_counts]
    return Q
```
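every_visit_mc recomputes `sum([x[2] for x in episode[j:]])` at every step, which costs O(T^2) for an episode of T steps. Because each return is the current reward plus the return of the next step, a backward pass yields the same every-visit estimates in O(T). A sketch that takes pre-collected, hypothetical episodes instead of calling generate_episode:

```python
import numpy as np

def every_visit_from_episodes(episodes, num_states, num_actions):
    """Every-visit MC over given episodes, computing returns in one backward pass."""
    returns_sum = np.zeros((num_states, num_actions))
    returns_count = np.zeros((num_states, num_actions))
    for episode in episodes:
        g = 0.0
        # Walk the episode backwards: each step adds its reward to the running return
        for state, action, reward in reversed(episode):
            g += reward
            returns_sum[state, action] += g
            returns_count[state, action] += 1
    Q = np.zeros((num_states, num_actions))
    visited = returns_count != 0
    Q[visited] = returns_sum[visited] / returns_count[visited]
    return Q

# Hypothetical episodes of (state, action, reward)
ep1 = [(3, 2, -1), (4, 0, -1), (3, 2, -1), (4, 2, 10)]
ep2 = [(3, 2, -1), (4, 2, 10)]
Q = every_visit_from_episodes([ep1, ep2], num_states=6, num_actions=4)
print(round(Q[3, 2], 3))  # 8.333
```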

Getting the optimal policy

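```python
def get_policy():
    # Greedy policy: in each state, pick the action with the highest Q-value
    # (reads the global Q-table Q)
    policy = {state: np.argmax(Q[state]) for state in range(num_states)}
    return policy
```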
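get_policy acts greedily with respect to whichever global Q was assigned last, which is why the code that follows reassigns Q before each call. A variant that takes the Q-table as an argument is sketched below; the example Q-table is made up. np.argmax returns the first index among tied maxima, so a state whose Q-values were never updated (all zeros) maps to action 0.

```python
import numpy as np

def greedy_policy(Q):
    """Map each state (row of Q) to the action with the highest estimated Q-value."""
    # int() turns NumPy integer scalars into plain Python ints
    return {state: int(np.argmax(Q[state])) for state in range(Q.shape[0])}

# Made-up Q-table: 2 states x 4 actions; state 1 was never visited
Q = np.array([[0.0, 1.5, 3.0, 0.0],
              [0.0, 0.0, 0.0, 0.0]])
print(greedy_policy(Q))  # {0: 2, 1: 0}
```

Passing Q explicitly makes it impossible to extract a policy from a stale table by accident.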

Putting things together

```python
Q = first_visit_mc(1000)
policy_first_visit = get_policy()
print("First-visit policy: \n", policy_first_visit)
Q = every_visit_mc(1000)
policy_every_visit = get_policy()
print("Every-visit policy: \n", policy_every_visit)
```

```
First-visit policy:
 {0: 2, 1: 2, 2: 1, 3: 2, 4: 2, 5: 0}
Every-visit policy:
 {0: 2, 1: 2, 2: 1, 3: 2, 4: 2, 5: 0}
```
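To read the learned policy as a grid, the sketch below prints one arrow per state. It rests on two assumptions not stated in this section: the six states form a 2 x 3 grid numbered row by row, and actions follow the FrozenLake-style encoding 0 = left, 1 = down, 2 = right, 3 = up. Check both against the environment's definition before trusting the picture.

```python
# Assumed action encoding (FrozenLake-style): 0 = left, 1 = down, 2 = right, 3 = up
ACTION_ARROWS = {0: "<", 1: "v", 2: ">", 3: "^"}

def render_policy(policy, n_rows, n_cols):
    """Draw a state -> action policy as rows of arrows, states numbered row by row."""
    rows = []
    for r in range(n_rows):
        rows.append(" ".join(ACTION_ARROWS[policy[r * n_cols + c]] for c in range(n_cols)))
    return "\n".join(rows)

policy = {0: 2, 1: 2, 2: 1, 3: 2, 4: 2, 5: 0}
print(render_policy(policy, n_rows=2, n_cols=3))
# > > v
# > > <
```

The action shown for a terminal state is not meaningful: no episode takes an action there, so its Q-values stay at zero and np.argmax simply returns 0.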
