Temporal difference learning

Reinforcement Learning with Gymnasium in Python

Fouad Trad

Machine Learning Engineer

TD learning vs. Monte Carlo

TD learning

- Model-free

- Estimate Q-table based on interaction

- Update Q-table each step within episode

- Suitable for tasks with long/indefinite episodes

Monte Carlo

- Model-free

- Estimate Q-table based on interaction

- Update Q-table only after at least one full episode has finished

- Suitable for short episodic tasks
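The difference in update timing can be sketched on a single toy episode. Everything here is made up for illustration: the three-step episode, the table sizes, and the choice of a constant-step-size Monte Carlo update (Monte Carlo methods are often written with averaged returns instead).

```python
import numpy as np

# Hypothetical 3-step episode of (state, action, reward) triples.
episode = [(0, 1, 0.0), (1, 1, 0.0), (2, 1, 1.0)]
alpha, gamma = 0.1, 1.0

# Monte Carlo: wait for the episode to end, then move each visited pair
# toward the full return observed from that step onward.
Q_mc = np.zeros((4, 2))
G = 0.0
for state, action, reward in reversed(episode):
    G = reward + gamma * G
    Q_mc[state, action] += alpha * (G - Q_mc[state, action])

# TD (SARSA-style): update after every step, bootstrapping from the current
# estimate of the next state-action pair instead of the full return.
Q_td = np.zeros((4, 2))
for t, (state, action, reward) in enumerate(episode):
    if t + 1 < len(episode):
        next_state, next_action, _ = episode[t + 1]
        next_value = Q_td[next_state, next_action]
    else:
        next_value = 0.0  # episode ended, nothing to bootstrap from
    target = reward + gamma * next_value
    Q_td[state, action] += alpha * (target - Q_td[state, action])

print(Q_mc[:3, 1])
print(Q_td[:3, 1])
```

After this one episode, the Monte Carlo table credits all three visited pairs with the final reward, while the TD table has only updated the last pair. TD needs further episodes to propagate the reward backward, but it never has to wait for an episode to finish.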

TD learning as weather forecasting

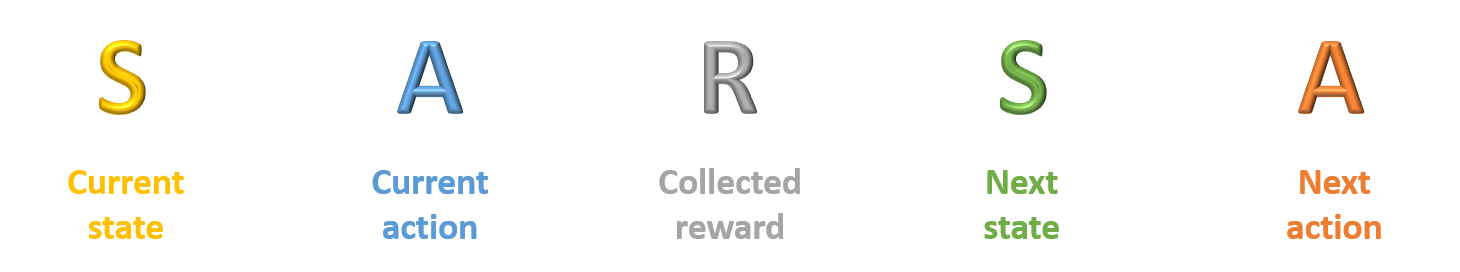

SARSA

- TD algorithm

- On-policy method: learns from the actions the agent actually takes under its current policy

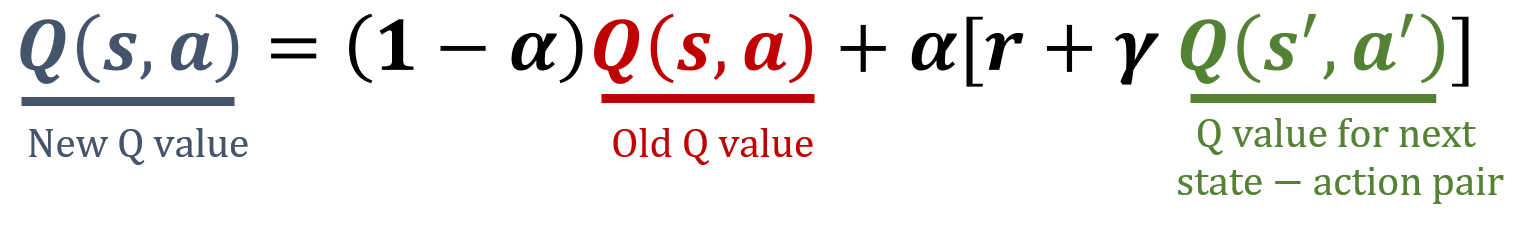

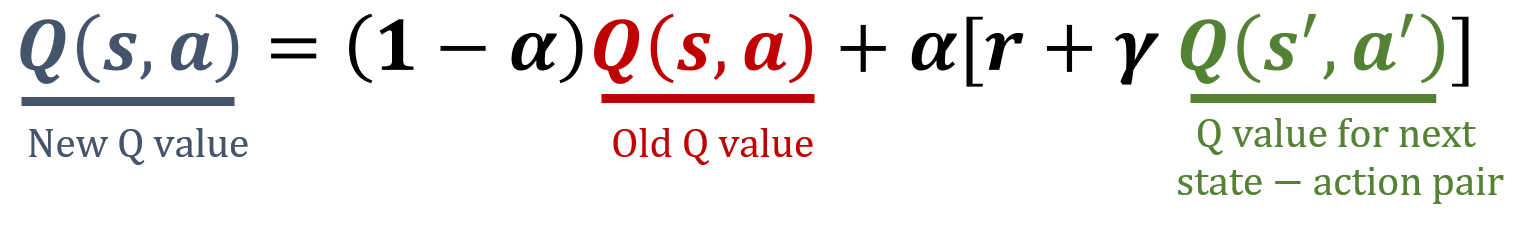

SARSA update rule

- $\alpha$: learning rate

- $\gamma$: discount factor

- Both between 0 and 1
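Written out in the same form as the deck's `update_q_table` code, the rule reads as follows. The symbols $s, a$ (current state and action), $r$ (reward), and $s', a'$ (next state and action) are notation assumed here; the code calls them `state`, `action`, `reward`, `next_state`, and `next_action`.

$$
Q(s, a) \leftarrow (1 - \alpha)\, Q(s, a) + \alpha \left[ r + \gamma\, Q(s', a') \right]
$$

Expanding the first term gives the equivalent error-correction form $Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma\, Q(s', a') - Q(s, a) \right]$, where the bracketed quantity is the TD error.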

Frozen Lake

Initialization

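    import gymnasium as gym
    import numpy as np

    # Deterministic Frozen Lake (no slipping)
    env = gym.make("FrozenLake-v1", is_slippery=False)
    num_states = env.observation_space.n
    num_actions = env.action_space.n
    Q = np.zeros((num_states, num_actions))  # one row per state, one column per action
    alpha = 0.1  # learning rate
    gamma = 1  # discount factor
    num_episodes = 1000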

SARSA loop

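    for episode in range(num_episodes):
        state, info = env.reset()
        action = env.action_space.sample()  # random action
        terminated = truncated = False
        # Stop on a terminal state or when the time limit truncates the episode
        while not (terminated or truncated):
            next_state, reward, terminated, truncated, info = env.step(action)
            next_action = env.action_space.sample()
            # Update right away, using the action that will actually be taken next
            update_q_table(state, action, reward, next_state, next_action)
            state, action = next_state, next_action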

SARSA updates

    def update_q_table(state, action, reward, next_state, next_action):
        old_value = Q[state, action]
        next_value = Q[next_state, next_action]
        # Blend the old estimate with the one-step TD target
        Q[state, action] = (1 - alpha) * old_value + alpha * (reward + gamma * next_value)
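To make the arithmetic concrete, here is a small worked example of two updates near the goal of the 4x4 map. It is a sketch under assumptions: the starting value `Q[14, 2] = 0.5` is made up, and the transitions are written by hand rather than produced by the environment.

```python
import numpy as np

alpha, gamma = 0.1, 1.0
Q = np.zeros((16, 4))
Q[14, 2] = 0.5  # made-up estimate, as if left by earlier updates

def update_q_table(state, action, reward, next_state, next_action):
    old_value = Q[state, action]
    next_value = Q[next_state, next_action]
    Q[state, action] = (1 - alpha) * old_value + alpha * (reward + gamma * next_value)

# Moving right from state 13 to 14 earns no reward, so the new value
# comes purely from bootstrapping: 0.9 * 0 + 0.1 * (0 + 1 * 0.5), about 0.05.
update_q_table(13, 2, 0.0, 14, 2)
print(Q[13, 2])

# Moving right from state 14 reaches the goal (state 15) with reward 1.
# The goal row is still all zeros: 0.9 * 0.5 + 0.1 * (1 + 0), about 0.55.
update_q_table(14, 2, 1.0, 15, 0)
print(Q[14, 2])
```

The first update shows how value flows backward from a neighboring estimate before any reward has been seen at that state; the second shows the reward itself entering the table.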

Deriving the optimal policy

    policy = get_policy()
    print(policy)

    {0: 1, 1: 2, 2: 1, 3: 0,
     4: 1, 5: 0, 6: 1, 7: 0,
     8: 2, 9: 1, 10: 1, 11: 0,
     12: 0, 13: 2, 14: 2, 15: 0}
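`get_policy` is not defined in this section. A minimal sketch, assuming it picks the highest-valued action for each state, could look like the following. The signature taking `Q` as an argument and the small example table are hypothetical; the deck calls the function with no arguments.

```python
import numpy as np

def get_policy(Q):
    # Hypothetical helper: map each state to the index of its highest-valued action.
    # Ties (such as an all-zero row) resolve to the first action, index 0.
    return {state: int(np.argmax(Q[state])) for state in range(Q.shape[0])}

# Made-up 3-state, 3-action table for illustration.
Q = np.array([[0.1, 0.5, 0.2],
              [0.0, 0.0, 0.0],
              [0.3, 0.1, 0.9]])
policy = get_policy(Q)
print(policy)  # {0: 1, 1: 0, 2: 2}
```

Under this assumption, the zeros for states 5, 7, 11, 12, and 15 in the printed policy would be tie-breaks rather than learned choices: those are the holes and the goal, whose rows are never updated because the episode ends there. In Frozen Lake the action indices mean 0 = left, 1 = down, 2 = right, 3 = up.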

Let's practice!
