Q-learning

Reinforcement Learning with Gymnasium in Python

Fouad Trad

Machine Learning Engineer

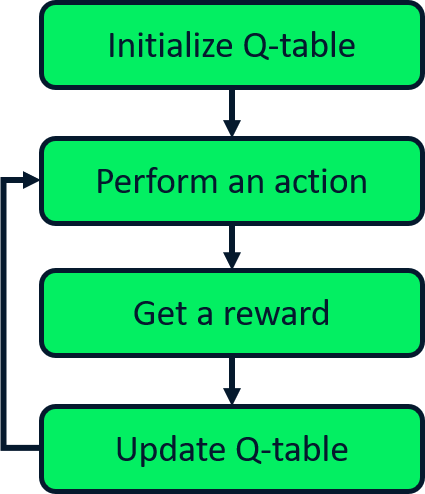

Introduction to Q-learning

- Stands for quality learning

- Model-free technique

- Learns the optimal Q-table by interacting with the environment

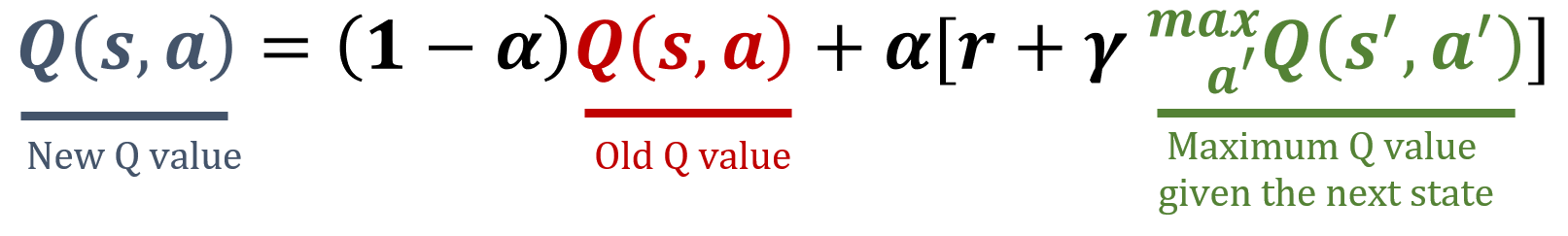

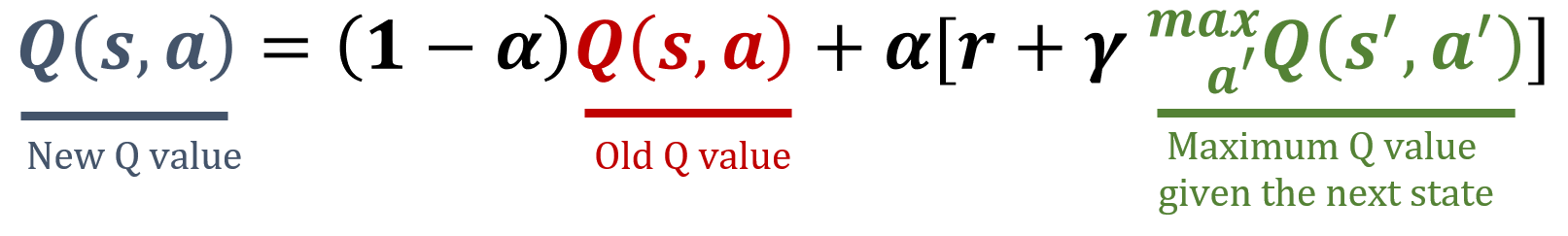
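The update rule that drives this learning can be written as follows. The form matches the `update_q_table` function shown later in these slides, where α is the learning rate, γ the discount factor, r the reward, and s' the new state; the notation itself is an assumption, since the original formula image is not available here.

```latex
Q(s, a) \leftarrow (1 - \alpha)\, Q(s, a) + \alpha \left( r + \gamma \max_{a'} Q(s', a') \right)
```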

Q-learning vs. SARSA

SARSA

- Updates use the Q-value of the next action actually taken

- On-policy learner

Q-learning

- Updates use the maximum Q-value of the next state, independent of the next action taken

- Off-policy learner
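The difference between the two learners comes down to the target each one bootstraps from. The sketch below is illustrative only: the tiny Q-table, the `gamma` value, and the helper names `sarsa_target` and `q_learning_target` are assumptions, not part of the course code.

```python
import numpy as np

gamma = 0.9  # discount factor (illustrative value)

# Hypothetical Q-table with 2 states and 2 actions
Q = np.array([[0.0, 0.0],
              [1.0, 5.0]])

def sarsa_target(reward, new_state, next_action):
    # On-policy: bootstrap from the action the agent actually takes next
    return reward + gamma * Q[new_state, next_action]

def q_learning_target(reward, new_state):
    # Off-policy: bootstrap from the best action available in the new state
    return reward + gamma * np.max(Q[new_state])

# Same transition, but the agent happens to pick the weaker action 0 next
sarsa_value = sarsa_target(reward=0.0, new_state=1, next_action=0)
q_value = q_learning_target(reward=0.0, new_state=1)
print(sarsa_value, q_value)
```

For the same transition, the SARSA target follows the action that was chosen, while the Q-learning target ignores that choice and uses the highest value in the new state.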

Q-learning implementation

import gymnasium as gym
import numpy as np

env = gym.make("FrozenLake-v1", is_slippery=True)

num_episodes = 1000
alpha = 0.1
gamma = 1

num_states, num_actions = env.observation_space.n, env.action_space.n
Q = np.zeros((num_states, num_actions))

reward_per_random_episode = []

Q-learning implementation

for episode in range(num_episodes):
    state, info = env.reset()
    terminated = False
    episode_reward = 0
    while not terminated:
        # Random action selection
        action = env.action_space.sample()
        # Take action and observe new state and reward
        new_state, reward, terminated, truncated, info = env.step(action)
        # Update Q-table
        update_q_table(state, action, reward, new_state)
        episode_reward += reward
        state = new_state
    reward_per_random_episode.append(episode_reward)

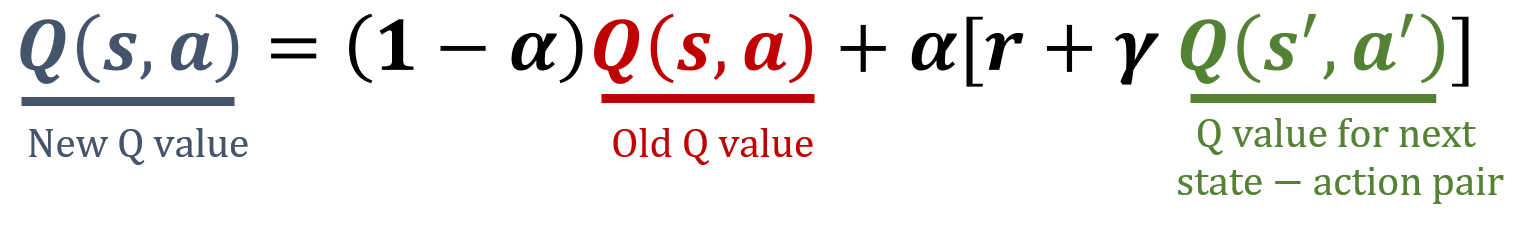

Q-learning update

def update_q_table(state, action, reward, new_state):
    old_value = Q[state, action]
    next_max = max(Q[new_state])
    Q[state, action] = (1 - alpha) * old_value + alpha * (reward + gamma * next_max)
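To make the arithmetic concrete, here is a single update worked through by hand. It reuses the update function and the `alpha` and `gamma` values from these slides; the two pre-filled Q-values and the chosen transition are hypothetical numbers picked for illustration.

```python
import numpy as np

alpha, gamma = 0.1, 1.0  # same values as in the setup code
Q = np.zeros((16, 4))    # 16 states and 4 actions, as in a 4x4 FrozenLake grid

# Hypothetical starting values, chosen only to make the numbers visible
Q[13, 2] = 0.2  # current estimate for (state 13, action 2)
Q[14, 2] = 0.5  # best known value in the new state 14

def update_q_table(state, action, reward, new_state):
    old_value = Q[state, action]
    next_max = max(Q[new_state])
    Q[state, action] = (1 - alpha) * old_value + alpha * (reward + gamma * next_max)

# Transition: from state 13, action 2 leads to state 14 with reward 0
update_q_table(state=13, action=2, reward=0.0, new_state=14)

# 0.9 * 0.2 + 0.1 * (0 + 1.0 * 0.5) = 0.18 + 0.05, approximately 0.23
updated_value = Q[13, 2]
print(updated_value)
```

The estimate moves a fraction `alpha` of the way from its old value toward the target `reward + gamma * next_max`, so a small `alpha` gives slow but stable updates.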

Using the policy

reward_per_learned_episode = []

policy = get_policy()
for episode in range(num_episodes):
    state, info = env.reset()
    terminated = False
    episode_reward = 0
    while not terminated:
        # Select the best action based on learned Q-table
        action = policy[state]
        # Take action and observe new state
        new_state, reward, terminated, truncated, info = env.step(action)
        state = new_state
        episode_reward += reward
    reward_per_learned_episode.append(episode_reward)

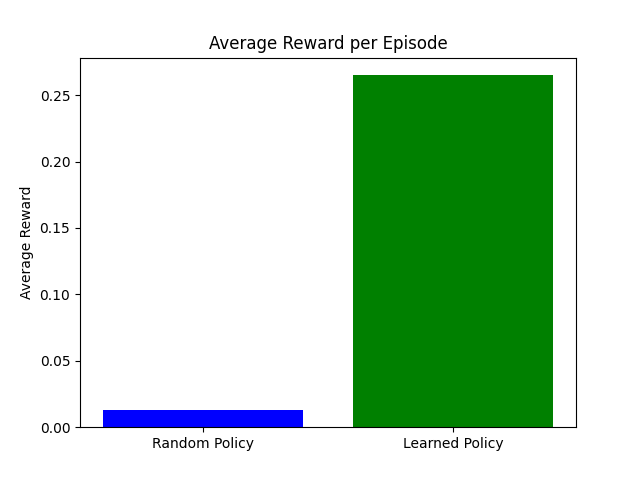
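`get_policy` is not defined in these slides. A minimal sketch, assuming it returns the greedy policy (for each state, the action with the highest Q-value) as a dictionary, might look like this; the small Q-table is a hypothetical stand-in for the learned one.

```python
import numpy as np

# Hypothetical 3-state, 2-action Q-table standing in for the learned one
Q = np.array([[0.1, 0.4],
              [0.7, 0.2],
              [0.0, 0.0]])

def get_policy():
    # Assumed behavior: map each state to the index of its highest-valued action
    return {state: int(np.argmax(Q[state])) for state in range(Q.shape[0])}

policy = get_policy()
print(policy)  # {0: 1, 1: 0, 2: 0}
```

Note that `np.argmax` returns the first index on ties, so a state whose Q-values are all equal (such as an unvisited state) maps to action 0.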

Q-learning evaluation

import numpy as np
import matplotlib.pyplot as plt

avg_random_reward = np.mean(reward_per_random_episode)
avg_learned_reward = np.mean(reward_per_learned_episode)

plt.bar(['Random Policy', 'Learned Policy'], [avg_random_reward, avg_learned_reward], color=['blue', 'green'])
plt.title('Average Reward per Episode')
plt.ylabel('Average Reward')
plt.show()

Let's practice!
