Double Q-learning

Reinforcement Learning with Gymnasium in Python

Fouad Trad

Machine Learning Engineer

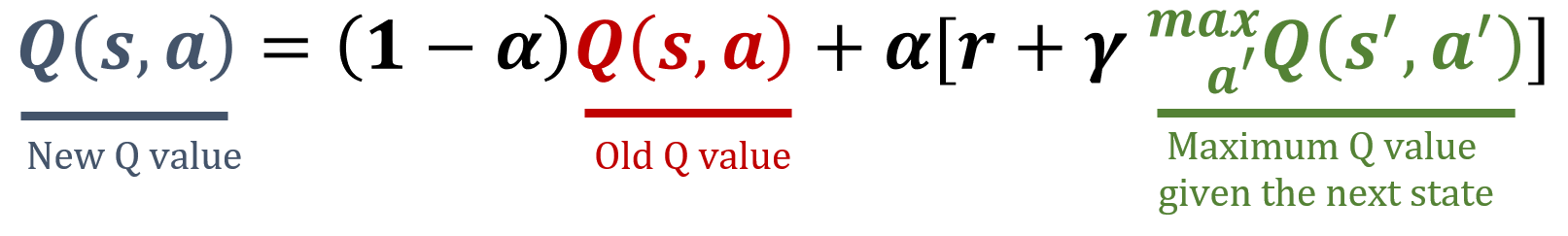

Q-learning

- Estimates the optimal action-value function

- Tends to overestimate Q-values because each update bootstraps from the maximum estimated Q-value of the next state

- The overestimation might lead to learning a suboptimal policy
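
The source of the overestimation can be written out explicitly. The following is a sketch in standard notation, chosen to match the form of the update used in this deck's code; the symbols $\hat{Q}$ (a noisy estimate of the Q-value) and $\mathbb{E}$ (expectation over that noise) are notational assumptions, not taken from the slides. The Q-learning update is

$$
Q(s,a) \leftarrow (1-\alpha)\,Q(s,a) + \alpha\left[r + \gamma \max_{a'} Q(s',a')\right]
$$

and, because the maximum is a convex function, the expected maximum of noisy estimates is at least the maximum of their expected values:

$$
\mathbb{E}\left[\max_{a'} \hat{Q}(s',a')\right] \;\ge\; \max_{a'} \mathbb{E}\left[\hat{Q}(s',a')\right]
$$

So even when every individual estimate is unbiased, the target built from the maximum tends to be too high.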

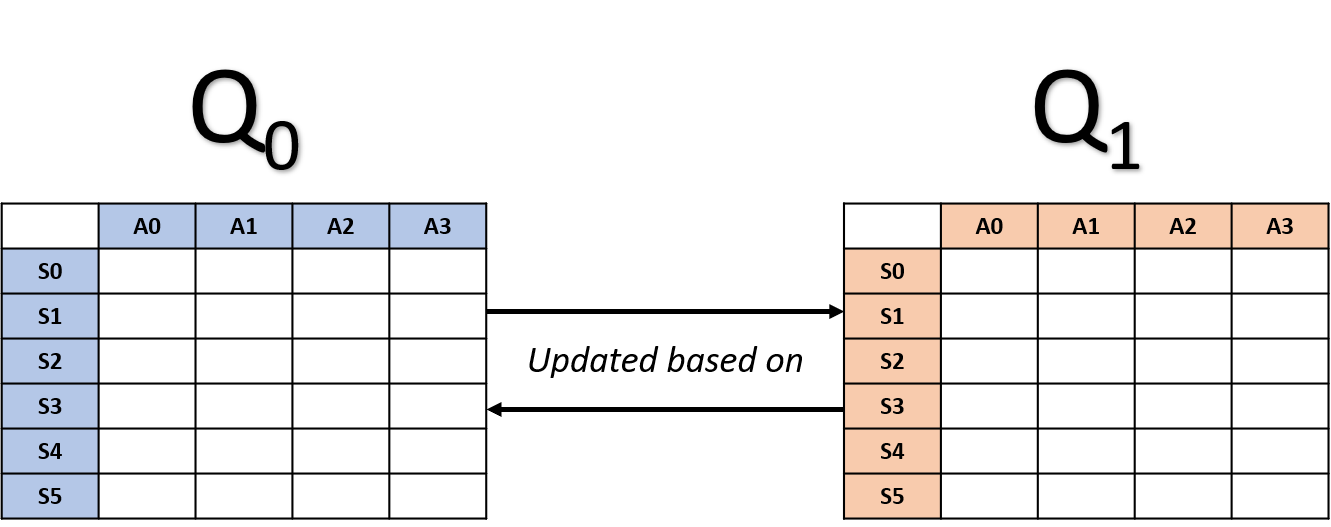

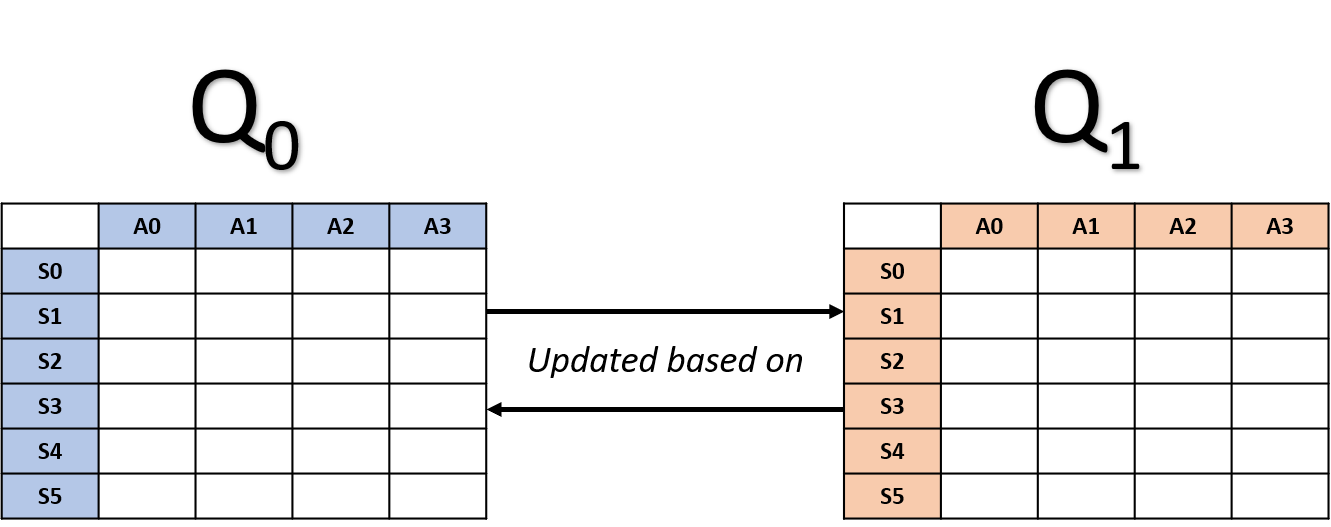

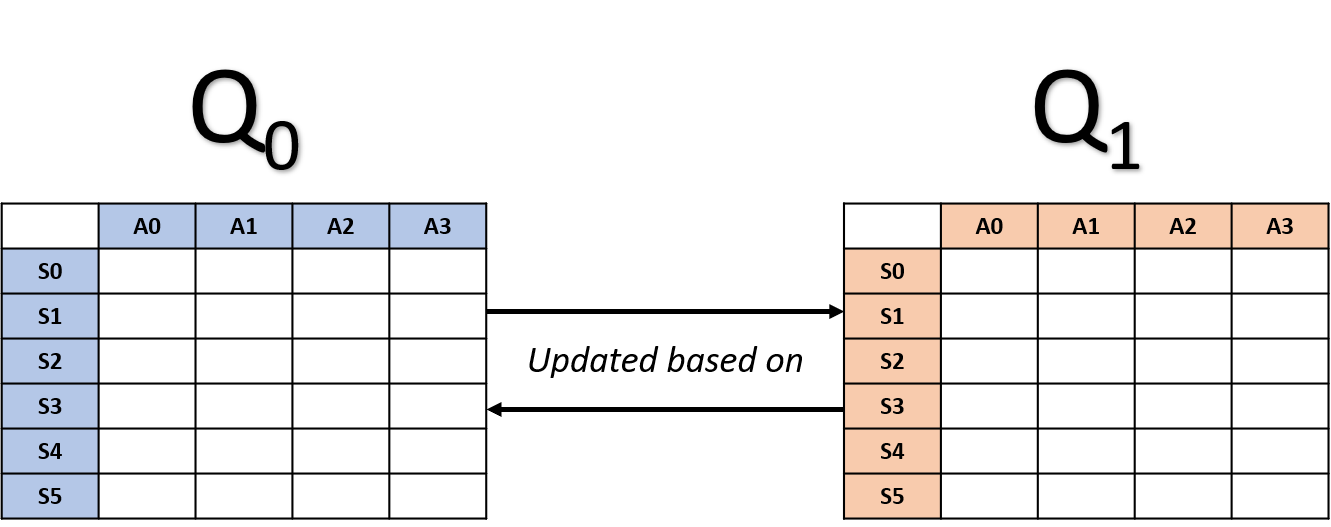

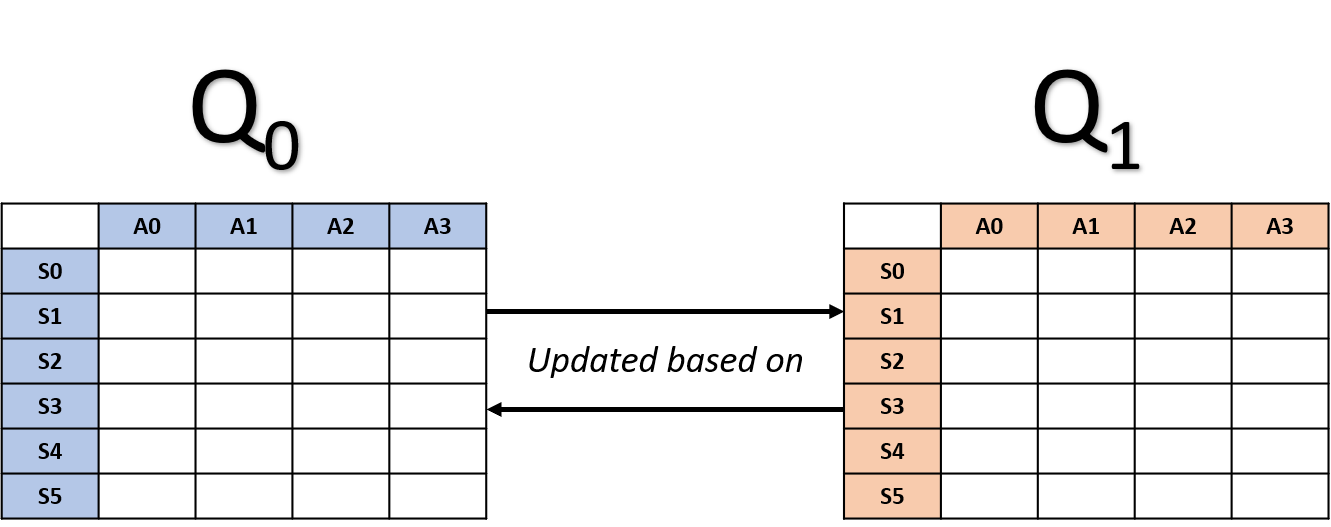

Double Q-learning

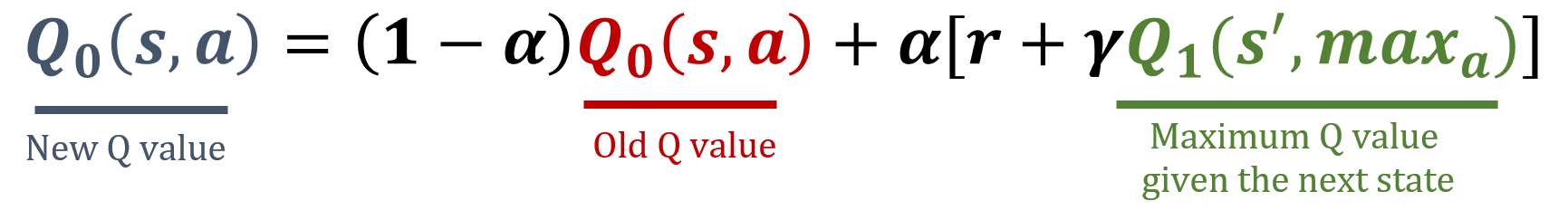

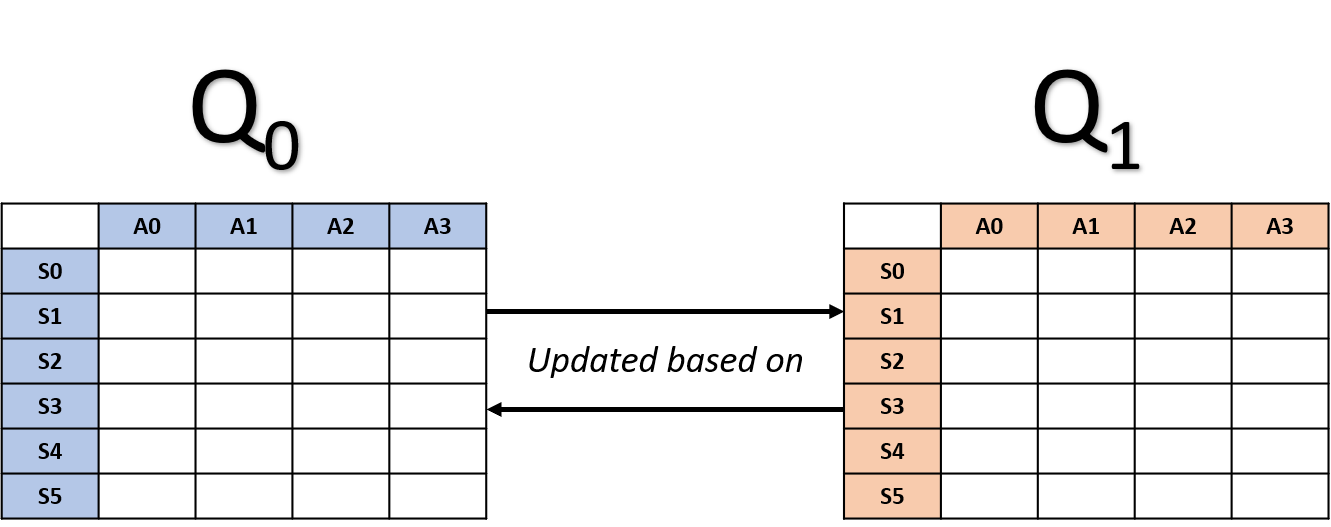

- Maintains two Q-tables

- Each table is updated using the other table's value estimate

- Reduces the risk of Q-value overestimation
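
The effect of using two sets of estimates can be illustrated without any environment. The sketch below is a toy setup, not part of the course code: it assumes four actions whose true value is 0 and models each estimate as that value plus Gaussian noise. It compares taking the maximum of one set of estimates with selecting the best action from one set and reading its value from an independent second set. The variable names are illustrative.

```python
import random
import statistics

random.seed(0)

n_actions = 4       # assumption: every action has a true value of 0.0
n_trials = 10_000   # number of independent repetitions to average over

single_estimates = []
double_estimates = []
for _ in range(n_trials):
    # Two independent sets of noisy, unbiased estimates (like two Q-tables)
    q_a = [random.gauss(0.0, 1.0) for _ in range(n_actions)]
    q_b = [random.gauss(0.0, 1.0) for _ in range(n_actions)]

    # Single estimator: select and evaluate with the same estimates
    single_estimates.append(max(q_a))

    # Double estimator: select with q_a, evaluate with q_b
    best_action = max(range(n_actions), key=lambda a: q_a[a])
    double_estimates.append(q_b[best_action])

single_mean = statistics.mean(single_estimates)
double_mean = statistics.mean(double_estimates)
print(f"true value: 0.0 | single: {single_mean:.2f} | double: {double_mean:.2f}")
```

In this toy setting the single estimator lands well above the true value, while the double estimator stays close to it, because the noise that drove the selection is independent of the noise in the value that is read out.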

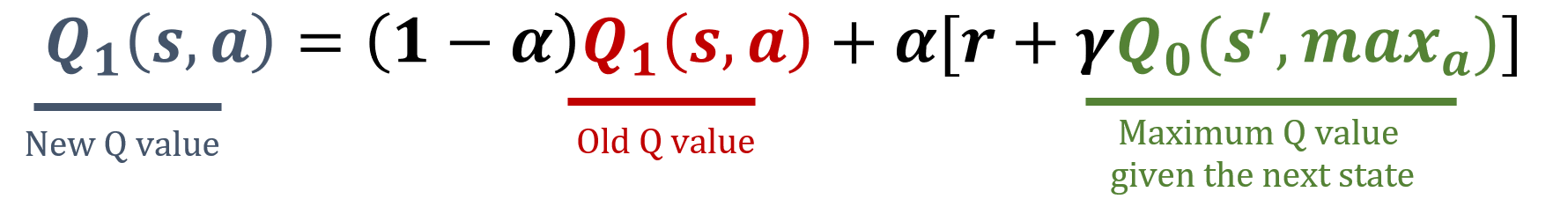

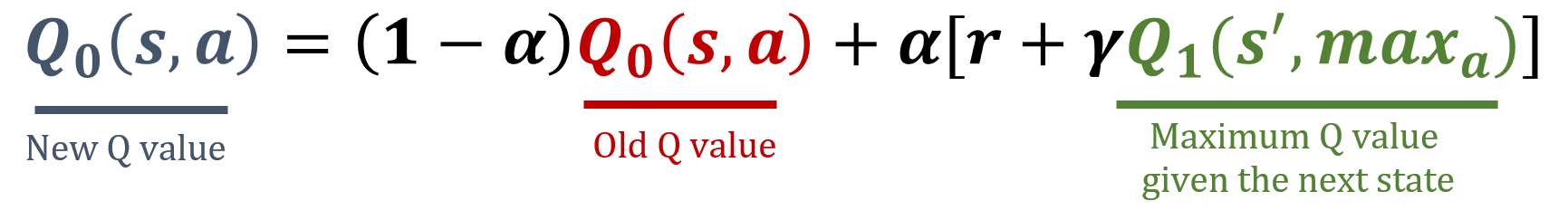

Double Q-learning updates

- Randomly select one of the two tables to update at each step

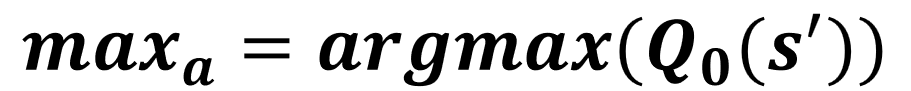

Q0 update

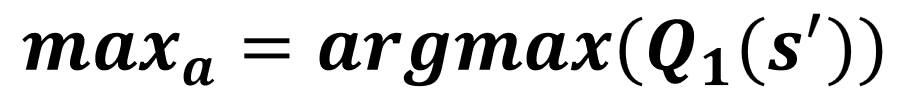

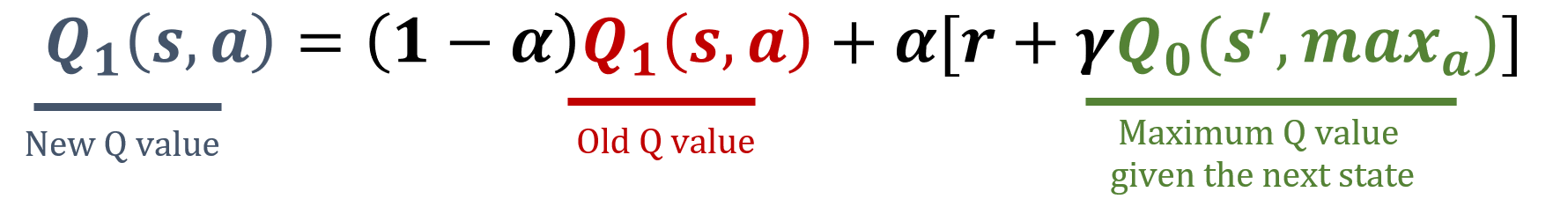

Q1 update
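
For reference, the two updates can be written as follows. This is a sketch in notation chosen to match the `update_q_tables()` code later in this deck, so the symbols may differ from those shown in the slide images. When Q0 is selected, Q0 picks the best next action and Q1 supplies its value:

$$
Q_0(s,a) \leftarrow (1-\alpha)\,Q_0(s,a) + \alpha\left[r + \gamma\, Q_1\!\left(s', \arg\max_{a'} Q_0(s',a')\right)\right]
$$

When Q1 is selected, the roles are swapped:

$$
Q_1(s,a) \leftarrow (1-\alpha)\,Q_1(s,a) + \alpha\left[r + \gamma\, Q_0\!\left(s', \arg\max_{a'} Q_1(s',a')\right)\right]
$$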

Double Q-learning

- Reduces overestimation bias

- Switches randomly between Q0 and Q1 updates

- Both tables contribute to the learning process

Implementation with Frozen Lake

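    import gymnasium as gym
    import numpy as np

    env = gym.make('FrozenLake-v1', is_slippery=False)
    num_states = env.observation_space.n
    n_actions = env.action_space.n

    # Two independent Q-tables. A comprehension is used because
    # [array] * 2 would store the same array object twice.
    Q = [np.zeros((num_states, n_actions)) for _ in range(2)]

    num_episodes = 1000
    alpha = 0.5   # learning rate
    gamma = 0.99  # discount factor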

Implementing update_q_tables()

    def update_q_tables(state, action, reward, next_state):
        # Select a random Q-table index (0 or 1)
        i = np.random.randint(2)
        # Best next action according to the selected table
        best_next_action = np.argmax(Q[i][next_state])
        # Update the selected table, using the other table to value that action
        Q[i][state, action] = (1 - alpha) * Q[i][state, action] + alpha * (
            reward + gamma * Q[1 - i][next_state, best_next_action])
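
To make the arithmetic concrete, here is a single update worked through by hand. It is a minimal sketch with made-up numbers: the two tiny tables (2 states, 2 actions, stored as nested lists) and the helper `double_q_update()` are hypothetical, and the table index `i` is passed in rather than drawn at random so the result is reproducible.

```python
alpha, gamma = 0.5, 0.99

# Hypothetical tables indexed as Q[table][state][action]
Q = [
    [[0.4, 0.0], [0.2, 0.8]],   # Q[0]
    [[0.0, 0.0], [0.5, 0.1]],   # Q[1]
]

def double_q_update(i, state, action, reward, next_state):
    """Update table i; same rule as update_q_tables(), but i is chosen by the caller."""
    row = Q[i][next_state]
    # The selected table picks the best next action...
    best_next_action = row.index(max(row))
    # ...and the other table supplies the value of that action
    target = reward + gamma * Q[1 - i][next_state][best_next_action]
    Q[i][state][action] = (1 - alpha) * Q[i][state][action] + alpha * target
    return best_next_action, target

best, target = double_q_update(i=0, state=0, action=0, reward=0.0, next_state=1)
print(best, round(target, 4), round(Q[0][0][0], 4))  # 1 0.099 0.2495
```

Q[0] prefers action 1 in the next state (0.8), but the target uses Q[1]'s more modest value for that action (0.1), giving 0.99 * 0.1 = 0.099. A standard Q-learning update on Q[0] alone would have used 0.99 * 0.8 = 0.792 instead.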

Training

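    for episode in range(num_episodes):
        state, info = env.reset()
        terminated, truncated = False, False
        # Stop when the episode terminates or is truncated (e.g. by a step limit)
        while not (terminated or truncated):
            # Explore with uniformly random actions
            action = np.random.choice(n_actions)
            next_state, reward, terminated, truncated, info = env.step(action)
            update_q_tables(state, action, reward, next_state)
            state = next_state

    # Combine both tables by averaging
    final_Q = (Q[0] + Q[1]) / 2
    # OR sum them; the greedy policy is the same either way
    # final_Q = Q[0] + Q[1]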
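
The same training loop can be tried without installing Gymnasium. The sketch below swaps Frozen Lake for a hypothetical 5-state corridor (actions 0 = left, 1 = right, reward 1 on reaching the rightmost state), which is an assumption made purely for illustration. The `step()` function and the nested-list tables are stand-ins, not part of the course code; the update rule and the averaging of the two tables follow the slides.

```python
import random

random.seed(0)

N_STATES, N_ACTIONS = 5, 2   # hypothetical corridor: states 0..4
GOAL = N_STATES - 1
alpha, gamma, num_episodes = 0.5, 0.99, 500

def step(state, action):
    """Deterministic corridor dynamics; reward 1.0 only on reaching the goal."""
    next_state = max(state - 1, 0) if action == 0 else state + 1
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL

# Two independent tables, indexed as Q[table][state][action]
Q = [[[0.0] * N_ACTIONS for _ in range(N_STATES)] for _ in range(2)]

def update_q_tables(state, action, reward, next_state):
    i = random.randrange(2)                      # table to update
    row = Q[i][next_state]
    best_next_action = row.index(max(row))       # selected by table i
    target = reward + gamma * Q[1 - i][next_state][best_next_action]
    Q[i][state][action] = (1 - alpha) * Q[i][state][action] + alpha * target

for _ in range(num_episodes):
    state, done = 0, False
    while not done:
        action = random.randrange(N_ACTIONS)     # random exploration
        next_state, reward, done = step(state, action)
        update_q_tables(state, action, reward, next_state)
        state = next_state

# Average the two tables, then act greedily in every non-terminal state
final_Q = [[(Q[0][s][a] + Q[1][s][a]) / 2 for a in range(N_ACTIONS)]
           for s in range(N_STATES)]
policy = {s: final_Q[s].index(max(final_Q[s])) for s in range(GOAL)}
print(policy)
```

In this corridor the greedy policy should choose "right" in every non-terminal state, which gives a quick sanity check on the update rule before moving to a larger environment.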

Agent's policy

    # int() keeps the printed values as plain Python integers
    policy = {state: int(np.argmax(final_Q[state]))
              for state in range(num_states)}
    print(policy)

    {0: 1, 1: 0, 2: 0, 3: 0,
     4: 1, 5: 0, 6: 1, 7: 0,
     8: 2, 9: 1, 10: 1, 11: 0,
     12: 0, 13: 2, 14: 2, 15: 0}
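
The printed dictionary is easier to read when laid out on the grid. The sketch below assumes the default 4x4 Frozen Lake map (`SFFF`, `FHFH`, `FFFH`, `HFFG`) and the environment's action encoding (0 = left, 1 = down, 2 = right, 3 = up), and it only models the non-slippery case. The helpers `render_policy()` and `follow_policy()` are hypothetical names introduced for this illustration.

```python
# Policy copied from the printed output
policy = {0: 1, 1: 0, 2: 0, 3: 0,
          4: 1, 5: 0, 6: 1, 7: 0,
          8: 2, 9: 1, 10: 1, 11: 0,
          12: 0, 13: 2, 14: 2, 15: 0}

# Assumed default 4x4 layout: S = start, F = frozen, H = hole, G = goal
GRID = ["SFFF", "FHFH", "FFFH", "HFFG"]
ARROWS = {0: "<", 1: "v", 2: ">", 3: "^"}               # left, down, right, up
MOVES = {0: (0, -1), 1: (1, 0), 2: (0, 1), 3: (-1, 0)}  # (row, col) deltas

def render_policy(policy, grid=GRID):
    """Return the policy as text, keeping holes (H) and the goal (G) as letters."""
    size = len(grid)
    rows = []
    for r, line in enumerate(grid):
        cells = [tile if tile in "HG" else ARROWS[policy[r * size + c]]
                 for c, tile in enumerate(line)]
        rows.append(" ".join(cells))
    return "\n".join(rows)

def follow_policy(policy, grid=GRID, max_steps=100):
    """Trace the visited states from the start, assuming deterministic moves."""
    size = len(grid)
    row, col = 0, 0
    path = [0]
    for _ in range(max_steps):
        if grid[row][col] in "HG":       # episode ends on a hole or the goal
            break
        d_row, d_col = MOVES[policy[row * size + col]]
        row = min(max(row + d_row, 0), size - 1)   # moves into walls stay in place
        col = min(max(col + d_col, 0), size - 1)
        path.append(row * size + col)
    return path

print(render_policy(policy))
print(follow_policy(policy))  # [0, 4, 8, 9, 13, 14, 15]
```

Under these assumptions, following the learned policy from the start state reaches the goal (state 15) in six moves without stepping on a hole.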

Let's practice!
