Multi-armed bandits

Reinforcement Learning with Gymnasium in Python

Fouad Trad

Machine Learning Engineer

Multi-armed bandits

- Gambler facing slot machines

- Challenge → maximize winnings

- Solution → balance exploration and exploitation

Slot machines

- Reward from an arm is 0 or 1

- Agent's goal → Accumulate maximum reward
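A 0-or-1 reward like this can be modeled as a Bernoulli draw. The sketch below is only an illustration: `pull_arm`, the winning probability of 0.7, and the seed are assumptions, not part of the original slides.

```python
import numpy as np

def pull_arm(win_prob, rng):
    """Return 1 with probability win_prob, otherwise 0 (a Bernoulli reward)."""
    return int(rng.random() < win_prob)

rng = np.random.default_rng(seed=0)  # seed chosen only for reproducibility
pulls = [pull_arm(0.7, rng) for _ in range(10_000)]  # 0.7 is a made-up probability
empirical_rate = sum(pulls) / len(pulls)  # should land close to 0.7
print(f"Empirical win rate: {empirical_rate:.3f}")
```

The agent never sees `win_prob` directly; it can only estimate it from the 0/1 outcomes it collects.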

Solving the problem

- Decayed epsilon-greedy

- With probability epsilon → select a random machine

- With probability 1 - epsilon → select the best machine so far

- Epsilon decreases over time
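The selection rule above might be sketched as follows. This version takes `values`, `epsilon`, and `rng` as explicit arguments so it can run on its own; the slides call `epsilon_greedy()` with no arguments, so the signature and the example estimates here are assumptions.

```python
import numpy as np

def epsilon_greedy(values, epsilon, rng):
    """Pick a random arm with probability epsilon, else the best-looking arm."""
    if rng.random() < epsilon:
        return int(rng.integers(len(values)))  # explore: uniform random arm
    return int(np.argmax(values))              # exploit: highest estimate so far

rng = np.random.default_rng(seed=0)
values = np.array([0.2, 0.9, 0.5])  # made-up estimates for illustration

# epsilon = 0 never explores, epsilon = 1 always explores
always_exploit = epsilon_greedy(values, epsilon=0.0, rng=rng)
explored = {epsilon_greedy(values, epsilon=1.0, rng=rng) for _ in range(200)}
print(always_exploit, sorted(explored))
```

Decaying epsilon moves the agent gradually from the second behavior toward the first.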

Initialization

import numpy as np

n_bandits = 4
true_bandit_probs = np.random.rand(n_bandits)
n_iterations = 100000
epsilon = 1.0
min_epsilon = 0.01
epsilon_decay = 0.999
counts = np.zeros(n_bandits)  # How many times each bandit was played
values = np.zeros(n_bandits)  # Estimated winning probability of each bandit
rewards = np.zeros(n_iterations)  # Reward history
selected_arms = np.zeros(n_iterations, dtype=int)  # Arm selection history
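To get a feel for these settings, it can help to work out how long epsilon takes to reach its floor. The short calculation below assumes the multiplicative update `epsilon * epsilon_decay` is applied once per iteration; `steps_to_floor` is a name introduced here for illustration.

```python
import math

epsilon, min_epsilon, epsilon_decay = 1.0, 0.01, 0.999

# Smallest n such that epsilon * epsilon_decay**n <= min_epsilon
steps_to_floor = math.ceil(math.log(min_epsilon / epsilon) / math.log(epsilon_decay))
print(steps_to_floor)
```

With these values the floor is reached after roughly 4,600 iterations, so most of a 100,000-iteration run is spent exploiting with only a 1% chance of a random pull.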

Interaction loop

for i in range(n_iterations):
    arm = epsilon_greedy()
    reward = np.random.rand() < true_bandit_probs[arm]
    rewards[i] = reward
    selected_arms[i] = arm
    counts[arm] += 1
    values[arm] += (reward - values[arm]) / counts[arm]
    epsilon = max(min_epsilon, epsilon * epsilon_decay)
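The `values[arm]` update in the loop is an incremental mean: it keeps a running average without storing every reward. A small check, using made-up rewards for a single arm, suggests it matches the ordinary sample mean:

```python
import numpy as np

rng = np.random.default_rng(seed=1)
observed = rng.integers(0, 2, size=50)  # made-up 0/1 rewards for one arm

count, value = 0, 0.0
for reward in observed:
    count += 1
    value += (reward - value) / count  # same update rule as in the loop above

batch_mean = observed.mean()
print(f"incremental: {value:.4f}, batch: {batch_mean:.4f}")
```

Both numbers agree up to floating-point error, which is why `values` can be read as the estimated winning probability of each bandit.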

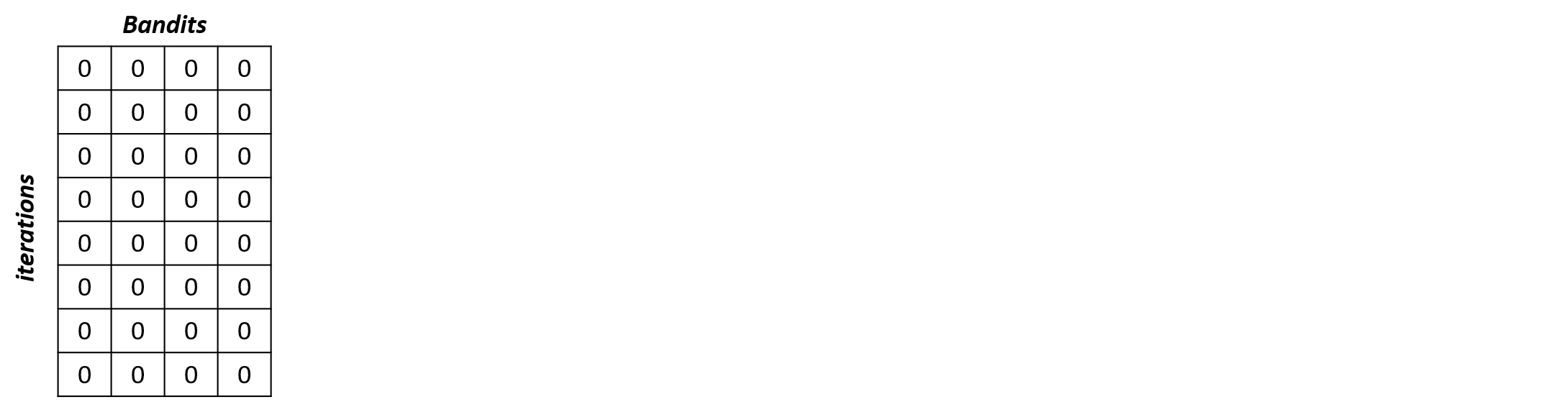

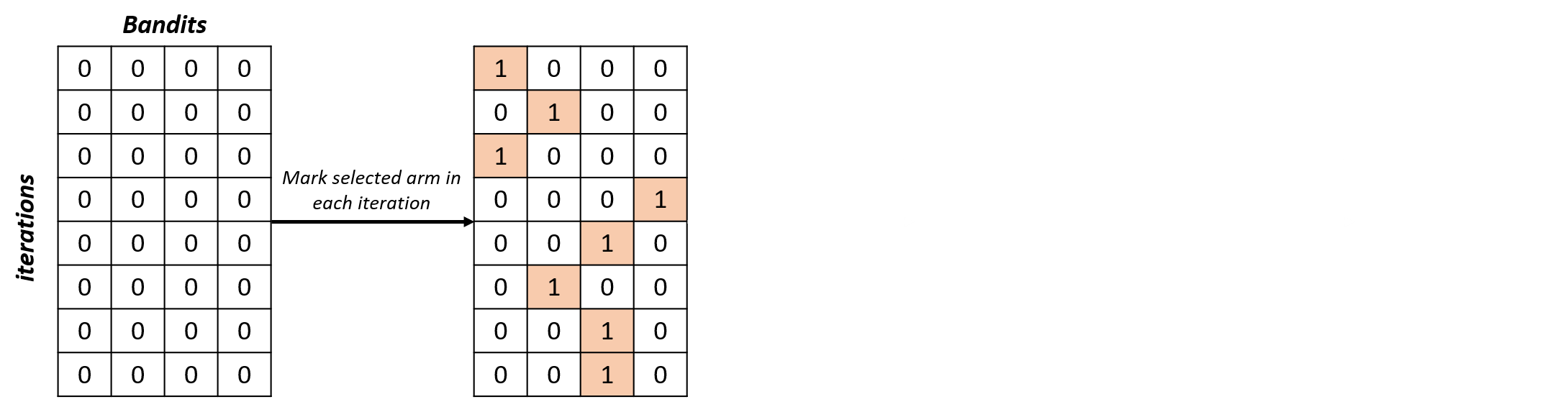

Analyzing selections

selections_percentage = np.zeros((n_iterations, n_bandits))
for i in range(n_iterations):
    selections_percentage[i, selected_arms[i]] = 1
selections_percentage = np.cumsum(selections_percentage, axis=0) / np.arange(1, n_iterations + 1).reshape(-1, 1)
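A tiny worked example may make the one-hot and cumulative-sum steps easier to follow. The five arm choices below are hypothetical, and the fancy-indexing assignment is an assumed vectorized alternative to the `for` loop, not the slides' own code.

```python
import numpy as np

selected = np.array([0, 1, 1, 2, 1])  # hypothetical arm choices over 5 steps
n_steps, n_arms = len(selected), 3

# One row per step, with a 1 in the column of the arm that was played
one_hot = np.zeros((n_steps, n_arms))
one_hot[np.arange(n_steps), selected] = 1

# Running count of each arm divided by the number of steps so far
running_share = np.cumsum(one_hot, axis=0) / np.arange(1, n_steps + 1).reshape(-1, 1)
print(running_share[-1])
```

Each row of `running_share` sums to 1, and the last row gives each arm's overall share of the selections (here 1/5, 3/5, and 1/5).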

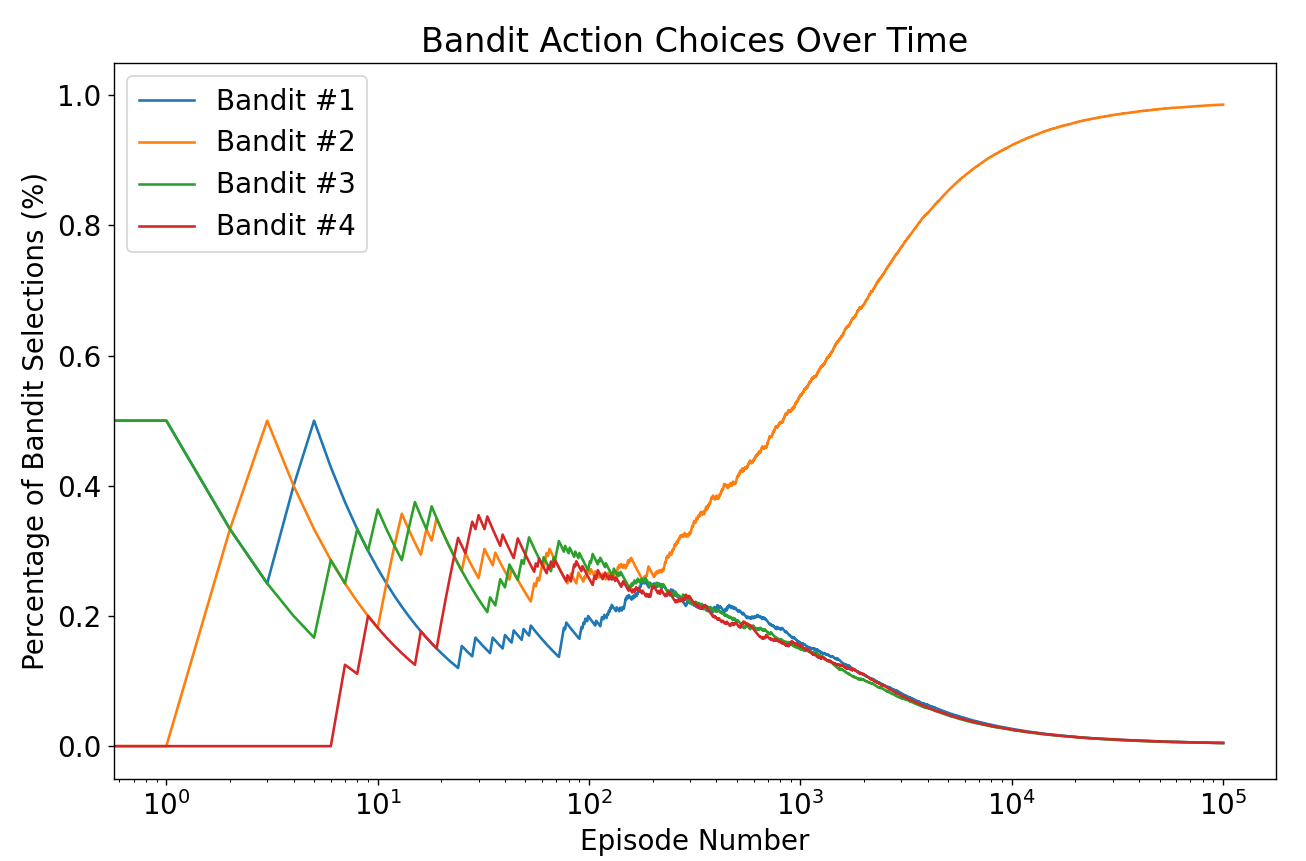

import matplotlib.pyplot as plt

# Note: selections_percentage holds fractions in [0, 1]; multiply by 100 for true percentages
for arm in range(n_bandits):
    plt.plot(selections_percentage[:, arm], label=f'Bandit #{arm+1}')
plt.xscale('log')
plt.title('Bandit Action Choices Over Time')
plt.xlabel('Episode Number')
plt.ylabel('Percentage of Bandit Selections (%)')
plt.legend()
plt.show()

for i, prob in enumerate(true_bandit_probs, 1):
    print(f"Bandit #{i} -> {prob:.2f}")

Bandit #1 -> 0.37

Bandit #2 -> 0.95

Bandit #3 -> 0.73

Bandit #4 -> 0.60

- Agent learns to select the bandit with the highest winning probability (here Bandit #2, at 0.95)
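One way to check this numerically, rather than from the plot, is to compare the learned estimates and play counts at the end of a run. The sketch below is a compact, self-contained rerun under assumptions: the probabilities are the rounded values from the printout, the seed is arbitrary, and the run is shortened to 20,000 iterations for speed.

```python
import numpy as np

rng = np.random.default_rng(seed=42)              # arbitrary seed
true_probs = np.array([0.37, 0.95, 0.73, 0.60])   # rounded values from the printout
n_iterations = 20_000                             # shorter than the slides' run
epsilon, min_epsilon, epsilon_decay = 1.0, 0.01, 0.999

counts = np.zeros(len(true_probs))
values = np.zeros(len(true_probs))

for _ in range(n_iterations):
    if rng.random() < epsilon:
        arm = int(rng.integers(len(true_probs)))  # explore
    else:
        arm = int(np.argmax(values))              # exploit
    reward = float(rng.random() < true_probs[arm])
    counts[arm] += 1
    values[arm] += (reward - values[arm]) / counts[arm]
    epsilon = max(min_epsilon, epsilon * epsilon_decay)

best_estimated = int(np.argmax(values))
most_played = int(np.argmax(counts))
print(f"Best estimated: Bandit #{best_estimated + 1}, most played: Bandit #{most_played + 1}")
```

If learning worked, the arm with the highest estimate and the most-played arm should both be the one with the highest true probability.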

Let's practice!
