Expected SARSA

Reinforcement Learning with Gymnasium in Python

Fouad Trad

Machine Learning Engineer

Expected SARSA

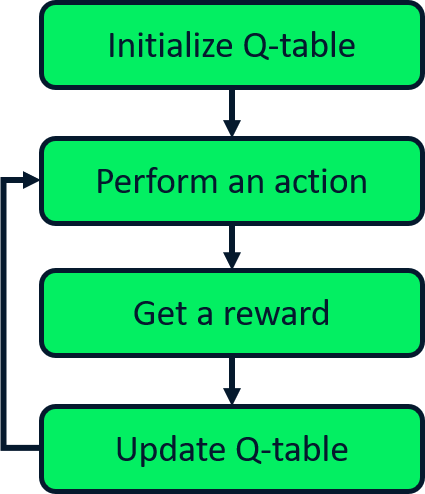

- TD method

- Model-free technique

- Updates Q-table differently than SARSA and Q-learning

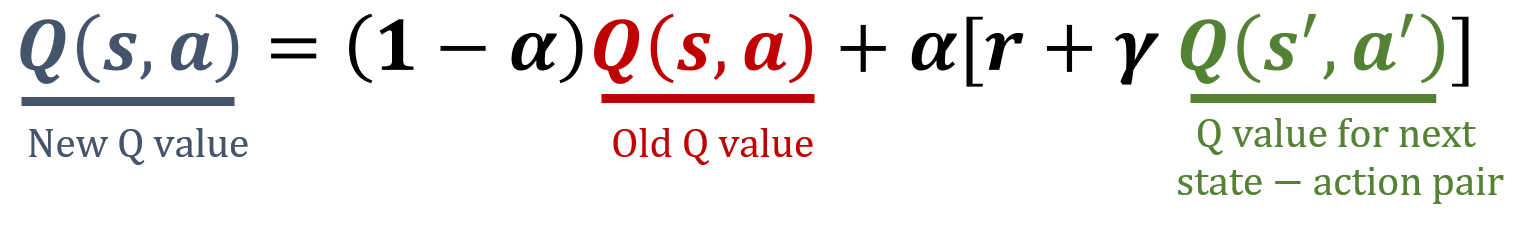

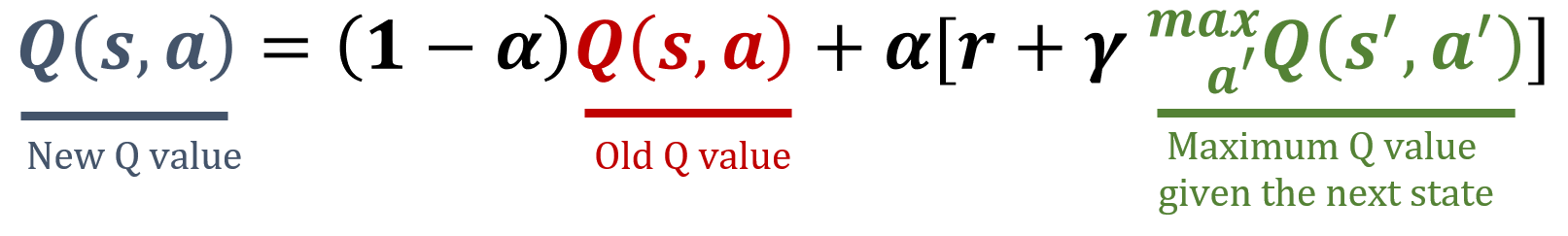

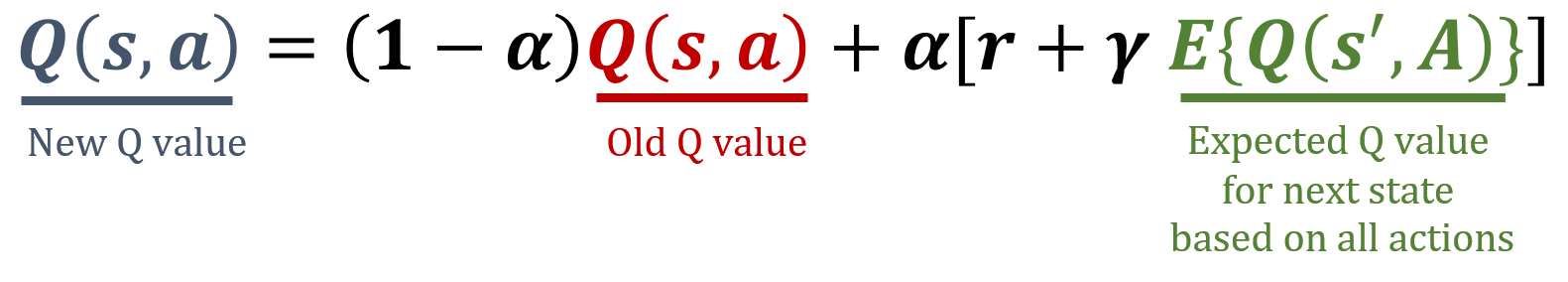

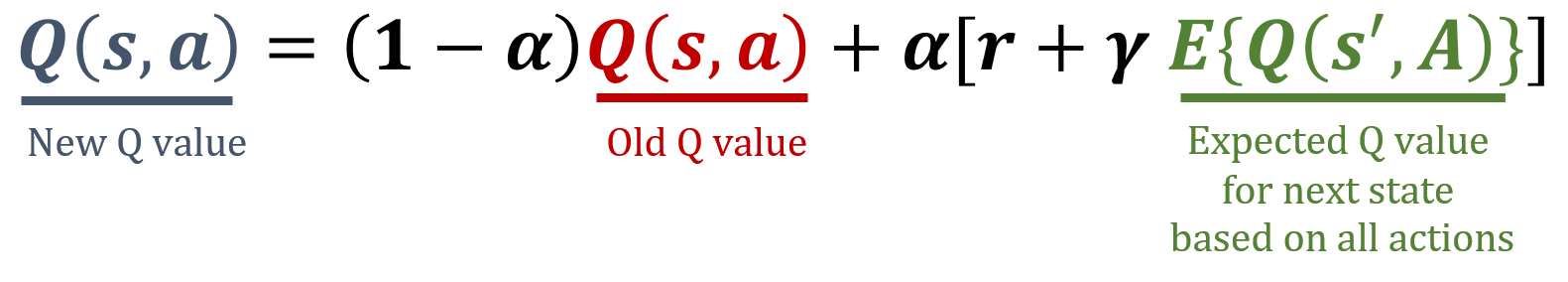

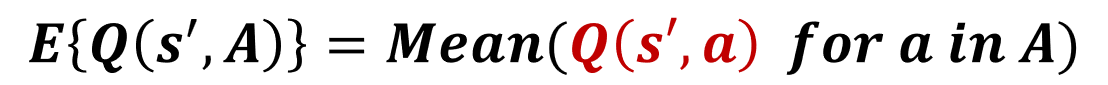

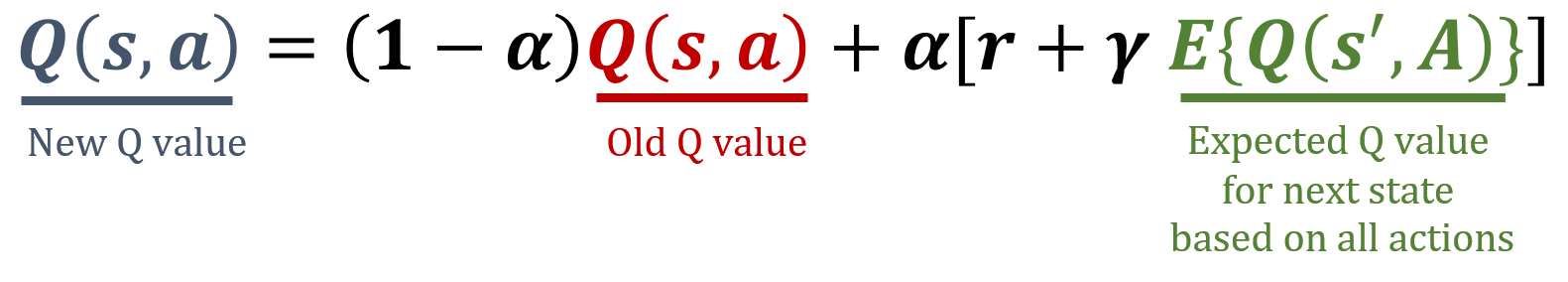

Expected SARSA update

SARSA

Q-learning

Expected SARSA
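The three update rules named above can be written side by side. This is a reconstruction in standard notation, assuming the same weighted-average form as the `update_q_table` code later in these slides, with $s'$ the next state, $a'$ the next action, and $\pi$ the policy used to pick actions; it is a sketch, not a copy of the original slide images.

```latex
\[
\begin{aligned}
\text{SARSA:}\quad & Q(s,a) \leftarrow (1-\alpha)\,Q(s,a) + \alpha\left[r + \gamma\,Q(s',a')\right] \\
\text{Q-learning:}\quad & Q(s,a) \leftarrow (1-\alpha)\,Q(s,a) + \alpha\left[r + \gamma \max_{a'} Q(s',a')\right] \\
\text{Expected SARSA:}\quad & Q(s,a) \leftarrow (1-\alpha)\,Q(s,a) + \alpha\left[r + \gamma \sum_{a'} \pi(a' \mid s')\,Q(s',a')\right]
\end{aligned}
\]
```

Only the bracketed target differs: SARSA uses the action actually taken next, Q-learning uses the best next action, and Expected SARSA averages over all next actions weighted by their probabilities.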

Expected value of next state

- Takes into account all actions

- Random actions → equal probabilities
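The two points above can be sketched in a few lines. The helper `expected_q_value` and the Q-values in `q_next` are hypothetical and chosen only for illustration; the sketch assumes four actions and shows that, with equal probabilities, the weighted sum reduces to a plain mean.

```python
import numpy as np

def expected_q_value(q_row, action_probs):
    """Return the sum over actions of pi(a|s') * Q(s', a)."""
    q_row = np.asarray(q_row, dtype=float)
    action_probs = np.asarray(action_probs, dtype=float)
    return float(np.dot(action_probs, q_row))

# Illustrative Q-values for a single next state (not taken from a trained agent)
q_next = [0.0, 0.4, 0.8, 0.0]

# Random actions: every one of the 4 actions has probability 1/4
uniform = np.full(4, 1 / 4)

expected_uniform = expected_q_value(q_next, uniform)
mean_shortcut = float(np.mean(q_next))  # same value when probabilities are equal
```

If the agent followed a non-uniform policy instead, the same helper would apply with different `action_probs`, and the mean shortcut would no longer hold.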

Implementation with Frozen Lake

import gymnasium as gym
import numpy as np

env = gym.make('FrozenLake-v1', is_slippery=False)
num_states = env.observation_space.n
num_actions = env.action_space.n
Q = np.zeros((num_states, num_actions))

gamma = 0.99
alpha = 0.1
num_episodes = 1000

Expected SARSA update rule

def update_q_table(state, action, next_state, reward):
    # Actions are chosen uniformly at random, so the expectation is a plain mean
    expected_q = np.mean(Q[next_state])
    Q[state, action] = (1-alpha) * Q[state, action] + alpha * (reward + gamma * expected_q)
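To make the arithmetic of a single update concrete, here is a minimal worked sketch. It redefines the same update function against a small Q-table so it runs on its own; the values placed in `Q[1]` and the chosen transition are assumptions for illustration, not results from training.

```python
import numpy as np

alpha, gamma = 0.1, 0.99
Q = np.zeros((16, 4))

# Illustrative values for next state 1 (assumed, not learned)
Q[1] = [0.0, 0.4, 0.8, 0.0]

def update_q_table(state, action, next_state, reward):
    # Mean over actions = expected value under a uniform random policy
    expected_q = np.mean(Q[next_state])
    Q[state, action] = (1 - alpha) * Q[state, action] + alpha * (reward + gamma * expected_q)

# One transition: from state 0, take action 2, land in state 1 with reward 0
update_q_table(state=0, action=2, next_state=1, reward=0.0)
updated_value = Q[0, 2]  # 0.9 * 0 + 0.1 * (0 + 0.99 * 0.3) = 0.0297
```

The update moves `Q[0, 2]` a fraction `alpha` of the way toward the target `reward + gamma * expected_q`, which is why the first update from zero is small.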

Training

for i in range(num_episodes):
    state, info = env.reset()
    terminated = truncated = False
    # End the episode on a terminal state or when the step limit truncates it
    while not (terminated or truncated):
        action = env.action_space.sample()
        next_state, reward, terminated, truncated, info = env.step(action)
        update_q_table(state, action, next_state, reward)
        state = next_state

Agent's policy

policy = {state: int(np.argmax(Q[state]))
          for state in range(num_states)}
print(policy)

{ 0: 1, 1: 2, 2: 1, 3: 0,
  4: 1, 5: 0, 6: 1, 7: 0,
  8: 2, 9: 2, 10: 1, 11: 0,
  12: 0, 13: 2, 14: 2, 15: 0}

Let's practice!
