Policy iteration and value iteration

Reinforcement Learning with Gymnasium in Python

Fouad Trad

Machine Learning Engineer

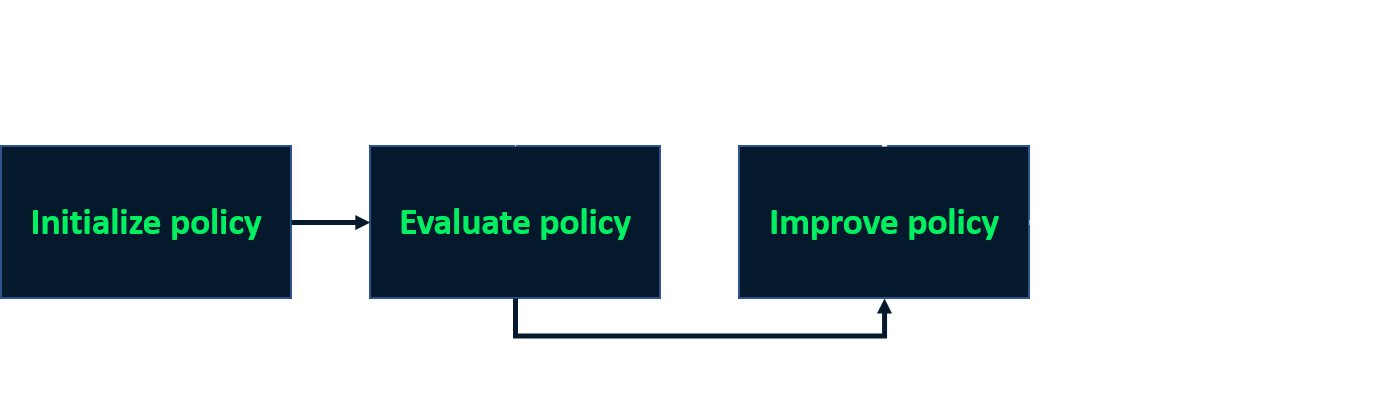

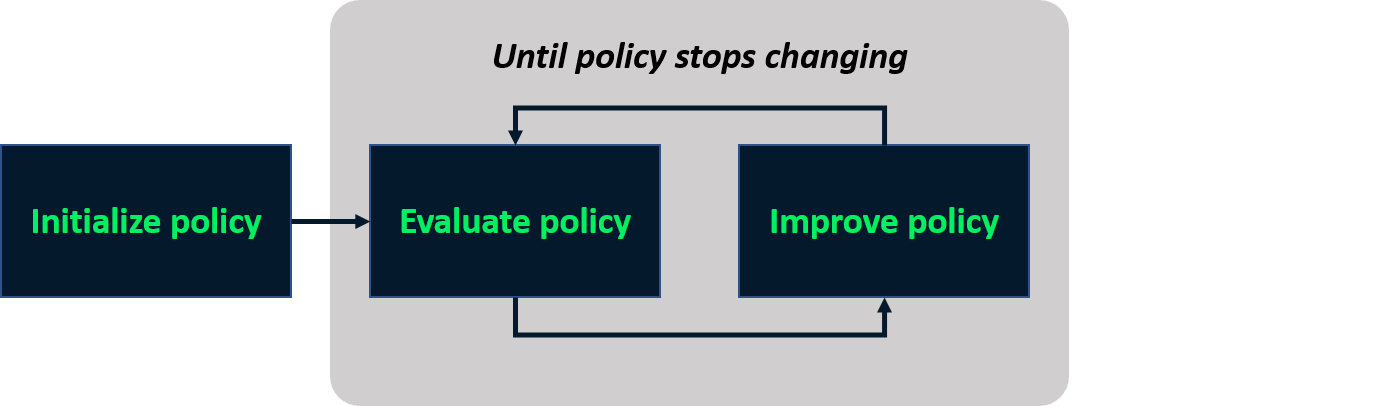

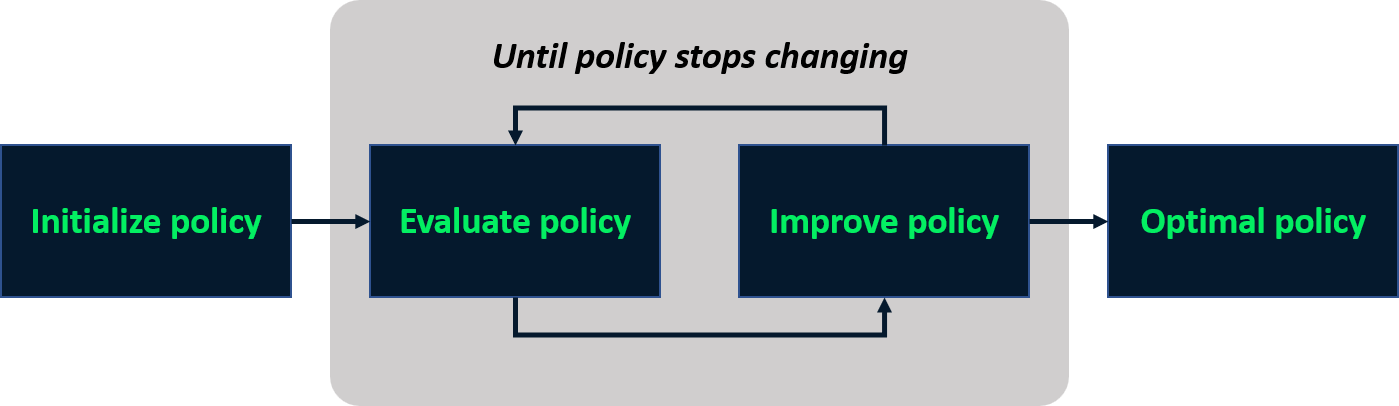

Policy iteration

- Iterative process to find optimal policy

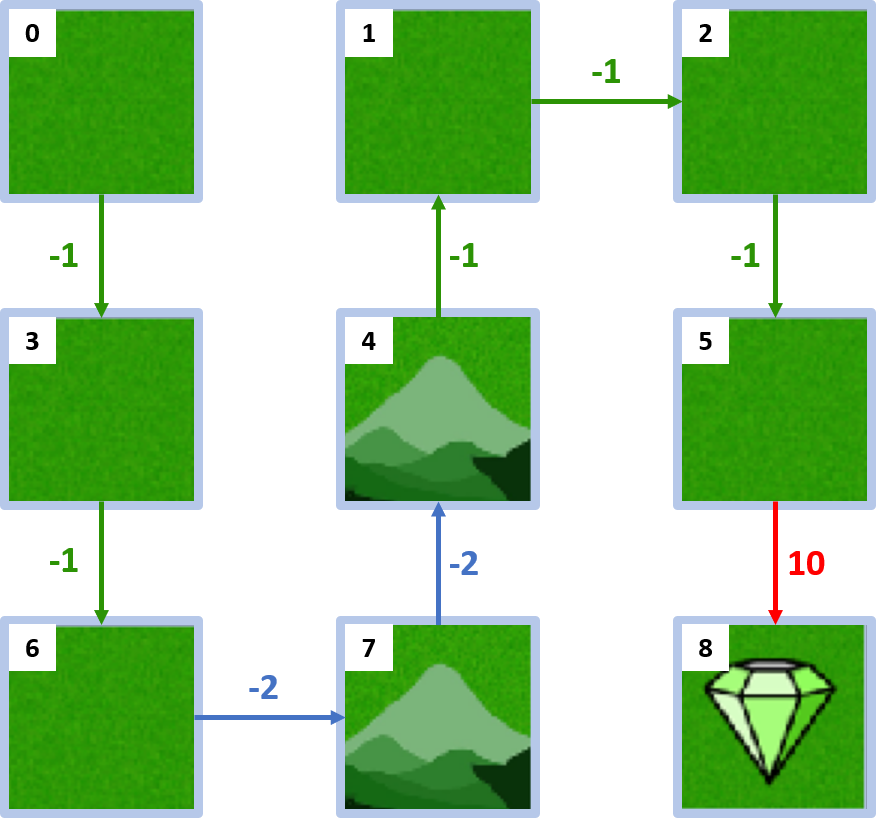

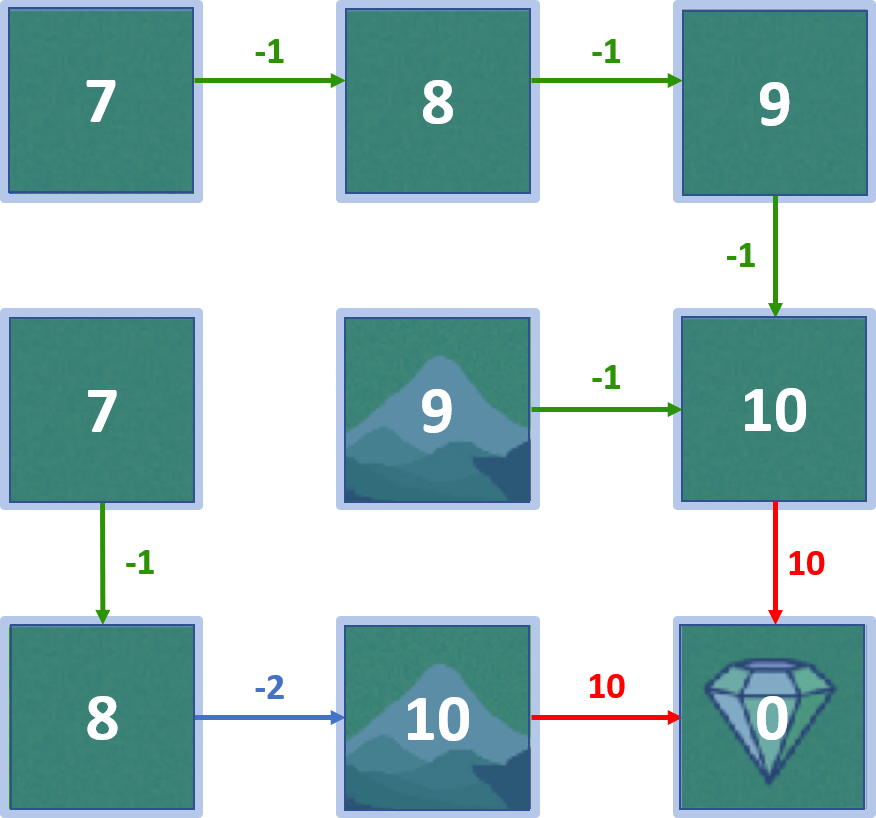

Grid world

policy = {
    0: 1, 1: 2, 2: 1,
    3: 1, 4: 3, 5: 1,
    6: 2, 7: 3
}

Policy evaluation

def policy_evaluation(policy):
    # Value of every state when following the given policy
    V = {state: compute_state_value(state, policy) for state in range(num_states)}
    return V
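`compute_state_value` is not defined on this slide. The following is a minimal sketch of what it might look like, assuming a deterministic environment, a discount factor `gamma`, and a policy that always reaches the terminal state (otherwise the recursion would never end). The three-state chain `P` is a hypothetical toy that mirrors the layout of Gymnasium's `env.P` transition table; it is not the course's grid world.

```python
# Hypothetical toy MDP: 0 -> 1 -> 2 (terminal), with a single action (0) per state.
# Layout mirrors env.P: P[state][action] = [(prob, next_state, reward, terminated)]
P = {
    0: {0: [(1.0, 1, -1, False)]},
    1: {0: [(1.0, 2, 10, True)]},
    2: {0: [(1.0, 2, 0, True)]},
}
num_states = 3
terminal_state = 2
gamma = 1.0  # assumed discount factor


def compute_state_value(state, policy):
    """Value of `state` under `policy`, computed by recursing on the next state."""
    if state == terminal_state:
        return 0
    action = policy[state]
    # Deterministic assumption: only the first transition is read
    _, next_state, reward, _ = P[state][action][0]
    return reward + gamma * compute_state_value(next_state, policy)


def policy_evaluation(policy):
    return {state: compute_state_value(state, policy) for state in range(num_states)}


policy = {0: 0, 1: 0}
V = policy_evaluation(policy)
print(V)
```

Here state 1 is worth the final reward of 10, and state 0 is worth one step less because of the -1 step reward.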

Policy improvement

def policy_improvement(policy):
    improved_policy = {s: 0 for s in range(num_states-1)}
    Q = {(state, action): compute_q_value(state, action, policy)
         for state in range(num_states) for action in range(num_actions)}
    # For each non-terminal state, pick the action with the highest Q-value
    for state in range(num_states-1):
        max_action = max(range(num_actions), key=lambda action: Q[(state, action)])
        improved_policy[state] = max_action
    return improved_policy

Policy iteration

def policy_iteration():
    policy = {0:1, 1:2, 2:1, 3:1, 4:3, 5:1, 6:2, 7:3}
    while True:
        V = policy_evaluation(policy)
        improved_policy = policy_improvement(policy)
        # Stop once improvement no longer changes the policy
        if improved_policy == policy:
            break
        policy = improved_policy
    return policy, V
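To see the evaluate-then-improve loop run end to end, here is a self-contained sketch on a hypothetical 2x2 grid (not the course's grid world). The `transitions` table, the reward values, and `gamma = 1` are assumptions chosen for illustration; the helper functions follow the shape of the ones in these slides but are simplified to deterministic `(next_state, reward)` pairs.

```python
# Hypothetical 2x2 grid:
#   0 1
#   2 3   <- state 3 is terminal
# Actions: 0 = right, 1 = down. Moving into a wall leaves the agent in place.
# transitions[state][action] = (next_state, reward); all moves are deterministic.
transitions = {
    0: {0: (1, -5), 1: (2, -1)},
    1: {0: (1, -1), 1: (3, 10)},
    2: {0: (3, 10), 1: (2, -1)},
}
num_states, num_actions, terminal_state, gamma = 4, 2, 3, 1


def compute_state_value(state, policy):
    # Recursive evaluation; terminates only if the policy reaches the terminal state
    if state == terminal_state:
        return 0
    next_state, reward = transitions[state][policy[state]]
    return reward + gamma * compute_state_value(next_state, policy)


def compute_q_value(state, action, policy):
    # Take `action` once, then follow `policy` from the next state
    next_state, reward = transitions[state][action]
    return reward + gamma * compute_state_value(next_state, policy)


def policy_evaluation(policy):
    return {s: compute_state_value(s, policy) for s in range(num_states)}


def policy_improvement(policy):
    # Greedy action per non-terminal state with respect to the current policy's Q-values
    return {
        s: max(range(num_actions), key=lambda a: compute_q_value(s, a, policy))
        for s in range(num_states - 1)
    }


def policy_iteration(policy):
    while True:
        V = policy_evaluation(policy)
        improved_policy = policy_improvement(policy)
        if improved_policy == policy:
            return policy, V
        policy = improved_policy


# Start from a policy that reaches the goal through the costly move 0 -> 1
policy, V = policy_iteration({0: 0, 1: 1, 2: 0})
print(policy, V)
```

The starting policy is deliberately suboptimal in state 0, so the loop needs one improvement step before the policy stops changing.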

Optimal policy

policy, V = policy_iteration()

print(policy, V)

{0: 2, 1: 2, 2: 1,

3: 1, 4: 2, 5: 1,

6: 2, 7: 2}

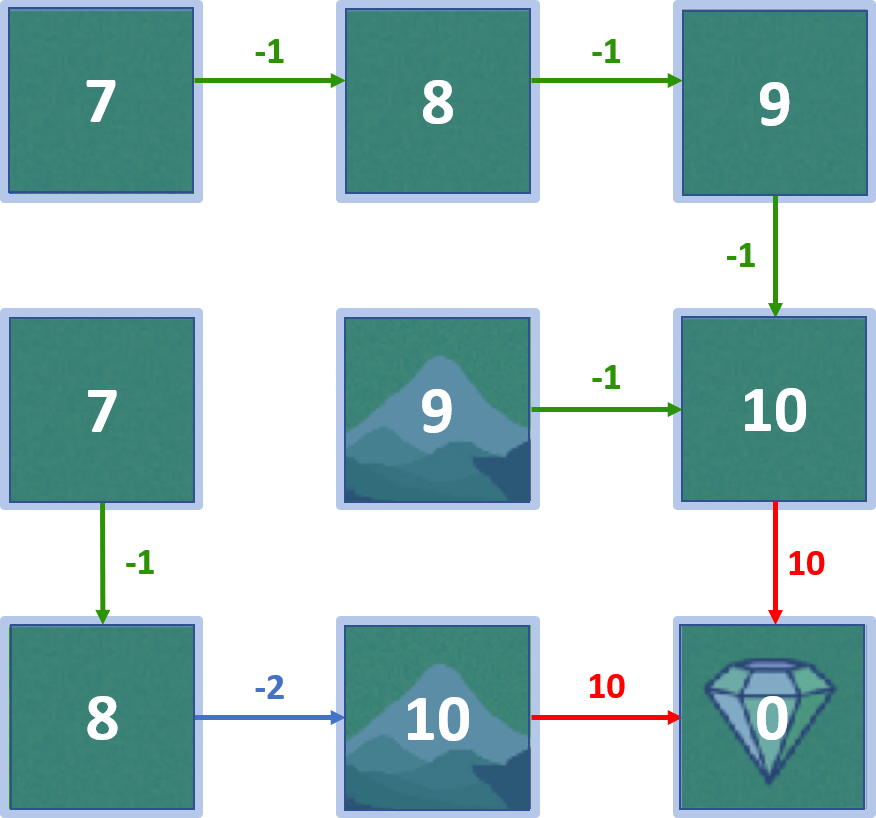

{0: 7, 1: 8, 2: 9,

3: 7, 4: 9, 5: 10,

6: 8, 7: 10, 8: 0}

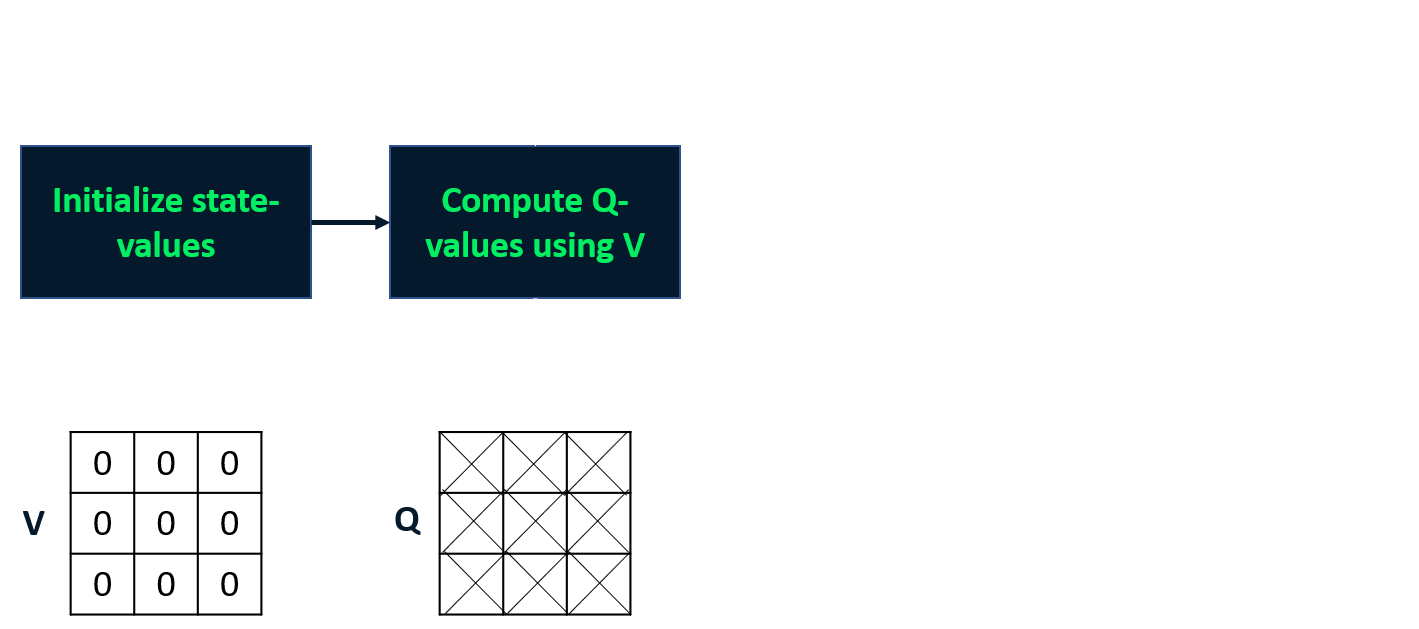

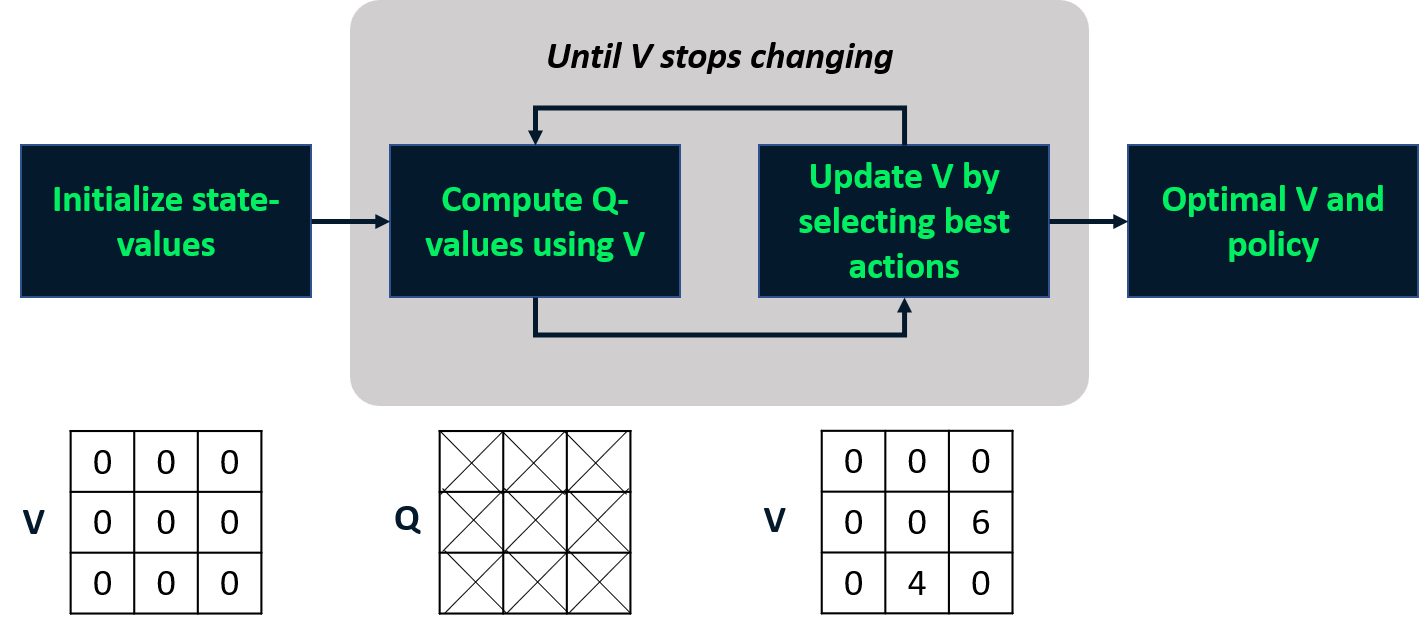

Value iteration

- Combines policy evaluation and improvement in one step

- Computes optimal state-value function

- Derives policy from it
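In symbols, the combined step can be written as follows. This assumes a deterministic environment, matching the `compute_q_value` function used in this section: taking action $a$ in state $s$ yields reward $r(s, a)$ and a single next state $s'$. The subscript $k$ (the sweep index) and the notation $r(s, a)$ are introduced here for illustration.

$$
V_{k+1}(s) = \max_{a} \big[\, r(s, a) + \gamma \, V_k(s') \,\big]
$$

Once the values stop changing by more than a small threshold, the policy is read off greedily:

$$
\pi(s) = \arg\max_{a} \big[\, r(s, a) + \gamma \, V(s') \,\big]
$$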

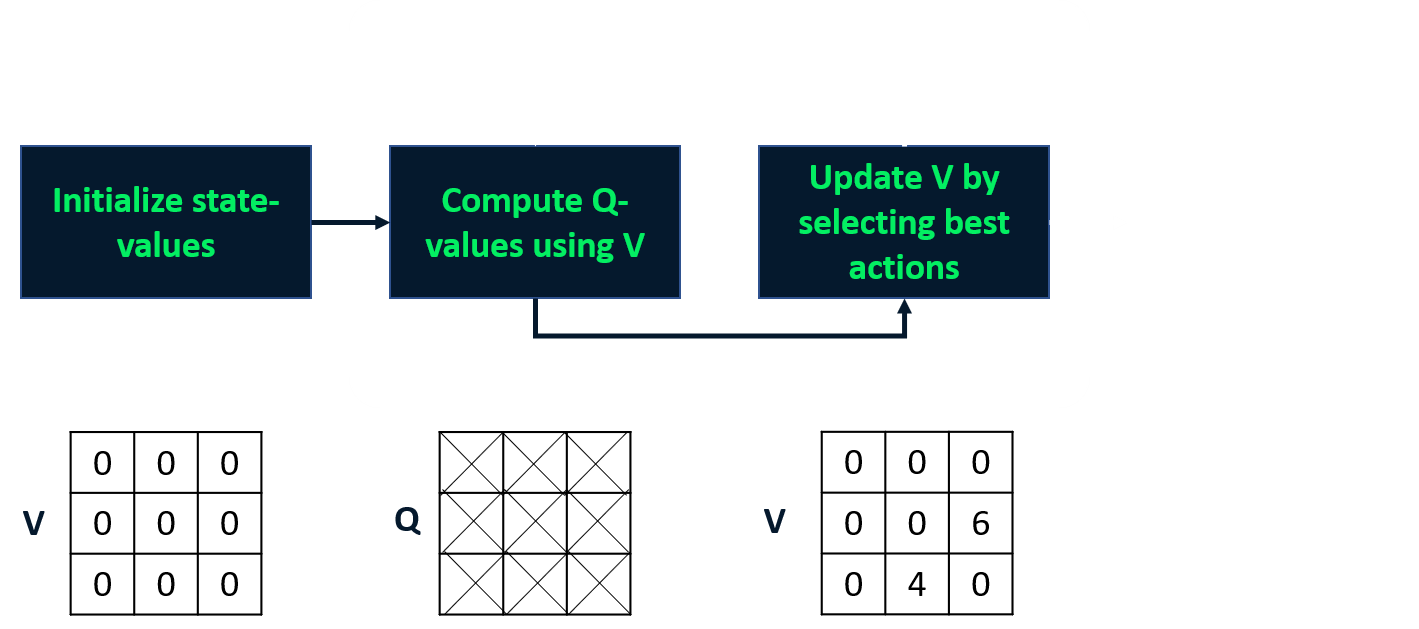

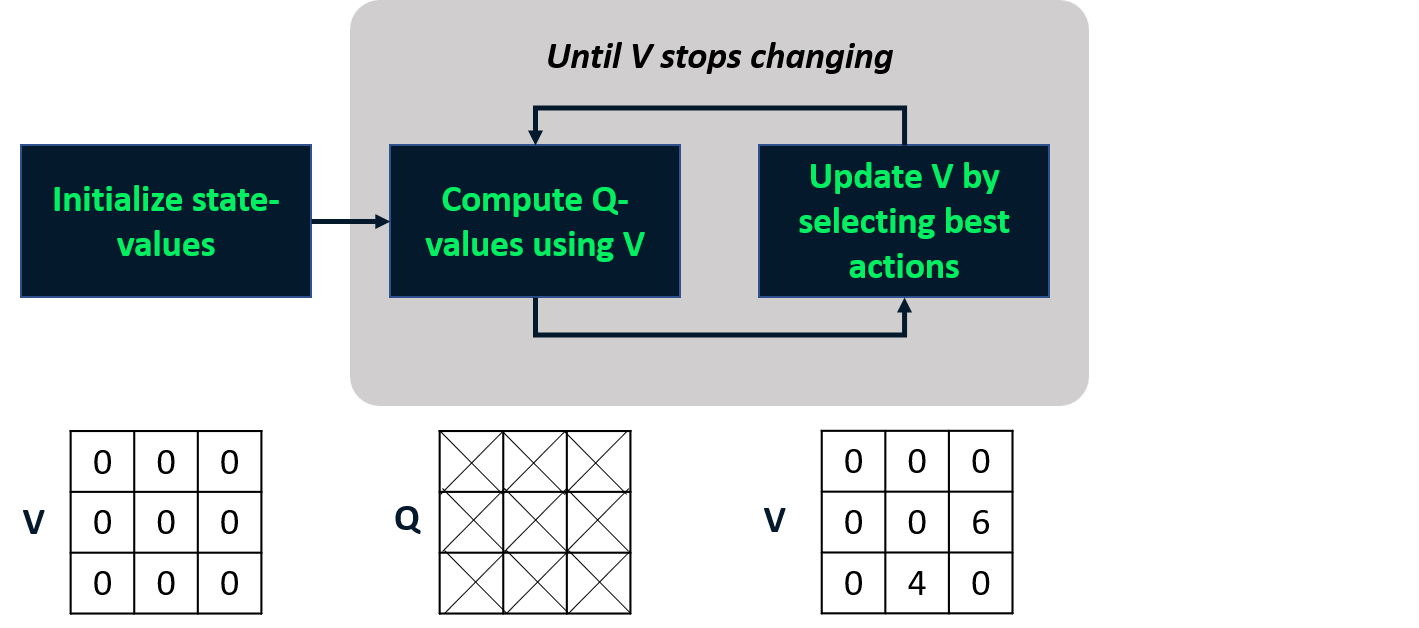

Implementing value iteration

V = {state: 0 for state in range(num_states)}
policy = {state: 0 for state in range(num_states-1)}
threshold = 0.001
while True:
    new_V = {state: 0 for state in range(num_states)}
    for state in range(num_states-1):
        max_action, max_q_value = get_max_action_and_value(state, V)
        new_V[state] = max_q_value
        policy[state] = max_action
    # Converged when no state value changed by more than the threshold
    if all(abs(new_V[state] - V[state]) < threshold for state in V):
        break
    V = new_V

Getting optimal actions and values

def get_max_action_and_value(state, V):
    Q_values = [compute_q_value(state, action, V) for action in range(num_actions)]
    max_action = max(range(num_actions), key=lambda a: Q_values[a])
    max_q_value = Q_values[max_action]
    return max_action, max_q_value

Computing Q-values

def compute_q_value(state, action, V):
    if state == terminal_state:
        return None
    # Deterministic environment: read the single transition for this action
    _, next_state, reward, _ = env.P[state][action][0]
    return reward + gamma * V[next_state]
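The function above reads only the first entry of `env.P[state][action]`, which fits a deterministic environment. As a hedged sketch of how the same pieces fit together when an action may have several outcomes, the version below sums over every `(probability, next_state, reward, terminated)` tuple. The table `P`, the helper name `compute_expected_q_value`, and the values of `gamma` and `threshold` are assumptions for illustration; `P` describes a hypothetical 2x2 grid, not the course's environment.

```python
# Hypothetical transition table in the env.P layout:
# P[state][action] = [(probability, next_state, reward, terminated), ...]
# 2x2 grid, state 3 terminal; action 0 = right, action 1 = down.
P = {
    0: {0: [(1.0, 1, -5, False)], 1: [(1.0, 2, -1, False)]},
    1: {0: [(1.0, 1, -1, False)], 1: [(1.0, 3, 10, True)]},
    2: {0: [(1.0, 3, 10, True)], 1: [(1.0, 2, -1, False)]},
}
num_states, num_actions, terminal_state = 4, 2, 3
gamma, threshold = 1.0, 0.001


def compute_expected_q_value(state, action, V):
    # Expected return: weight each possible outcome by its probability
    return sum(
        prob * (reward + gamma * V[next_state])
        for prob, next_state, reward, _ in P[state][action]
    )


def get_max_action_and_value(state, V):
    Q_values = [compute_expected_q_value(state, a, V) for a in range(num_actions)]
    max_action = max(range(num_actions), key=lambda a: Q_values[a])
    return max_action, Q_values[max_action]


def value_iteration():
    V = {state: 0 for state in range(num_states)}
    policy = {state: 0 for state in range(num_states - 1)}
    while True:
        new_V = {state: 0 for state in range(num_states)}
        for state in range(num_states - 1):  # skip the terminal state
            policy[state], new_V[state] = get_max_action_and_value(state, V)
        if all(abs(new_V[s] - V[s]) < threshold for s in V):
            return policy, new_V
        V = new_V


policy, V = value_iteration()
print(policy, V)
```

With every probability set to 1.0, the sum reduces to the single-transition formula, so this variant should agree with the deterministic one on deterministic environments.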

Optimal policy

print(policy, V)

{0: 2, 1: 2, 2: 1,

3: 1, 4: 2, 5: 1,

6: 2, 7: 2}

{0: 7, 1: 8, 2: 9,

3: 7, 4: 9, 5: 10,

6: 8, 7: 10, 8: 0}

Let's practice!
