Batching upserts

Vector Databases for Embeddings with Pinecone

James Chapman

Curriculum Manager, DataCamp

Upserting limitations

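- Rate of requests: only a limited number of requests is allowed in a given time window

- Size of requests: each upsert request has a maximum size

- Batching: breaking requests up into smaller chunks that stay within both limits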

Source: https://docs.pinecone.io/reference/quotas-and-limits#rate-limits

Defining a chunking function

import itertools

def chunks(iterable, batch_size=100):
    """Yield successive tuples of at most batch_size items from iterable."""
    it = iter(iterable)
    chunk = tuple(itertools.islice(it, batch_size))
    while chunk:
        yield chunk
        chunk = tuple(itertools.islice(it, batch_size))
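A quick check of how `chunks` splits its input: for 250 items and the default `batch_size` of 100, it should yield three tuples, the last holding the 50 leftover items. The function is repeated so the snippet runs on its own, and `range(250)` is only a stand-in for a real list of vectors.

```python
import itertools

def chunks(iterable, batch_size=100):
    """Yield successive tuples of at most batch_size items from iterable."""
    it = iter(iterable)
    chunk = tuple(itertools.islice(it, batch_size))
    while chunk:
        yield chunk
        chunk = tuple(itertools.islice(it, batch_size))

# range(250) stands in for a list of 250 vectors
batch_sizes = [len(batch) for batch in chunks(range(250))]
print(batch_sizes)  # [100, 100, 50]
```

Because every `islice` call pulls from the same underlying iterator, the function also works on generators, so the full dataset never has to be held in memory at once.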

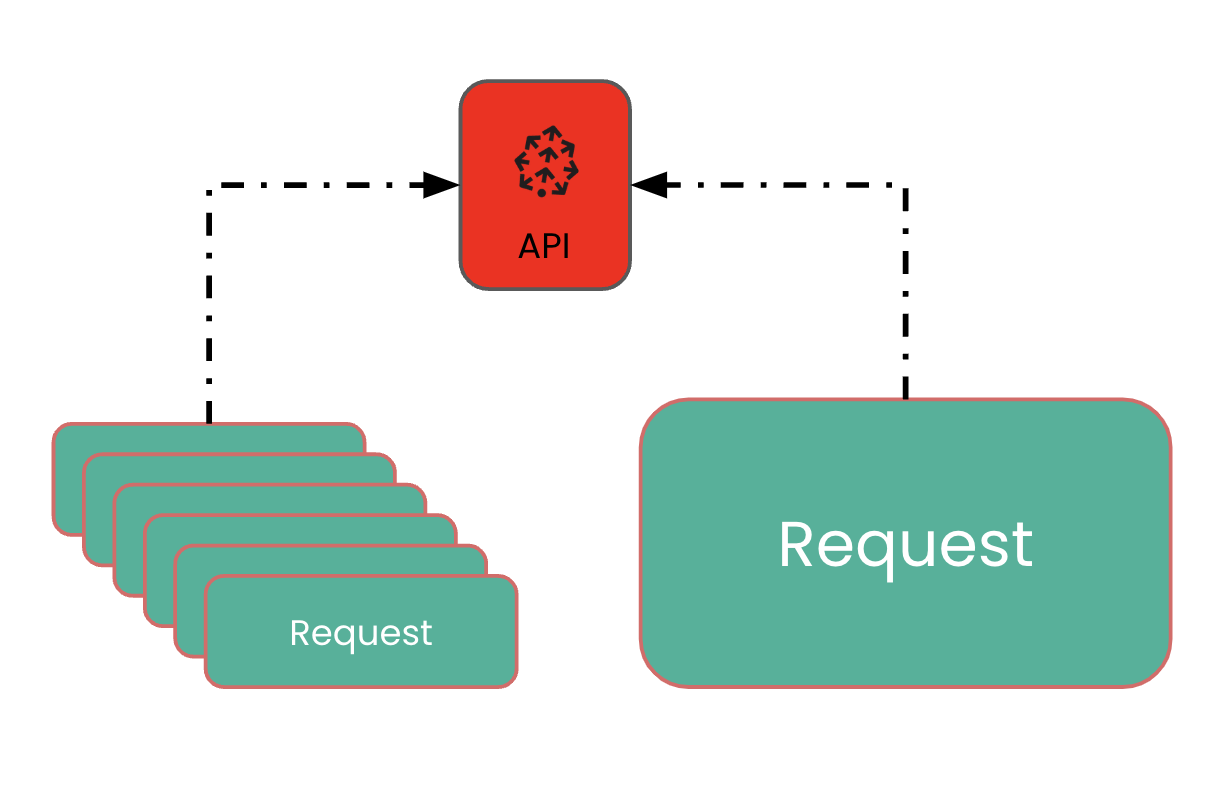

Sequential batching

- Splitting requests and sending them one at a time

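from pinecone import Pinecone

pc = Pinecone(api_key="YOUR_API_KEY")
index = pc.Index('datacamp-index')
# Send one batch per request, waiting for each to finish before the next
for chunk in chunks(vectors):
    index.upsert(vectors=chunk)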

Pros:

- Solves both the rate and size limits

Cons:

- Really slow! Each request must finish before the next one is sent
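The sequential loop assumes a `vectors` list already exists. Below is a minimal sketch of one way to build it, assuming records in the dictionary form `upsert` accepts (an `id` plus `values`), an index dimension of 8 chosen only for illustration, and a hypothetical `StubIndex` class that stands in for a real Pinecone index so the loop can run offline and record how many requests it makes.

```python
import itertools
import random

def chunks(iterable, batch_size=100):
    """Yield successive tuples of at most batch_size items from iterable."""
    it = iter(iterable)
    chunk = tuple(itertools.islice(it, batch_size))
    while chunk:
        yield chunk
        chunk = tuple(itertools.islice(it, batch_size))

DIMENSION = 8  # illustrative only; must match the real index's dimension

# 1,000 records with random embedding values
vectors = [
    {"id": f"vec-{i}", "values": [random.random() for _ in range(DIMENSION)]}
    for i in range(1000)
]

class StubIndex:
    """Hypothetical stand-in for pc.Index(...); records the size of each request."""

    def __init__(self):
        self.request_sizes = []

    def upsert(self, vectors):
        self.request_sizes.append(len(vectors))

index = StubIndex()
# One request per batch, each sent only after the previous call returns
for chunk in chunks(vectors):
    index.upsert(vectors=chunk)

print(len(index.request_sizes))  # 10
```

With 1,000 records and batches of 100, the loop makes 10 requests back to back, which is why total time grows with every batch added.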

Parallel batching

- Splitting requests and sending them in parallel

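from pinecone import Pinecone

pc = Pinecone(api_key="YOUR_API_KEY", pool_threads=30)
with pc.Index('datacamp-index', pool_threads=30) as index:
    # Send all batches without waiting; each call returns an async result
    async_results = [index.upsert(vectors=chunk, async_req=True) for chunk in chunks(vectors, batch_size=100)]
    # Block until every request finishes; .get() raises if a request failed
    [async_result.get() for async_result in async_results]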
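The speed-up comes from overlapping the time spent waiting on the network: submit every batch first, then wait for all of them. As an illustration only, here is the same fan-out pattern using the standard library's `concurrent.futures.ThreadPoolExecutor` instead of the Pinecone client's `async_req` mechanism. The `fake_upsert` function is hypothetical and simply sleeps to simulate request latency.

```python
import itertools
import time
from concurrent.futures import ThreadPoolExecutor

def chunks(iterable, batch_size=100):
    """Yield successive tuples of at most batch_size items from iterable."""
    it = iter(iterable)
    chunk = tuple(itertools.islice(it, batch_size))
    while chunk:
        yield chunk
        chunk = tuple(itertools.islice(it, batch_size))

def fake_upsert(batch, latency=0.05):
    """Hypothetical stand-in for one upsert request: sleeps, then returns the batch size."""
    time.sleep(latency)
    return len(batch)

records = list(range(1000))  # stand-in for 1,000 vectors, i.e. 10 batches

# Sequential: each simulated request waits for the previous one
start = time.perf_counter()
sequential_total = sum(fake_upsert(batch) for batch in chunks(records))
sequential_time = time.perf_counter() - start

# Parallel: submit every batch first so the simulated waits overlap
start = time.perf_counter()
with ThreadPoolExecutor(max_workers=30) as pool:
    futures = [pool.submit(fake_upsert, batch) for batch in chunks(records)]
    # .result() blocks until that batch is done and re-raises any worker exception
    parallel_total = sum(future.result() for future in futures)
parallel_time = time.perf_counter() - start

print(sequential_total, parallel_total)  # 1000 1000
print(f"sequential: {sequential_time:.2f}s, parallel: {parallel_time:.2f}s")
```

As with `async_result.get()` in the Pinecone version, collecting each result is what surfaces errors; skipping that step would let a failed batch go unnoticed.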

Let's practice!
