Advantage Actor Critic

Deep Reinforcement Learning in Python

Timothée Carayol

Principal Machine Learning Engineer, Komment

Why actor critic?

REINFORCE limitations:

- High variance

- Poor sample efficiency

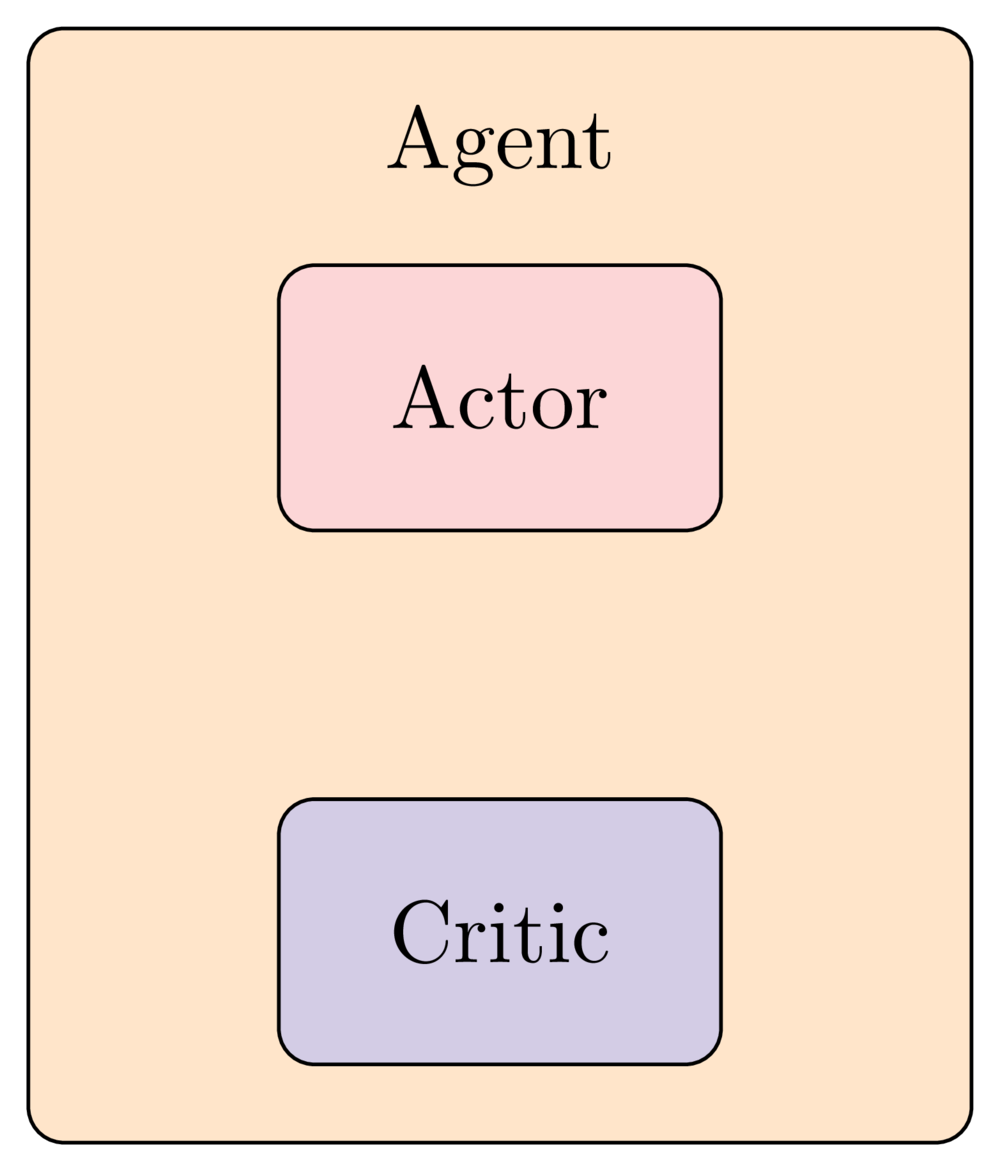

Actor Critic methods introduce a critic network, enabling Temporal Difference learning
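The practical difference is when a learning signal becomes available. In the minimal plain-Python sketch below, the function names, rewards, discount and value estimate are hypothetical. REINFORCE's Monte Carlo return needs every reward up to the end of the episode. The one-step TD target needs only the next reward plus the critic's estimate of the next state's value.

```python
def monte_carlo_return(rewards, gamma):
    """Discounted return from step 0: needs the whole episode (REINFORCE)."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def td_target(reward, next_value, gamma, terminated):
    """One-step TD target: available right after a single step (actor critic).

    `next_value` stands in for the critic's estimate V(s_{t+1}).
    """
    return reward + gamma * next_value * (1 - terminated)


rewards = [1.0, 0.0, 2.0]  # hypothetical episode
gamma = 0.5                # hypothetical discount factor

print(monte_carlo_return(rewards, gamma))  # 1 + 0.5*0 + 0.25*2 = 1.5
print(td_target(rewards[0], next_value=0.8, gamma=gamma, terminated=False))  # about 1.4
```

The TD target trades some bias (it trusts the critic's estimate) for much lower variance and the ability to learn after every step.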

The intuition behind Actor Critic methods

Actor network:

- Makes decisions

- Cannot evaluate them

Critic network:

- Provides feedback to actor at every step

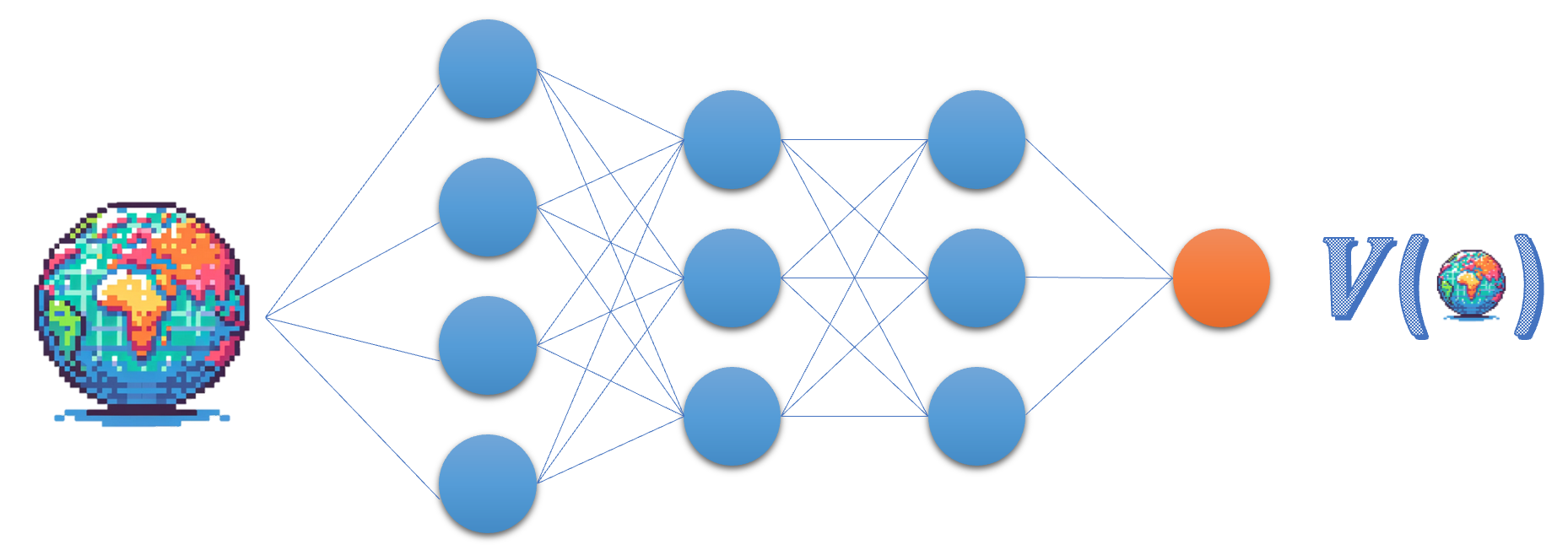
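The actor plays the role of the REINFORCE policy network. Below is a minimal PyTorch sketch for a discrete action space. The `Actor` class, its 64-unit hidden layer, the `select_action` helper and the sizes (8 state features, 4 actions, as in an environment such as Lunar Lander) are assumptions for illustration, not a fixed API.

```python
import torch
import torch.nn as nn
from torch.distributions import Categorical


class Actor(nn.Module):
    """Hypothetical policy network: maps a state to action probabilities."""

    def __init__(self, state_size, action_size):
        super().__init__()
        self.fc1 = nn.Linear(state_size, 64)
        self.fc2 = nn.Linear(64, action_size)

    def forward(self, state):
        x = torch.relu(self.fc1(torch.as_tensor(state, dtype=torch.float32)))
        return torch.softmax(self.fc2(x), dim=-1)


def select_action(actor, state):
    """Sample an action; return it with its log-probability (kept in the graph)."""
    probs = actor(state)
    dist = Categorical(probs)
    action = dist.sample()
    return action.item(), dist.log_prob(action)


actor = Actor(state_size=8, action_size=4)
action, log_prob = select_action(actor, [0.0] * 8)
```

Keeping `log_prob` attached to the computation graph is what later lets the actor loss backpropagate into the actor's weights.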

The Critic network

- Critic approximates the state value function

- Judges action $a_t$ based on the advantage or TD-error
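The link between the two bullets can be written out. Assuming $r_t$ is the reward received after taking $a_t$ in $s_t$, the advantage compares the value of that action with the state's average value. Because $Q(s_t, a_t)$ is the expected value of $r_t + \gamma V(s_{t+1})$, the TD error computed from a single transition is a sample estimate of the advantage:

$$
A(s_t, a_t) = Q(s_t, a_t) - V(s_t) \approx r_t + \gamma V(s_{t+1}) - V(s_t) = \delta_t
$$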

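```python
import torch
import torch.nn as nn

class Critic(nn.Module):
    def __init__(self, state_size):
        super(Critic, self).__init__()
        self.fc1 = nn.Linear(state_size, 64)
        self.fc2 = nn.Linear(64, 1)

    def forward(self, state):
        # Convert the (e.g. NumPy) state to a float32 tensor before the first layer
        x = torch.relu(self.fc1(torch.as_tensor(state, dtype=torch.float32)))
        value = self.fc2(x)  # single output: the estimate of V(state)
        return value

critic_network = Critic(8)
```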

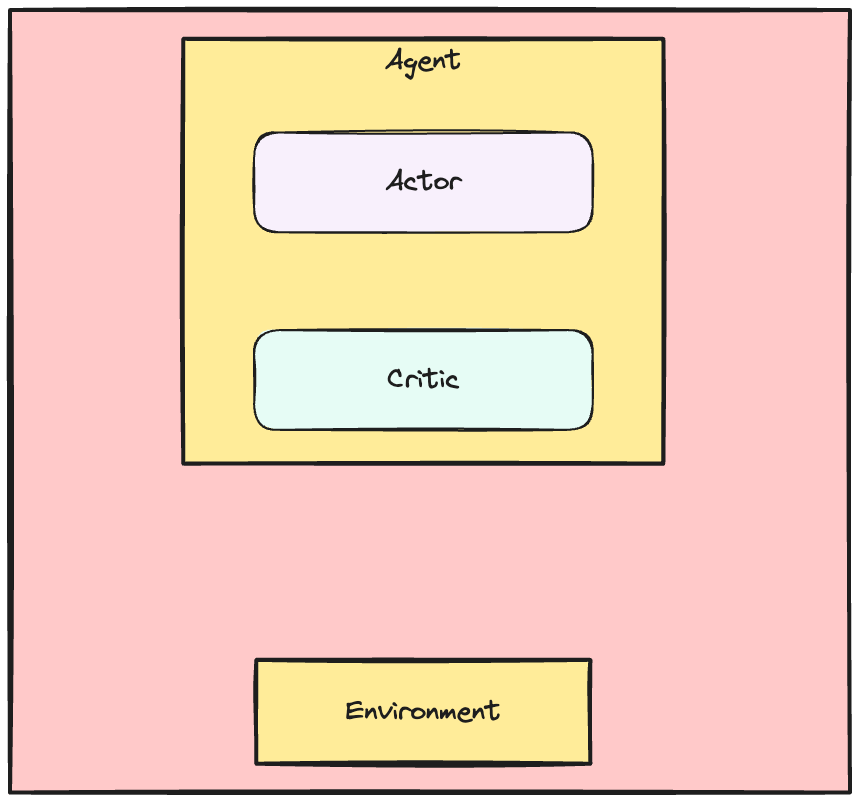

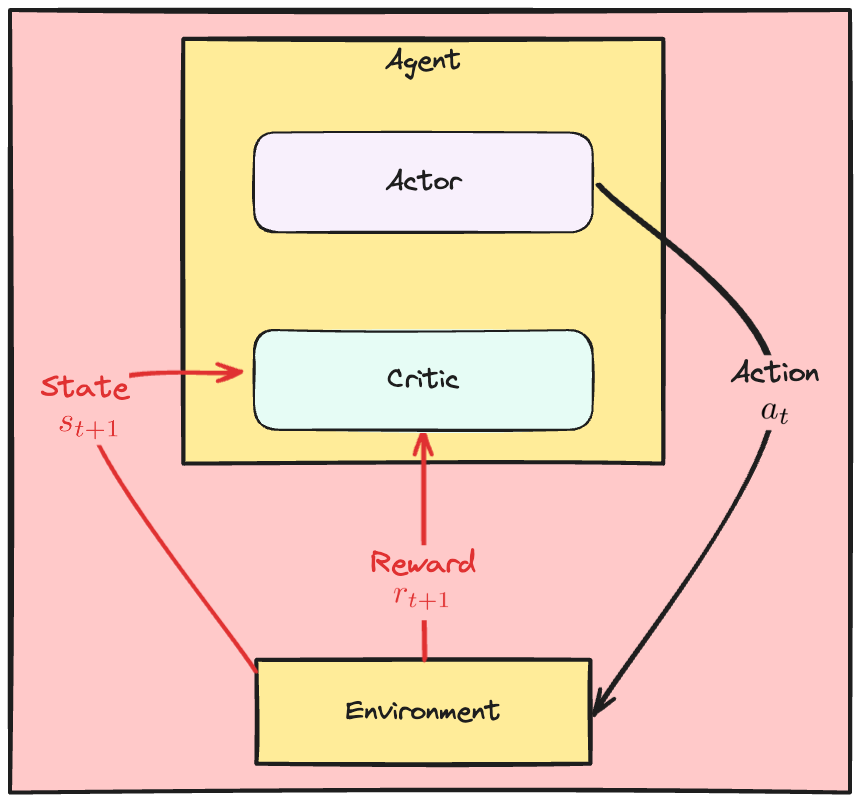

The Actor Critic dynamics

- At every step:

- Actor chooses action (same as policy network in REINFORCE)

- Critic observes reward and state

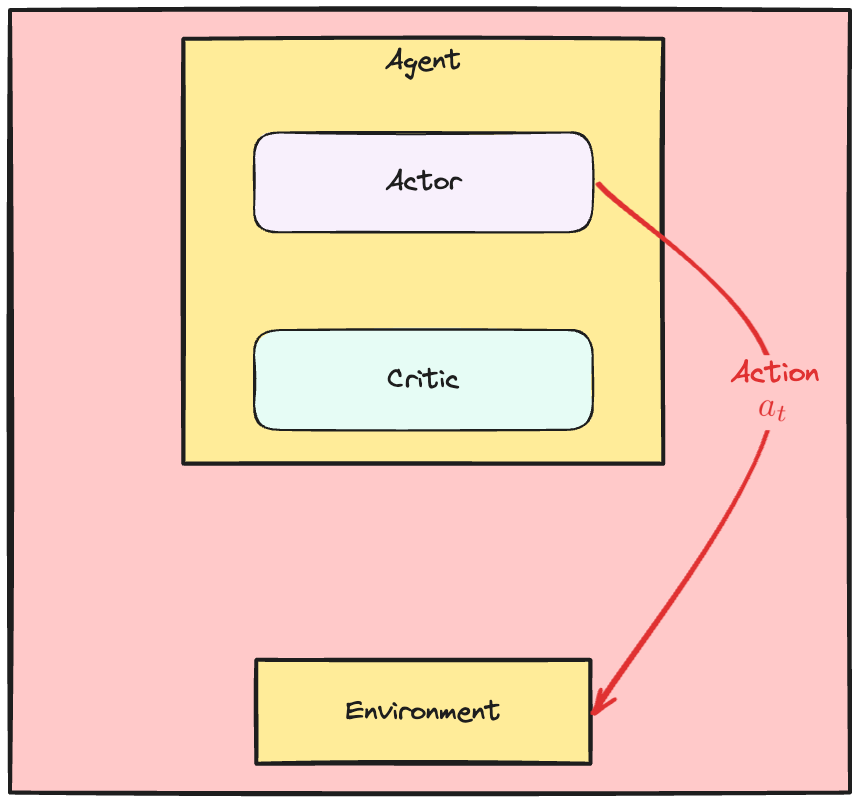

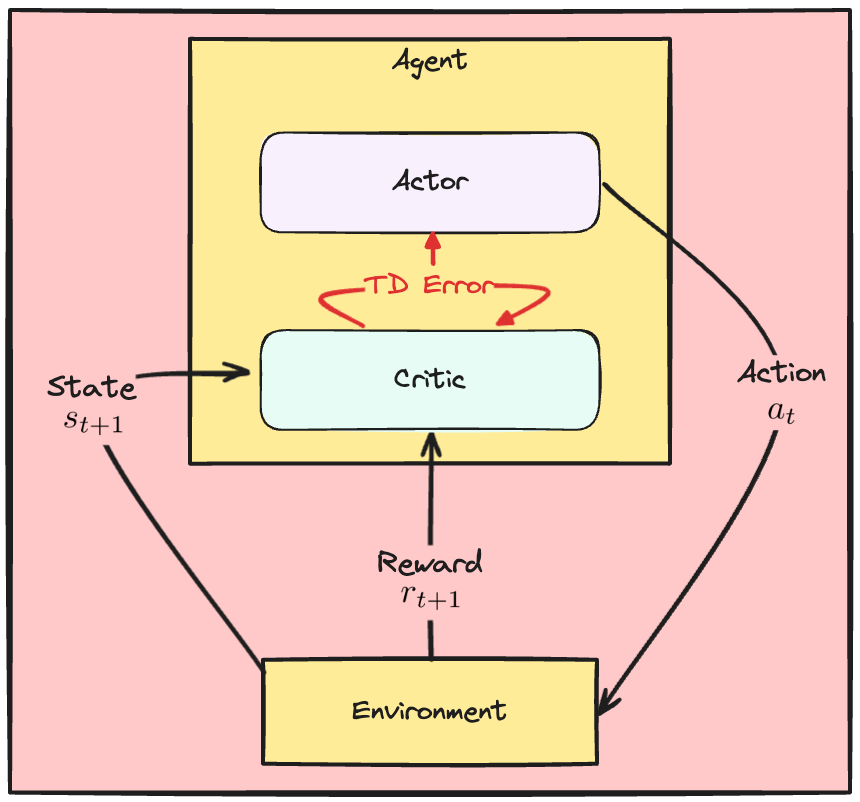

- Critic evaluates TD Error

- Actor and Critic use TD Error to update weights

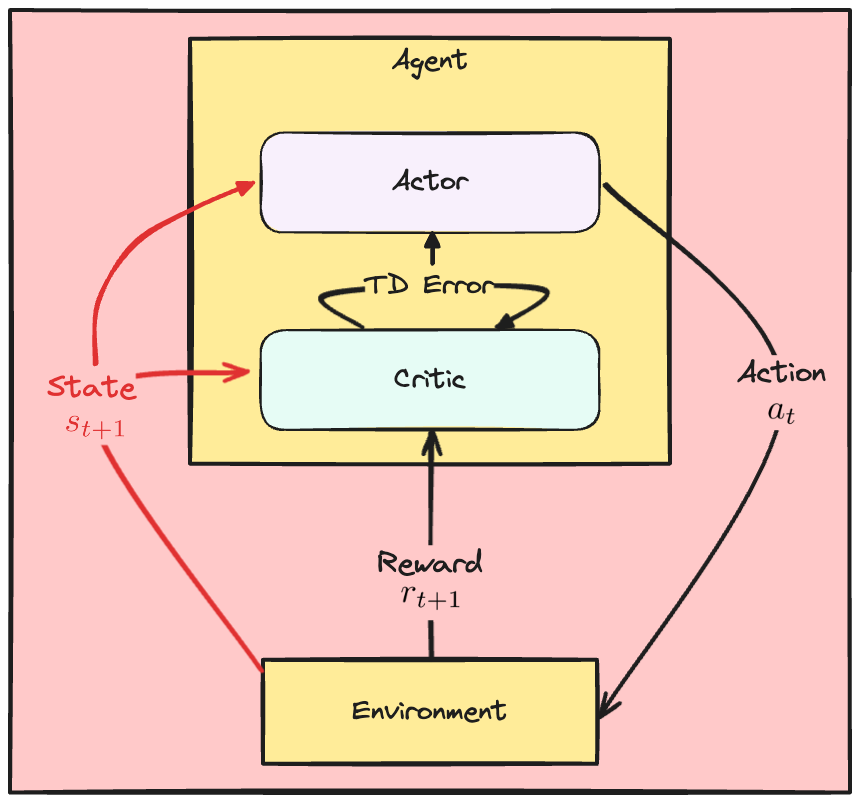

- Updated Actor observes new state

- ... start over

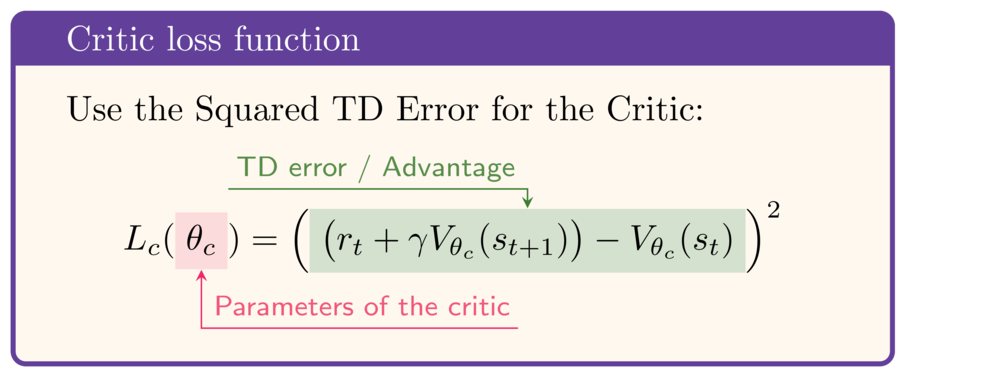

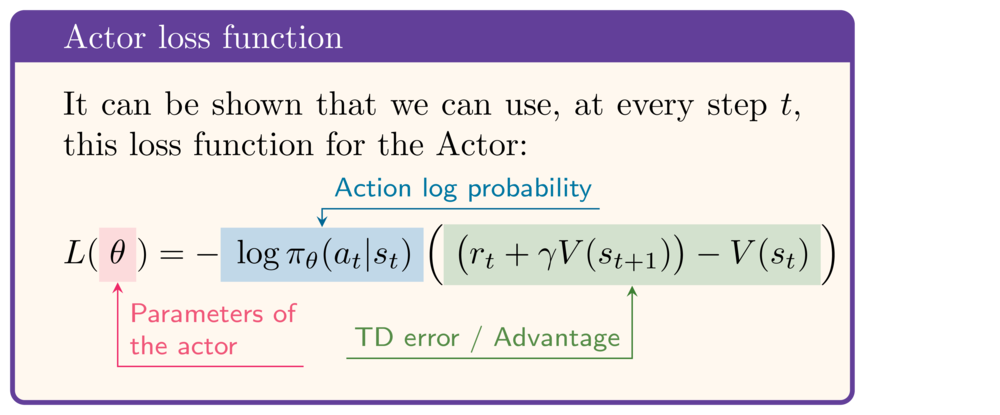
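The cycle above can be seen end to end without neural networks in a minimal tabular sketch in plain Python. This swaps in a different setting: the actor is a table of action preferences turned into a softmax policy, and the critic is a single value estimate. The task is hypothetical: one state, action 0 pays 1, action 1 pays 0, and every episode ends after one step. The learning rates and step count are illustrative assumptions.

```python
import math
import random


def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def tabular_actor_critic(steps=1000, alpha_actor=0.1, alpha_critic=0.1, seed=0):
    """Toy one-state, one-step task (hypothetical): action 0 pays 1, action 1 pays 0."""
    rng = random.Random(seed)
    prefs = [0.0, 0.0]  # actor: action preferences of a softmax policy
    value = 0.0         # critic: estimate of V(s) for the single state
    for _ in range(steps):
        probs = softmax(prefs)
        action = rng.choices([0, 1], weights=probs)[0]  # actor chooses action
        reward = 1.0 if action == 0 else 0.0             # reward is observed
        td_error = reward - value  # episode ends after one step: no bootstrap term
        value += alpha_critic * td_error                 # critic update
        for a in range(2):  # actor update: gradient of log-softmax w.r.t. prefs[a]
            grad_log = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += alpha_actor * td_error * grad_log
    return softmax(prefs), value


probs, value = tabular_actor_critic()
```

The same TD error drives both updates: a positive value makes the chosen action more likely and raises the critic's estimate, a negative one does the opposite.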

The A2C losses

Critic

- Critic loss: squared TD error

Actor

- TD error captures the critic's rating of the action

- Increase probability of actions with positive TD error
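Written as formulas, using the same quantities as the code that follows ($d_t = 1$ when the episode has ended, $0$ otherwise), and treating $\delta_t$ as a constant in the actor loss:

$$
y_t = r_t + \gamma V(s_{t+1})(1 - d_t), \qquad \delta_t = y_t - V(s_t)
$$

$$
L_{\text{critic}} = \delta_t^2, \qquad L_{\text{actor}} = -\delta_t \log \pi(a_t \mid s_t)
$$

Minimizing $L_{\text{actor}}$ raises $\log \pi(a_t \mid s_t)$ when $\delta_t > 0$ and lowers it when $\delta_t < 0$.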

Calculating the losses

```python
gamma = 0.99  # discount factor (illustrative value; the function reads a global `gamma`)

def calculate_losses(critic_network, action_log_prob, reward, state, next_state, done):
    # Critic provides the state value estimates
    value = critic_network(state)
    next_value = critic_network(next_state)
    # Bootstrap from V(next_state) unless the episode has ended
    td_target = reward + gamma * next_value * (1 - done)
    td_error = td_target - value
    # Apply formulas for actor and critic losses
    actor_loss = -action_log_prob * td_error.detach()
    critic_loss = td_error ** 2
    return actor_loss, critic_loss
```

- Calculate TD error

- Calculate actor loss

  - Use `.detach()` to stop gradient propagation to critic weights

- Calculate critic loss
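The effect of `.detach()` can be checked directly. This is a minimal PyTorch sketch in which a one-layer `critic` and a logits vector `actor_logits` are hypothetical stand-ins for the real networks, with an illustrative `gamma`. After the actor loss backpropagates, the critic still has no gradient. Only the critic loss populates it.

```python
import torch
import torch.nn as nn
from torch.distributions import Categorical

torch.manual_seed(0)
gamma = 0.99  # illustrative discount factor

critic = nn.Linear(4, 1)                     # hypothetical stand-in critic
actor_logits = nn.Parameter(torch.zeros(2))  # hypothetical stand-in actor over 2 actions

state, next_state = torch.rand(4), torch.rand(4)
reward, done = 1.0, False

dist = Categorical(logits=actor_logits)
action = dist.sample()
action_log_prob = dist.log_prob(action)

value = critic(state)
next_value = critic(next_state)
td_error = reward + gamma * next_value * (1 - done) - value

# Actor loss: the detached TD error acts as a constant, so no gradient reaches the critic
actor_loss = -action_log_prob * td_error.detach()
actor_loss.backward()
grad_after_actor_update = critic.weight.grad  # still None at this point

# Critic loss: gradients flow into the critic's weights as usual
critic_loss = td_error ** 2
critic_loss.backward()
```

Without `.detach()`, the actor loss would also push the critic's weights in whatever direction increases the chosen action's score, which is not what the critic is meant to learn.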

The Actor Critic training loop

```python
# Assumes env, actor, critic, select_action, calculate_losses and both optimizers are defined
for episode in range(10):
    state, info = env.reset()
    done = False
    while not done:
        # Select action
        action, action_log_prob = select_action(actor, state)
        next_state, reward, terminated, truncated, _ = env.step(action)
        done = terminated or truncated
        # Calculate losses: only a true termination should cut off bootstrapping,
        # so pass `terminated` (a time-limit truncation still has a future value)
        actor_loss, critic_loss = calculate_losses(
            critic, action_log_prob, reward, state, next_state, terminated)
        # Update actor
        actor_optimizer.zero_grad()
        actor_loss.backward()
        actor_optimizer.step()
        # Update critic
        critic_optimizer.zero_grad()
        critic_loss.backward()
        critic_optimizer.step()
        state = next_state
```

Let's practice!
