Policy gradient and REINFORCE

Deep Reinforcement Learning in Python

Timothée Carayol

Principal Machine Learning Engineer, Komment

Differences with DQN

- REINFORCE: Monte-Carlo, not Temporal Difference

- Update at the end of the episode, not at every step

- Can update after several episodes instead

- No value function

- No target network

- No epsilon-greediness

- No experience replay

The REINFORCE training loop structure

    for episode in range(num_episodes):
        # 1. Initialize episode
        while not done:
            # 2. Select action
            # 3. Play action and obtain next state and reward
            # 4. Add (discounted) reward to return
            # 5. Update state
        # 6. Calculate loss
        # 7. Update policy network by gradient descent
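Step 4 builds the return one reward at a time. A minimal sketch of that accumulation follows; the helper name `discounted_return` and the reward values are made up for illustration, and the first reward is assumed to carry weight $\gamma^0$. A loop that increments its step counter before accumulating starts at exponent 1 instead, which only rescales the return by a constant factor $\gamma$.

```python
def discounted_return(rewards, gamma):
    """Sum of rewards, each weighted by gamma raised to its time step."""
    total = 0.0
    for t, reward in enumerate(rewards):
        # Assumption: the first reward (t = 0) is undiscounted.
        total += (gamma ** t) * reward
    return total


# Hypothetical three-step episode with a reward of 1 at every step.
example_return = discounted_return([1.0, 1.0, 1.0], gamma=0.5)
print(example_return)  # 1 + 0.5 + 0.25 = 1.75
```

Because the return is only complete once the episode ends, the loss and the policy update (steps 6 and 7) sit outside the inner loop.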

Action Selection

    from torch.distributions import Categorical

    def select_action(policy_network, state):
        # The network outputs one probability per action
        action_probs = policy_network(state)
        action_dist = Categorical(action_probs)
        # Sample an action and record its log probability for the loss
        action = action_dist.sample()
        log_prob = action_dist.log_prob(action)
        return action.item(), log_prob.reshape(1)

    action, log_prob = select_action(policy_network, state)

- Obtain probabilities from network

- Sample one action

- Return action and corresponding log probability

Sampled action index: 1

Log probability of sampled action: -1.38

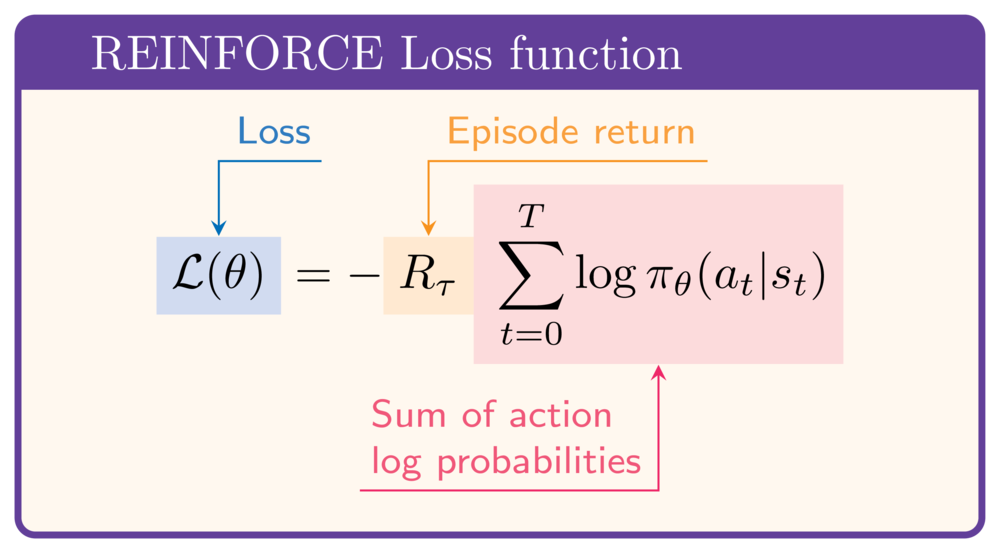
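To see what `Categorical` does in isolation, the sketch below replaces the policy network with a fixed, hypothetical probability vector. With a uniform distribution over four actions, every action has log probability $\log 0.25 \approx -1.386$, close to the value shown above.

```python
import math

import torch
from torch.distributions import Categorical

# Hypothetical output of a policy network for a 4-action environment.
action_probs = torch.tensor([0.25, 0.25, 0.25, 0.25])
action_dist = Categorical(action_probs)

torch.manual_seed(0)  # only so that repeated runs sample the same action
action = action_dist.sample()
log_prob = action_dist.log_prob(action)

print(action.item())    # an integer between 0 and 3
print(log_prob.item())  # log(0.25), roughly -1.386
```

The log probability keeps its connection to the network parameters when the probabilities come from a network, which is what later lets the loss be backpropagated.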

Loss Calculation

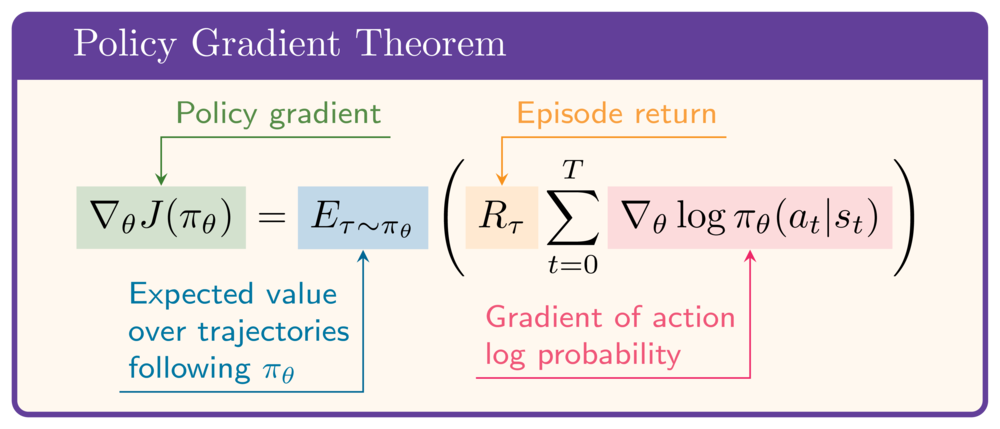

Recall the policy gradient theorem:
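The formula itself is not reproduced in the text here. Assuming the standard REINFORCE form, which matches the symbols $R_{\tau}$ and $\log\pi_\theta(a_t|s_t)$ used below, it can be written as:

$$
\nabla_\theta J(\pi_\theta) = \mathbb{E}_{\tau \sim \pi_\theta}\left[ R_{\tau} \sum_{t=0}^{T} \nabla_\theta \log\pi_\theta(a_t|s_t) \right]
$$

Here $\tau$ is a trajectory sampled by following $\pi_\theta$, $R_{\tau}$ is its return, and $T$ is the last step of the episode. For one sampled episode, the gradient of $-R_{\tau} \sum_{t} \log\pi_\theta(a_t|s_t)$ is a one-sample estimate of $-\nabla_\theta J(\pi_\theta)$, so minimizing that quantity by gradient descent performs gradient ascent on $J$.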

In Python:

- $R_{\tau}$ as episode_return

- Vector of $\log\pi_\theta(a_t|s_t)$ as episode_log_probs

loss = -episode_return * episode_log_probs.sum()
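A small check of what this loss does under backpropagation. This is a sketch under simplifying assumptions: the policy is a hypothetical two-action softmax parameterized directly by its logits, and the episode has a single step with a made-up return of 2.

```python
import torch

# Hypothetical two-action policy parameterized directly by its logits.
logits = torch.zeros(2, requires_grad=True)
log_probs_all = torch.log_softmax(logits, dim=0)

# Pretend a one-step episode took action 0 and earned a return of 2.
episode_log_probs = log_probs_all[0].reshape(1)
episode_return = 2.0

loss = -episode_return * episode_log_probs.sum()
loss.backward()

# d(loss)/d(logits) = -R * (one_hot(action) - probs)
#                   = -2 * ([1, 0] - [0.5, 0.5]) = [-1, 1]
print(logits.grad)
```

A gradient descent step subtracts this gradient, so the logit of action 0 goes up and the logit of action 1 goes down: an action followed by a positive return becomes more likely.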

The REINFORCE training loop

    import torch

    for episode in range(50):
        state, info = env.reset()
        done = False
        step = 0
        episode_log_probs = torch.tensor([])
        R = 0
        while not done:
            step += 1  # step starts at 1, so the first reward is weighted by gamma ** 1
            action, log_prob = select_action(policy_network, state)
            next_state, reward, terminated, truncated, _ = env.step(action)
            done = terminated or truncated
            R += (gamma ** step) * reward
            episode_log_probs = torch.cat((episode_log_probs, log_prob))
            state = next_state
        # Update once per episode, using the full return
        loss = -R * episode_log_probs.sum()
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
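The loop relies on `policy_network`, `optimizer`, and `gamma` being defined beforehand, along with an `env` that follows the Gymnasium-style `reset`/`step` interface. One possible setup is sketched below. The class name `PolicyNetwork`, the layer sizes, the 8-dimensional state, the 4 actions, the learning rate, and the discount factor are all assumptions chosen for illustration, not values taken from the slides.

```python
import torch
import torch.nn as nn


class PolicyNetwork(nn.Module):
    """Hypothetical policy: maps a state vector to action probabilities."""

    def __init__(self, state_size, num_actions, hidden_size=64):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(state_size, hidden_size),
            nn.ReLU(),
            nn.Linear(hidden_size, num_actions),
            nn.Softmax(dim=-1),  # probabilities, as Categorical(probs) expects
        )

    def forward(self, state):
        # Accept lists or NumPy arrays, such as the states an environment returns.
        state = torch.as_tensor(state, dtype=torch.float32)
        return self.layers(state)


# Assumed sizes and hyperparameters, for illustration only.
policy_network = PolicyNetwork(state_size=8, num_actions=4)
optimizer = torch.optim.Adam(policy_network.parameters(), lr=1e-3)
gamma = 0.99

action_probs = policy_network([0.0] * 8)
print(action_probs.shape)  # torch.Size([4])
```

The final softmax matters because `select_action` passes the network output straight to `Categorical` as probabilities; a network that outputs raw logits would need `Categorical(logits=...)` instead.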

Let's practice!
