Post-hoc analysis following ANOVA

Experimental Design in Python

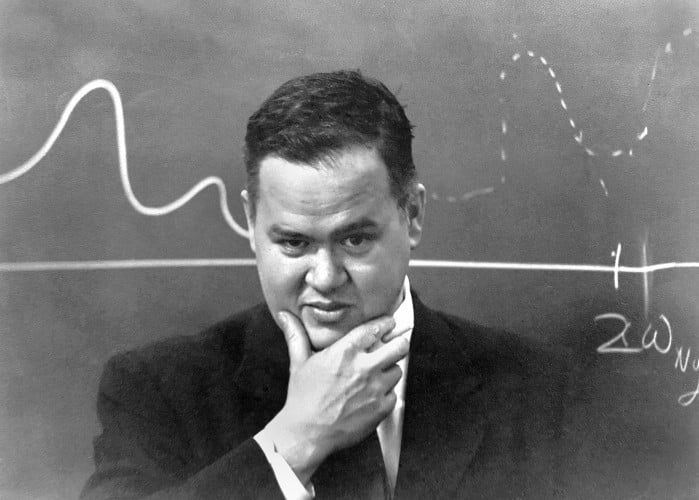

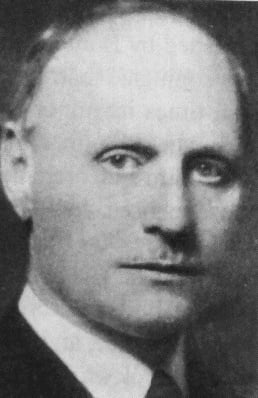

James Chapman

Curriculum Manager, DataCamp

When to use post-hoc tests

- After significant ANOVA results

- To explore pairwise group differences

![Gears behind the curtain](https://assets.datacamp.com/production/repositories/6639/datasets/d00bdfc93497d3c502c6ed7b6d0011e75f8ce689/behind-the-curtain.png)

Key post-hoc methods

- Tukey's HSD (Honestly Significant Difference)
  - Controls the family-wise error rate across all pairwise comparisons
  - Best when comparing every pair of groups

- Bonferroni correction
  - Adjusts p-values to control Type I errors across multiple tests
  - Best for a small set of focused comparisons
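Both methods exist because running several uncorrected tests inflates the chance of at least one false positive. A minimal sketch of that arithmetic, assuming the tests are independent (an idealization, since pairwise comparisons share groups) and using a hypothetical helper name:

```python
# Family-wise error rate (FWER) for m independent tests at level alpha:
# the probability of at least one false positive is 1 - (1 - alpha)^m.
alpha = 0.05


def family_wise_error_rate(alpha, n_tests):
    """Hypothetical helper: chance of one or more false positives."""
    return 1 - (1 - alpha) ** n_tests


# Three groups give 3 * 2 / 2 = 3 pairwise comparisons
n_groups = 3
n_pairs = n_groups * (n_groups - 1) // 2
fwer = family_wise_error_rate(alpha, n_pairs)
print(f"{n_pairs} comparisons -> FWER = {fwer:.4f}")
```

With three uncorrected comparisons at 0.05 each, the overall false-positive risk is roughly 14%, not 5%.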


The dataset: marketing ad campaigns

    ad_campaigns

                Ad_Campaign  Click_Through_Rate
    1300  Seasonal Discount        2.1659547732
    1661        New Arrival        2.9409657365
    2762     Loyalty Reward        3.2476777154
    571   Seasonal Discount        3.3382186561
    775   Seasonal Discount        1.7148876401

Data organization with pivot tables

    pivot_table = ad_campaigns.pivot_table(values='Click_Through_Rate',
                                           index='Ad_Campaign',
                                           aggfunc="mean")
    print(pivot_table)

                       Click_Through_Rate
    Ad_Campaign
    Loyalty Reward               2.792716
    New Arrival                  3.013843
    Seasonal Discount            2.518917
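When comparing groups it can also help to see spread and sample size next to the mean. A small sketch using `groupby` and `agg` on a made-up toy DataFrame (the values are illustrative, not the course data):

```python
import pandas as pd

# Hypothetical toy data standing in for ad_campaigns
toy_campaigns = pd.DataFrame({
    'Ad_Campaign': ['Seasonal Discount', 'Seasonal Discount',
                    'New Arrival', 'New Arrival',
                    'Loyalty Reward', 'Loyalty Reward'],
    'Click_Through_Rate': [2.0, 3.0, 3.0, 3.5, 2.5, 3.0],
})

# groupby + agg gives the mean, standard deviation and count per campaign
summary = (toy_campaigns
           .groupby('Ad_Campaign')['Click_Through_Rate']
           .agg(['mean', 'std', 'count']))
print(summary)
```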

Performing ANOVA

    from scipy.stats import f_oneway

    campaign_types = ['Seasonal Discount', 'New Arrival', 'Loyalty Reward']
    groups = [ad_campaigns[ad_campaigns['Ad_Campaign'] == campaign]['Click_Through_Rate']
              for campaign in campaign_types]
    f_stat, p_val = f_oneway(*groups)
    print(p_val)

    4.484124496940693e-134
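A p-value this small indicates that at least one campaign mean differs, which is the cue to move on to post-hoc tests. A minimal sketch of that decision, run on made-up toy groups with an assumed significance level of 0.05:

```python
from scipy.stats import f_oneway

# Hypothetical toy samples, one list per group (not the course data)
toy_groups = [
    [1.0, 1.1, 0.9, 1.2],
    [2.0, 2.1, 1.9, 2.2],
    [3.0, 3.1, 2.9, 3.2],
]

alpha = 0.05  # assumed significance level
f_stat, p_val = f_oneway(*toy_groups)

# Only look at pairwise differences when the omnibus test is significant
run_posthoc = p_val < alpha
print(f"F = {f_stat:.1f}, p = {p_val:.3g}, run post-hoc: {run_posthoc}")
```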

Tukey's HSD test

    from statsmodels.stats.multicomp import pairwise_tukeyhsd

    tukey_results = pairwise_tukeyhsd(ad_campaigns['Click_Through_Rate'],
                                      ad_campaigns['Ad_Campaign'],
                                      alpha=0.05)
    print(tukey_results)

              Multiple Comparison of Means - Tukey HSD, FWER=0.05
    ===========================================================================
        group1           group2        meandiff  p-adj   lower    upper   reject
    ---------------------------------------------------------------------------
    Loyalty Reward   New Arrival         0.2211    0.0   0.176    0.2663    True
    Loyalty Reward   Seasonal Discount  -0.2738    0.0  -0.3189  -0.2287    True
    New Arrival      Seasonal Discount  -0.4949    0.0  -0.5401  -0.4498    True
    ---------------------------------------------------------------------------
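Each row can be read off directly: `reject` is True exactly when the confidence interval for the mean difference excludes zero, and `meandiff` is the group2 mean minus the group1 mean. A short sketch that re-checks this using the numbers from the Tukey output (the tuple layout is only for illustration):

```python
# (group1, group2, meandiff, lower, upper) copied from the Tukey HSD output
tukey_rows = [
    ('Loyalty Reward', 'New Arrival', 0.2211, 0.176, 0.2663),
    ('Loyalty Reward', 'Seasonal Discount', -0.2738, -0.3189, -0.2287),
    ('New Arrival', 'Seasonal Discount', -0.4949, -0.5401, -0.4498),
]

significant_pairs = []
for group1, group2, meandiff, lower, upper in tukey_rows:
    # An interval entirely above or below zero means the pair differs
    excludes_zero = lower > 0 or upper < 0
    if excludes_zero:
        significant_pairs.append((group1, group2))
    # meandiff is group2 minus group1, so a positive value favours group2
    higher = group2 if meandiff > 0 else group1
    print(f"{group1} vs {group2}: higher mean = {higher}, "
          f"significant = {excludes_zero}")
```

All three intervals exclude zero, so every pair of campaigns differs, with New Arrival showing the highest click-through rate.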

Bonferroni correction set-up

    from scipy.stats import ttest_ind
    from statsmodels.stats.multitest import multipletests

    p_values = []
    comparisons = [('Seasonal Discount', 'New Arrival'),
                   ('Seasonal Discount', 'Loyalty Reward'),
                   ('New Arrival', 'Loyalty Reward')]

    # Run an independent t-test for each pair and collect the raw p-values
    for comp in comparisons:
        group1 = ad_campaigns[ad_campaigns['Ad_Campaign'] == comp[0]]['Click_Through_Rate']
        group2 = ad_campaigns[ad_campaigns['Ad_Campaign'] == comp[1]]['Click_Through_Rate']
        t_stat, p_val = ttest_ind(group1, group2)
        p_values.append(p_val)
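With more campaigns, writing the pairs by hand gets error-prone. One option, sketched here with the standard library's `itertools.combinations`, is to generate them from the list of campaign names:

```python
from itertools import combinations

campaign_types = ['Seasonal Discount', 'New Arrival', 'Loyalty Reward']

# Every unordered pair of campaigns: k groups give k * (k - 1) / 2 pairs
comparisons = list(combinations(campaign_types, 2))
print(comparisons)
```

For these three campaigns this yields the same three pairs, in the same order, as the hand-written list.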

Performing Bonferroni correction

    p_adjusted = multipletests(p_values, alpha=0.05, method='bonferroni')
    print(f"Adjusted P-values: {p_adjusted[1]}")

    Adjusted P-values: [5.33634403e-133 2.17627991e-043 5.62590083e-029]
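The Bonferroni adjustment itself is simple: multiply each raw p-value by the number of tests and cap the result at 1. A minimal sketch of that arithmetic with made-up p-values (hypothetical, not from the course data):

```python
# Hypothetical raw p-values from three pairwise tests
raw_p_values = [0.01, 0.04, 0.5]
alpha = 0.05
n_tests = len(raw_p_values)

# Bonferroni: scale by the number of tests, never exceeding 1
adjusted = [min(p * n_tests, 1.0) for p in raw_p_values]
reject = [p_adj <= alpha for p_adj in adjusted]

for raw, adj, rej in zip(raw_p_values, adjusted, reject):
    print(f"raw = {raw:.2f}, adjusted = {adj:.2f}, reject = {rej}")
```

In this toy case, 0.04 would pass an uncorrected 0.05 threshold but is no longer significant after adjustment.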

Let's practice!
