Congratulations!

Working with Llama 3

Imtihan Ahmed

Machine Learning Engineer

Let's recall

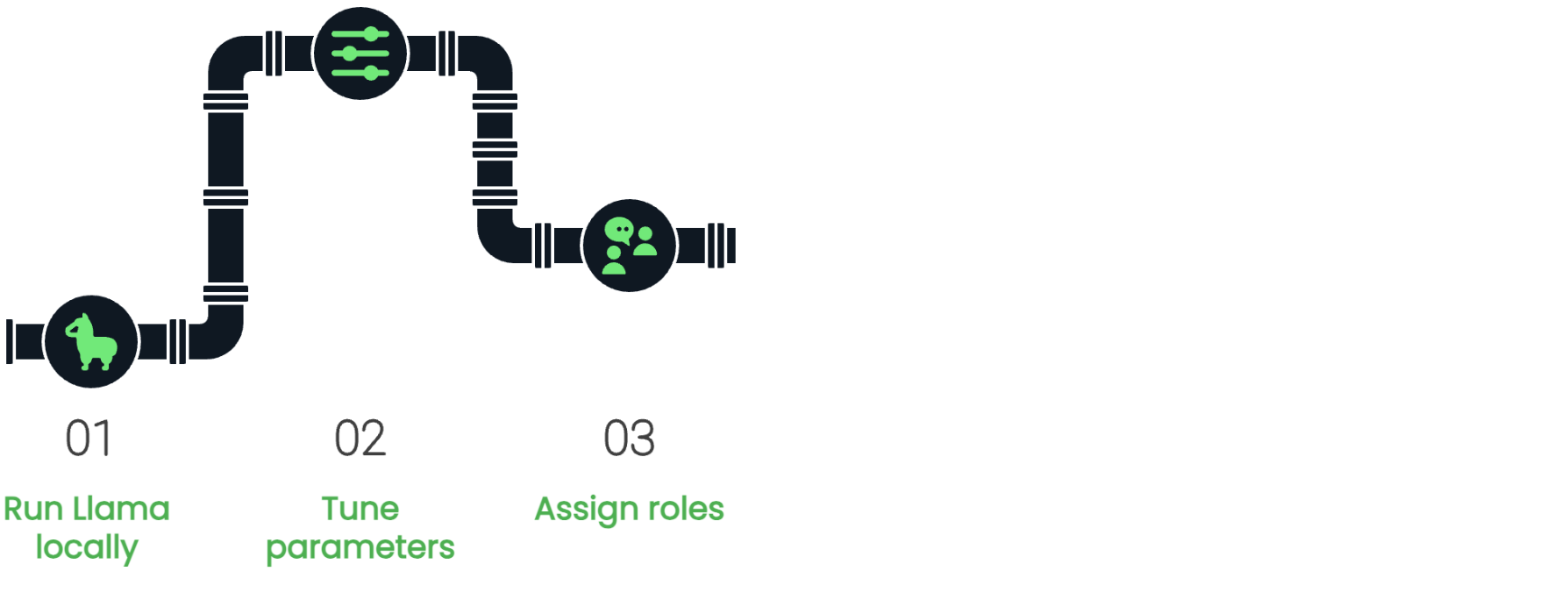

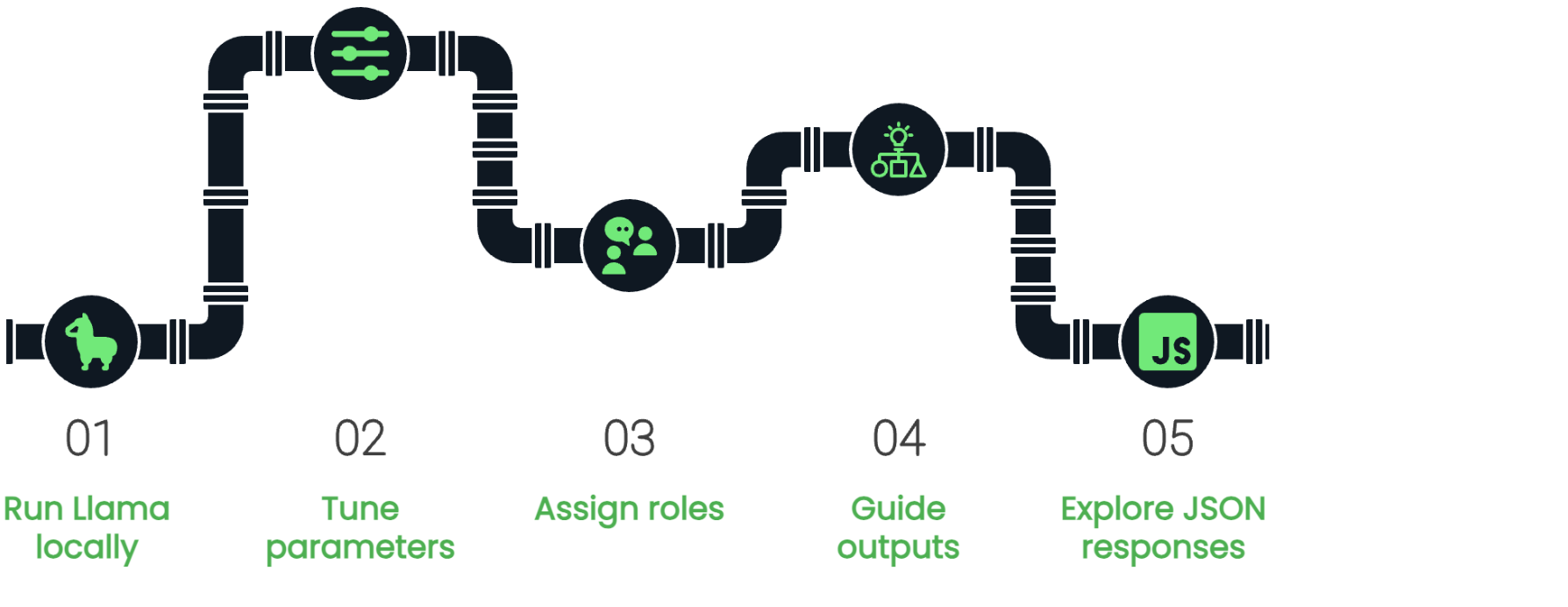

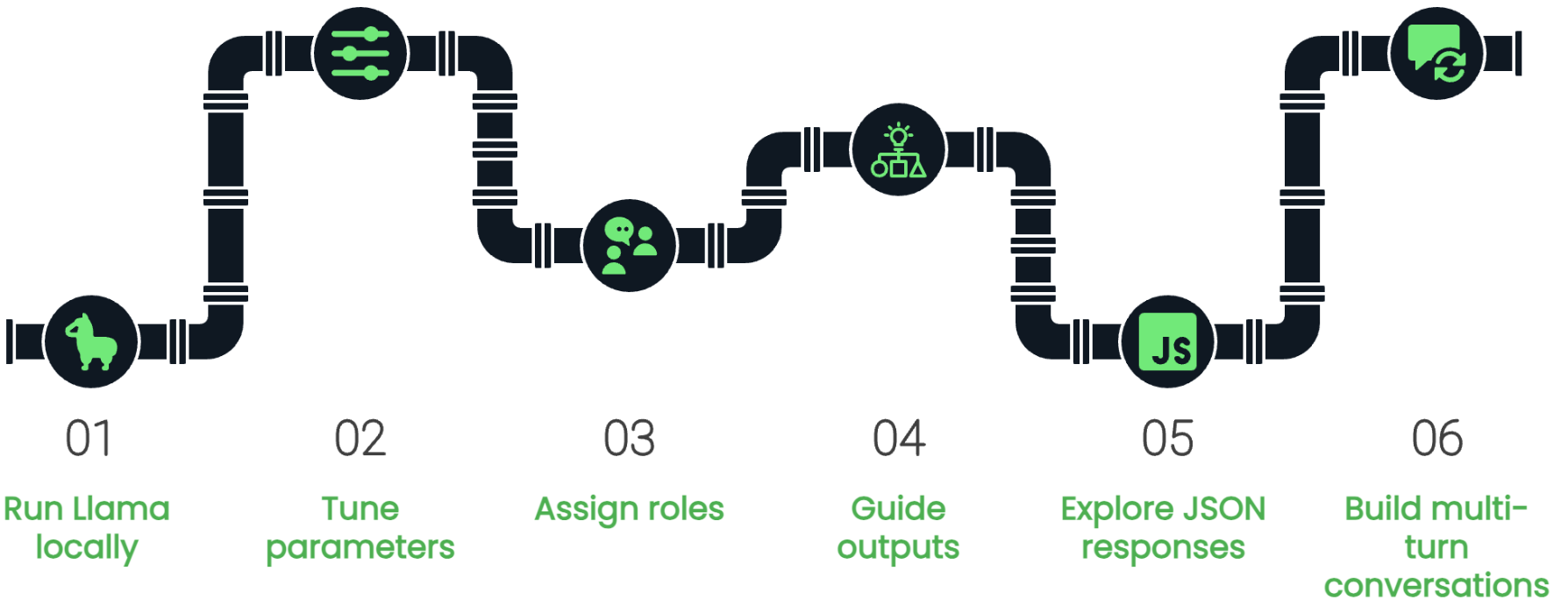

from llama_cpp import Llama

llm = Llama(model_path = "path/to/model.gguf")

Let's recall

temperature, top_k, and top_p parameters
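To make the effect of these three parameters concrete, here is a minimal pure-Python sketch of how they could reshape a next-token distribution. The function names (`filter_distribution`, `sample`) and the toy logits are hypothetical, and the filtering order is an illustrative assumption, not necessarily how llama.cpp applies its samplers.

```python
import math
import random


def filter_distribution(logits, temperature=1.0, top_k=0, top_p=1.0):
    """Return {token: probability} after temperature, top-k and top-p filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    # Temperature rescales the logits: values below 1 sharpen, above 1 flatten.
    scaled = {tok: logit / temperature for tok, logit in logits.items()}
    # Numerically stable softmax.
    peak = max(scaled.values())
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(
        ((tok, e / total) for tok, e in exps.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )
    # top_k keeps only the k most likely tokens (0 disables the filter here).
    if top_k > 0:
        ranked = ranked[:top_k]
    # top_p keeps the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok, prob in ranked:
        kept.append((tok, prob))
        cumulative += prob
        if cumulative >= top_p:
            break
    # Renormalize the surviving tokens so they sum to 1.
    norm = sum(prob for _, prob in kept)
    return {tok: prob / norm for tok, prob in kept}


def sample(logits, rng=random, **kwargs):
    """Draw one token from the filtered distribution."""
    dist = filter_distribution(logits, **kwargs)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]
```

For example, with toy logits `{"a": 2.0, "b": 1.0, "c": 0.0}`, setting `top_k=2` removes `"c"` from the candidates, and lowering `temperature` concentrates more probability on `"a"`.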

Let's recall

message_list = [{"role": "system", "content": system_message},
                {"role": "user", "content": user_message}]
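As a sketch of how such a message list might be sent to the model, the helpers below build the list and pass it to `create_chat_completion`. The helper names `build_messages` and `chat` are hypothetical, and `llm` is assumed to be a `llama_cpp.Llama` instance created as shown earlier.

```python
def build_messages(system_message, user_message):
    """Build the two-message list: system instructions first, then the user turn."""
    return [
        {"role": "system", "content": system_message},
        {"role": "user", "content": user_message},
    ]


def chat(llm, system_message, user_message, **sampling):
    """Send one system + user exchange and return the assistant's text.

    `llm` is assumed to be a llama_cpp.Llama instance; extra keyword
    arguments (temperature, top_k, top_p, max_tokens, ...) are passed through.
    """
    response = llm.create_chat_completion(
        messages=build_messages(system_message, user_message),
        **sampling,
    )
    # Chat completions return the reply under choices[0]["message"]["content"].
    return response["choices"][0]["message"]["content"]
```

A call might look like `chat(llm, "You are a concise assistant.", "Summarize this text.", temperature=0.2, max_tokens=100)`.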

Let's recall

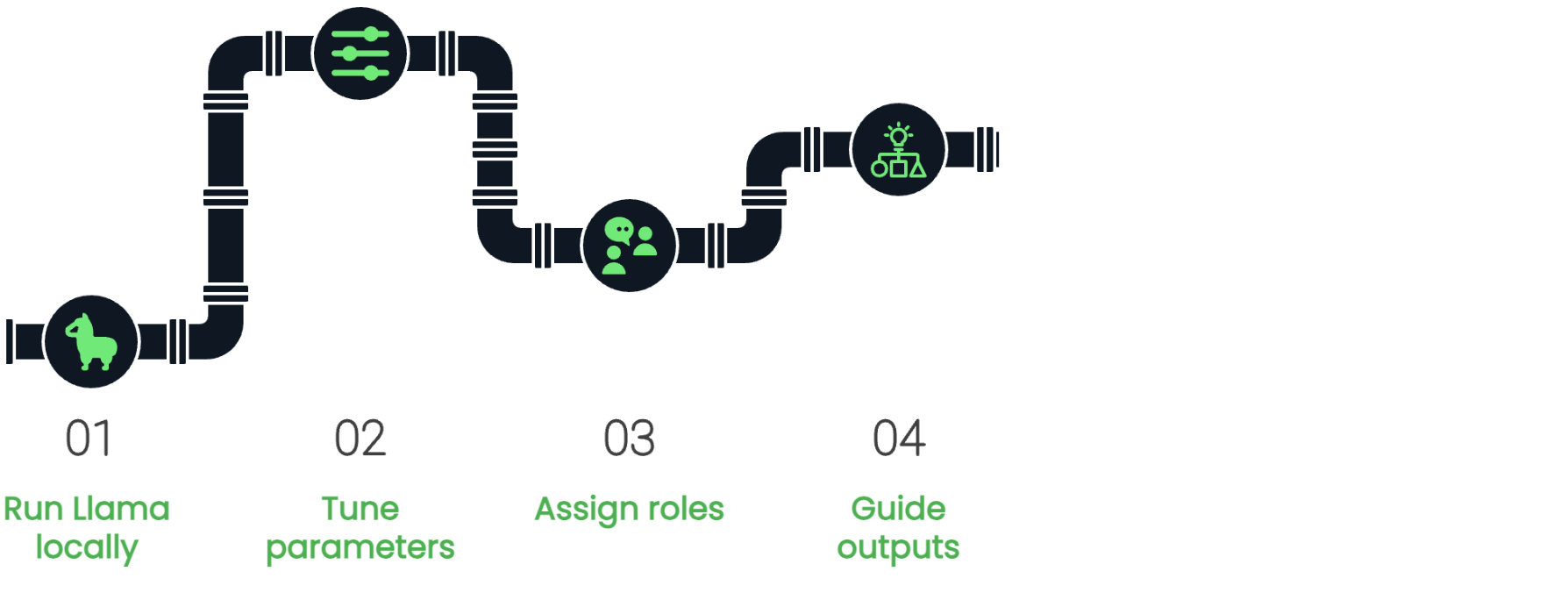

- Precise prompts

- Stop words

- Zero-shot/Few-shot prompting
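The prompting techniques listed above can be combined in one small sketch: a few-shot prompt built from labeled examples, with a stop word that cuts generation off before the model starts writing a new example. The sentiment task, the `Text:`/`Sentiment:` layout, and the helper names are illustrative assumptions; `llm` is assumed to be a `llama_cpp.Llama` instance.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Build a prompt from an instruction, (text, label) examples, and a new query.

    With an empty `examples` list this reduces to a zero-shot prompt.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    lines.append(f"Text: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


def classify(llm, prompt, max_tokens=5):
    """Run a plain text completion and return only the generated label."""
    # The stop word ends generation if the model begins another "Text:" example.
    output = llm(prompt, max_tokens=max_tokens, stop=["Text:"])
    return output["choices"][0]["text"].strip()
```

For instance, `build_few_shot_prompt("Classify the sentiment.", [("Great food", "positive"), ("Slow service", "negative")], "Lovely staff")` yields a prompt that ends with `Sentiment:`, leaving the model to fill in the label.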

Let's recall

response_format = {"type": "json_object"}
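A minimal sketch of using this setting follows: it requests JSON output and parses the reply into a Python object. The helper name `chat_json` is hypothetical, and `llm` is assumed to be a `llama_cpp.Llama` instance. Note that `json.loads` can still raise an error if the reply is cut off, for example by a low `max_tokens` limit.

```python
import json


def chat_json(llm, system_message, user_message, **sampling):
    """Ask for a JSON reply and return it parsed into a Python object."""
    response = llm.create_chat_completion(
        messages=[
            {"role": "system", "content": system_message},
            {"role": "user", "content": user_message},
        ],
        # Constrain the model to produce a JSON object.
        response_format={"type": "json_object"},
        **sampling,
    )
    content = response["choices"][0]["message"]["content"]
    # Raises json.JSONDecodeError if the reply is not valid JSON.
    return json.loads(content)
```

It usually helps to also describe the expected keys in the system message, since the response format controls the syntax of the reply but not which fields it contains.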

Let's recall

- Conversation class

- .create_completion() method
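As a reminder of the idea, here is one possible shape for such a class. It is a sketch under assumptions, not necessarily the exact class from the course: it assumes `Conversation` wraps a `llama_cpp.Llama` instance, keeps the message history, and that its `create_completion` method sends the full history on every turn.

```python
class Conversation:
    """Keep a running chat history so each turn sees the earlier context."""

    def __init__(self, llm, system_prompt="", history=None):
        # `llm` is assumed to be a llama_cpp.Llama instance.
        self.llm = llm
        self.system_prompt = system_prompt
        # Copy any provided history so callers' lists are never mutated.
        self.history = [{"role": "system", "content": system_prompt}]
        self.history += list(history or [])

    def create_completion(self, user_prompt=""):
        """Append the user turn, query the model, and store the reply."""
        self.history.append({"role": "user", "content": user_prompt})
        output = self.llm.create_chat_completion(messages=self.history)
        reply = output["choices"][0]["message"]
        # Storing the assistant message lets the next turn refer back to it.
        self.history.append(reply)
        return reply["content"]
```

Calling `create_completion` twice on the same object sends the first exchange along with the second question, which is what lets the model answer follow-ups.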

What's next?

Thank you!
