Building conversations

Working with Llama 3

Imtihan Ahmed

Machine Learning Engineer

Maintaining context


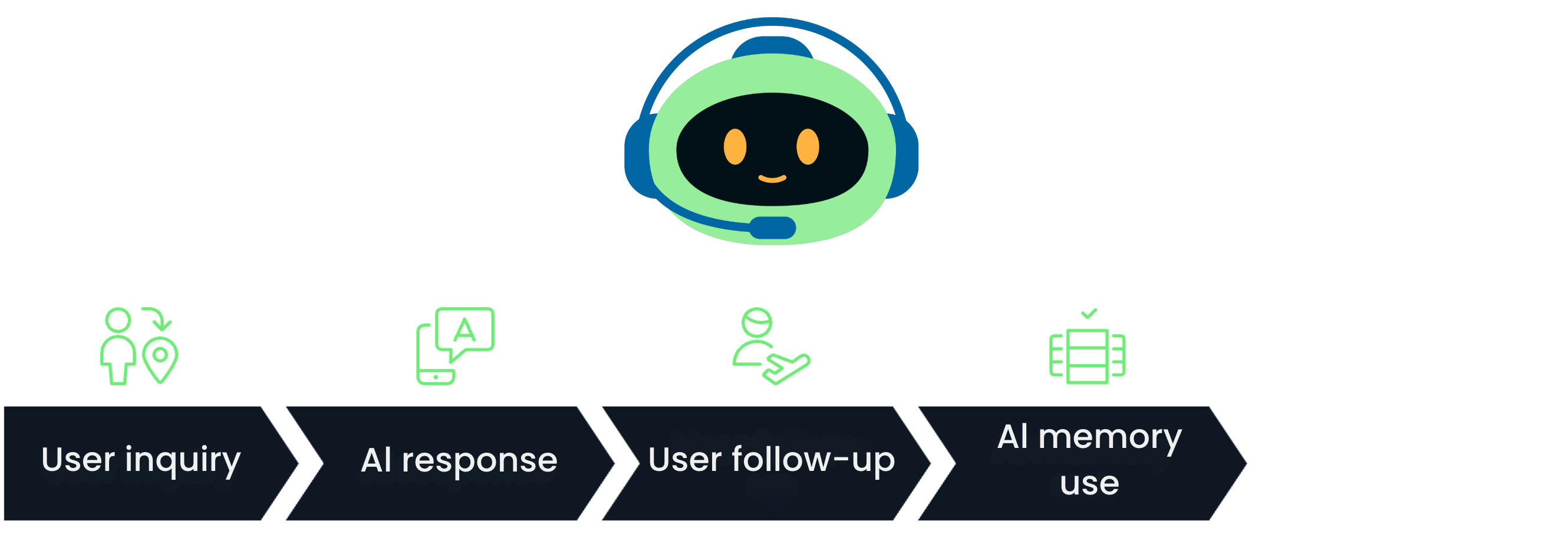

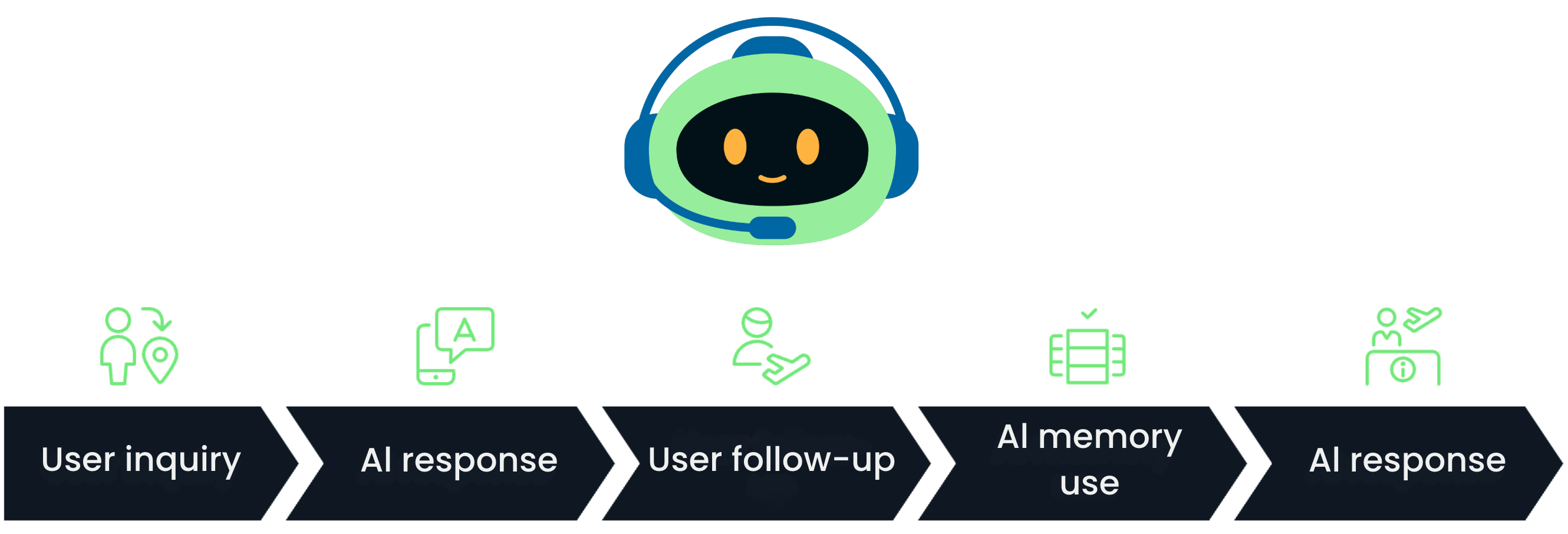

- Track a chat history with a Conversation class
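Here is a minimal sketch of what such a history looks like. It assumes the role/content message format used by the Conversation class below: the system prompt comes first, then user and assistant messages alternate. The assistant reply is a placeholder, not real model output.

```python
# Chat history as a list of role/content message dicts, oldest first.
history = [
    {"role": "system", "content": "You are a virtual travel assistant helping with planning trips."},
    {"role": "user", "content": "What are some destinations in France for a short weekend break?"},
    # Placeholder standing in for the model's first reply (not real output)
    {"role": "assistant", "content": "<first reply>"},
]

# A follow-up question only makes sense alongside the earlier turns,
# so it is appended to the same list rather than sent on its own.
history.append({"role": "user", "content": "How about Spain?"})

roles = [message["role"] for message in history]
print(roles)  # ['system', 'user', 'assistant', 'user']
```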

Conversation class

- Can store a history of prior messages


```python
from llama_cpp import Llama

class Conversation:
    def __init__(self, llm: Llama, system_prompt='', history=None):
        self.llm = llm
        self.system_prompt = system_prompt
        # Start with the system prompt, followed by any prior messages
        # (history=None avoids a shared mutable default argument)
        self.history = [{"role": "system", "content": self.system_prompt}] + (history or [])

    def create_completion(self, user_prompt=''):
        self.history.append({"role": "user", "content": user_prompt})  # Append input
        output = self.llm.create_chat_completion(messages=self.history)
        conversation_result = output['choices'][0]['message']
        self.history.append(conversation_result)  # Append output
        return conversation_result['content']  # Return output
```
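The class can be tried without loading a model by passing in a stand-in object. This sketch assumes only that `create_chat_completion` returns a dict with the shape the class reads (`output['choices'][0]['message']`). `FakeLlama` is a hypothetical stub, not part of llama-cpp-python. It records how many messages each request contains.

```python
class FakeLlama:
    """Hypothetical stand-in for a Llama model: returns a canned reply and
    records how many messages each request contained."""

    def __init__(self):
        self.message_counts = []

    def create_chat_completion(self, messages):
        self.message_counts.append(len(messages))
        reply = f"Reply to: {messages[-1]['content']}"
        # Same nesting the Conversation class reads from a real response
        return {"choices": [{"message": {"role": "assistant", "content": reply}}]}


class Conversation:
    def __init__(self, llm, system_prompt='', history=None):
        self.llm = llm
        self.system_prompt = system_prompt
        self.history = [{"role": "system", "content": self.system_prompt}] + (history or [])

    def create_completion(self, user_prompt=''):
        self.history.append({"role": "user", "content": user_prompt})  # Append input
        output = self.llm.create_chat_completion(messages=self.history)
        conversation_result = output['choices'][0]['message']
        self.history.append(conversation_result)  # Append output
        return conversation_result['content']  # Return output


fake = FakeLlama()
conversation = Conversation(fake, system_prompt="You are a travel assistant.")
conversation.create_completion("Weekend trips in France?")
print(conversation.create_completion("How about Spain?"))  # Reply to: How about Spain?
print(fake.message_counts)  # [2, 4]
print(len(conversation.history))  # 5
```

The whole history is sent on every call, so the second request carries four messages: the system prompt, the first question, the first reply and the follow-up. The history grows by two messages per turn.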

Running a multi-turn conversation

```python
# Assumes `llm` is an existing Llama instance
conversation = Conversation(llm, system_prompt="You are a virtual travel assistant helping with planning trips.")

response1 = conversation.create_completion("What are some destinations in France for a short weekend break?")
print(f"Response 1: {response1}")

# The follow-up relies on the earlier turns stored in conversation.history
response2 = conversation.create_completion("How about Spain?")
print(f"Response 2: {response2}")
```

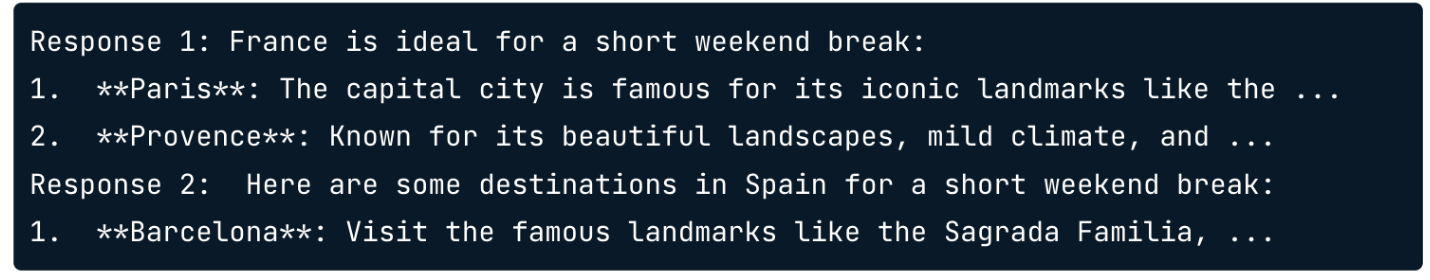


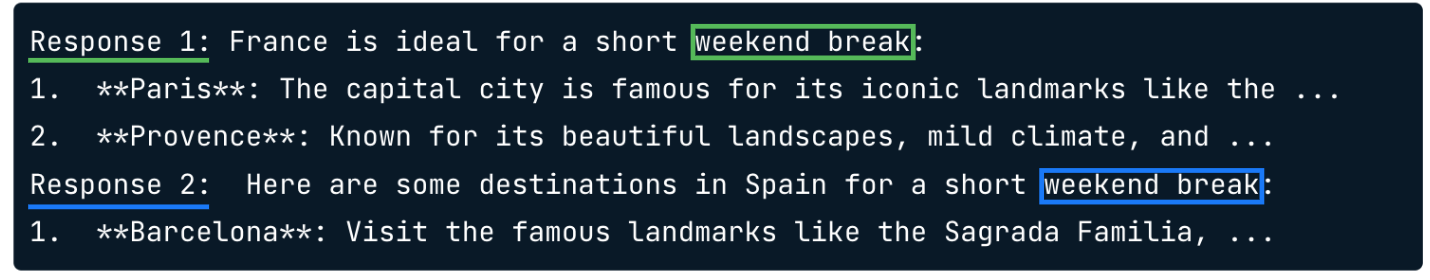
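To check what context the model saw across turns, you can print the accumulated `conversation.history` as a transcript. `format_transcript` is a hypothetical helper. It assumes each entry is a dict with `"role"` and `"content"` keys, as in the Conversation class. The sample history uses placeholder replies.

```python
def format_transcript(history, include_system=False):
    """Hypothetical helper: render a list of role/content messages as text."""
    lines = []
    for message in history:
        if message["role"] == "system" and not include_system:
            continue  # Skip the system prompt unless requested
        lines.append(f"{message['role']}: {message['content']}")
    return "\n".join(lines)


# Placeholder history; in practice, pass conversation.history
sample_history = [
    {"role": "system", "content": "You are a virtual travel assistant helping with planning trips."},
    {"role": "user", "content": "What are some destinations in France for a short weekend break?"},
    {"role": "assistant", "content": "<first reply>"},
    {"role": "user", "content": "How about Spain?"},
    {"role": "assistant", "content": "<second reply>"},
]
print(format_transcript(sample_history))
```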


Let's practice!
