Generating structured output

Working with Llama 3

Imtihan Ahmed

Machine Learning Engineer

Structured output in JSON

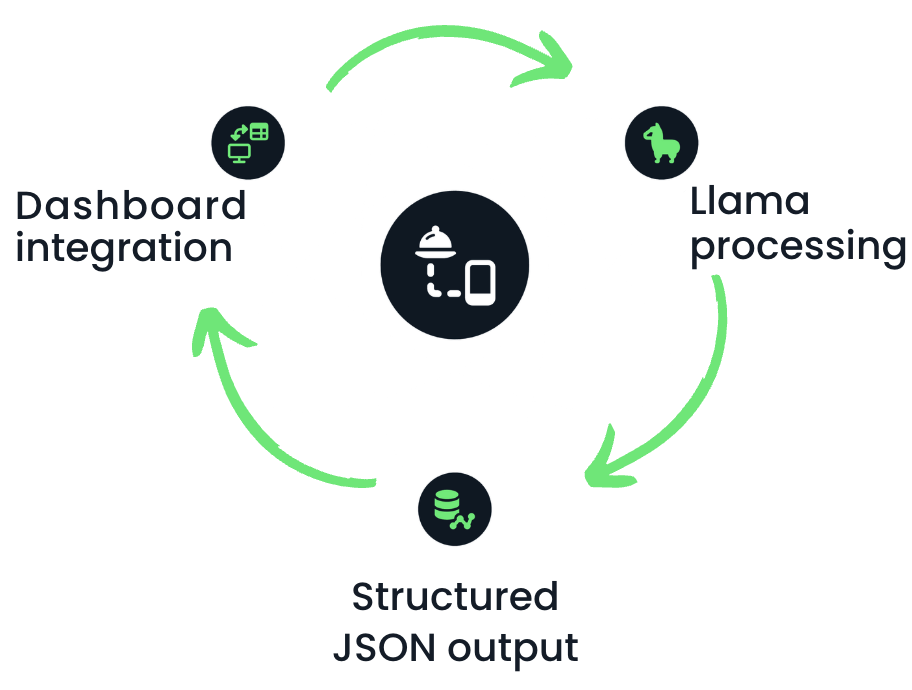

Example: Llama's responses might feed into a dashboard

Plain-text responses can't be parsed reliably ❌

We need structured outputs ✅

JSON responses with chat completion

response_format = {"type": "json_object"}

message_list = [
    {"role": "system",  # System role defined as market analyst
     "content": "You are a food industry market analyst. You analyze sales data and generate structured JSON reports of top-selling beverages."},
    {"role": "user",  # User role to pass the request
     "content": "Provide a structured report on the top-selling beverages this year."}
]


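# llm is assumed to be the llama_cpp.Llama model loaded earlier
output = llm.create_chat_completion(
    messages=message_list,
    response_format=response_format  # the {"type": "json_object"} dict, not a bare string
)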

- Response format set to JSON through the response_format argument

- Llama generates a structured response instead of free-flowing text
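Why does the response format matter downstream? Code that feeds a dashboard typically parses the reply with Python's `json.loads`, which accepts JSON and rejects prose. The sketch below uses a hypothetical helper, `try_parse_json_object`, and two made-up replies (no model is called) to show the difference.

```python
import json
from typing import Optional


def try_parse_json_object(text: str) -> Optional[dict]:
    """Return the reply as a dict if it is a JSON object, else None."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        # Free-flowing prose is not valid JSON, so downstream code can't use it
        return None
    # A top-level list, number, or string is rejected; we expect an object
    return data if isinstance(data, dict) else None


# Hypothetical replies: one as produced in JSON mode, one as plain text
json_reply = '{"report_name": "Top-Selling Beverages 2024"}'
text_reply = "Here is a summary of this year's top-selling beverages."

print(try_parse_json_object(json_reply))  # {'report_name': 'Top-Selling Beverages 2024'}
print(try_parse_json_object(text_reply))  # None
```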

Extracting the JSON response

print(output['choices'][0]['message']['content'])

{
  "report_name": "Top-Selling Beverages 2024",
  "top_beverages": [
    {
      "rank": 1,
      "beverage_name": "Coca-Cola Classic",
      "sales_volume": 2.1,
      "growth_rate": 1.9
    },
    ...
  ]
}
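The `content` field holds the JSON as a string, so it has to be parsed before individual fields can be read. A minimal sketch: `output` here is a hypothetical stand-in that mimics only the keys used on the slide, it keeps just the first entry shown above, and the `rows` layout is an assumption about what a dashboard table might want.

```python
import json

# Hypothetical stand-in for the dict returned by llm.create_chat_completion();
# only the keys used on the slide are reproduced here
output = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": json.dumps({
                    "report_name": "Top-Selling Beverages 2024",
                    "top_beverages": [
                        {"rank": 1, "beverage_name": "Coca-Cola Classic",
                         "sales_volume": 2.1, "growth_rate": 1.9},
                    ],
                }),
            }
        }
    ]
}

# The content field is a JSON string; json.loads turns it into a dict
report = json.loads(output["choices"][0]["message"]["content"])

# Flatten into (rank, name, volume) tuples, e.g. for a dashboard table
rows = [
    (item["rank"], item["beverage_name"], item["sales_volume"])
    for item in report["top_beverages"]
]
print(report["report_name"])  # Top-Selling Beverages 2024
print(rows)                   # [(1, 'Coca-Cola Classic', 2.1)]
```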

Defining a schema

response_format = {
    "type": "json_object",
    "schema": {
        "type": "object",
        "properties": {
            "Product Name": {"type": "string"},
            "Category": {"type": "string"},
            "Sales Growth": {"type": "number"}  # JSON Schema has no "float" type; decimals use "number"
        }
    }
}

- We can also specify a schema: rules that define which keys the output contains and what type each value has
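Because `response_format` is an ordinary Python dict, the schema can also be generated from a mapping of field names to types. The helper `make_response_format` below is hypothetical. It also adds JSON Schema's `required` keyword, which the slide's schema leaves out: without it, every property is optional, so a model following the schema could omit a key. This is a sketch assuming the runtime honors `required`.

```python
def make_response_format(fields: dict) -> dict:
    """Build a JSON-mode response_format whose schema requires every field.

    `fields` maps each key to a JSON Schema type name such as "string",
    "number", "integer", or "boolean".
    """
    return {
        "type": "json_object",
        "schema": {
            "type": "object",
            "properties": {name: {"type": t} for name, t in fields.items()},
            # Properties not listed under "required" are optional in JSON Schema
            "required": list(fields),
        },
    }


response_format = make_response_format({
    "Product Name": "string",
    "Category": "string",
    "Sales Growth": "number",  # decimals use "number"; there is no "float"
})
print(response_format["schema"]["required"])
# ['Product Name', 'Category', 'Sales Growth']
```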


output = llm.create_chat_completion(
    messages=message_list,
    response_format=response_format
)

print(output['choices'][0]['message']['content'])

{
  "Product Name": "Coca-Cola",
  "Category": "Soft Drink",
  "Sales Growth": 12.5
}
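Even with a schema, it is worth checking the parsed reply before it reaches a dashboard. The sketch below is a deliberately small, hand-rolled check (`schema_violations` and `TYPE_CHECKS` are hypothetical names): it handles only the `string` and `number` types used here, and it treats every listed property as mandatory, which is stricter than JSON Schema's default.

```python
import json

# Python checks for the JSON Schema types used on the slide (only these two)
TYPE_CHECKS = {
    "string": lambda v: isinstance(v, str),
    # bool is a subclass of int in Python, so exclude it explicitly
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
}


def schema_violations(data: dict, schema: dict) -> list:
    """Return a list of problems; an empty list means the data fits.

    Stricter than JSON Schema: every listed property is treated as required.
    """
    problems = []
    for key, rule in schema["properties"].items():
        if key not in data:
            problems.append(f"missing key: {key}")
        elif not TYPE_CHECKS[rule["type"]](data[key]):
            problems.append(f"wrong type for {key}: {type(data[key]).__name__}")
    return problems


schema = {
    "type": "object",
    "properties": {
        "Product Name": {"type": "string"},
        "Category": {"type": "string"},
        "Sales Growth": {"type": "number"},
    },
}

reply = '{"Product Name": "Coca-Cola", "Category": "Soft Drink", "Sales Growth": 12.5}'
print(schema_violations(json.loads(reply), schema))  # []
print(schema_violations({"Product Name": "Coca-Cola", "Sales Growth": "12.5"}, schema))
# ['missing key: Category', 'wrong type for Sales Growth: str']
```

For full JSON Schema validation, the third-party `jsonschema` package offers `jsonschema.validate(instance, schema)`.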

Let's practice!
