Intrinsic dimension

Unsupervised Learning in Python

Benjamin Wilson

Director of Research at lateral.io

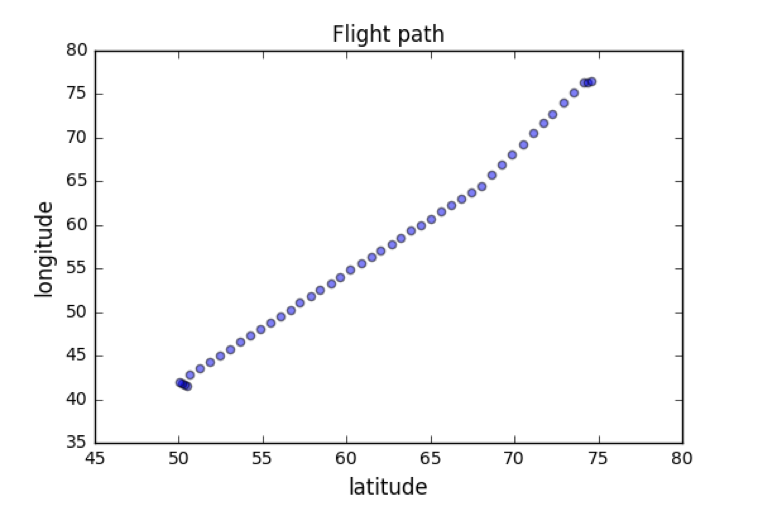

Intrinsic dimension of a flight path

- 2 features: longitude and latitude at points along a flight path

- Dataset appears to be 2-dimensional

- But can be approximated using one feature: displacement along the flight path

- Is intrinsically 1-dimensional

| latitude | longitude |
| --- | --- |
| 50.529 | 41.513 |
| 50.360 | 41.672 |
| 50.196 | 41.835 |
| ... | ... |
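A minimal sketch of the flight-path idea, using only the three latitude/longitude rows listed above (the rest of the path is elided in the source and is not reconstructed here). It fits a PCA model and prints the share of variance held by each PCA feature, then keeps only the first PCA feature as a stand-in for "displacement along the flight path". The variable names `points` and `displacement` are illustrative.

```python
import numpy as np
from sklearn.decomposition import PCA

# The three (latitude, longitude) rows shown above; the remaining rows
# are elided in the source, so only these are used.
points = np.array([
    [50.529, 41.513],
    [50.360, 41.672],
    [50.196, 41.835],
])

pca = PCA()
pca.fit(points)

# Share of the total variance carried by each PCA feature.
# For a path that is intrinsically 1-dimensional, the first share dominates.
print(pca.explained_variance_ratio_)

# Keep only the first PCA feature: one number per point along the path.
displacement = PCA(n_components=1).fit_transform(points)
print(displacement.ravel())
```

Three points are far too few for a real analysis; the sketch only shows the mechanics.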

Intrinsic dimension

- Intrinsic dimension = number of features needed to approximate the dataset

- Essential idea behind dimension reduction

- What is the most compact representation of the samples?

- Can be detected with PCA

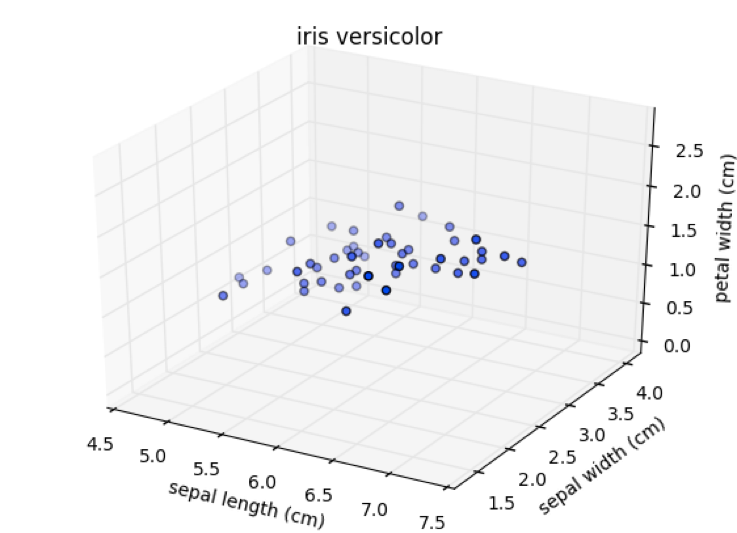

Versicolor dataset

- "versicolor", one of the iris species

- Only 3 features: sepal length, sepal width, and petal width

- Samples are points in 3D space

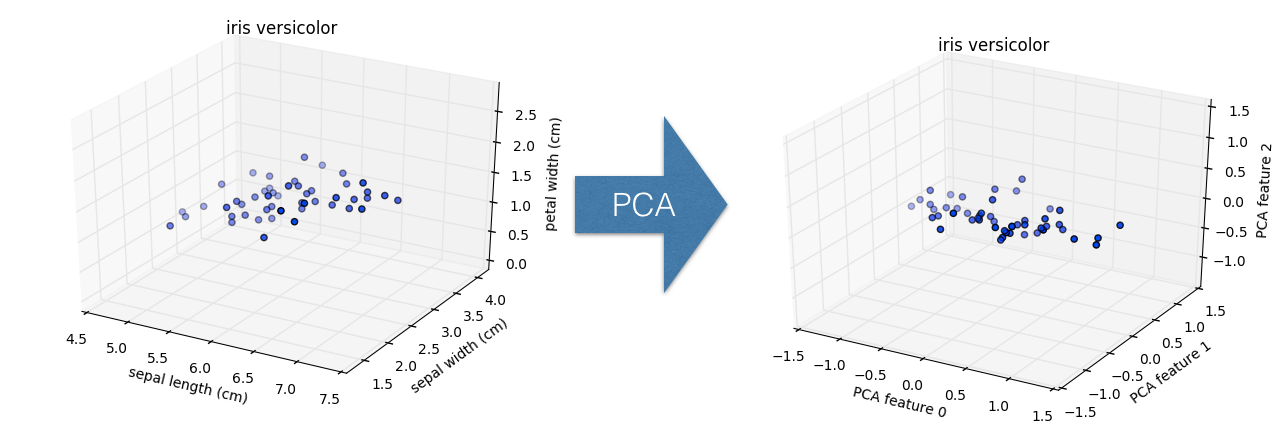

Versicolor dataset has intrinsic dimension 2

- Samples lie close to a flat 2-dimensional sheet

- So can be approximated using 2 features
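One way to check the "can be approximated using 2 features" claim is to project the samples onto the first few PCA features, map them back, and measure how much is lost. The sketch below assumes that scikit-learn's bundled iris data is an acceptable stand-in for the versicolor samples on the slides; `reconstruction_error` is a hypothetical helper written for this illustration.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

# Assumption: scikit-learn's copy of iris stands in for the slide's data.
iris = load_iris()
versicolor_label = list(iris.target_names).index("versicolor")

# Columns 0, 1, 3 are sepal length, sepal width, petal width.
samples = iris.data[iris.target == versicolor_label][:, [0, 1, 3]]


def reconstruction_error(samples, n_components):
    """Mean squared error after keeping only n_components PCA features."""
    pca = PCA(n_components=n_components)
    reduced = pca.fit_transform(samples)        # shape: (n_samples, n_components)
    restored = pca.inverse_transform(reduced)   # back in the original 3D space
    return float(np.mean((samples - restored) ** 2))


# The error can only shrink as more PCA features are kept.
for k in (1, 2, 3):
    print(k, reconstruction_error(samples, k))
```

If the samples really lie close to a flat 2-dimensional sheet, the error with 2 PCA features should already be small compared with the error with 1.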

PCA identifies intrinsic dimension

- Scatter plots work only if samples have 2 or 3 features

- PCA identifies intrinsic dimension when samples have any number of features

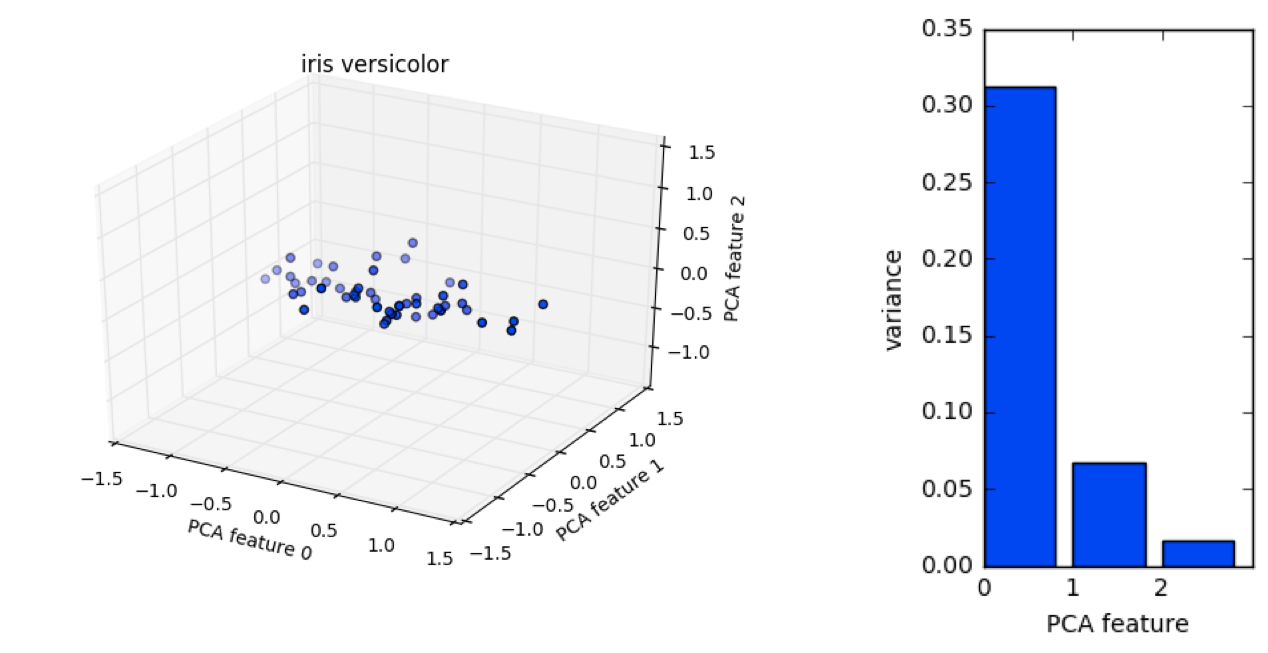

- Intrinsic dimension = number of PCA features with significant variance

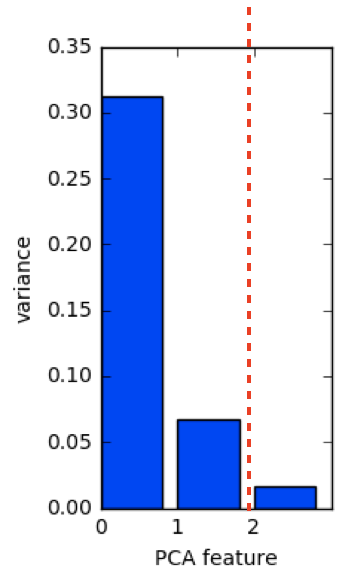

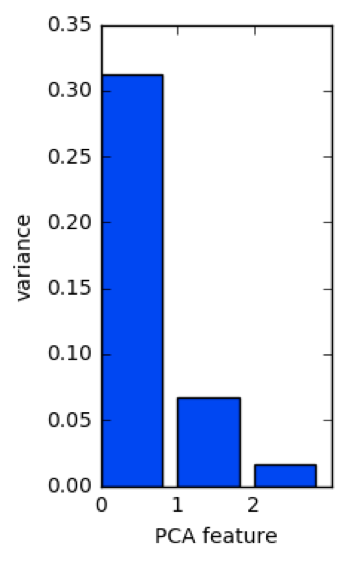

PCA of the versicolor samples

PCA features are ordered by descending variance

Variance and intrinsic dimension

- Intrinsic dimension is number of PCA features with significant variance

- In our example: the first two PCA features

- So intrinsic dimension is 2
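The counting rule above can be sketched in a few lines. "Significant" has no fixed definition, so the cutoff `min_ratio` below is an assumption made for illustration, as is the helper name `count_significant_features`. The data is synthetic: 3 hidden coordinates are spread over 10 observed features with a little noise, so a scatter plot is not an option but PCA still applies.

```python
import numpy as np
from sklearn.decomposition import PCA


def count_significant_features(samples, min_ratio=0.05):
    """Count PCA features whose share of the total variance is >= min_ratio.

    min_ratio is an illustrative cutoff, not a standard value.
    """
    pca = PCA()
    pca.fit(samples)
    return int(np.sum(pca.explained_variance_ratio_ >= min_ratio))


# Synthetic data: 3 hidden coordinates, each copied into a separate
# group of observed features, plus a small amount of noise.
rng = np.random.default_rng(0)
hidden = rng.normal(size=(500, 3))
mixing = np.zeros((3, 10))
mixing[0, 0:4] = 1.0
mixing[1, 4:7] = 1.0
mixing[2, 7:10] = 1.0
samples = hidden @ mixing + 0.01 * rng.normal(size=(500, 10))

print(count_significant_features(samples))
```

With this construction the count should come out as 3, matching the number of hidden coordinates, even though each sample has 10 features.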

Plotting the variances of PCA features

samples = array of versicolor samples

import matplotlib.pyplot as plt

from sklearn.decomposition import PCA

pca = PCA()

pca.fit(samples)

PCA()

features = range(pca.n_components_)


plt.bar(features, pca.explained_variance_)

plt.xticks(features)

plt.ylabel('variance')

plt.xlabel('PCA feature')

plt.show()
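The slides leave `samples` undefined, so here is a self-contained sketch of the same plot. It assumes scikit-learn's bundled iris data as a stand-in for the versicolor samples, and wraps the plotting steps in a hypothetical helper, `plot_pca_variances`, that returns the Matplotlib `Axes`.

```python
import matplotlib.pyplot as plt
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA


def plot_pca_variances(samples):
    """Bar-plot the variance of each PCA feature and return the Axes."""
    pca = PCA()
    pca.fit(samples)
    features = range(pca.n_components_)

    fig, ax = plt.subplots()
    ax.bar(features, pca.explained_variance_)
    ax.set_xticks(list(features))
    ax.set_ylabel('variance')
    ax.set_xlabel('PCA feature')
    return ax


# Assumption: scikit-learn's copy of iris stands in for the slide's data.
iris = load_iris()
versicolor_label = list(iris.target_names).index("versicolor")

# Columns 0, 1, 3 are sepal length, sepal width, petal width.
samples = iris.data[iris.target == versicolor_label][:, [0, 1, 3]]
```

To display the chart, call `plot_pca_variances(samples)` followed by `plt.show()`.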

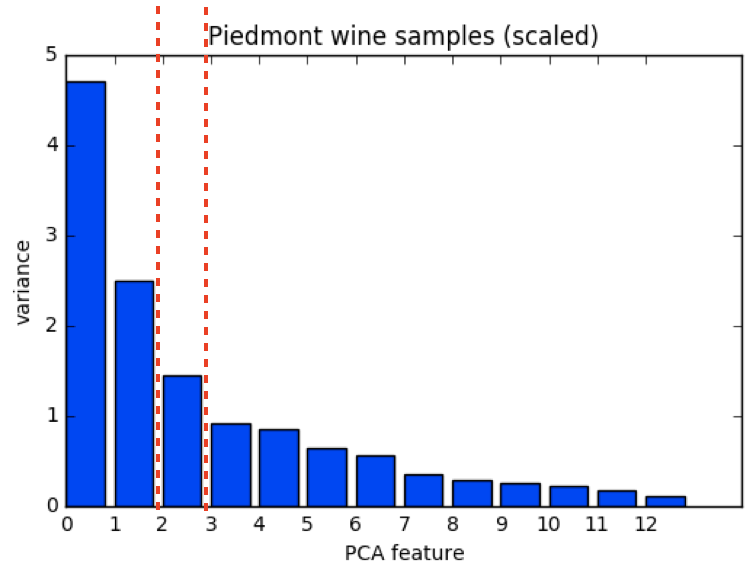

Intrinsic dimension can be ambiguous

- Intrinsic dimension is an idealization

- ... there is not always one correct answer!

- Piedmont wines: could argue for 2, or for 3, or more
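The ambiguity shows up as soon as "significant" has to be turned into a number. The sketch below assumes that scikit-learn's bundled wine data is a reasonable stand-in for the Piedmont wines, and it standardizes the features first, which is a choice made here rather than something stated on the slides. The cutoffs are illustrative only.

```python
import numpy as np
from sklearn.datasets import load_wine
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Assumption: scikit-learn's wine data stands in for the Piedmont wines.
samples = load_wine().data

# Standardize so that no feature dominates purely because of its units.
scaled = StandardScaler().fit_transform(samples)
ratios = PCA().fit(scaled).explained_variance_ratio_

# Each cutoff is a different reading of "significant variance".
counts = {}
for min_ratio in (0.05, 0.10, 0.20):
    counts[min_ratio] = int(np.sum(ratios >= min_ratio))
    print(min_ratio, counts[min_ratio])
```

A stricter cutoff can never give a larger count, so the printed numbers show how the estimated intrinsic dimension depends on where the line is drawn.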

Let's practice!
