Improving model performance

Introduction to Deep Learning with PyTorch

Jasmin Ludolf

Senior Data Science Content Developer, DataCamp

Steps to maximize performance

- Can we solve the problem? Set a performance baseline

- Increase performance on the validation set

- Achieve the best possible performance

Step 1: overfit the training set

Modify the training loop to overfit a single data point (here, one batch drawn from the dataloader)

    features, labels = next(iter(dataloader))
    for i in range(1000):
        outputs = model(features)
        loss = criterion(outputs, labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

- Should reach 1.0 accuracy and 0 loss

Then scale up to the entire training set

- Keep default hyperparameters
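The loop above relies on a `dataloader`, `model`, `criterion`, and `optimizer` defined elsewhere. As a minimal self-contained sketch, the version below substitutes a hypothetical toy batch (8 random samples, 4 features, 3 classes) and a small hypothetical network; the sizes, the Adam optimizer, and the learning rate are assumptions chosen only for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy batch standing in for next(iter(dataloader))
features = torch.randn(8, 4)
labels = torch.randint(0, 3, (8,))

# Hypothetical small classifier; any model with enough capacity would do
model = nn.Sequential(nn.Linear(4, 32), nn.ReLU(), nn.Linear(32, 3))
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)

# Train repeatedly on the same batch so the model can memorize it
for i in range(1000):
    outputs = model(features)
    loss = criterion(outputs, labels)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# Check how well the batch was memorized
with torch.no_grad():
    preds = model(features).argmax(dim=1)
    accuracy = (preds == labels).float().mean().item()
final_loss = loss.item()
print(f"accuracy={accuracy:.2f}, loss={final_loss:.4f}")
```

If the accuracy stays well below 1.0 on such a small batch, that usually points to a bug in the model or the training loop rather than to a hard problem.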

Step 2: reduce overfitting

Goal: maximize the validation accuracy

Experiment with:

- Dropout

- Data augmentation

- Weight decay

- Reducing model capacity
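Three of the options above can be sketched in a few lines of PyTorch. The layer sizes, dropout probability, and weight decay value below are hypothetical starting points, not recommendations from the course; data augmentation is left out because it depends on the type of data.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

model = nn.Sequential(
    nn.Linear(4, 8),     # reduced capacity: a small hidden layer (hypothetical size)
    nn.ReLU(),
    nn.Dropout(p=0.5),   # dropout: randomly zeroes activations during training
    nn.Linear(8, 3),
)

# Weight decay is passed to the optimizer (hypothetical value)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01, weight_decay=1e-4)

x = torch.randn(2, 4)

model.train()            # dropout is active in training mode
train_out = model(x)

model.eval()             # dropout is disabled in evaluation mode
with torch.no_grad():
    eval_a = model(x)
    eval_b = model(x)
```

Because dropout is only active in training mode, remember to call `model.eval()` before measuring validation accuracy.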

- Keep track of each hyperparameter and the resulting validation accuracy
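One lightweight way to do this tracking is a list of records, one per run. The helper `log_run` and all values below are hypothetical placeholders, not measured results; in practice the validation accuracy would come from evaluating the trained model.

```python
# Each record stores the hyperparameters of one run and its validation accuracy
experiments = []

def log_run(hyperparams, val_accuracy):
    """Append one run to the experiment log (hypothetical helper)."""
    experiments.append({**hyperparams, "val_accuracy": val_accuracy})

# Placeholder entries for illustration only; these are not real results
log_run({"dropout": 0.2, "weight_decay": 1e-4, "hidden_units": 32}, val_accuracy=0.0)
log_run({"dropout": 0.5, "weight_decay": 1e-3, "hidden_units": 16}, val_accuracy=0.5)

# Pick the run with the highest validation accuracy
best_run = max(experiments, key=lambda run: run["val_accuracy"])
print(best_run)
```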

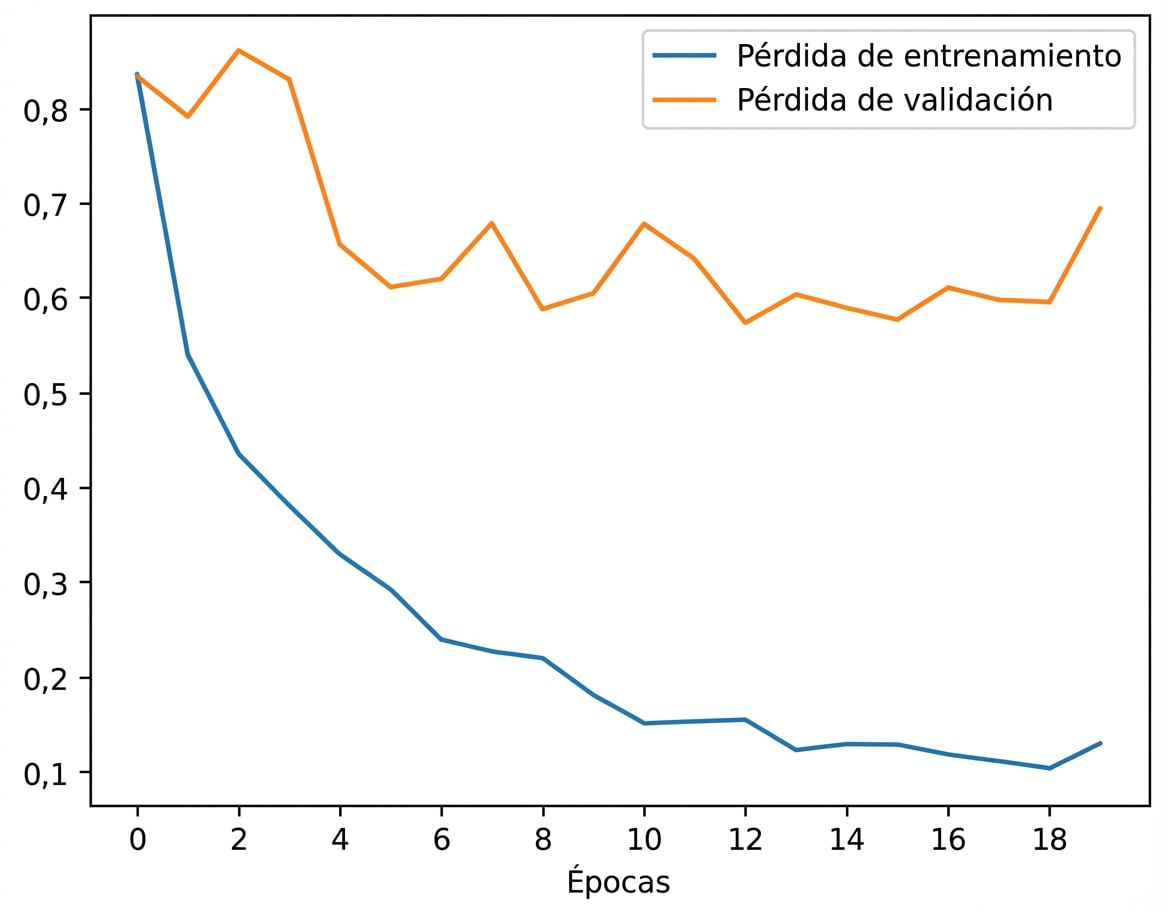

Paso 2: reducir el sobreajuste

$$

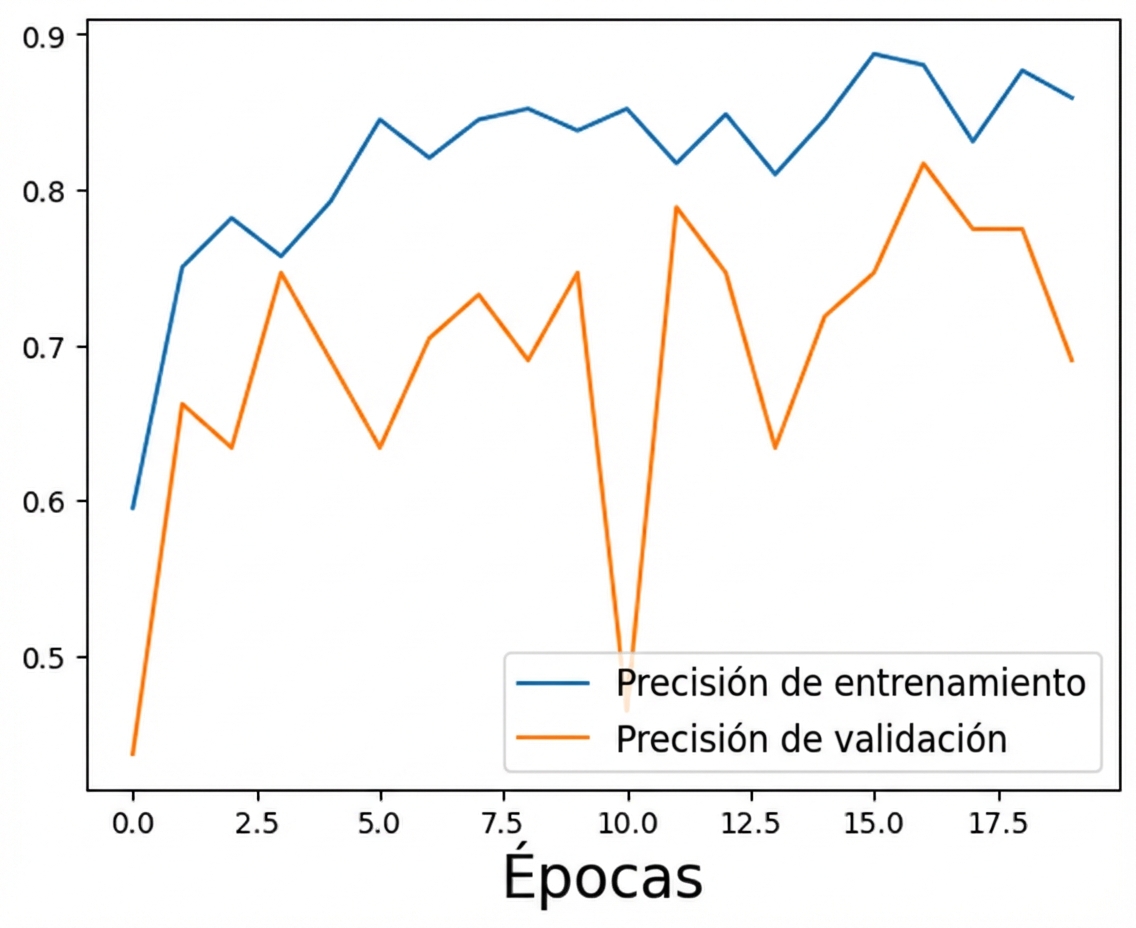

El modelo original se ajusta en exceso a los datos de entrenamiento

$$

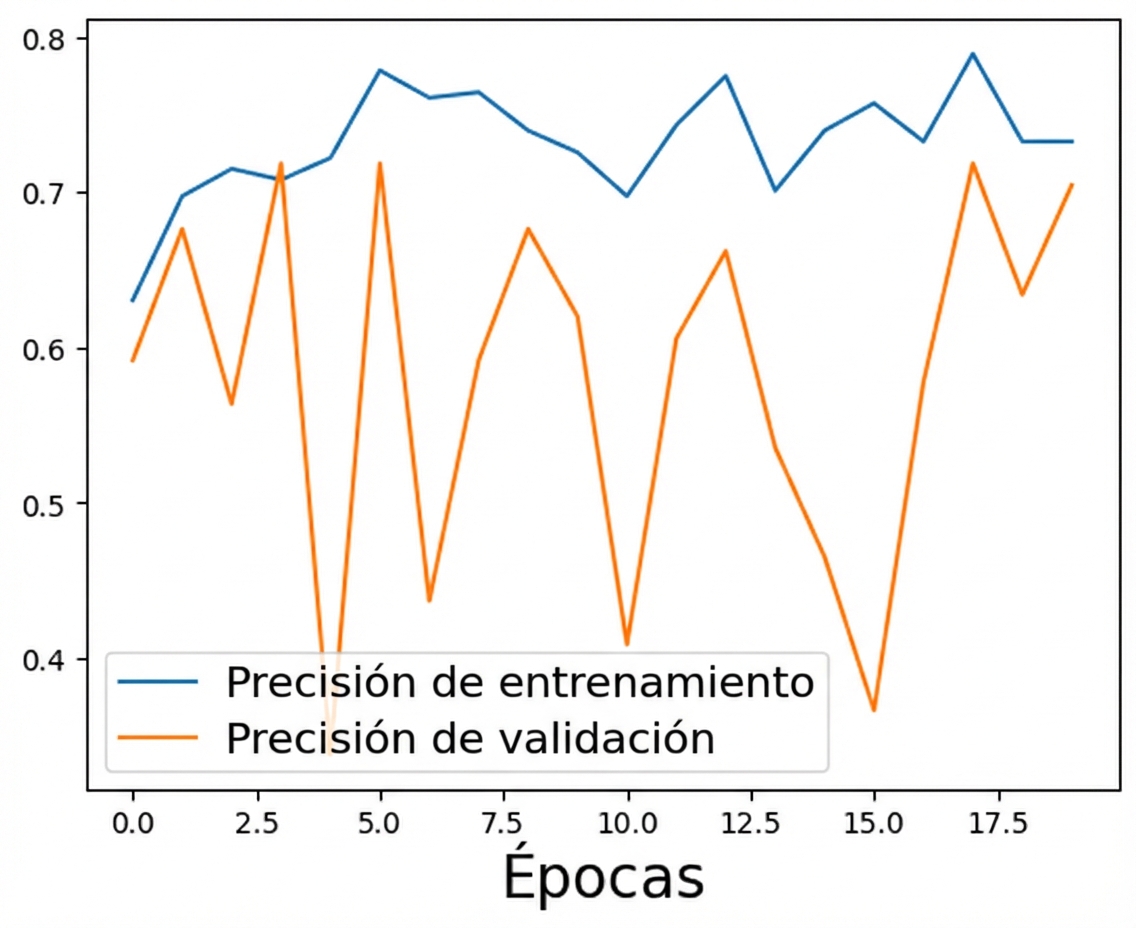

Modelo actualizado con demasiada regularización

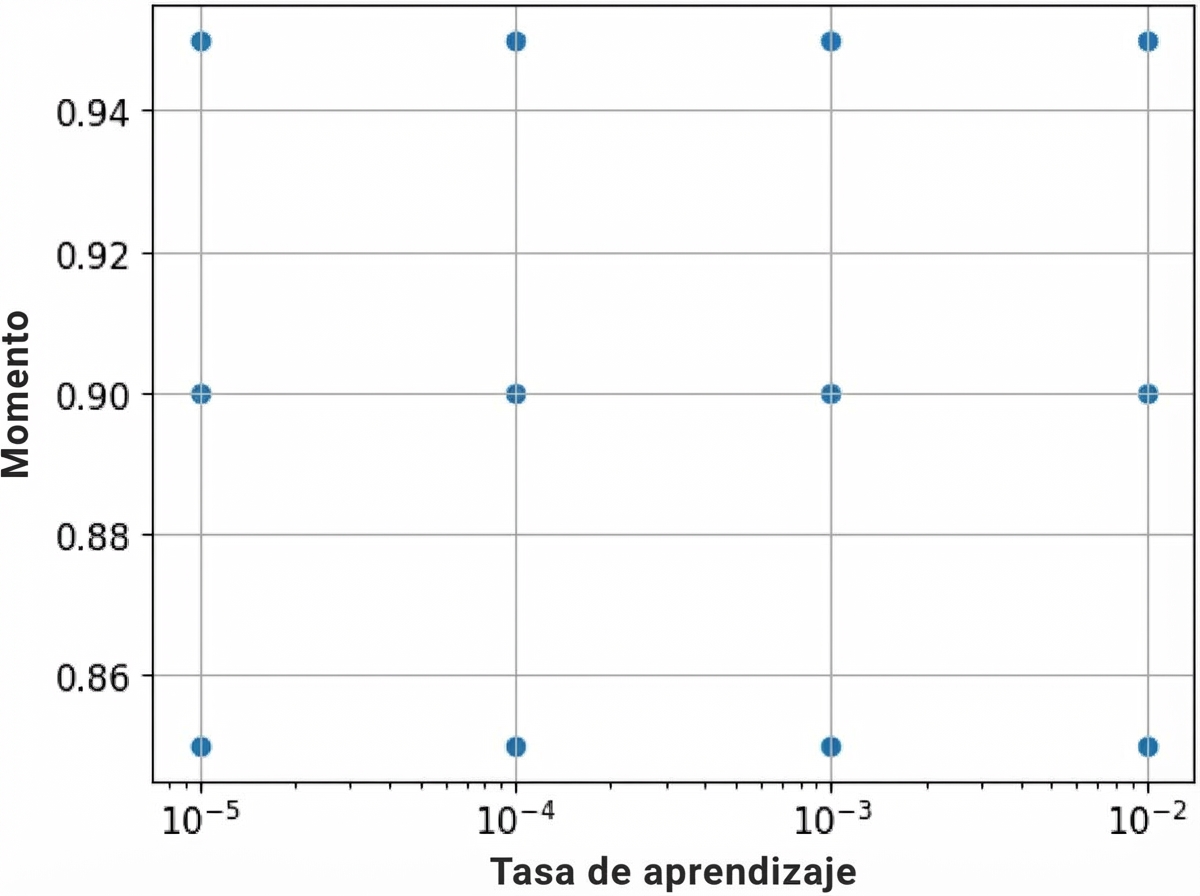

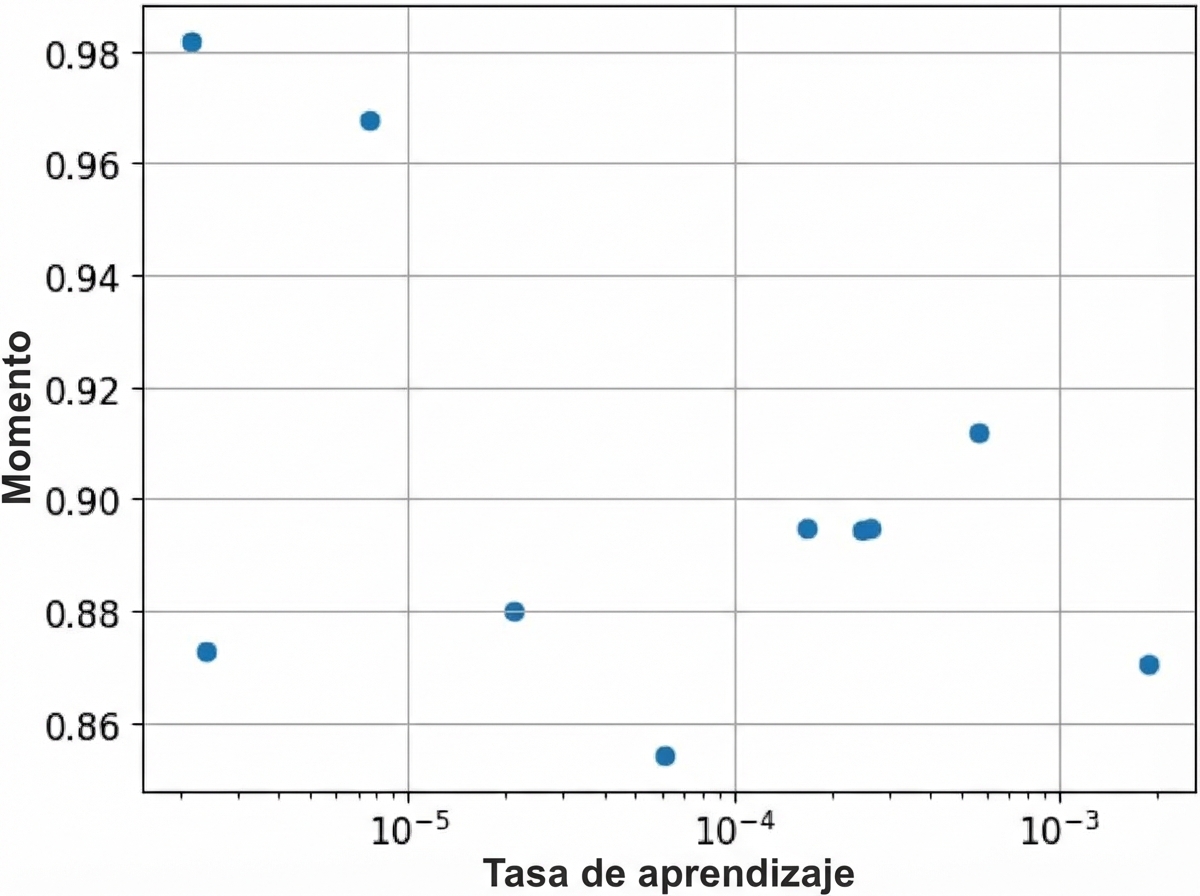

Step 3: fine-tune hyperparameters

- Grid search

    for factor in range(2, 6):
        lr = 10 ** -factor

- Random search

    import numpy as np
    factor = np.random.uniform(2, 6)
    lr = 10 ** -factor
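The two snippets above only generate candidate learning rates. The sketch below completes both loops under one assumption: `validation_score` is a hypothetical stand-in for "train the model with this learning rate and return the validation accuracy", built so that it peaks at `lr = 1e-3`. A real run would replace it with actual training and evaluation.

```python
import numpy as np

def validation_score(lr):
    """Hypothetical stand-in for training plus validation; peaks at lr = 1e-3."""
    return 1.0 - abs(np.log10(lr) + 3) / 10

# Grid search: evaluate a fixed set of learning rates (1e-2 down to 1e-5)
grid_results = {}
for factor in range(2, 6):
    lr = 10 ** -factor
    grid_results[lr] = validation_score(lr)
best_grid_lr = max(grid_results, key=grid_results.get)

# Random search: sample the exponent uniformly, so lr is spread evenly on a log scale
rng = np.random.default_rng(0)
random_results = {}
for _ in range(10):
    factor = rng.uniform(2, 6)
    lr = 10 ** -factor
    random_results[lr] = validation_score(lr)
best_random_lr = max(random_results, key=random_results.get)

print(best_grid_lr, best_random_lr)
```

Note that `range(2, 6)` covers the exponents 2 through 5, while `uniform(2, 6)` samples anywhere from 2 up to 6, so random search can try values between and beyond the grid points.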

Let's practice!
