Model evaluation

Generative AI Concepts

Daniel Tedesco

Data Lead, Google

Why evaluate at all?

Evaluating a model's performance and effectiveness:

- Measures progress

- Enables rigorous comparison between models

- Enables comparison with human performance

Evaluating generative AI

Quantitative metrics

- Discriminative model evaluation metrics

- Generative model-specific metrics

Human-centric metrics

- Comparison with human performance

- Intelligent evaluation

Discriminative model evaluation techniques

Evaluate performance on well-defined tasks

Advantages:

- Widely accepted and understood

- Easy to compute and compare

Disadvantages:

- Don't capture the subjective nature of generated content
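The slides don't name specific discriminative metrics; accuracy and F1 score are two common examples. The sketch below computes both by hand in plain Python on hypothetical binary labels, to show why these metrics are easy to compute and compare: each reduces a model's predictions to a single number against a fixed reference answer. Nothing in that number reflects the subjective quality of generated content.

```python
# Hypothetical labels for a binary classification task (1 = positive class).
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the reference labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


print(f"accuracy = {accuracy(y_true, y_pred):.3f}")
print(f"F1       = {f1_score(y_true, y_pred):.3f}")
```

Two models scored on the same labels can be ranked directly by these numbers, which is what makes such metrics convenient for well-defined tasks.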

Generative model-specific metrics

Tailored to specific generative tasks

Advantages:

- Detailed criteria, such as realism, diversity, and originality

- Many well-established metrics

Disadvantages:

- Miss many subjective elements

- Often don't generalize across tasks
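Diversity is one of the criteria listed above. As an illustration (the slides don't name this metric), distinct-n is one commonly used measure of text diversity: the number of unique n-grams divided by the total number of n-grams across a set of generated texts. The minimal sketch below assumes naive whitespace tokenization and uses hypothetical outputs.

```python
def distinct_n(texts, n=2):
    """Ratio of unique n-grams to total n-grams across all generated texts."""
    total = 0
    unique = set()
    for text in texts:
        tokens = text.lower().split()  # naive whitespace tokenization (assumption)
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    return len(unique) / total if total else 0.0


# Hypothetical model outputs.
repetitive = ["the cat sat on the mat", "the cat sat on the mat"]
varied = ["the cat sat on the mat", "a dog ran across the park"]

print(distinct_n(repetitive))
print(distinct_n(varied))
```

The sketch also shows the listed disadvantages: a high score says nothing about whether the text is fluent or relevant, and a token-based measure like this one does not carry over to images or audio.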

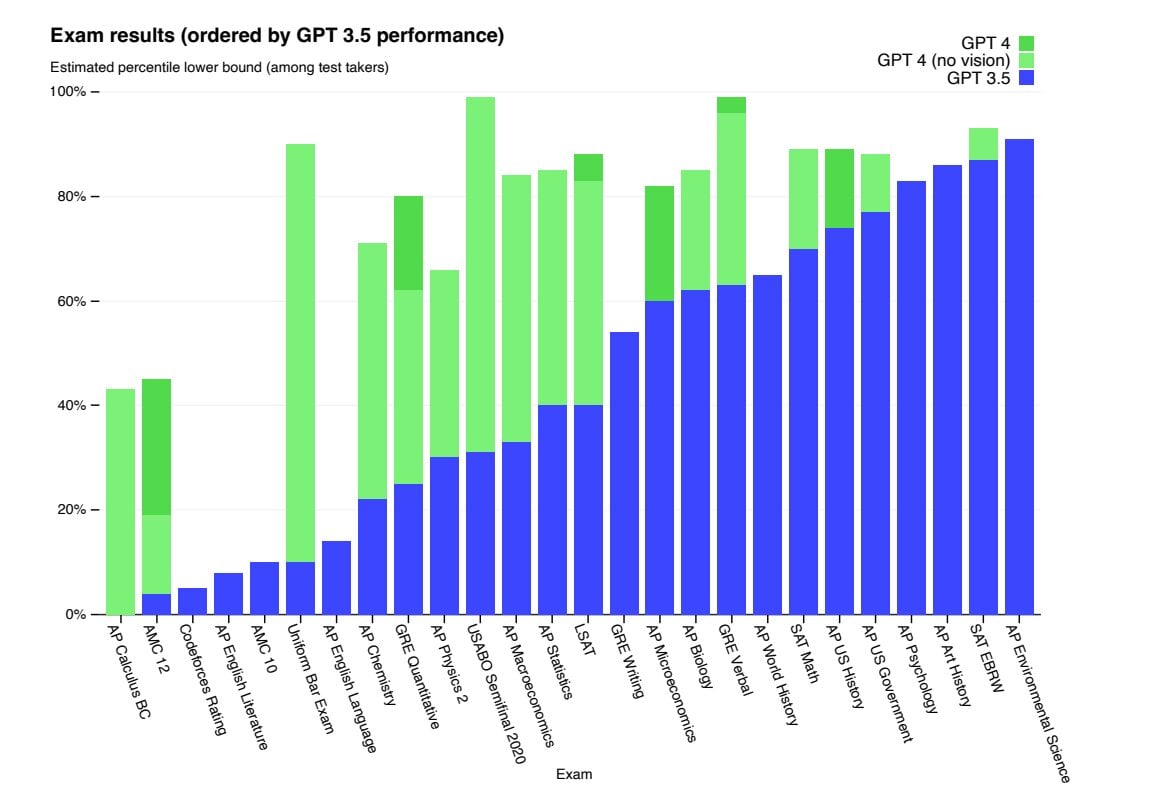

Comparison with human performance

Advantages:

- Benchmarks against human abilities

- Demonstrates practical application

Disadvantages:

- Can be an unfair comparison

Award-winning AIs

Competitions against humans

Standardized tests designed for humans

1 https://twitter.com/colostatefair/status/1565486317839863809, OpenAI

The gold standard

Intelligent evaluation by humans or other AIs

Advantages:

- Captures subjective aspects

Disadvantages:

- Slow, expensive, and hard to standardize

- Subject to human inconsistency and bias

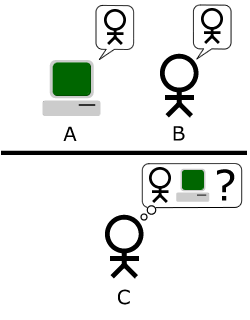

The classic Turing test

- Proposed by computer scientist Alan Turing

- A human evaluator judges AI-generated content

- The AI passes if the evaluator cannot tell it apart from a human

- But human behavior isn't always the ideal standard
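The pass criterion above ("the evaluator cannot tell the AI apart from a human") can be made concrete in several ways; Turing's original proposal does not fix a statistical test. One hypothetical operationalization, sketched below, runs many rounds, records whether the evaluator correctly identified the AI each time, and says the AI passes if the evaluator's hit rate is not significantly better than chance (50%) under a one-sided exact binomial test. The function names, the 0.05 threshold, and the round data are all assumptions for illustration.

```python
from math import comb


def binomial_tail(k, n, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def passes_turing_test(judgments, alpha=0.05):
    """Hypothetical pass rule.

    judgments: booleans, True when the evaluator correctly identified the AI.
    Returns (passed, hit_rate, p_value); the AI passes when the hit rate is
    not significantly above chance at significance level alpha.
    """
    if not judgments:
        raise ValueError("need at least one evaluation round")
    n = len(judgments)
    hits = sum(judgments)
    p_value = binomial_tail(hits, n)  # chance of doing this well by guessing
    return p_value >= alpha, hits / n, p_value


# Hypothetical results from 20 evaluation rounds each.
easy_to_spot = [True] * 17 + [False] * 3
hard_to_spot = [True] * 11 + [False] * 9

for name, rounds in [("easy_to_spot", easy_to_spot), ("hard_to_spot", hard_to_spot)]:
    passed, rate, p = passes_turing_test(rounds)
    print(f"{name}: hit rate {rate:.2f}, p = {p:.4f}, passes = {passed}")
```

A caveat of this design: failing to beat chance is not proof that the AI is indistinguishable, and with only a handful of rounds almost any AI would "pass", so the number of rounds matters as much as the threshold.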

Let's practice!
