Hyperparameter tuning

Supervised Learning with scikit-learn

George Boorman

Core Curriculum Manager

Ajuste de hiperparâmetros

Regressão ridge/lasso: como escolher

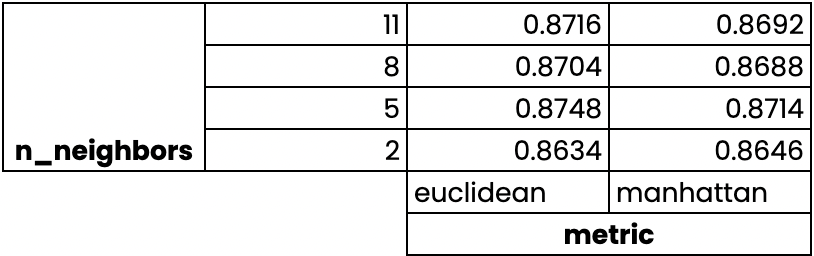

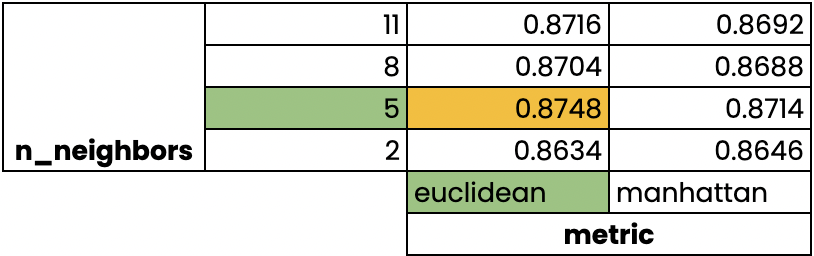

alphaKNN: como escolher

n_neighborsHiperparâmetros: parâmetros que especificamos antes de ajustar o modelo

- Como

alphaen_neighbors

- Como

Choosing the correct hyperparameters

Try many different hyperparameter values

Fit a model for each of them separately

See how well each one performs

Choose the best-performing values

This is called hyperparameter tuning

It is essential to use cross-validation to avoid overfitting to the test set

We can still split the data and perform cross-validation on the training set

We withhold the test set for the final evaluation
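
The steps above can be sketched as a plain loop. This is a minimal illustration, not the course's own code: the synthetic data from make_regression and the list candidate_alphas are assumptions chosen only so the example runs on its own.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, cross_val_score, train_test_split

# Synthetic regression data (an assumption; any X, y would do).
X, y = make_regression(n_samples=200, n_features=10, noise=15.0, random_state=0)

# Split first: the test set is held out and never used during tuning.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

kf = KFold(n_splits=5, shuffle=True, random_state=42)
candidate_alphas = [0.001, 0.01, 0.1, 1.0, 10.0]  # hypothetical values to try

# Fit each candidate separately and record its mean cross-validated R^2.
mean_scores = {}
for alpha in candidate_alphas:
    scores = cross_val_score(Ridge(alpha=alpha), X_train, y_train, cv=kf)
    mean_scores[alpha] = scores.mean()

# Choose the best-performing value.
best_alpha = max(mean_scores, key=mean_scores.get)
print(best_alpha, mean_scores[best_alpha])
```

Only X_train and y_train enter the loop, so the score on X_test remains an untouched estimate for the final evaluation.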

Grid search cross-validation
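
To make the idea of a grid concrete, the sketch below enumerates every combination of two hyperparameters and scores each one with cross-validation. The data and the specific grid values are assumptions for illustration; ParameterGrid is scikit-learn's helper for listing the combinations.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, ParameterGrid, cross_val_score

# Synthetic data (an assumption) so the example is self-contained.
X, y = make_regression(n_samples=150, n_features=8, noise=10.0, random_state=0)
kf = KFold(n_splits=5, shuffle=True, random_state=42)

# Every combination of the listed values is one cell of the grid.
grid = ParameterGrid({"alpha": [0.01, 0.1, 1.0], "solver": ["sag", "lsqr"]})

results = []
for params in grid:
    model = Ridge(**params)
    mean_score = cross_val_score(model, X, y, cv=kf).mean()
    results.append((params, mean_score))

# Print the score for each cell, then pick the cell with the highest mean score.
for params, mean_score in results:
    print(params, round(mean_score, 4))
best_params, best_score = max(results, key=lambda item: item[1])
```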

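GridSearchCV in scikit-learn

    import numpy as np
    from sklearn.linear_model import Ridge
    from sklearn.model_selection import GridSearchCV, KFold

    kf = KFold(n_splits=5, shuffle=True, random_state=42)
    # 10 evenly spaced alpha values between 0.0001 and 1
    param_grid = {"alpha": np.linspace(0.0001, 1, 10), "solver": ["sag", "lsqr"]}
    ridge = Ridge()
    ridge_cv = GridSearchCV(ridge, param_grid, cv=kf)
    # X_train and y_train come from an earlier train/test split
    ridge_cv.fit(X_train, y_train)
    print(ridge_cv.best_params_, ridge_cv.best_score_)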

{'alpha': 0.0001, 'solver': 'sag'}

0.7529912278705785
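
The slide's snippet relies on X_train and y_train defined elsewhere. A self-contained sketch, assuming synthetic data from make_regression, shows the same search end to end and how cv_results_ exposes one entry per candidate. The scores it prints will differ from the numbers above because the data is different.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV, KFold, train_test_split

# Synthetic data (an assumption) standing in for the course dataset.
X, y = make_regression(n_samples=200, n_features=10, noise=15.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

kf = KFold(n_splits=5, shuffle=True, random_state=42)
param_grid = {"alpha": np.linspace(0.0001, 1, 10), "solver": ["sag", "lsqr"]}

ridge_cv = GridSearchCV(Ridge(), param_grid, cv=kf)
ridge_cv.fit(X_train, y_train)

# 10 alphas x 2 solvers = 20 candidates, each scored on 5 folds.
n_candidates = len(ridge_cv.cv_results_["params"])
print(n_candidates)
print(ridge_cv.best_params_, ridge_cv.best_score_)
```

best_score_ is the mean cross-validated score of the winning candidate, so it matches the largest value in cv_results_["mean_test_score"].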

Limitations and an alternative approach

- 3-fold cross-validation, 1 hyperparameter, 10 total values = 30 fits

- 10-fold cross-validation, 3 hyperparameters, 30 total values = 900 fits
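
For an exhaustive grid specifically, the number of candidates is the product of the number of values per hyperparameter, so the cost can grow faster than the totals above suggest. The sketch below counts cross-validation fits under that assumption; n_grid_fits is a hypothetical helper written for this example, not a scikit-learn function, and the split of 10 values per hyperparameter is also an assumption.

```python
from math import prod


def n_grid_fits(n_folds, values_per_hyperparameter):
    """Cross-validation fits for an exhaustive grid (final refit not counted)."""
    return n_folds * prod(values_per_hyperparameter)


# 3 folds, one hyperparameter with 10 values.
print(n_grid_fits(3, [10]))  # 30

# 10 folds, three hyperparameters with 10 values each (every combination tried).
print(n_grid_fits(10, [10, 10, 10]))  # 10000
```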

RandomizedSearchCV

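    import numpy as np
    from sklearn.linear_model import Ridge
    from sklearn.model_selection import KFold, RandomizedSearchCV

    kf = KFold(n_splits=5, shuffle=True, random_state=42)
    # 10 evenly spaced alpha values between 0.0001 and 1
    param_grid = {"alpha": np.linspace(0.0001, 1, 10), "solver": ["sag", "lsqr"]}
    ridge = Ridge()
    # n_iter sets how many hyperparameter combinations are sampled and evaluated
    ridge_cv = RandomizedSearchCV(ridge, param_grid, cv=kf, n_iter=2)
    # X_train and y_train come from an earlier train/test split
    ridge_cv.fit(X_train, y_train)
    print(ridge_cv.best_params_, ridge_cv.best_score_)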

{'solver': 'sag', 'alpha': 0.0001}

0.7529912278705785
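
To see that a randomized search evaluates only n_iter combinations rather than the full grid, here is a small sketch on synthetic data. The data, the value n_iter=5, and the random_state passed to the search are assumptions made for a reproducible illustration.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, RandomizedSearchCV

# Synthetic data (an assumption) so the example runs on its own.
X, y = make_regression(n_samples=200, n_features=10, noise=15.0, random_state=0)
kf = KFold(n_splits=5, shuffle=True, random_state=42)

# The full grid would contain 10 x 2 = 20 combinations.
param_distributions = {
    "alpha": np.linspace(0.0001, 1, 10),
    "solver": ["sag", "lsqr"],
}

# Only n_iter combinations are drawn at random and cross-validated.
search = RandomizedSearchCV(
    Ridge(), param_distributions, cv=kf, n_iter=5, random_state=42
)
search.fit(X, y)

sampled = search.cv_results_["params"]
print(len(sampled))  # 5
print(search.best_params_, search.best_score_)
```

The trade-off is that the best combination in the full grid may not be among those sampled, in exchange for far fewer fits.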

Evaluating on the test set

    test_score = ridge_cv.score(X_test, y_test)
    print(test_score)

0.7564731534089224
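
With the default refit=True, the search object refits the best configuration on the whole training set, and its score method evaluates that refitted model. The sketch below, on synthetic data (an assumption), checks this by computing R^2 by hand from best_estimator_.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.metrics import r2_score
from sklearn.model_selection import GridSearchCV, KFold, train_test_split

# Synthetic data (an assumption) standing in for the course dataset.
X, y = make_regression(n_samples=200, n_features=10, noise=15.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

kf = KFold(n_splits=5, shuffle=True, random_state=42)
ridge_cv = GridSearchCV(Ridge(), {"alpha": [0.01, 0.1, 1.0, 10.0]}, cv=kf)
ridge_cv.fit(X_train, y_train)  # tuning uses the training set only

# Score of the refitted best model on the held-out test set.
test_score = ridge_cv.score(X_test, y_test)

# The same quantity computed explicitly from the best estimator's predictions.
manual_r2 = r2_score(y_test, ridge_cv.best_estimator_.predict(X_test))
print(test_score, manual_r2)
```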

Let's practice!
