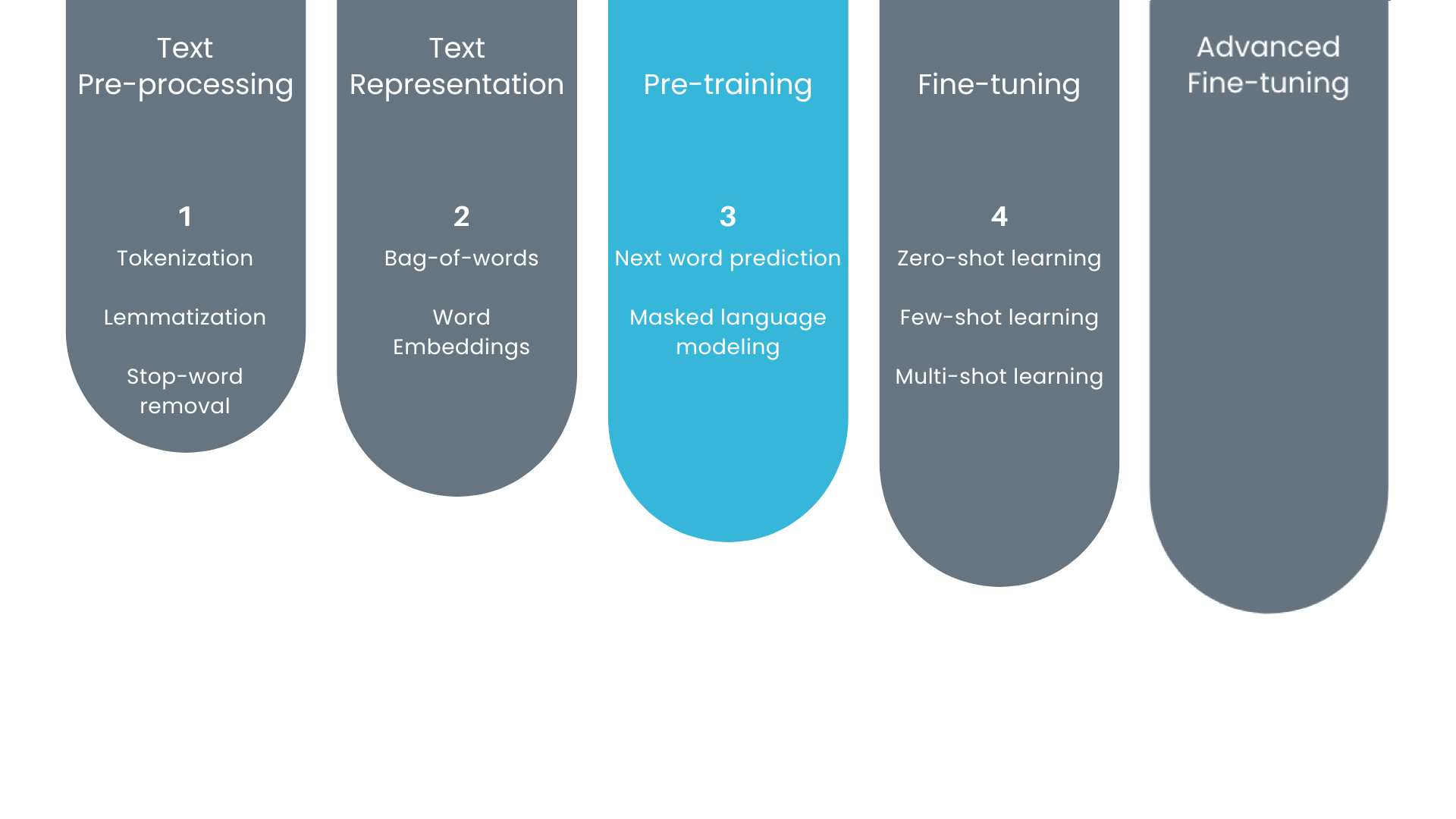

Building blocks to train LLMs

Large Language Models (LLMs) Concepts

Vidhi Chugh

AI strategist and ethicist

Where are we?

Generative pre-training

Trained using generative pre-training

- Input data: text tokens

- Trained to predict the tokens within the dataset

- Types:

  - Next word prediction

  - Masked language modeling
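
To make "text tokens" concrete, here is a minimal sketch of turning a sentence into tokens and integer ids. It assumes simple whitespace splitting, which is a simplification: production LLMs typically use subword tokenizers. The helper names `tokenize` and `build_vocab` are hypothetical and chosen only for illustration.

```python
def tokenize(text):
    """Split text into lowercase word tokens (a simplification of real tokenizers)."""
    # Separate the final period so it becomes its own token.
    return text.lower().replace(".", " .").split()


def build_vocab(tokens):
    """Assign each distinct token an integer id, in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


text = "The quick brown fox jumps over the lazy dog."
tokens = tokenize(text)
vocab = build_vocab(tokens)
token_ids = [vocab[t] for t in tokens]

print(tokens)
print(token_ids)
```

Note that "the" appears twice in the sentence and maps to the same id both times; the model sees these ids, not the raw characters.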

Next word prediction

- Supervised learning technique

- Model trained on input-output pairs

- Predicts the next word and generates coherent text

- Captures the dependencies between words

- Training data

  - Pairs of input and output examples

Training data for next word prediction

| Input | Output |
| --- | --- |
| The quick brown | fox |
| The quick brown fox | jumps |
| The quick brown fox jumps | over |
| The quick brown fox jumps over | the |
| The quick brown fox jumps over the | lazy |
| The quick brown fox jumps over the lazy | dog |
| The quick brown fox jumps over the lazy dog. | |
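
Input-output pairs like the ones above can be generated mechanically from a single sentence. The sketch below is one possible way to do it, not a description of any particular training pipeline. The function name `next_word_pairs` and the `min_context` parameter are assumptions for illustration; `min_context=3` simply mirrors the three-word first input shown above.

```python
def next_word_pairs(sentence, min_context=3):
    """Build (input, output) pairs: each growing prefix predicts the word after it."""
    # Drop the final period so the last target is a plain word.
    words = sentence.rstrip(".").split()
    pairs = []
    for i in range(min_context, len(words)):
        context = " ".join(words[:i])  # everything seen so far
        target = words[i]              # the word the model should predict
        pairs.append((context, target))
    return pairs


pairs = next_word_pairs("The quick brown fox jumps over the lazy dog.")
for context, target in pairs:
    print(f"{context!r} -> {target!r}")
```

A nine-word sentence yields six pairs here, so a large text corpus supplies a very large number of training examples without any manual labeling.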

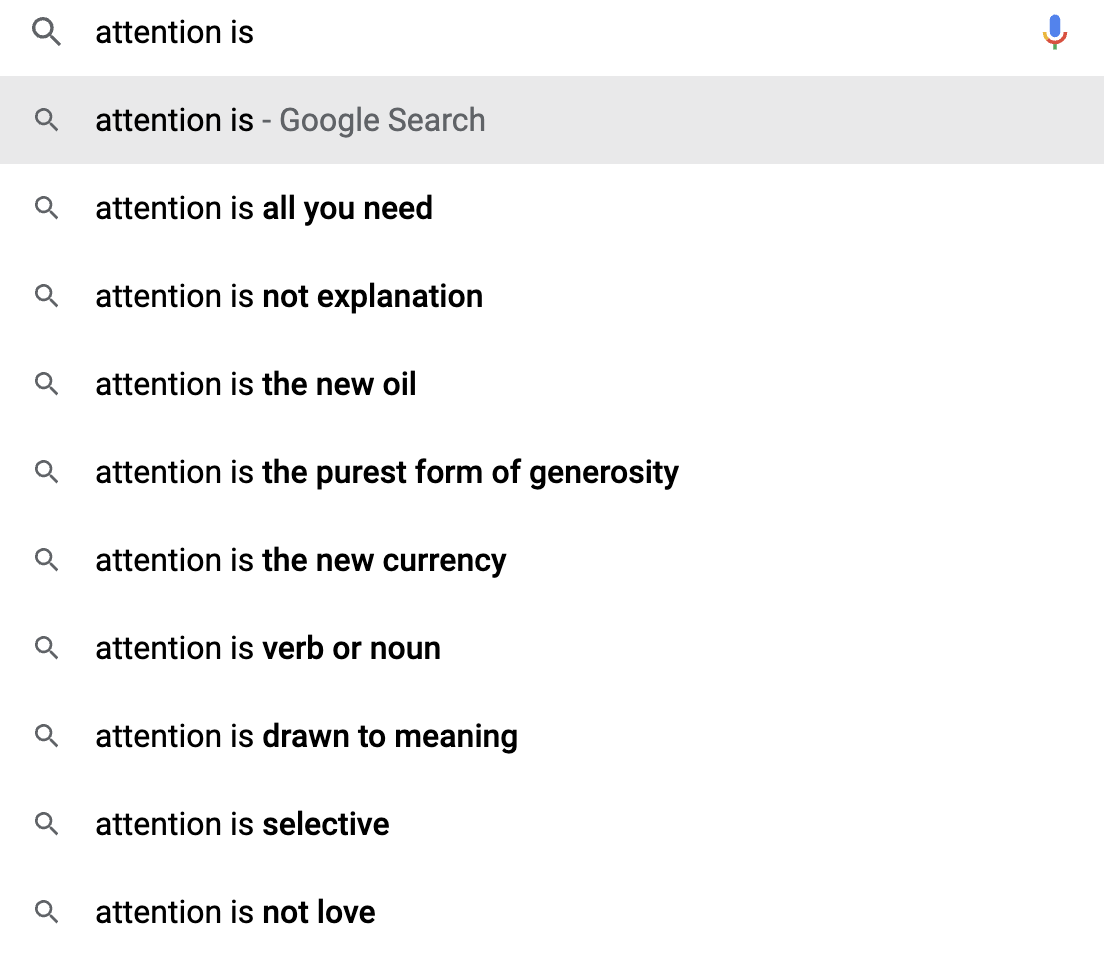

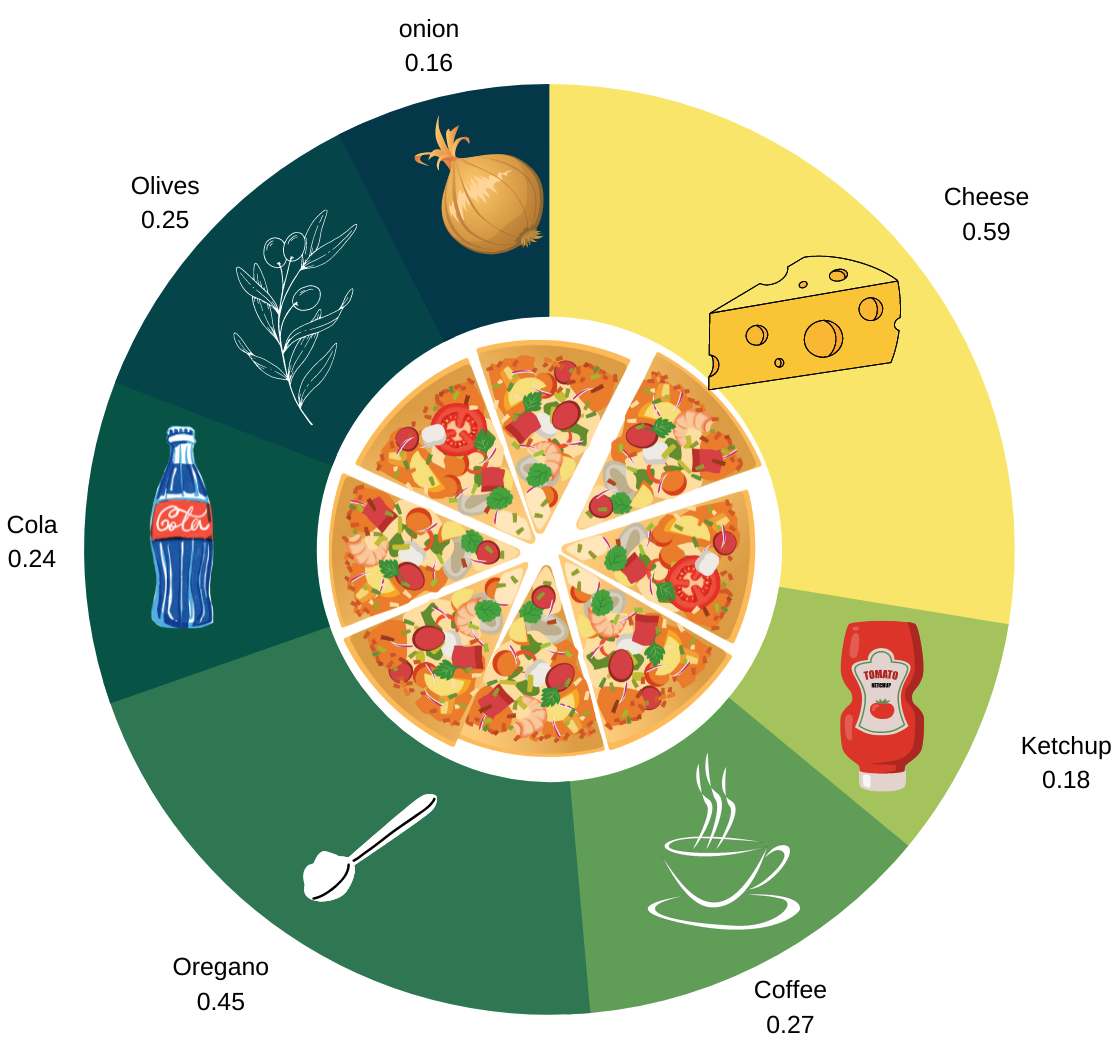

Which word is most associated with pizza?

- More examples = better predictions

- Example:

  - I love to eat pizza with _ _ _ _ _ _

  - Cheese is more related to pizza than any other option
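
The idea that more examples lead to better predictions can be illustrated with simple counting. This is a rough sketch only: real LLMs learn these associations with neural networks rather than explicit counts, and the toy corpus below is invented purely for illustration. The function name `next_word_counts` is likewise hypothetical.

```python
from collections import Counter

# Hypothetical toy corpus, invented purely for illustration.
corpus = [
    "I love to eat pizza with cheese",
    "I love to eat pizza with cheese",
    "I love to eat pizza with mushrooms",
    "I love to eat pasta with sauce",
    "We ordered pizza with cheese",
]


def next_word_counts(sentences, context):
    """Count which word follows `context` across all sentences."""
    context_words = context.split()
    n = len(context_words)
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        # Slide a window over the sentence, leaving room for one following word.
        for i in range(len(words) - n):
            if words[i:i + n] == context_words:
                counts[words[i + n]] += 1
    return counts


counts = next_word_counts(corpus, "pizza with")
total = sum(counts.values())
for word, count in counts.most_common():
    print(f"{word}: {count / total:.2f}")
```

In this toy corpus "cheese" follows "pizza with" in three of four occurrences, so it is the most likely completion. With more sentences, the estimated proportions become more reliable.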

Masked language modeling

Hides a selected word

The trained model predicts the masked word

Original text: “The quick brown fox jumps over the lazy dog.”

Masked text: “The quick [MASK] fox jumps over the lazy dog.”

Objective: predict the missing word

Based on what it learned from the training data
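
Creating a masked training example can be sketched in a few lines. This assumes whitespace splitting and a literal `[MASK]` placeholder; the function name `mask_word` and its parameters are hypothetical. Real masked language models usually mask tokens chosen at random and operate on subword tokens.

```python
def mask_word(sentence, index, mask_token="[MASK]"):
    """Hide the word at `index`; return (masked_sentence, hidden_word)."""
    words = sentence.split()
    target = words[index]  # the word the model must recover
    masked = words[:index] + [mask_token] + words[index + 1:]
    return " ".join(masked), target


masked_text, target = mask_word("The quick brown fox jumps over the lazy dog.", 2)
print(masked_text)  # The quick [MASK] fox jumps over the lazy dog.
print(target)       # brown
```

Unlike next word prediction, the model here sees context on both sides of the hidden word. Because the sketch splits on whitespace, punctuation stays attached to its neighboring word.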

Let's practice!
