Learning techniques

Large Language Models (LLMs) Concepts

Vidhi Chugh

AI strategist and ethicist

Where are we?

How to overcome data limitations

- Fine-tuning: training a pre-trained model for a specific task

- But what if there is almost no labeled data?

- N-shot learning: zero-shot, few-shot, and multi-shot

Transfer learning

- Learn from one task and transfer the knowledge to related tasks

- Example: transferring piano knowledge to the guitar

  - Reading sheet music

  - Understanding rhythm

  - Grasping musical concepts

- N-shot learning

  - Zero-shot: no task-specific data

  - Few-shot: a small amount of task-specific data

  - Multi-shot: more training data
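The three n-shot settings listed above differ mainly in how many task-specific examples the model gets. The slides do not say how those examples reach the model. As a minimal sketch, assume they are placed directly in the prompt at inference time, which is one common way to use n-shot learning with LLMs. The helper name `build_n_shot_prompt`, the sentiment task, and the example texts are all hypothetical.

```python
from typing import List, Tuple


def build_n_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble a prompt containing len(examples) worked examples before the query.

    An empty list gives a zero-shot prompt; a handful of pairs gives a
    few-shot prompt; a longer list corresponds to the multi-shot setting.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Label: {label}")
        lines.append("")
    # The query is left unlabeled so the model completes the label.
    lines.append(f"Text: {query}")
    lines.append("Label:")
    return "\n".join(lines)


# Illustrative task and data (assumptions, not from the slides).
instruction = "Classify the sentiment of the text as positive or negative."
examples = [
    ("I loved this film.", "positive"),
    ("The plot was dull.", "negative"),
]
query = "The music was wonderful."

zero_shot = build_n_shot_prompt(instruction, [], query)
few_shot = build_n_shot_prompt(instruction, examples, query)

print(zero_shot)
print("-" * 40)
print(few_shot)
```

The only difference between the two prompts is the block of labeled examples. In the zero-shot case the model has to rely entirely on its existing language understanding.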

Zero-shot learning

- No task-specific training

- Uses language understanding and context

- Generalizes without prior examples

1 Freepik

Few-shot learning

- Learn a new task from a few examples

- One-shot learning: fine-tuning based on a single example

- Uses prior knowledge to answer new questions
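To make "prior knowledge plus a few examples" concrete, here is a toy sketch. It is not the fine-tuning procedure the slide mentions. Instead, it keeps a feature extractor frozen as a stand-in for a pre-trained model and fits only a nearest-centroid classifier on two examples per class. The function `pretrained_features`, its cue words, and the sample texts are hypothetical.

```python
import re
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Vector = List[float]


def pretrained_features(text: str) -> Vector:
    """Hypothetical frozen feature extractor standing in for a pre-trained model.

    It only counts a few cue words; a real model would produce learned embeddings.
    """
    words = re.findall(r"[a-z]+", text.lower())
    cues = ["great", "love", "bad", "boring"]
    return [float(words.count(cue)) for cue in cues]


def fit_centroids(
    examples: List[Tuple[str, str]],
    featurize: Callable[[str], Vector],
) -> Dict[str, Vector]:
    """Average the feature vectors of the few labeled examples for each class."""
    grouped: Dict[str, List[Vector]] = defaultdict(list)
    for text, label in examples:
        grouped[label].append(featurize(text))
    return {
        label: [sum(column) / len(vectors) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def predict(
    text: str,
    centroids: Dict[str, Vector],
    featurize: Callable[[str], Vector],
) -> str:
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    vector = featurize(text)

    def squared_distance(centroid: Vector) -> float:
        return sum((a - b) ** 2 for a, b in zip(vector, centroid))

    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Few-shot setting: only two labeled examples per class (illustrative data).
few_shot_examples = [
    ("I love this great phone", "positive"),
    ("A great camera", "positive"),
    ("Bad battery and boring design", "negative"),
    ("The screen is bad", "negative"),
]
centroids = fit_centroids(few_shot_examples, pretrained_features)

print(predict("I love it", centroids, pretrained_features))
print(predict("Boring and bad speakers", centroids, pretrained_features))
```

Because the feature extractor is never updated, the four labeled examples only have to position the class centroids. This is why so little task-specific data can be enough when the prior knowledge is good.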

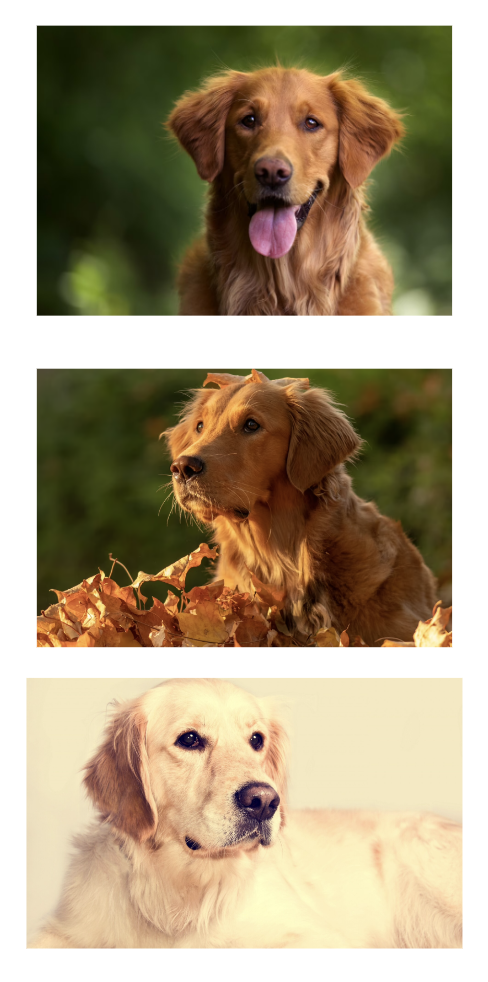

Multi-shot learning

- Requires more examples than few-shot

- Previous tasks + new examples

- Example: a model trained on Golden Retrievers

Multi-shot learning

- Model output: Labrador Retriever

- Saves time on data collection and labeling

- Does not compromise accuracy

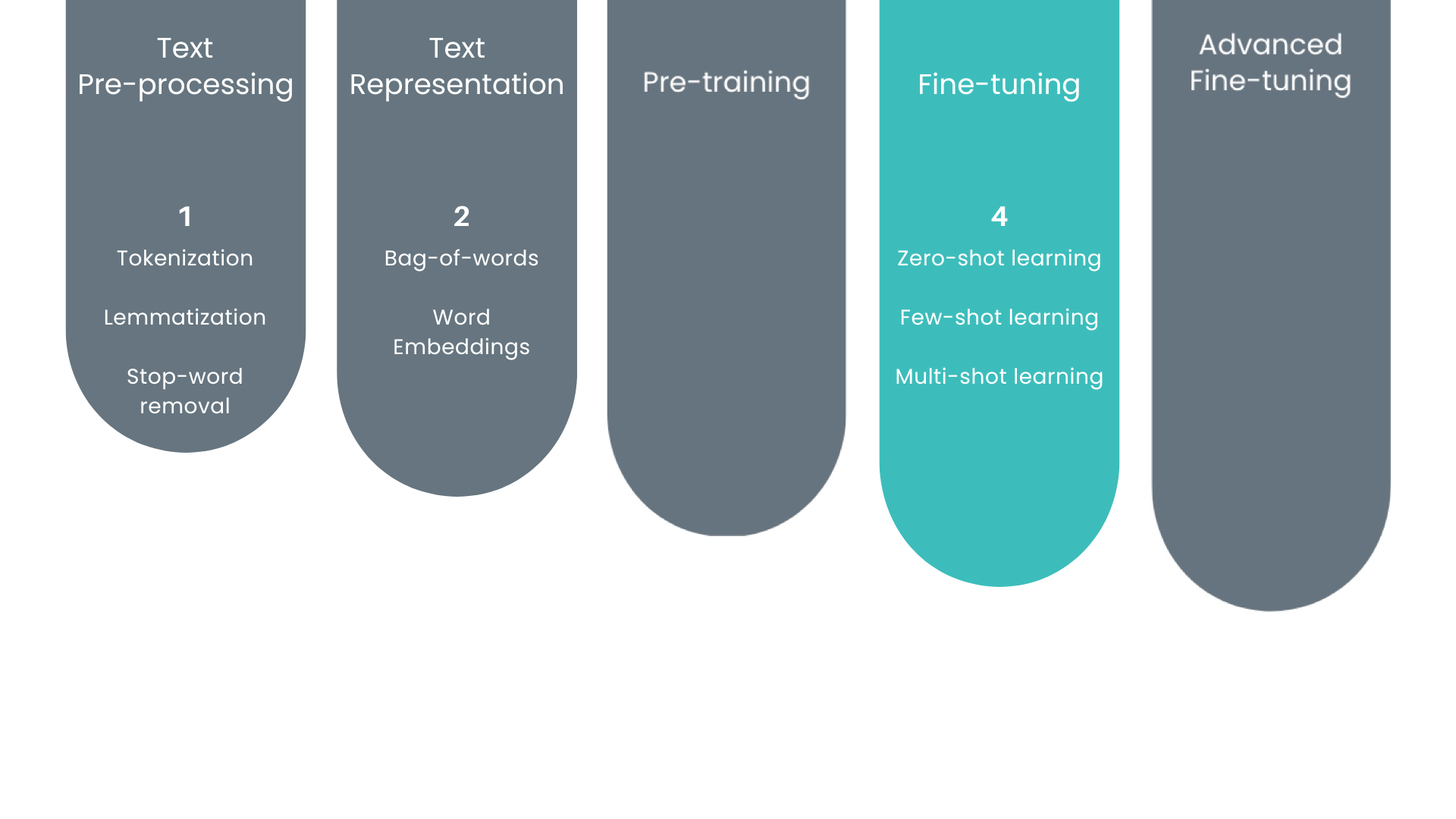

Building blocks so far

- Data preparation workflow

- Fine-tuning

- N-shot learning techniques

- Next step: pre-training

Let's practice!
