Using pre-trained LLMs

Introduction to LLMs in Python

Jasmin Ludolf

Senior Data Science Content Developer, DataCamp

Language understanding

Language generation

Text generation

from transformers import pipeline

generator = pipeline(task="text-generation", model="distilgpt2")

prompt = "The Gion neighborhood in Kyoto is famous for"

output = generator(prompt, max_length=100, pad_token_id=generator.tokenizer.eos_token_id)

- Coherent

- Meaningful

- Human-like text

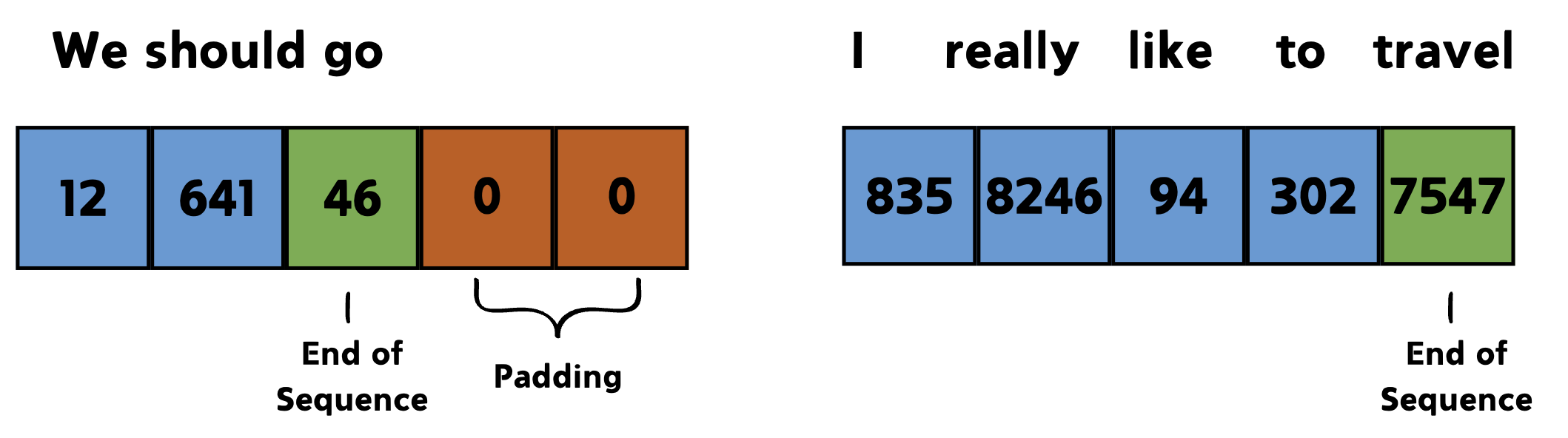

eos_token_id: end-of-sequence token ID

Text generation

- pad_token_id: fills in extra space up to max_length

- Padding: adding tokens

- Setting it to generator.tokenizer.eos_token_id marks the end of meaningful text, learned through training

- Model generates until it reaches max_length or produces the end-of-sequence token (used here as pad_token_id)

- truncation = True: shortens input that is too long for the model
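The padding and truncation ideas above can be sketched without loading a model. This is a toy illustration only: the helper pad_to_length is hypothetical, the input token IDs are made up, and right-padding is a choice made for this sketch rather than a description of what the pipeline does internally. The value 50256 is the end-of-sequence ID in GPT-2 family tokenizers, which distilgpt2 uses.

```python
EOS_TOKEN_ID = 50256  # end-of-sequence ID in GPT-2 family tokenizers


def pad_to_length(token_ids, max_length, pad_token_id=EOS_TOKEN_ID):
    """Truncate or right-pad a list of token IDs to exactly max_length (toy helper)."""
    clipped = token_ids[:max_length]  # truncation: drop anything past max_length
    padding = [pad_token_id] * (max_length - len(clipped))  # fill the extra space
    return clipped + padding


# Made-up token IDs, for illustration only
short_ids = pad_to_length([464, 402, 295], max_length=6)
long_ids = pad_to_length(list(range(10)), max_length=6)

print(short_ids)
print(long_ids)
```

A short sequence is filled with the end-of-sequence ID, while a long one is cut at max_length and receives no padding.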

Text generation

generator = pipeline(task="text-generation", model="distilgpt2")

prompt = "The Gion neighborhood in Kyoto is famous for"

output = generator(prompt, max_length=100, pad_token_id=generator.tokenizer.eos_token_id)

print(output[0]["generated_text"])

The Gion neighborhood in Kyoto is famous for its many colorful green forests, such as the Red Hill, the Red River and the Red River. The Gion neighborhood is home to the world's tallest trees.

- Output may be suboptimal if prompt is vague

Guiding the output

generator = pipeline(task="text-generation", model="distilgpt2")

review = "This book was great. I enjoyed the plot twist in Chapter 10."

response = "Dear reader, thank you for your review."

prompt = f"Book review:\n{review}\n\nBook shop response to the review:\n{response}"

output = generator(prompt, max_length=100, pad_token_id=generator.tokenizer.eos_token_id)

print(output[0]["generated_text"])

Dear reader, thank you for your review. We'd like to thank you for your reading!
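By default, the text-generation pipeline returns the prompt followed by the continuation, so the reply usually has to be separated from the review that preceded it. The sketch below shows one way to do that with plain string handling. The helpers build_prompt and extract_response are hypothetical names, and the generated text is simulated by appending the continuation shown above to the prompt rather than by running the model.

```python
RESPONSE_HEADER = "Book shop response to the review:\n"


def build_prompt(review, response_start):
    """Assemble the guided prompt: the review, then the start of the reply."""
    return f"Book review:\n{review}\n\n{RESPONSE_HEADER}{response_start}"


def extract_response(generated_text):
    """Return only the text after the response header, if the header is present."""
    _, found, reply = generated_text.partition(RESPONSE_HEADER)
    return reply if found else generated_text


review = "This book was great. I enjoyed the plot twist in Chapter 10."
response_start = "Dear reader, thank you for your review."
prompt = build_prompt(review, response_start)

# Simulated model output: the prompt plus the continuation from the slide
generated_text = prompt + " We'd like to thank you for your reading!"

reply = extract_response(generated_text)
print(reply)
```

Starting the response inside the prompt steers the model toward the tone of a reply, and splitting on the header keeps the review out of the final text.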

Language translation

- Hugging Face has a complete list of translation tasks and models

translator = pipeline(task="translation_en_to_es", model="Helsinki-NLP/opus-mt-en-es")

text = "Walking amid Gion's Machiya wooden houses was a mesmerizing experience."

output = translator(text, clean_up_tokenization_spaces=True)

print(output[0]["translation_text"])

Caminar entre las casas de madera Machiya de Gion fue una experiencia fascinante.
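The same call pattern extends to several sentences. The sketch below wraps it in a hypothetical helper, translate_all, that accepts any callable returning a list of dicts with a "translation_text" key, as the translator above does. To keep the example runnable without downloading a model, it uses stub_translator, a stand-in that returns the canned translation from this slide and is not a real model.

```python
def translate_all(translator, texts):
    """Translate each text in turn and collect the translated strings."""
    results = []
    for text in texts:
        output = translator(text, clean_up_tokenization_spaces=True)
        results.append(output[0]["translation_text"])
    return results


def stub_translator(text, **kwargs):
    """Stand-in for a translation pipeline: canned output, no model involved."""
    canned = {
        "Walking amid Gion's Machiya wooden houses was a mesmerizing experience.":
            "Caminar entre las casas de madera Machiya de Gion fue una experiencia fascinante.",
    }
    # Unknown inputs are echoed back unchanged in this stub
    return [{"translation_text": canned.get(text, text)}]


texts = ["Walking amid Gion's Machiya wooden houses was a mesmerizing experience."]
translations = translate_all(stub_translator, texts)
print(translations[0])
```

With the real pipeline, passing translator in place of stub_translator should work the same way, assuming the transformers library and the model weights are available.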

Let's practice!
