Getting started with Databricks SQL

Databricks Concepts

Kevin Barlow

Data Practitioner

SQL Compute vs. General Compute

Designing compute clusters for data science or data engineering workloads...

import pyspark.sql.functions as F

spark_df = (spark

.read

.table('user_table'))

spark_df = (spark_df

.withColumn('score',

F.flatten(...))

)

is inherently different than designing compute for SQL workloads

SELECT *

FROM user_table u

LEFT JOIN product_use p

ON u.userId = p.userId

WHERE country = 'USA'

AND utilization >= 0.6

SQL Warehouse

SQL Warehouse

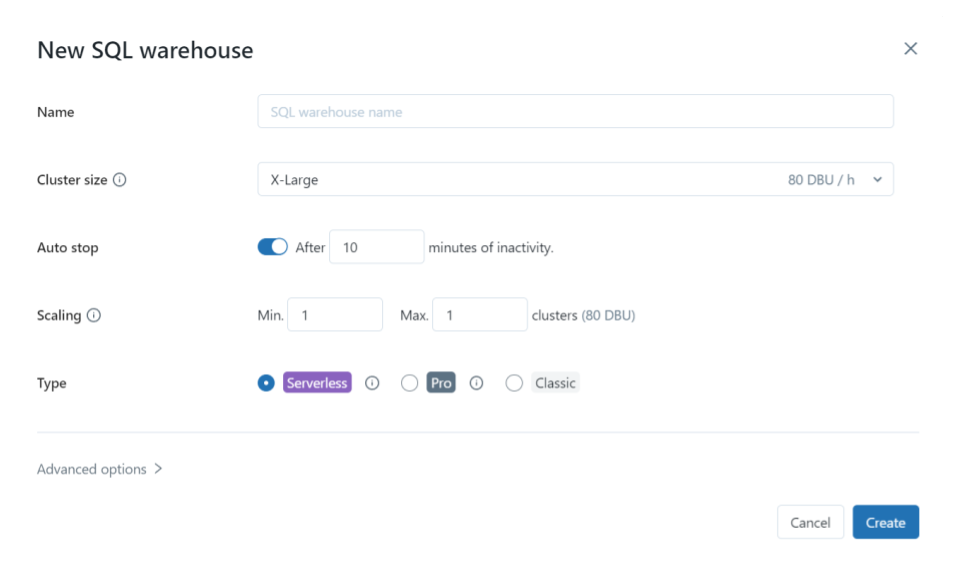

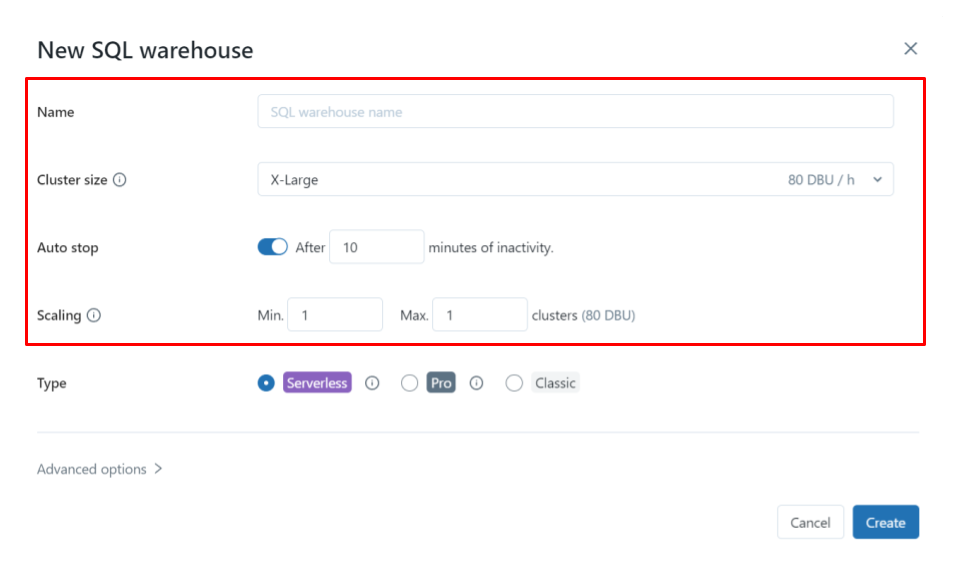

SQL Warehouse Configuration Options

- Cluster Name

- Cluster Size (S, M, L, etc.)

- Scaling behavior

SQL Warehouse

SQL Warehouse Configuration Options

- Cluster Name

- Cluster Size (S, M, L, etc.)

- Scaling behavior

- Cluster Type

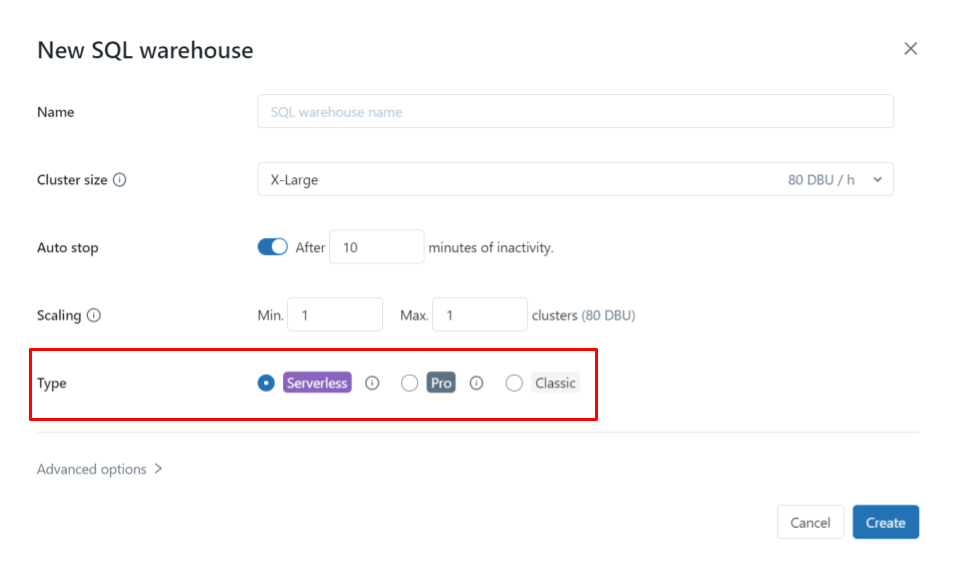

SQL Warehouse Types

Different types provide different benefits

Classic

- Most basic SQL compute

- In customer cloud

Pro

- More advanced features than Classic

- In customer cloud

Serverless

- Cutting edge features

- In Databricks cloud

- Most cost performant

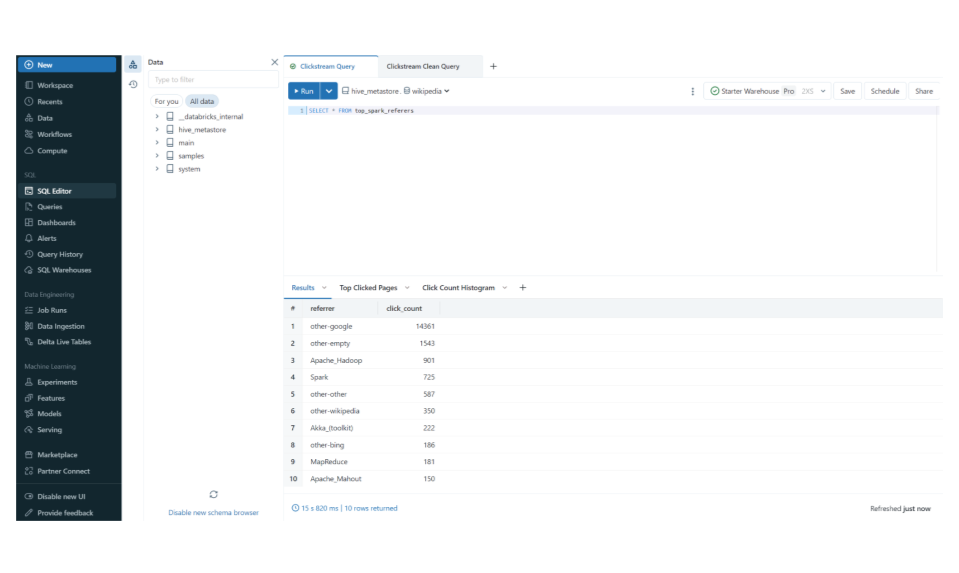

SQL Editor

Common SQL Commands

COPY INTO

- Grab raw data and put into Delta

- The Extract of ETL

COPY INTO my_table

FROM '/path/to/files'

FILEFORMAT = <format>

FORMAT_OPTIONS ('mergeSchema' = 'true')

COPY_OPTIONS ('mergeSchema' = 'true');

CREATE <entity> AS

- Create a Table or View

- The Transform in ETL

CREATE TABLE events

USING DELTA

AS (

SELECT *

FROM raw_events

WHERE ...

)

Let's practice!

Databricks Concepts