Data Engineering foundations in Databricks

Databricks Concepts

Kevin Barlow

Data Practitioner

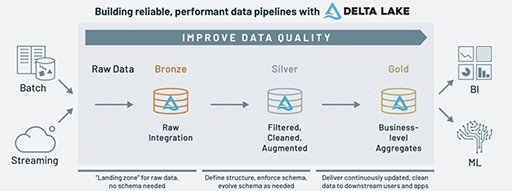

Medallion architecture

Reading data

Spark is a highly flexible framework that can read from many different data sources and formats.

Common data sources and types:

- Delta tables

- File formats (CSV, JSON, Parquet, XML)

- Databases (MySQL, Postgres, EDW)

- Streaming data

- Images / Videos


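```python
# Delta table registered in the catalog
df_delta = spark.read.table(table_name)

# CSV files matching a glob pattern
df_csv = spark.read.format("csv").load("*.csv")

# Postgres table over JDBC; driver, url, table, user and password are
# placeholders (for Postgres the driver class is "org.postgresql.Driver")
df_pg = (spark.read.format("jdbc")
    .option("driver", driver)
    .option("url", url)
    .option("dbtable", table)
    .option("user", user)
    .option("password", password)
    .load())
```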
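The CSV and Postgres reads above follow the same builder pattern: `format()` and `option()` record settings and return the reader, and only `load()` does the work. The toy class below is a hypothetical illustration of that pattern in plain Python, not Spark's `DataFrameReader`. To stay self-contained it takes raw text instead of a path and supports only CSV (with a `sep` option, named after Spark's CSV option) and line-delimited JSON.

```python
import csv
import io
import json


class ToyReader:
    """Hypothetical stand-in for spark.read, illustrating the builder pattern."""

    def __init__(self):
        self._format = None
        self._options = {}

    def format(self, source):
        # Record the source type and return self so calls can be chained.
        self._format = source
        return self

    def option(self, key, value):
        # Options are only collected here; nothing is read until load().
        self._options[key] = value
        return self

    def load(self, text):
        # Takes raw text rather than a path to keep the sketch self-contained.
        if self._format == "csv":
            sep = self._options.get("sep", ",")
            return list(csv.reader(io.StringIO(text), delimiter=sep))
        if self._format == "json":
            # One JSON object per line (JSON Lines).
            return [json.loads(line) for line in text.splitlines() if line.strip()]
        raise ValueError(f"unsupported format: {self._format!r}")


rows = ToyReader().format("csv").option("sep", ";").load("id;name\n1;John Data\n")
print(rows)  # [['id', 'name'], ['1', 'John Data']]
```

Deferring all work to `load()` is what lets the same chain of calls target very different sources.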

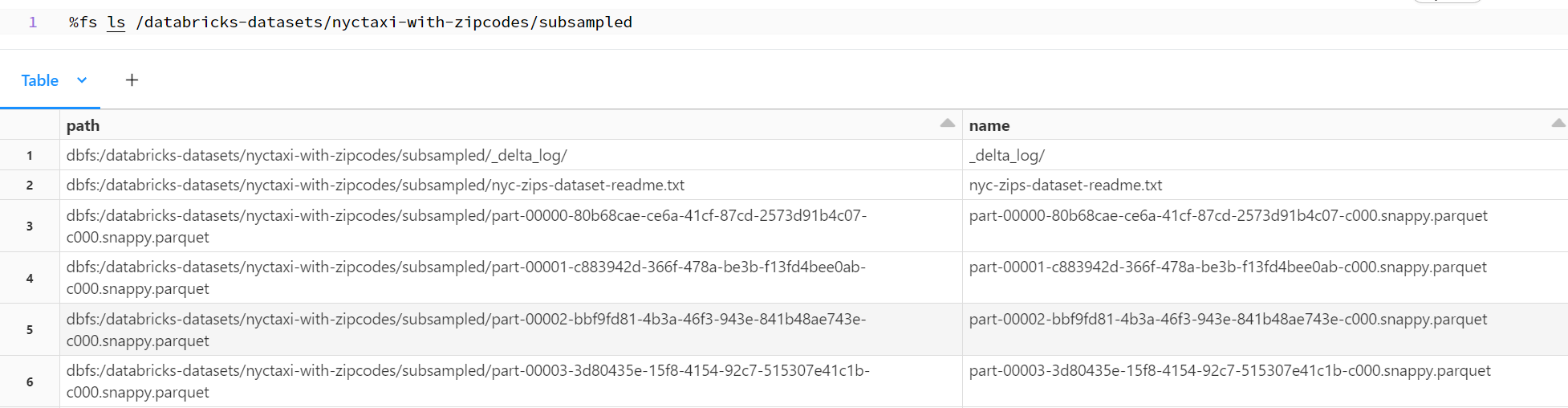

Structure of a Delta table

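A Delta table adds table-like qualities, such as ACID transactions and versioning, on top of an open file format.

- Feels like a table when reading

- Underlying files remain directly accessible: Parquet data files plus a JSON transaction log (`_delta_log`)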
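To make the Parquet-plus-JSON structure concrete, here is a simplified sketch in plain Python of how a reader can work out which Parquet files make up the current version of a Delta table: it replays the JSON commit files in `_delta_log` in order, adding paths from `add` actions and dropping paths from `remove` actions. It assumes a tiny hand-built log with stripped-down actions (real actions carry more fields, and real tables also use Parquet checkpoints, which this sketch ignores). The helpers `active_files` and `write_commit` are hypothetical.

```python
import json
import os
import tempfile


def active_files(table_path):
    """Return the data files in the latest version of a Delta table by
    replaying its JSON commits (simplified: ignores checkpoints)."""
    log_dir = os.path.join(table_path, "_delta_log")
    # Commit files are named by zero-padded version, so sorting gives commit order.
    commits = sorted(f for f in os.listdir(log_dir) if f.endswith(".json"))
    files = set()
    for commit in commits:
        with open(os.path.join(log_dir, commit)) as fh:
            for line in fh:  # one JSON action per line
                action = json.loads(line)
                if "add" in action:
                    files.add(action["add"]["path"])
                elif "remove" in action:
                    files.discard(action["remove"]["path"])
    return sorted(files)


def write_commit(log_dir, version, actions):
    """Hypothetical helper: write one commit as newline-delimited JSON."""
    name = f"{version:020d}.json"
    with open(os.path.join(log_dir, name), "w") as fh:
        for action in actions:
            fh.write(json.dumps(action) + "\n")


# Build a tiny fake table: version 0 adds two files, version 1 replaces one.
demo = tempfile.mkdtemp()
log = os.path.join(demo, "_delta_log")
os.makedirs(log)
write_commit(log, 0, [{"add": {"path": "part-0.parquet"}},
                      {"add": {"path": "part-1.parquet"}}])
write_commit(log, 1, [{"remove": {"path": "part-0.parquet"}},
                      {"add": {"path": "part-2.parquet"}}])
print(active_files(demo))  # ['part-1.parquet', 'part-2.parquet']
```

Because old Parquet files are only marked as removed rather than deleted, replaying fewer commits would reconstruct an earlier version of the table.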

Explaining the Delta Lake structure

DataFrames

DataFrames are two-dimensional representations of data, organized into rows and columns.

- Look and feel similar to tables

- Similar concept across many different data tools
  - Spark (the default in Databricks), pandas, dplyr, SQL query results

- The underlying construct for most data processing

| id | customerName | bookTitle |
|---|---|---|
| 1 | John Data | Guide to Spark |
| 2 | Sally Bricks | SQL for Data Engineering |
| 3 | Adam Delta | Keeping Data Clean |

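```python
# Read a CSV file into a Spark DataFrame
df = (spark.read
    .format("csv")
    .option("header", "true")       # use the first row as column names
    .option("inferSchema", "true")  # scan the data to guess column types
    .load("/data.csv"))
```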
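The effect of the `header` and `inferSchema` options can be mimicked with Python's standard `csv` module. The sketch below is a rough analogue, not Spark's implementation: `read_csv_like_spark` is a hypothetical helper that takes column names from the first row when `header` is set (otherwise it uses Spark-style names `_c0`, `_c1`, ...) and, when `infer_schema` is set, types a column as `int` or `float` only if every value parses, falling back to strings. Spark's real inference handles more types, such as booleans and timestamps.

```python
import csv
import io


def _parses(value, cast):
    # True if cast(value) succeeds, e.g. int("3") but not int("John Data").
    try:
        cast(value)
        return True
    except ValueError:
        return False


def read_csv_like_spark(text, header=True, infer_schema=True):
    """Rough, hypothetical analogue of the header and inferSchema options."""
    rows = list(csv.reader(io.StringIO(text)))
    if header:
        # The first row supplies the column names.
        columns, rows = rows[0], rows[1:]
    else:
        # Without a header, Spark names columns _c0, _c1, ...
        columns = [f"_c{i}" for i in range(len(rows[0]))]
    casts = [str] * len(columns)  # without inference every column is a string
    if infer_schema:
        for i in range(len(columns)):
            values = [row[i] for row in rows]
            # Pick the narrowest type that every value in the column fits.
            for cast in (int, float):
                if all(_parses(v, cast) for v in values):
                    casts[i] = cast
                    break
    typed = [[cast(v) for cast, v in zip(casts, row)] for row in rows]
    return columns, typed


data = """id,customerName,bookTitle
1,John Data,Guide to Spark
2,Sally Bricks,SQL for Data Engineering
3,Adam Delta,Keeping Data Clean
"""
columns, rows = read_csv_like_spark(data)
print(columns)  # ['id', 'customerName', 'bookTitle']
print(rows[0])  # [1, 'John Data', 'Guide to Spark']
```

Inference needs an extra pass over the values before any row can be typed, which is why turning on `inferSchema` makes reads of large files slower.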

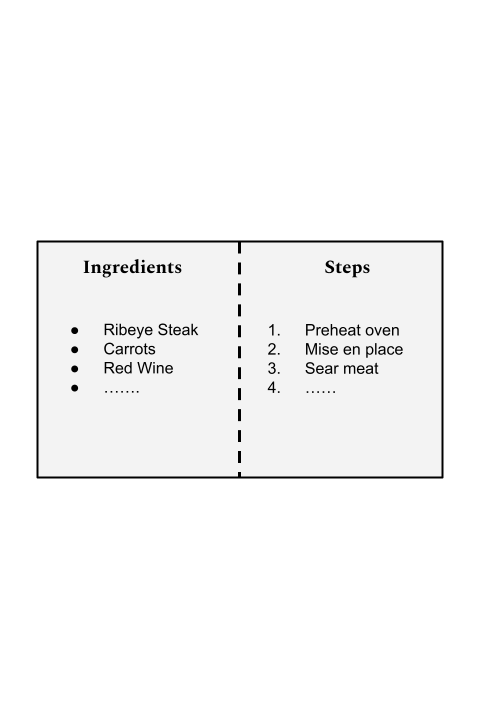

Writing data

Kinds of tables in Databricks

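- Managed tables
  - Default type
  - Data stored in Unity Catalog managed storage
  - Databricks managed: dropping the table also deletes its data files

- External tables
  - Data stored in another location (a cloud storage path you choose)
  - Set with `LOCATION` (SQL) or a `path` option (Python)
  - Customer managed: dropping the table leaves the data files in place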

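```python
# Managed table: Databricks chooses the storage location
df.write.saveAsTable(table_name)
```

```sql
-- Managed table created from a query
CREATE TABLE table_name
USING delta
AS ...
```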

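```python
# External table: the "path" option sets the storage location
df.write.option("path", "<path>").saveAsTable(table_name)
```

```sql
-- External table stored at the given LOCATION
CREATE TABLE table_name
USING delta
LOCATION "<path>"
AS ...
```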
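The practical difference between managed and external tables shows up when a table is dropped. The toy model below is a hypothetical sketch (`ToyCatalog` is not a Databricks API) that writes one placeholder file per table: dropping a managed table removes the files under catalog-owned storage, while dropping an external table only forgets the metadata entry and leaves the files at the user-supplied path. Real deletion in Databricks is more involved than removing a directory; this only models ownership.

```python
import os
import shutil
import tempfile


class ToyCatalog:
    """Hypothetical model of table ownership: managed tables live under
    catalog-owned storage, external tables only record a user path."""

    def __init__(self, managed_root):
        self.managed_root = managed_root
        self.tables = {}  # name -> (path, is_managed)

    def create_table(self, name, location=None):
        if location is None:
            # Managed: the catalog picks the storage location.
            path, managed = os.path.join(self.managed_root, name), True
        else:
            # External: the caller supplies LOCATION / path.
            path, managed = location, False
        os.makedirs(path, exist_ok=True)
        # Placeholder text standing in for real Parquet data files.
        with open(os.path.join(path, "part-0.parquet"), "w") as fh:
            fh.write("placeholder data")
        self.tables[name] = (path, managed)
        return path

    def drop_table(self, name):
        path, managed = self.tables.pop(name)  # metadata is always removed
        if managed:
            # Only a managed table's data files are deleted with it.
            shutil.rmtree(path)


root = tempfile.mkdtemp()
catalog = ToyCatalog(os.path.join(root, "managed"))
managed_path = catalog.create_table("orders")
external_path = catalog.create_table("events", location=os.path.join(root, "my_bucket", "events"))
catalog.drop_table("orders")
catalog.drop_table("events")
print(os.path.exists(managed_path), os.path.exists(external_path))  # False True
```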

Let's practice!
