Introduction to Clustering

Big Data Fundamentals with PySpark

Upendra Devisetty

Science Analyst, CyVerse

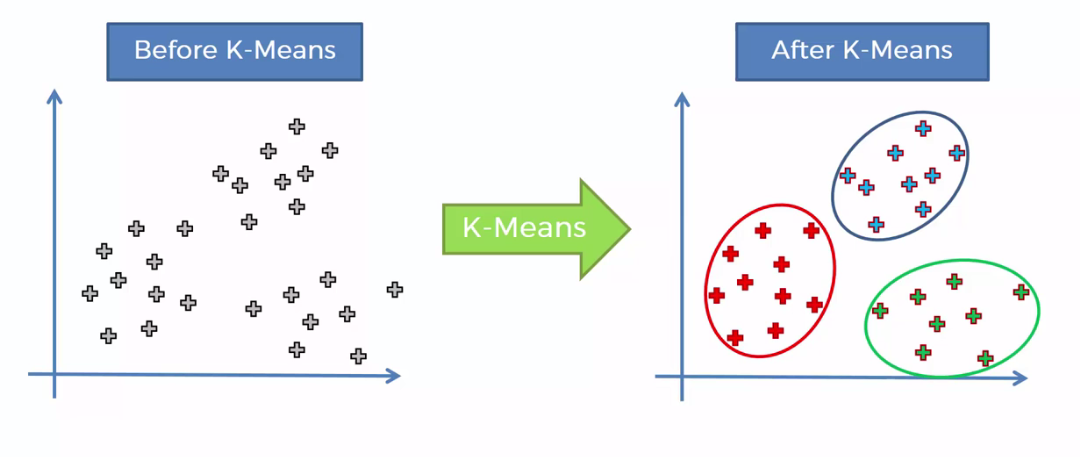

What is Clustering?

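Clustering is the unsupervised learning task of organizing a collection of data into groups (clusters), so that items in the same group are more similar to each other than to items in other groups.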

PySpark's MLlib library currently supports the following clustering models:

- K-means

- Gaussian mixture

- Power iteration clustering (PIC)

- Bisecting k-means

- Streaming k-means

K-means Clustering

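- K-means is one of the most popular clustering methods. It partitions the data into k clusters by repeatedly assigning each point to its nearest cluster center and then moving each center to the mean of the points assigned to it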
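K-means is usually computed with Lloyd's algorithm, which alternates two steps: assign every point to its nearest center, then move every center to the mean of its assigned points, until the centers stop moving. The single-machine sketch below illustrates that loop in plain Python. It is not Spark's implementation: MLlib runs the iterations in parallel and, by default, picks initial centers with k-means||, whereas this sketch assumes the caller supplies them. The names `kmeans` and `squared_distance` are hypothetical helpers, and the toy points are made up.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, initial_centers, max_iterations=10):
    """Minimal Lloyd's-algorithm k-means (illustrative, single machine)."""
    centers = [list(c) for c in initial_centers]
    k = len(centers)
    for _ in range(max_iterations):
        # Assignment step: put each point in the cluster of its nearest center
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: squared_distance(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its assigned points
        new_centers = []
        for i, members in enumerate(clusters):
            if members:
                dims = len(members[0])
                new_centers.append(
                    [sum(m[d] for m in members) / len(members) for d in range(dims)]
                )
            else:
                new_centers.append(centers[i])  # leave an empty cluster's center where it was
        if new_centers == centers:
            break  # converged: the centers stopped moving
        centers = new_centers
    return centers


# Toy 2-D data with two obvious groups (hypothetical, not the wine data)
points = [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1], [8.0, 8.0], [8.2, 7.9], [7.8, 8.1]]
centers = kmeans(points, initial_centers=[[1.0, 1.0], [8.0, 8.0]])
print(centers)
```

With these starting centers the first pass already separates the two groups, and the second pass leaves the centers unchanged, so the loop stops early at roughly [1.03, 0.97] and [8.0, 8.0].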

K-means with Spark MLlib

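# sc is the SparkContext (predefined in the PySpark shell)
# Read the CSV lines, split each on commas, and keep the first two columns as floats
RDD = sc.textFile("WineData.csv") \
    .map(lambda x: x.split(",")) \
    .map(lambda x: [float(x[0]), float(x[1])])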

RDD.take(5)

[[14.23, 2.43], [13.2, 2.14], [13.16, 2.67], [14.37, 2.5], [13.24, 2.87]]
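Because the two map() steps are ordinary Python functions applied to each line, the parsing logic can be checked locally before running it on a cluster. A minimal sketch, assuming a hypothetical helper `parse_line` and made-up sample lines (not read from WineData.csv); passing `parse_line` to `.map()` in place of the two lambdas should produce the same records.

```python
def parse_line(line):
    """Split one CSV line and keep its first two fields as floats,
    mirroring the two map() steps in the Spark pipeline."""
    fields = line.split(",")
    return [float(fields[0]), float(fields[1])]


# Hypothetical sample lines, used only to exercise the parser
sample_lines = ["14.23,2.43", "13.2,2.14"]
parsed = [parse_line(line) for line in sample_lines]
print(parsed)  # [[14.23, 2.43], [13.2, 2.14]]
```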

Train a K-means clustering model

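- A K-means model is trained with the KMeans.train() method, which takes the input RDD, the number of clusters k, and optionally the maximum number of iterations (maxIterations)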

from pyspark.mllib.clustering import KMeans

# Train a model with 2 clusters, stopping after at most 10 iterations
model = KMeans.train(RDD, k=2, maxIterations=10)

model.clusterCenters

[array([12.25573171, 2.28939024]), array([13.636875 , 2.43239583])]
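A trained model assigns a point to the cluster whose center is nearest. As far as I know, model.predict() in pyspark.mllib uses Euclidean distance by default. The plain-Python sketch below reproduces that nearest-center rule using the two centers printed above; `predict` and `squared_distance` here are hypothetical local helpers, not the Spark methods.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def predict(point, centers):
    """Return the index of the center nearest to `point`."""
    return min(range(len(centers)), key=lambda i: squared_distance(point, centers[i]))


# Centers copied from the model.clusterCenters output above
centers = [[12.25573171, 2.28939024], [13.636875, 2.43239583]]
print(predict([14.23, 2.43], centers))  # 1
print(predict([12.0, 2.0], centers))    # 0
```

Comparing squared distances is enough to find the nearest center, since the square root does not change which distance is smallest.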

Evaluating the K-means Model

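from math import sqrt

def error(point):
    """Euclidean distance from a point to its assigned cluster center."""
    center = model.clusterCenters[model.predict(point)]
    # Note: this returns the distance itself, not its square, so the total
    # computed below is strictly a sum of distances rather than squared errors
    return sqrt(sum([x**2 for x in (point - center)]))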

WSSSE = RDD.map(lambda point: error(point)).reduce(lambda x, y: x + y)

print("Within Set Sum of Squared Error = " + str(WSSSE))

Within Set Sum of Squared Error = 77.96236420499056
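The error() function above returns the plain Euclidean distance, so summing it gives a sum of distances; a true within-set sum of squared errors adds up the squared distances instead. The toy example below, a plain-Python sketch with hypothetical helpers and made-up points, shows that the two numbers differ. In pyspark.mllib, KMeansModel.computeCost(rdd) returns the sum of squared distances of the points to their nearest centers, so model.computeCost(RDD) is one way to get the squared version directly.

```python
import math


def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def nearest_center(point, centers):
    """Center closest to `point`."""
    return min(centers, key=lambda c: squared_distance(point, c))


def wssse(points, centers):
    """Within-set sum of squared errors: squared distance to the nearest center."""
    return sum(squared_distance(p, nearest_center(p, centers)) for p in points)


def sum_of_distances(points, centers):
    """What summing error() accumulates: unsquared Euclidean distances."""
    return sum(math.sqrt(squared_distance(p, nearest_center(p, centers))) for p in points)


# Toy data: two points, each 2 units from a single center
points = [[0.0, 0.0], [4.0, 0.0]]
centers = [[2.0, 0.0]]
print(wssse(points, centers))             # 8.0
print(sum_of_distances(points, centers))  # 4.0
```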

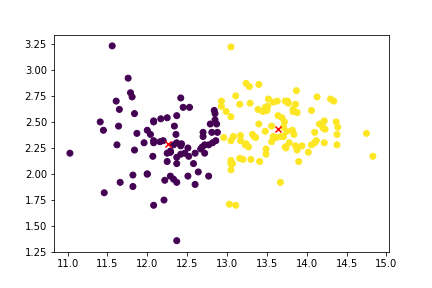

Visualizing K-means clusters

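import pandas as pd
import matplotlib.pyplot as plt

# spark is the SparkSession (predefined in the PySpark shell)
# Convert the RDD to a Spark DataFrame, then to pandas for plotting
wine_data_df = spark.createDataFrame(RDD, schema=["col1", "col2"])
wine_data_df_pandas = wine_data_df.toPandas()

# Put the cluster centers in a pandas DataFrame with matching column names
cluster_centers_pandas = pd.DataFrame(model.clusterCenters, columns=["col1", "col2"])
cluster_centers_pandas.head()

# Plot the data points, then overlay the cluster centers as red crosses
plt.scatter(wine_data_df_pandas["col1"], wine_data_df_pandas["col2"])
plt.scatter(cluster_centers_pandas["col1"], cluster_centers_pandas["col2"], color="red", marker="x")
plt.show()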
