Fundamentals of Big Data

Big Data Fundamentals with PySpark

Upendra Devisetty

Science Analyst, CyVerse

What is Big Data?

- Big data is a term used to refer to the study and applications of data sets that are too complex for traditional data-processing software - Wikipedia

The 3 V's of Big Data

Volume, Variety and Velocity

Volume: Size of the data

Variety: Different sources and formats

Velocity: Speed at which the data is generated and processed

Big Data concepts and terminology

Clustered computing: Pooling the resources of multiple machines

Parallel computing: Simultaneous computation on a single computer

Distributed computing: Collection of nodes (networked computers) that run in parallel

Batch processing: Breaking the job into small pieces and running them on individual machines

Real-time processing: Immediate processing of data
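The idea of breaking a job into small pieces and running them side by side can be sketched in plain Python. This is only an illustration under stated assumptions: the function names (`split_into_chunks`, `process_chunk`, `run_job`) are hypothetical, and worker threads stand in for the separate machines of a real cluster.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, chunk_size):
    """Break one large job into smaller pieces."""
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]


def process_chunk(chunk):
    """Work done independently on each piece (here: a partial sum)."""
    return sum(chunk)


def run_job(items, chunk_size=250, workers=4):
    chunks = split_into_chunks(items, chunk_size)
    # Each worker thread is a stand-in for one machine in a cluster.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_results = list(pool.map(process_chunk, chunks))
    # Combine the partial results into the final answer.
    return sum(partial_results)


total = run_job(list(range(1000)))
print(total)
```

In a real distributed system the pieces would be shipped to networked nodes rather than to threads, but the split, process, combine shape stays the same.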

Big Data processing systems

Hadoop/MapReduce: Scalable, fault-tolerant framework written in Java

Open source

Batch processing

Apache Spark: General-purpose, lightning-fast cluster computing system

Open source

Both batch and real-time data processing

Note: Apache Spark is now often preferred over Hadoop/MapReduce
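The MapReduce model named above is usually described as three phases: map, shuffle (group by key), and reduce. The following is a minimal plain-Python sketch of that pattern using word counting. It does not use the Hadoop API, and all function names are hypothetical.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in a line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    """Shuffle: group all values that share the same key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: collapse the values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["big data", "Big Data with Spark"])
print(counts)
```

In a real framework the map and reduce calls run on different machines and the shuffle moves data across the network. Here everything runs in one process to keep the shape of the computation visible.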

Features of Apache Spark framework

Distributed cluster computing framework

Efficient in-memory computations for large data sets

Lightning-fast data processing framework

Provides support for Java, Scala, Python, R and SQL

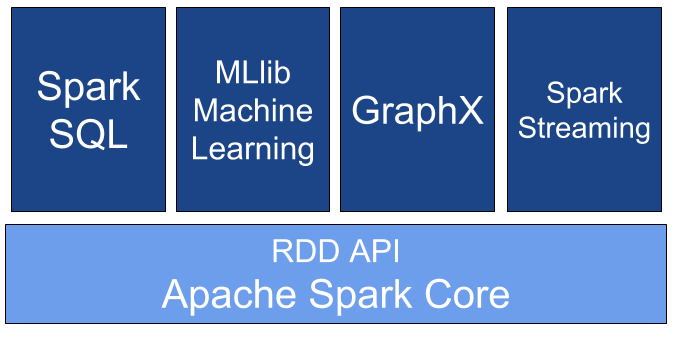

Apache Spark Components

Spark modes of deployment

Local mode: Single machine such as your laptop

- Local mode is convenient for testing, debugging and demonstration

Cluster mode: Set of pre-defined machines

- Good for production

Workflow: Local -> clusters

No code change necessary
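One way to picture "no code change necessary" is to keep the master URL out of the application logic. The sketch below assumes a hypothetical environment variable, `DEMO_SPARK_MASTER`, and hypothetical helper names. `local[*]` is Spark's master URL for local mode using all available cores. The `pyspark` import is done lazily, so the helper that resolves the master can be tried without Spark installed.

```python
import os

DEFAULT_MASTER = "local[*]"  # local mode, using all cores of this machine


def resolve_master(env=None):
    """Return the master URL; fall back to local mode if none is configured.

    DEMO_SPARK_MASTER is a made-up variable name used for illustration only.
    """
    env = os.environ if env is None else env
    return env.get("DEMO_SPARK_MASTER", DEFAULT_MASTER)


def build_session(app_name="demo", env=None):
    """Create a SparkSession for whichever deployment mode is configured."""
    from pyspark.sql import SparkSession  # requires pyspark to be installed

    return (
        SparkSession.builder
        .master(resolve_master(env))
        .appName(app_name)
        .getOrCreate()
    )


print(resolve_master({}))
```

On a laptop the variable is left unset and the job runs in local mode. On a cluster it would be set to that cluster's master URL, and the rest of the program stays the same. In practice the master is also commonly supplied through the `--master` option of `spark-submit` instead of in code.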

Coming up next - PySpark
