Running a data pipeline in production

ETL and ELT in Python

Jake Roach

Data Engineer

Data pipeline architecture patterns

```python
# Define ETL functions
...

def load(clean_data):
    ...

# Run the data pipeline
raw_stock_data = extract("raw_stock_data.csv")
clean_stock_data = transform(raw_stock_data)
load(clean_stock_data)
```

```
> ls
etl_pipeline.py
```

```python
# Import extract, transform, and load functions
from pipeline_utils import extract, transform, load

# Run the data pipeline
raw_stock_data = extract("raw_stock_data.csv")
clean_stock_data = transform(raw_stock_data)
load(clean_stock_data)
```

```
> ls
etl_pipeline.py
pipeline_utils.py
```
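The slides elide the bodies of `extract()`, `transform()`, and `load()`. As a minimal sketch of what `pipeline_utils.py` might contain, assuming the raw CSV has `date` and `close` columns and that the target is a local SQLite table (both hypothetical choices, not the course's code), the module could look like this. It only defines functions, so importing it from `etl_pipeline.py` has no side effects.

```python
import csv
import sqlite3

# pipeline_utils.py: a hypothetical sketch. The "date"/"close" columns, the
# cleaning rule, and the SQLite target are illustrative assumptions.


def extract(file_path):
    """Read the raw CSV file into a list of row dictionaries."""
    with open(file_path, newline="") as csv_file:
        return list(csv.DictReader(csv_file))


def transform(raw_data):
    """Drop rows without a closing price and cast prices to float."""
    return [
        {"date": row["date"], "close": float(row["close"])}
        for row in raw_data
        if row.get("close")
    ]


def load(clean_data, db_path="stock_data.db"):
    """Append the cleaned rows to a 'stock_prices' table in a SQLite database."""
    connection = sqlite3.connect(db_path)
    try:
        with connection:  # commits on success, rolls back on error
            connection.execute(
                "CREATE TABLE IF NOT EXISTS stock_prices (date TEXT, close REAL)"
            )
            connection.executemany(
                "INSERT INTO stock_prices (date, close) VALUES (:date, :close)",
                clean_data,
            )
    finally:
        connection.close()
```

Because `load()` has a default `db_path`, `etl_pipeline.py` can keep the exact calls from the slide. Keeping the module free of top-level code means importing it never triggers a pipeline run.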

Running a data pipeline end-to-end

```python
import logging

from pipeline_utils import extract, transform, load

logging.basicConfig(format='%(levelname)s: %(message)s', level=logging.DEBUG)

try:
    # Extract, transform, and load data
    raw_stock_data = extract("raw_stock_data.csv")
    clean_stock_data = transform(raw_stock_data)
    load(clean_stock_data)
    logging.info("Successfully extracted, transformed and loaded data.")  # Log success message

except Exception as e:
    # Handle exceptions, log messages
    logging.error(f"Pipeline failed with error: {e}")
```
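The `try`/`except` above logs the error but then swallows it, so the script still exits with status 0, and a scheduler that checks the exit status would treat the run as successful. A minimal sketch of one alternative, assuming the steps are wrapped in a hypothetical `run_pipeline()` helper: log each step, use `logger.exception()` (which logs at ERROR level and includes the traceback), and re-raise so the failure propagates. The lambdas are hypothetical stand-ins for the real `pipeline_utils` functions.

```python
import logging

logging.basicConfig(format="%(levelname)s: %(message)s", level=logging.DEBUG)
logger = logging.getLogger("etl_pipeline")


def run_pipeline(extract, transform, load, source):
    """Run each ETL step with logging; re-raise failures so the caller sees them."""
    try:
        logger.info("Extracting data from %s", source)
        raw_data = extract(source)
        logger.info("Transforming %d records", len(raw_data))
        clean_data = transform(raw_data)
        logger.info("Loading %d records", len(clean_data))
        load(clean_data)
        logger.info("Successfully extracted, transformed and loaded data.")
    except Exception:
        # logger.exception logs at ERROR level and appends the traceback
        logger.exception("Pipeline failed")
        raise


# Hypothetical stand-in steps so the sketch runs without pipeline_utils.py
loaded = []
run_pipeline(
    extract=lambda source: [{"close": "100.0"}, {"close": ""}],
    transform=lambda rows: [float(r["close"]) for r in rows if r["close"]],
    load=loaded.extend,
    source="raw_stock_data.csv",
)
print(loaded)  # [100.0]
```

When the exception propagates out of the script, Python exits with a non-zero status, which is the signal cron-style schedulers and orchestrators typically use to mark a run as failed.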

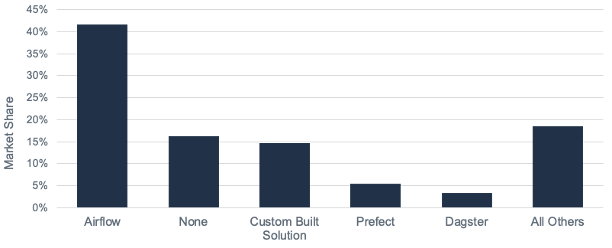

Orchestrating data pipelines in production

1 https://open.substack.com/pub/seattledataguy/p/the-state-of-data-engineering-part?r=1po78c&utm_campaign=post&utm_medium=web

Let's practice!
