The barebone DQN algorithm

Deep Reinforcement Learning in Python

Timothée Carayol

Principal Machine Learning Engineer, Komment

The Barebone DQN

- Our first step to the full DQN algorithm

- Features:

  - Generic DRL training loop

  - A Q-network

  - Principles of Q-learning
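To make the "Q-network" item concrete, here is a minimal PyTorch sketch. The class name `QNetwork`, the hidden layer width, the 8-dimensional state, the 4 actions, and the learning rate are all assumptions for illustration and are not taken from the course. The only constraint carried over from the slides is that the network returns one Q-value per action.

```python
import torch
import torch.nn as nn

# Assumed sizes, for illustration only: 8 state features, 4 discrete actions.
STATE_DIM, N_ACTIONS = 8, 4


class QNetwork(nn.Module):
    """Maps a state vector to one estimated Q-value per action."""

    def __init__(self, state_dim, n_actions, hidden=64):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(state_dim, hidden),
            nn.ReLU(),
            nn.Linear(hidden, hidden),
            nn.ReLU(),
            nn.Linear(hidden, n_actions),
        )

    def forward(self, state):
        # Observations often arrive as lists or NumPy arrays; convert to a float tensor.
        x = torch.as_tensor(state, dtype=torch.float32)
        return self.layers(x)


network = QNetwork(STATE_DIM, N_ACTIONS)
optimizer = torch.optim.Adam(network.parameters(), lr=1e-4)  # assumed optimizer and learning rate

# A dummy all-zero state yields one Q-value per action.
q_values = network([0.0] * STATE_DIM)
print(q_values.shape)
```

Because `forward` converts its input to a tensor, `network` can be called directly on the observation returned by the environment, which is how the training loop uses it.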

```python
for episode in range(1000):
    state, info = env.reset()
    done = False
    while not done:
        # Action selection
        action = select_action(network, state)
        next_state, reward, terminated, truncated, _ = (
            env.step(action))
        done = terminated or truncated
        # Loss calculation
        loss = calculate_loss(network, state, action,
                              next_state, reward, done)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        state = next_state
```

The Barebone DQN action selection

```python
import torch

def select_action(q_network, state):
    # Feed state to network to obtain Q-values
    q_values = q_network(state)
    # Obtain index of action with highest Q-value
    action = torch.argmax(q_values).item()
    return action
```

- Policy: select action with highest Q-value

- $ a_t = {\arg\max}_a Q(S_t, a) $

- Here: action 2, with Q-value 0.12

```
Q-values: [-0.01, 0.08, 0.12, -0.07]
Action selected: 2, with q-value 0.12
```

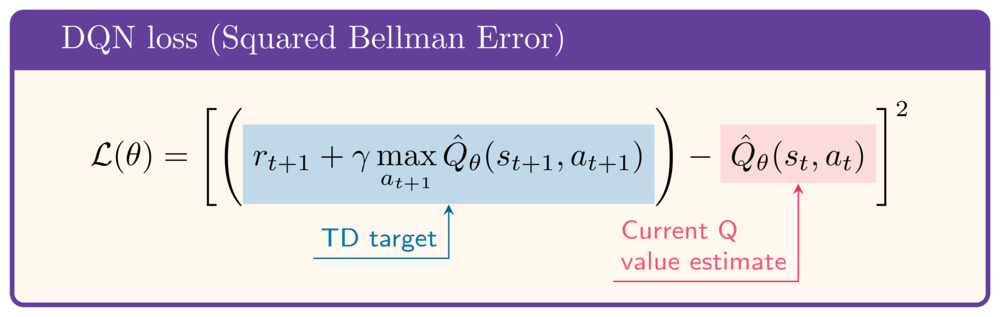
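The greedy rule can be checked by hand on the slide's Q-values. The snippet below is a plain-Python equivalent of the `argmax` step, written without PyTorch so it runs anywhere; it is a sketch for checking the arithmetic, not a replacement for `select_action`.

```python
# Q-values from the slide, one per action.
q_values = [-0.01, 0.08, 0.12, -0.07]

# Greedy policy: pick the index of the largest Q-value.
action = max(range(len(q_values)), key=lambda a: q_values[a])

print(f"Action selected: {action}, with q-value {q_values[action]}")
```

Note that this policy always exploits the current estimates: the same state always produces the same action until the network's weights change.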

The Barebone DQN loss function

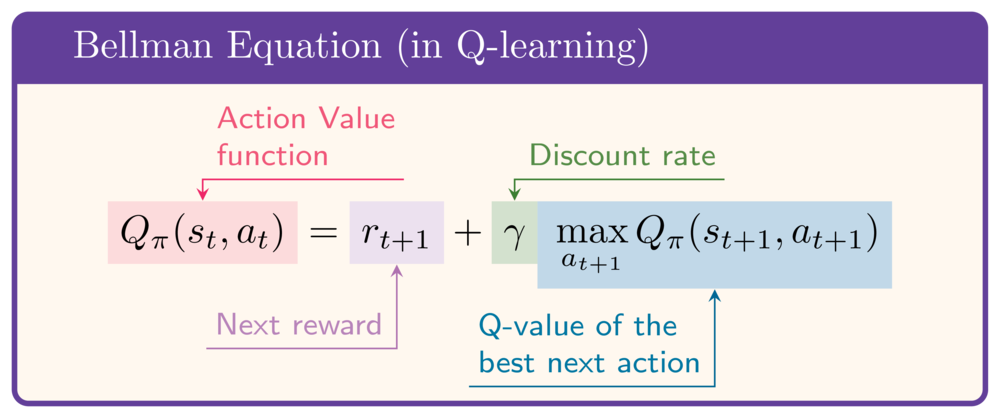

- Action-Value function satisfies Bellman Equation

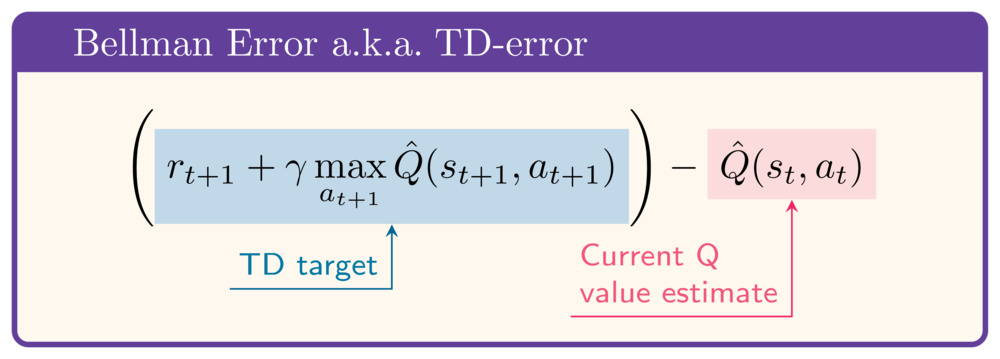

- Idea: minimize the difference between the two sides of the equation, known as the TD error or Bellman error

- Use Squared Bellman Error as loss function:


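```python
import torch.nn as nn

# `gamma` (the discount factor) is expected to be defined in the enclosing scope.
def calculate_loss(q_network, state, action, next_state, reward, done):
    # Q-value of the action actually taken in the current state
    q_values = q_network(state)
    current_state_q_value = q_values[action]
    # Highest Q-value available in the next state
    next_state_q_value = q_network(next_state).max()
    # TD target; (1 - done) removes the bootstrap term when the episode has ended
    target_q_value = reward + gamma * next_state_q_value * (1 - done)
    # Squared Bellman error
    loss = nn.MSELoss()(current_state_q_value, target_q_value)
    return loss
```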

- Current state Q-value: $Q(s_t, a_t)$

- Next state Q-value: $\max_a Q(s_{t+1}, a)$

- Target Q-value: $r_{t+1} + \gamma \max_a Q(s_{t+1}, a)$

- DQN Loss:

$$\left(Q(s_t, a_t) - \left(r_{t+1} + \gamma \max_a Q(s_{t+1}, a)\right)\right)^2$$
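A small numeric example may help connect the formulas to the code. The sketch below evaluates the target and the squared Bellman error in plain Python for a single transition. The discount factor, the reward, and the next-state Q-values are hypothetical numbers chosen for illustration; only the current Q-value of 0.12 comes from the earlier slide. The helper names `td_target` and `squared_bellman_error` are also introduced here and are not part of the course code.

```python
def td_target(reward, next_q_values, gamma, done):
    """r + gamma * max_a Q(s', a), with no bootstrap term once the episode has ended."""
    return reward + gamma * max(next_q_values) * (1 - done)


def squared_bellman_error(current_q, target_q):
    """Squared difference between the current Q-value and its target."""
    return (current_q - target_q) ** 2


gamma = 0.99                                # assumed discount factor
current_q = 0.12                            # Q(s_t, a_t), from the slide's example
reward = -1.0                               # hypothetical r_{t+1}
next_q_values = [0.05, 0.20, -0.10, 0.00]   # hypothetical Q(s_{t+1}, a) for each action

# Non-terminal transition: the target includes the discounted next-state value.
target = td_target(reward, next_q_values, gamma, done=False)
loss = squared_bellman_error(current_q, target)
print(round(target, 4), round(loss, 4))

# Terminal transition: (1 - done) is 0, so the target is just the reward.
terminal_target = td_target(reward, next_q_values, gamma, done=True)
print(terminal_target)
```

The `(1 - done)` factor mirrors `calculate_loss`: when an episode ends there is no next state to bootstrap from, so the target reduces to the reward alone.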

Describing the episodes

```python
describe_episode(episode, reward, episode_reward, step)
```

```
| Episode 1 | Duration: 84 steps | Return: -871.38 | Crashed |
| Episode 2 | Duration: 53 steps | Return: -452.68 | Crashed |
| Episode 3 | Duration: 57 steps | Return: -414.22 | Crashed |
| Episode 4 | Duration: 54 steps | Return: -475.09 | Crashed |
| Episode 5 | Duration: 67 steps | Return: -532.31 | Crashed |
| Episode 6 | Duration: 53 steps | Return: -407.00 | Crashed |
| Episode 7 | Duration: 52 steps | Return: -380.45 | Crashed |
| Episode 8 | Duration: 55 steps | Return: -380.75 | Crashed |
| Episode 9 | Duration: 88 steps | Return: -688.68 | Crashed |
| Episode 10 | Duration: 76 steps | Return: -338.06 | Crashed |
```
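The slides do not show how `describe_episode` is implemented. The following is a hypothetical stand-in that reproduces the row format above; it is not the course's helper. In particular, deriving the outcome label from the final step's reward, the thresholds of -100 and 100, and the labels other than "Crashed" are assumptions. To call it, the training loop would also need to accumulate `episode_reward` and count `step` inside the `while` loop, which the loop shown earlier does not do.

```python
def describe_episode(episode, reward, episode_reward, step):
    """Hypothetical stand-in: print and return a one-line episode summary.

    Assumption: the outcome is inferred from the reward of the final step.
    """
    if reward <= -100:
        outcome = "Crashed"
    elif reward >= 100:
        outcome = "Landed"      # assumed label, not shown in the slides
    else:
        outcome = "Timed out"   # assumed label, not shown in the slides
    row = (f"| Episode {episode} | Duration: {step} steps "
           f"| Return: {episode_reward:.2f} | {outcome} |")
    print(row)
    return row


# Reproduce the first row of the output above with a hypothetical final reward.
row = describe_episode(episode=1, reward=-100, episode_reward=-871.38, step=84)
```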

Let's practice!
