Double DQN

Deep Reinforcement Learning in Python

Timothée Carayol

Principal Machine Learning Engineer, Komment

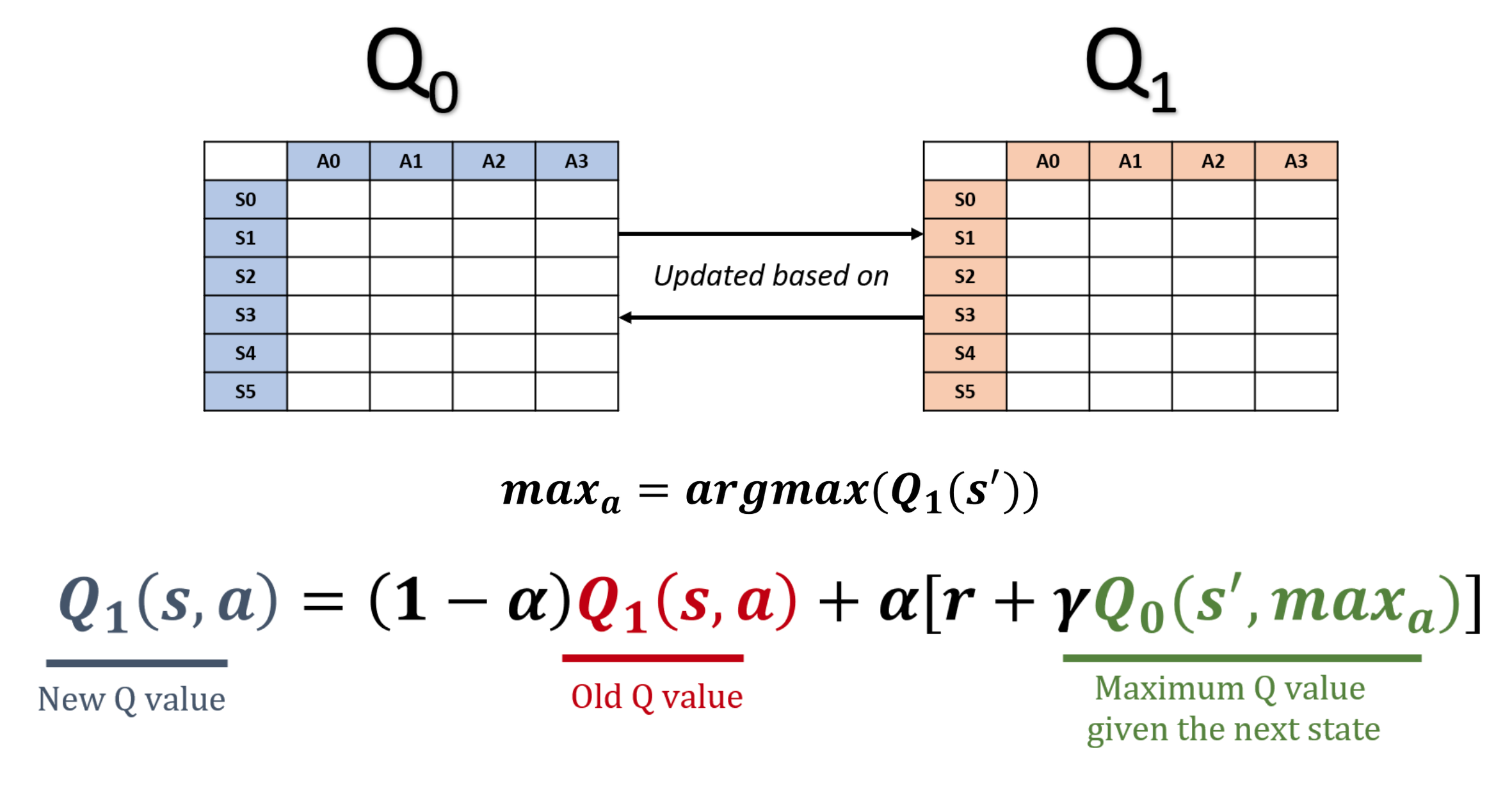

Double Q-learning

- Q-learning overestimates Q-values, which compromises learning efficiency

- This is due to maximization bias: taking the max over noisy Q-value estimates yields a value that is biased upward

- Double Q-learning removes this bias by decoupling action selection from value estimation
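The bias can be seen in a small simulation. This is a minimal sketch under illustrative assumptions that are not from the course: every action has a true value of 0, each estimate carries unit Gaussian noise, and the names `N_ACTIONS` and `N_TRIALS` are hypothetical. The single estimator selects and evaluates with the same noisy estimates; the double estimator selects with one set and evaluates with an independent second set.

```python
import random
import statistics

random.seed(0)

N_ACTIONS = 10     # hypothetical number of actions
N_TRIALS = 20_000  # number of simulated estimation rounds

single, double = [], []
for _ in range(N_TRIALS):
    # Two independent noisy estimates of the same true Q-values (all 0).
    q_a = [random.gauss(0.0, 1.0) for _ in range(N_ACTIONS)]
    q_b = [random.gauss(0.0, 1.0) for _ in range(N_ACTIONS)]

    # Single estimator: select and evaluate with the same estimates.
    single.append(max(q_a))

    # Double estimator: select the action with q_a, evaluate it with q_b.
    best = max(range(N_ACTIONS), key=q_a.__getitem__)
    double.append(q_b[best])

single_bias = statistics.fmean(single)
double_bias = statistics.fmean(double)
print(f"single estimator bias: {single_bias:.3f}")
print(f"double estimator bias: {double_bias:.3f}")
```

The single estimator's average sits well above the true value of 0, while the double estimator's average stays close to 0, because the noise that drove the selection is independent of the noise in the evaluation.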

The idea behind DDQN

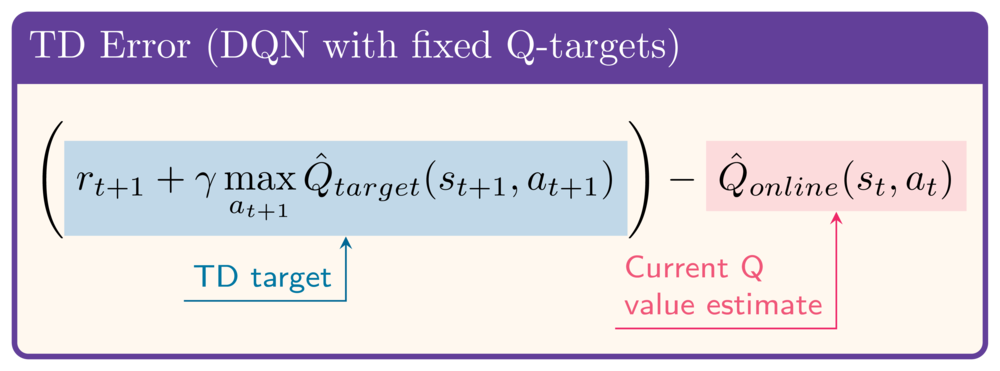

- Start from complete DQN (with fixed Q-targets)

- In DQN TD target:

  - Action selection: target network

  - Value estimation: target network

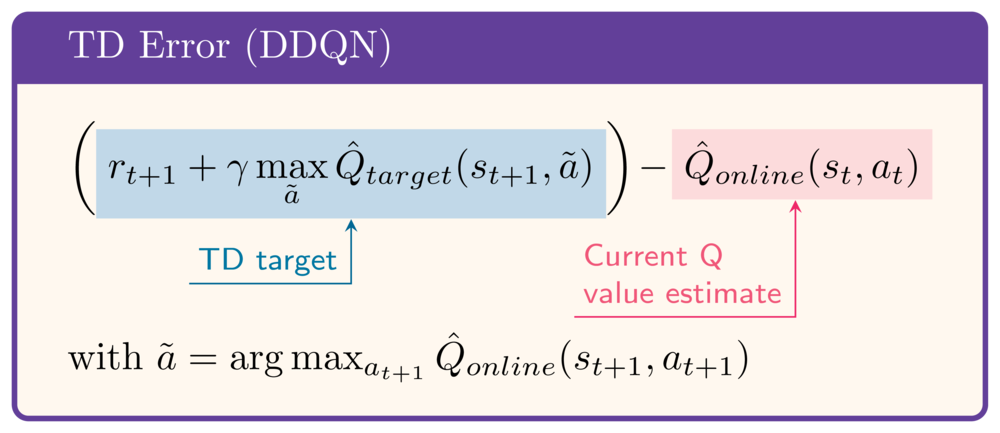

- In DDQN TD target:

  - Action selection: online network

  - Value estimation: target network

- Not exactly Double Q-learning: the two Q-networks do not alternate roles

- Most of the benefit, with minimal change
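Written out, the two TD targets differ only in which network chooses the next action. The notation is an assumption rather than something taken from the slides: $\theta$ denotes the online network's weights, $\theta^-$ the target network's weights, and $d$ the done flag of the transition $(s, a, r, s', d)$.

$$
y^{\text{DQN}} = r + \gamma\,(1 - d)\; Q_{\theta^-}\!\big(s',\, \arg\max_{a'} Q_{\theta^-}(s', a')\big) = r + \gamma\,(1 - d)\,\max_{a'} Q_{\theta^-}(s', a')
$$

$$
y^{\text{DDQN}} = r + \gamma\,(1 - d)\; Q_{\theta^-}\!\big(s',\, \arg\max_{a'} Q_{\theta}(s', a')\big)
$$

In both cases the value comes from $Q_{\theta^-}$; only the argmax switches from $\theta^-$ to $\theta$.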

Double DQN implementation

DQN:

    ...  # instantiate online and target networks
    q_values = online_network(states).gather(1, actions).squeeze(1)
    with torch.no_grad():
        next_q_values = target_network(next_states).amax(1)
        target_q_values = rewards + gamma * next_q_values * (1 - dones)
    loss = torch.nn.MSELoss()(q_values, target_q_values)
    ...  # gradient descent
    ...  # target network update

DQN, with action selection and value estimation written as two explicit steps (equivalent to taking `.amax(1)` on the target network's output):

    ...  # instantiate online and target networks
    q_values = online_network(states).gather(1, actions).squeeze(1)
    with torch.no_grad():
        # action selection: target network
        next_actions = target_network(next_states).argmax(1).unsqueeze(1)
        # value estimation: target network
        next_q_values = (target_network(next_states)
                         .gather(1, next_actions).squeeze(1))
        target_q_values = rewards + gamma * next_q_values * (1 - dones)
    loss = torch.nn.MSELoss()(q_values, target_q_values)
    ...  # gradient descent
    ...  # target network update

DDQN:

    ...  # instantiate online and target networks
    q_values = online_network(states).gather(1, actions).squeeze(1)
    with torch.no_grad():
        # action selection: online network
        next_actions = online_network(next_states).argmax(1).unsqueeze(1)
        # value estimation: target network
        next_q_values = (target_network(next_states)
                         .gather(1, next_actions).squeeze(1))
        target_q_values = rewards + gamma * next_q_values * (1 - dones)
    loss = torch.nn.MSELoss()(q_values, target_q_values)
    ...  # gradient descent
    ...  # target network update
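To run both variants side by side, the snippets can be wrapped in one helper. This is a hedged sketch, not the course's implementation: the function name `td_targets`, its `double` flag, the tiny linear networks, and the random batch are all hypothetical stand-ins for a real agent and replay buffer.

```python
import copy

import torch
from torch import nn


def td_targets(online_network, target_network, rewards, next_states, dones,
               gamma=0.99, double=True):
    """Return TD targets: DDQN if double=True, plain DQN otherwise."""
    with torch.no_grad():
        # Action selection: online network (DDQN) or target network (DQN).
        selector = online_network if double else target_network
        next_actions = selector(next_states).argmax(1).unsqueeze(1)
        # Value estimation: always the target network.
        next_q_values = (target_network(next_states)
                         .gather(1, next_actions).squeeze(1))
        return rewards + gamma * next_q_values * (1 - dones)


torch.manual_seed(0)

# Hypothetical problem size: 4 state features, 3 actions, batch of 8.
online_network = nn.Linear(4, 3)
target_network = copy.deepcopy(online_network)
# Perturb the target so the networks disagree, as they would between syncs.
with torch.no_grad():
    for p in target_network.parameters():
        p.add_(0.5 * torch.randn_like(p))

states = torch.randn(8, 4)
actions = torch.randint(0, 3, (8, 1))
rewards = torch.randn(8)
next_states = torch.randn(8, 4)
dones = torch.zeros(8)
dones[0] = 1.0  # mark one transition as terminal

q_values = online_network(states).gather(1, actions).squeeze(1)
dqn_targets = td_targets(online_network, target_network,
                         rewards, next_states, dones, double=False)
ddqn_targets = td_targets(online_network, target_network,
                          rewards, next_states, dones, double=True)
loss = nn.MSELoss()(q_values, ddqn_targets)
```

Because the target network evaluates an action that is not necessarily its own argmax, each DDQN target is never larger than the matching DQN target, which is how the overestimation is damped. The `double` flag also makes it cheap to train both variants on the same environment and compare them.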

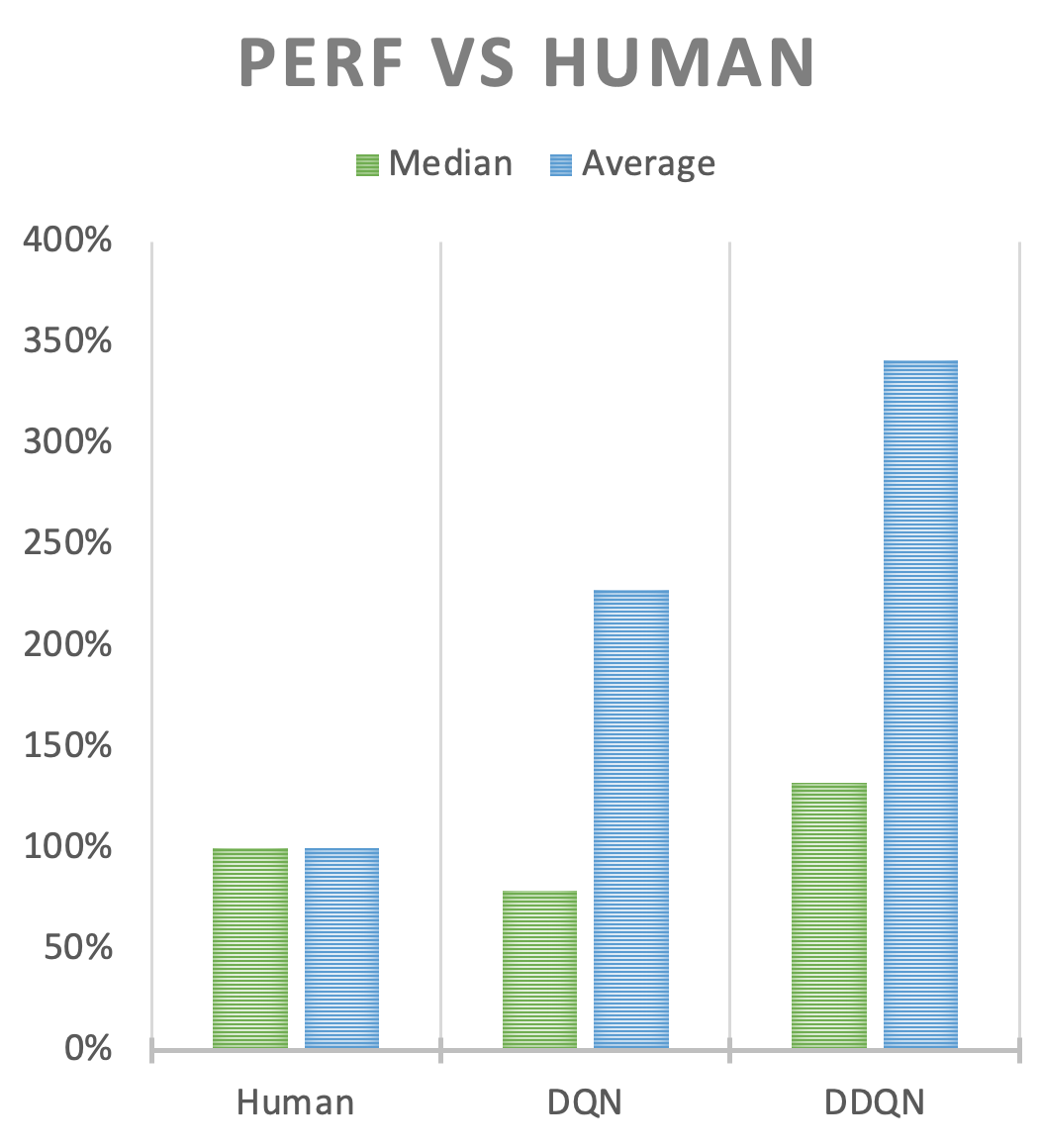

DDQN performance

- Compare performance of DDQN, DQN and human players on Atari games

- DDQN: higher scores than original DQN

- This does not hold in every environment, so try both

1 https://arxiv.org/abs/2303.11634

Let's practice!
