Entropy bonus and PPO

Deep Reinforcement Learning in Python

Timothée Carayol

Principal Machine Learning Engineer, Komment

Entropy bonus

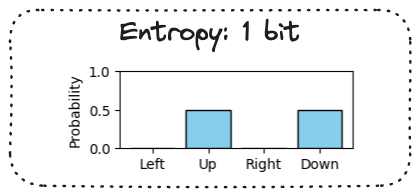

- Policy gradient algorithms can collapse prematurely into (near-)deterministic policies, which stops them from exploring

- Solution: add an entropy bonus to the objective, rewarding policies that stay uncertain

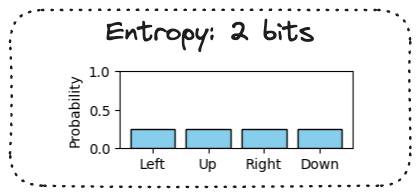

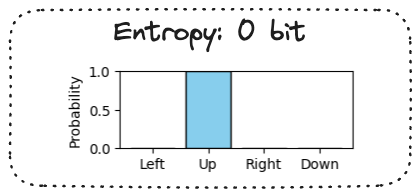

- Entropy measures the uncertainty of a probability distribution

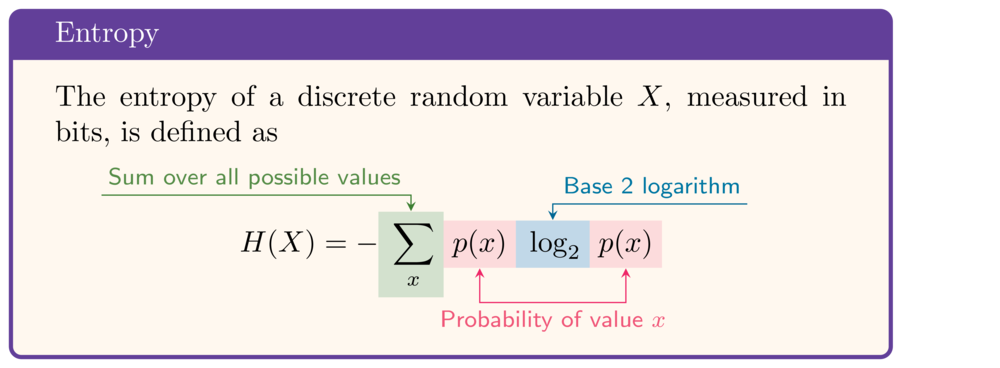
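To make "uncertainty" concrete, the short sketch below (plain Python, no PyTorch) computes the entropy in bits of three hypothetical 4-action distributions: uniform, sharply peaked and fully deterministic. The uniform one reaches the maximum of $\log_2 4 = 2$ bits, while the deterministic one, the kind of policy the entropy bonus is meant to prevent, has zero entropy.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits: H(p) = -sum_i p_i * log2(p_i)."""
    # Outcomes with p_i = 0 are skipped: p * log p tends to 0 as p -> 0
    return sum(-p * math.log2(p) for p in probs if p > 0)

uniform = [0.25, 0.25, 0.25, 0.25]     # maximally uncertain over 4 actions
peaked = [0.97, 0.01, 0.01, 0.01]      # almost deterministic
deterministic = [1.0, 0.0, 0.0, 0.0]   # no uncertainty at all

for name, p in [("uniform", uniform), ("peaked", peaked), ("deterministic", deterministic)]:
    print(f"{name:>13}: {entropy_bits(p):.3f} bits")
```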

Entropy of a probability distribution

- Using $\ln$ instead of $\log_2$ gives the result in nats rather than bits

- $1\ \text{nat} = \frac{1}{\ln 2}\ \text{bits} \approx 1.44\ \text{bits}$
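The entropy formula itself appears only in the slide image. Assuming it is the standard Shannon entropy of a discrete distribution $p$ over outcomes $i$, the two units relate as follows (since $\ln x = \ln 2 \cdot \log_2 x$), with a fair coin as a worked check:

$$
H(p) = -\sum_i p_i \log_2 p_i \ \text{(bits)}, \qquad -\sum_i p_i \ln p_i = \ln 2 \cdot H(p) \ \text{(nats)}
$$

$$
p = \left(\tfrac{1}{2}, \tfrac{1}{2}\right): \quad H = 1\ \text{bit} = \ln 2\ \text{nats} \approx 0.693\ \text{nats}, \qquad 0.693 \times 1.44 \approx 1\ \text{bit}
$$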

Implementing the entropy bonus

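```python
from torch.distributions import Categorical

def select_action(policy_network, state):
    # policy_network is assumed to map a state tensor to action probabilities (e.g. via softmax)
    action_probs = policy_network(state)
    action_dist = Categorical(action_probs)
    action = action_dist.sample()
    log_prob = action_dist.log_prob(action)
    # Obtain the entropy of the policy
    entropy = action_dist.entropy()
    return (action.item(), log_prob.reshape(1), entropy)
```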

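- Actor loss: subtract the entropy bonus, so that minimizing the loss increases entropy (`c_entropy` sets the strength of the bonus)

```python
actor_loss -= c_entropy * entropy
```

- Note: `Categorical.entropy()` returns entropy in nats; divide by `math.log(2)` to get bits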
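The sign convention can be checked in isolation. A minimal sketch, assuming a single state whose 4-action policy is parameterized directly by a hypothetical `logits` tensor and no policy-gradient term at all: minimizing only `-c_entropy * entropy` spreads the probability mass out, and the entropy is reported in bits with the `math.log(2)` conversion from the note. The value of `c_entropy` here is illustrative only.

```python
import math
import torch
from torch.distributions import Categorical

# Hypothetical 4-action policy for a single state, initially strongly favoring action 0
logits = torch.tensor([4.0, 0.0, 0.0, 0.0], requires_grad=True)
optimizer = torch.optim.SGD([logits], lr=1.0)
c_entropy = 0.1  # illustrative coefficient, not a recommended value

def entropy_in_bits(logits):
    # Categorical.entropy() returns nats; dividing by ln 2 converts to bits
    return Categorical(logits=logits).entropy().item() / math.log(2)

start_bits = entropy_in_bits(logits)
for _ in range(300):
    actor_loss = torch.zeros(())  # placeholder: no policy-gradient term in this toy example
    entropy = Categorical(logits=logits).entropy()
    actor_loss = actor_loss - c_entropy * entropy  # same sign as actor_loss -= c_entropy * entropy
    optimizer.zero_grad()
    actor_loss.backward()
    optimizer.step()
end_bits = entropy_in_bits(logits)

print(f"entropy: {start_bits:.3f} bits -> {end_bits:.3f} bits (maximum for 4 actions: 2 bits)")
```

In real training the policy-gradient term pulls in the opposite direction, and `c_entropy` balances the two.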

PPO training loop

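```python
# Assumes env, actor, critic, actor_optimizer, critic_optimizer, c_entropy
# and calculate_losses are defined earlier
for episode in range(10):
    state, info = env.reset()
    done = False
    while not done:
        action, action_log_prob, entropy = select_action(actor, state)
        next_state, reward, terminated, truncated, _ = env.step(action)
        done = terminated or truncated
        # The same log-prob is passed as both the current and the old value:
        # with one update per step, theta coincides with theta_old
        actor_loss, critic_loss = calculate_losses(critic, action_log_prob, action_log_prob,
                                                   reward, state, next_state, done)
        # Entropy bonus: subtracting it from the loss rewards more uncertain policies
        actor_loss -= c_entropy * entropy
        actor_optimizer.zero_grad()
        actor_loss.backward()
        actor_optimizer.step()
        critic_optimizer.zero_grad()
        critic_loss.backward()
        critic_optimizer.step()
        state = next_state
```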
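The loop calls `calculate_losses`, which this section does not define. One possible version is sketched below as a hypothetical helper, assuming the one-step TD error as the advantage estimate, the PPO clipped surrogate objective, and illustrative values for `gamma` and `epsilon`; the toy critic and transition are placeholders. Because the loop passes the same log-probability twice, the ratio is exactly 1, clipping has no effect, and the actor loss equals minus the advantage.

```python
import torch
import torch.nn as nn

gamma = 0.99   # discount factor (assumed)
epsilon = 0.2  # PPO clipping range (assumed)

def calculate_losses(critic, action_log_prob, action_log_prob_old,
                     reward, state, next_state, done):
    """Hypothetical PPO-clip losses for one transition, using the TD error as advantage."""
    value = critic(state)
    next_value = critic(next_state)
    # One-step TD target; (1 - done) stops bootstrapping at the end of an episode
    td_target = reward + gamma * next_value * (1 - done)
    td_error = td_target - value
    # Probability ratio pi_theta(a|s) / pi_theta_old(a|s), computed in log space
    ratio = torch.exp(action_log_prob - action_log_prob_old.detach())
    clipped_ratio = torch.clamp(ratio, 1 - epsilon, 1 + epsilon)
    # Detach the advantage so the actor loss does not train the critic
    advantage = td_error.detach()
    objective = torch.min(ratio * advantage, clipped_ratio * advantage)
    actor_loss = -objective
    critic_loss = td_error ** 2
    return actor_loss, critic_loss

# Toy usage: a linear critic on a 4-dimensional state, one illustrative transition
torch.manual_seed(0)
critic = nn.Linear(4, 1)
state, next_state = torch.randn(1, 4), torch.randn(1, 4)
log_prob = torch.tensor([-1.2], requires_grad=True)  # illustrative log-probability
actor_loss, critic_loss = calculate_losses(critic, log_prob, log_prob,
                                           1.0, state, next_state, False)
```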

Towards PPO with batch updates

- Updating at every step does not take full advantage of the PPO objective function

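- At each step, $\theta$ coincides with $\theta_{old}$, so the ratio $\frac{\pi_\theta(a \mid s)}{\pi_{\theta_{old}}(a \mid s)}$ equals 1 and clipping never takes effect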

- Full PPO implementations decouple:
  - Parameter updates ($\theta$): one per minibatch
  - Policy updates ($\theta_{old}$): one per rollout
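A minimal sketch of this decoupled structure, assuming a toy actor, random placeholder states and advantages instead of a real environment and critic, and arbitrary hyperparameters (`epsilon`, `c_entropy`, `n_epochs`, `minibatch_size`). Within one rollout, $\theta_{old}$ stays fixed while $\theta$ changes after every minibatch, so the ratio starts at 1 and then drifts away from it, which is what gives the clipping something to do.

```python
import torch
import torch.nn as nn
from torch.distributions import Categorical

torch.manual_seed(0)
n_states, n_actions, rollout_len = 4, 2, 64
epsilon, c_entropy = 0.2, 0.01      # assumed hyperparameters
n_epochs, minibatch_size = 4, 16    # assumed hyperparameters

actor = nn.Sequential(nn.Linear(n_states, n_actions), nn.Softmax(dim=-1))
actor_optimizer = torch.optim.Adam(actor.parameters(), lr=1e-2)

# 1) Rollout with the current policy (theta_old); old log-probs are stored without gradients.
#    States and advantages are random placeholders for real environment/critic data.
states = torch.randn(rollout_len, n_states)
with torch.no_grad():
    dist_old = Categorical(actor(states))
    actions = dist_old.sample()
    log_probs_old = dist_old.log_prob(actions)
advantages = torch.randn(rollout_len)

# 2) Parameter updates: several epochs of minibatch updates on the same rollout
ratios_seen = []
for _ in range(n_epochs):
    for idx in torch.randperm(rollout_len).split(minibatch_size):
        dist = Categorical(actor(states[idx]))
        log_probs = dist.log_prob(actions[idx])
        ratio = torch.exp(log_probs - log_probs_old[idx])  # 1 only before the first update
        clipped = torch.clamp(ratio, 1 - epsilon, 1 + epsilon)
        objective = torch.min(ratio * advantages[idx], clipped * advantages[idx])
        actor_loss = -objective.mean() - c_entropy * dist.entropy().mean()
        actor_optimizer.zero_grad()
        actor_loss.backward()
        actor_optimizer.step()
        ratios_seen.append(ratio.detach())

# 3) Policy update: the next rollout would be collected with the updated actor,
#    which then plays the role of theta_old
first, last = ratios_seen[0], ratios_seen[-1]
print(f"first minibatch: max |ratio - 1| = {(first - 1).abs().max().item():.4f}")
print(f"last minibatch:  max |ratio - 1| = {(last - 1).abs().max().item():.4f}")
```

In a full implementation the advantages would come from the critic, which would typically be updated in the same minibatch loop.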

Let's practice!
