Introduction to policy gradient

Deep Reinforcement Learning in Python

Timothée Carayol

Principal Machine Learning Engineer, Komment

Introduction to Policy methods in DRL

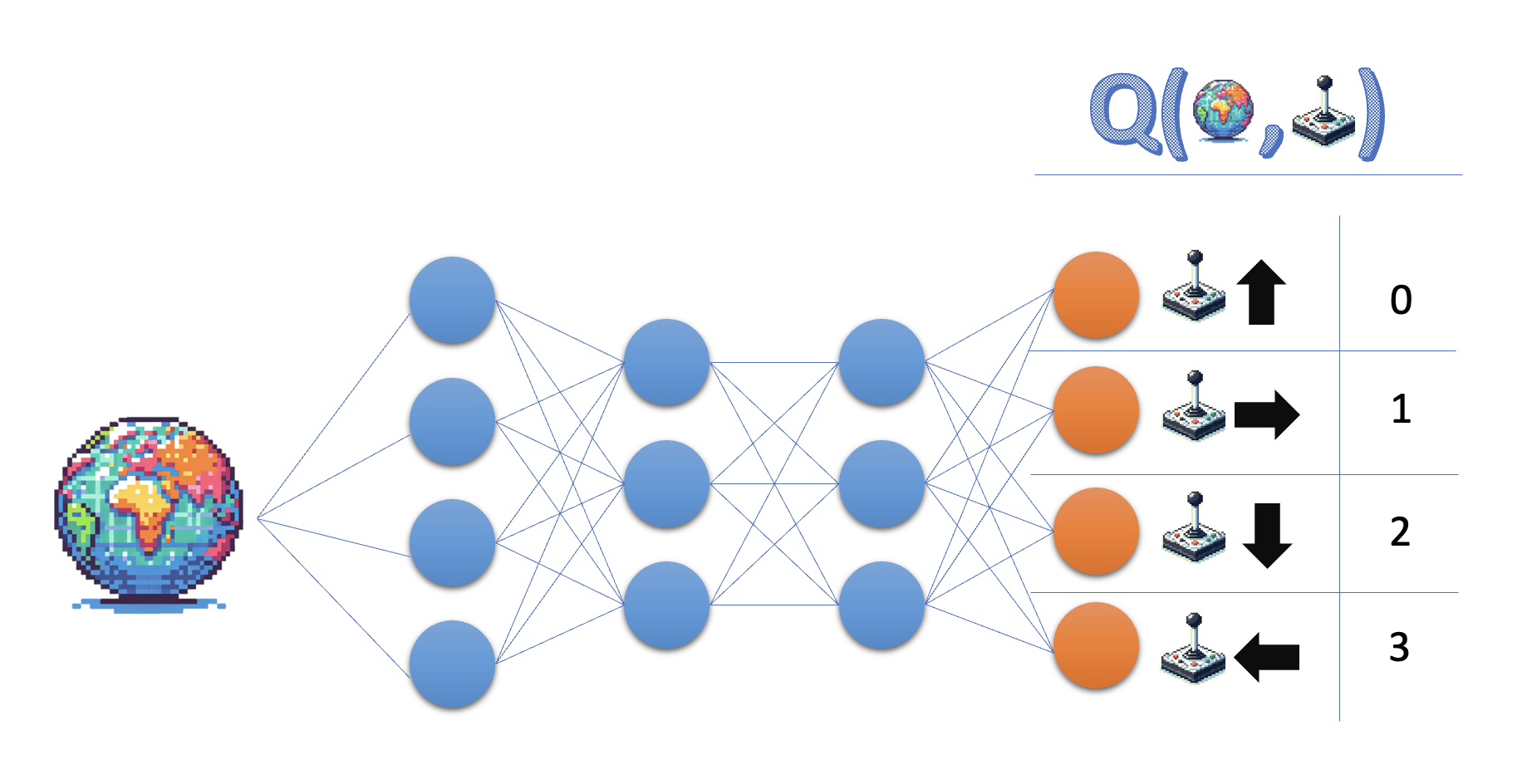

Q-learning:

- Learn the action value function Q

- Policy: select action with highest value

Policy learning:

- Learn the policy directly
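Here is a minimal sketch of how the two approaches select actions. It assumes PyTorch and uses made-up numbers for four actions. Q-learning takes the action with the highest estimated value. A policy network's output is instead treated as a probability distribution and sampled from.

```python
import torch

# Hypothetical Q-values for 4 actions (e.g., the output of a Q-network)
q_values = torch.tensor([1.2, 0.3, 2.5, -0.4])
# Q-learning: act greedily with respect to the learned action values
greedy_action = torch.argmax(q_values).item()

# Policy learning: hypothetical action probabilities output directly by a policy network
action_probs = torch.tensor([0.21, 0.02, 0.74, 0.03])
dist = torch.distributions.Categorical(action_probs)
# The action is sampled, so different calls can return different actions
sampled_action = dist.sample().item()
```

The greedy choice is always action 2 for these values. The sampled action is usually 2 but not always, which is what makes the policy stochastic.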

Policy learning

Advantages:

- Can be stochastic (in deep Q-learning, policies are deterministic)

- Can handle continuous action spaces

- Directly optimizes for the objective

Drawbacks:

- High variance

- Less sample efficient
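The sketch below illustrates the continuous-action point with one common approach. This is an assumption, not necessarily the approach used in this course. The network outputs the mean of a Gaussian, paired with a learned, state-independent standard deviation, and real-valued actions are sampled from that distribution. `GaussianPolicy` and its sizes are hypothetical.

```python
import torch
import torch.nn as nn

class GaussianPolicy(nn.Module):
    """Hypothetical continuous-action policy that returns a Normal distribution."""
    def __init__(self, state_size, action_size):
        super().__init__()
        self.body = nn.Sequential(nn.Linear(state_size, 64), nn.ReLU())
        self.mean_head = nn.Linear(64, action_size)
        # State-independent log standard deviation, learned as a parameter
        self.log_std = nn.Parameter(torch.zeros(action_size))

    def forward(self, state):
        mean = self.mean_head(self.body(state))
        return torch.distributions.Normal(mean, self.log_std.exp())

policy = GaussianPolicy(state_size=3, action_size=1)
dist = policy(torch.zeros(3))
action = dist.sample()                  # a real-valued action of shape (1,)
log_prob = dist.log_prob(action).sum()  # log-density of the sampled action
```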

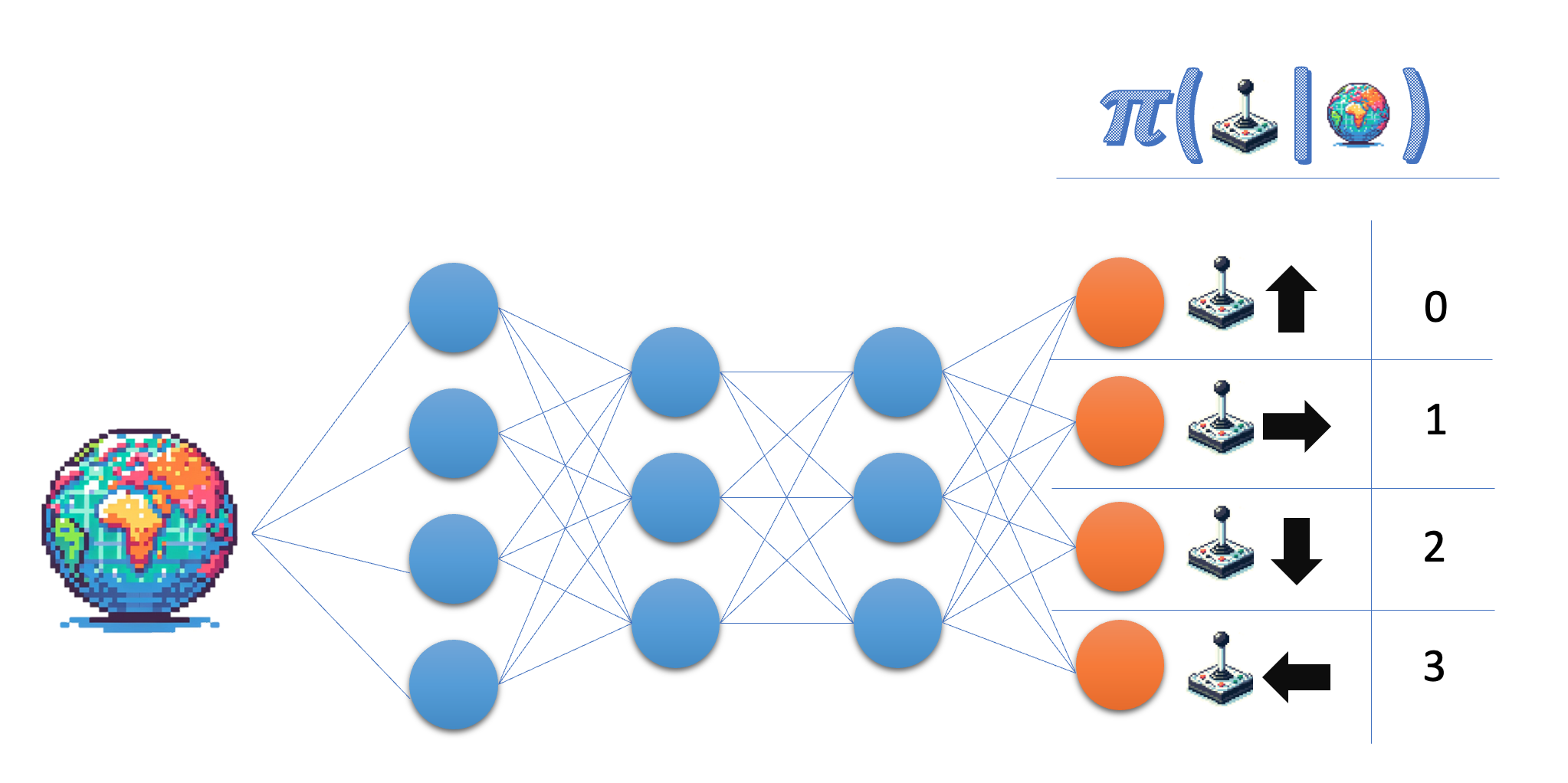

$\pi_\theta(a_t \mid s_t)$: probability distribution over the action $a_t$ in state $s_t$, where:

- $a_t$, $s_t$: action and state at step $t$

- $\theta$: policy parameters (network weights)

The policy network (discrete actions)

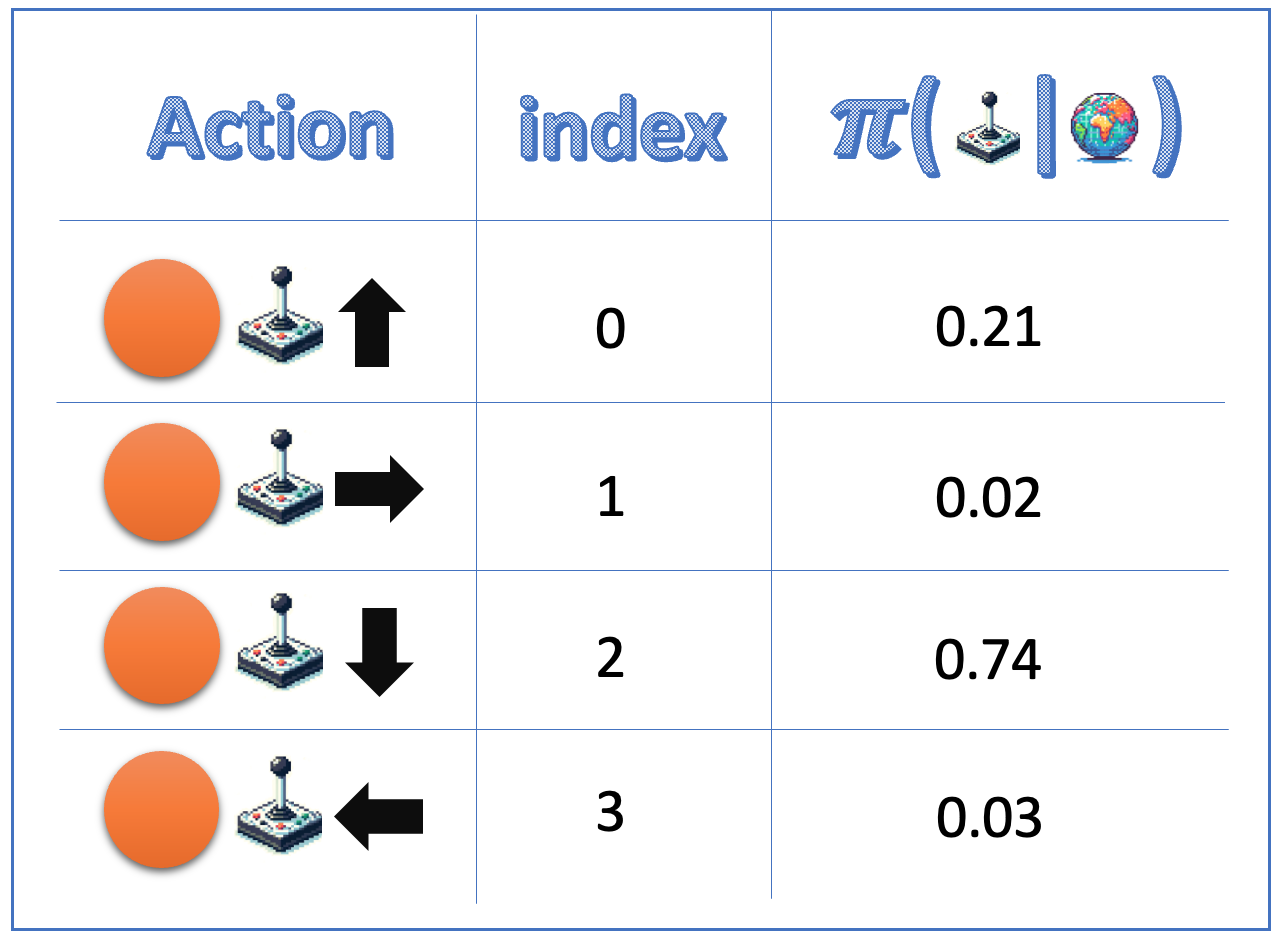

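```python
import torch
import torch.nn as nn

class PolicyNetwork(nn.Module):
    def __init__(self, state_size, action_size):
        super().__init__()
        self.fc1 = nn.Linear(state_size, 64)
        self.fc2 = nn.Linear(64, 64)
        self.fc3 = nn.Linear(64, action_size)

    def forward(self, state):
        # Convert to float32 so the input matches the dtype of the layer weights
        x = torch.relu(self.fc1(torch.tensor(state, dtype=torch.float32)))
        x = torch.relu(self.fc2(x))
        # Softmax turns the final-layer outputs into a probability for each action
        action_probs = torch.softmax(self.fc3(x), dim=-1)
        return action_probs

# Assumes `state_size`, `action_size` and the current `state` come from the environment
policy_network = PolicyNetwork(state_size, action_size)
action_probs = policy_network(state)
print('Action probabilities:', action_probs)
```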

Action probabilities: tensor([0.21, 0.02, 0.74, 0.03])

```python
# Build a categorical distribution from the probabilities, then sample an action
action_dist = torch.distributions.Categorical(action_probs)
action = action_dist.sample()
```
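Policy gradient methods also need the log probability $\log \pi_\theta(a_t \mid s_t)$ of the action that was actually taken. `Categorical.log_prob` computes it. In this minimal sketch, the printed probabilities from the example output are hard-coded for illustration in place of a real network output.

```python
import torch

# Illustrative action probabilities (the values from the example output)
action_probs = torch.tensor([0.21, 0.02, 0.74, 0.03])
action_dist = torch.distributions.Categorical(action_probs)
action = action_dist.sample()
# log pi_theta(a_t | s_t) for the sampled action
log_prob = action_dist.log_prob(action)
```

When `action_probs` comes from a policy network, `log_prob` stays connected to the network's parameters. Calling `backward()` on an expression built from it therefore yields gradients with respect to $\theta$.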

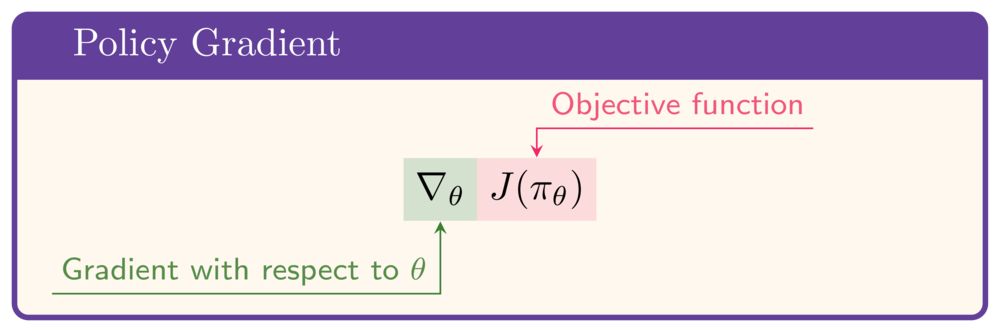

The objective function

The policy must maximize the expected return:

- Assuming the agent follows $\pi_\theta$

- By optimizing the policy parameters $\theta$

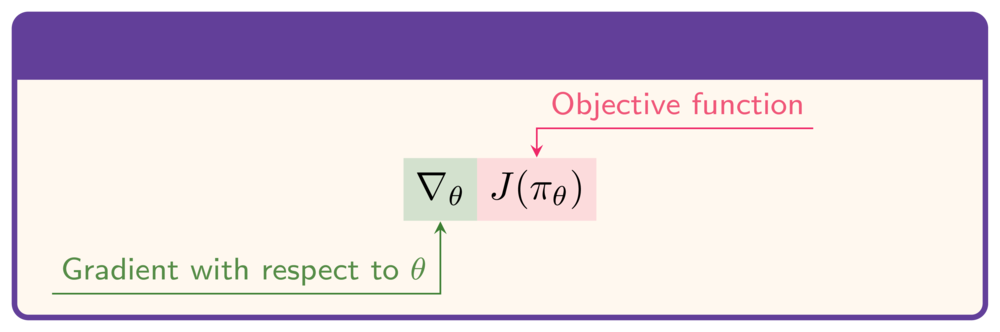

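Objective function, where $\tau$ is a trajectory and $R_\tau$ its return:

$$J(\pi_\theta) = \mathbb{E}_{\tau \sim \pi_\theta}\left[R_\tau\right]$$

- To maximize $J$, we need its gradient with respect to $\theta$: $\nabla_\theta J(\pi_\theta)$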
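Because the objective is an expected return, it can be approximated by averaging the returns of trajectories collected while following $\pi_\theta$. The minimal sketch below assumes that each trajectory is given as a list of rewards and that its return is their sum. The optional discount factor `gamma` is an assumption, since the slides do not say whether returns are discounted. `trajectory_return` is a hypothetical helper and the reward values are made up.

```python
def trajectory_return(rewards, gamma=1.0):
    """Return of one trajectory: the (optionally discounted) sum of its rewards."""
    return sum((gamma ** t) * r for t, r in enumerate(rewards))

# Hypothetical rewards from three trajectories collected by following the policy
trajectories = [
    [1.0, 0.0, 2.0],
    [0.0, 0.0, 1.0],
    [3.0, -1.0],
]
returns = [trajectory_return(rewards) for rewards in trajectories]
# Sample mean of the returns: a Monte Carlo estimate of J
J_estimate = sum(returns) / len(returns)
```

Here the returns are `[3.0, 1.0, 2.0]` and the estimate is `2.0`. Gradient ascent then adjusts $\theta$ so that this average tends to increase.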


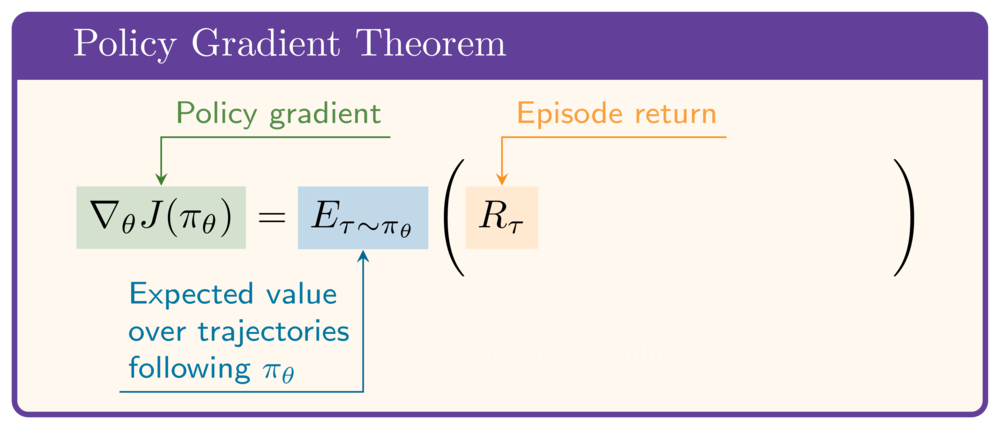

The policy gradient theorem

- Gives a tractable expression for $\nabla_\theta J(\pi_\theta)$

- Expectation over trajectories following $\pi_\theta$

- Collect trajectories and observe the returns


- For each trajectory: consider return $R_\tau$

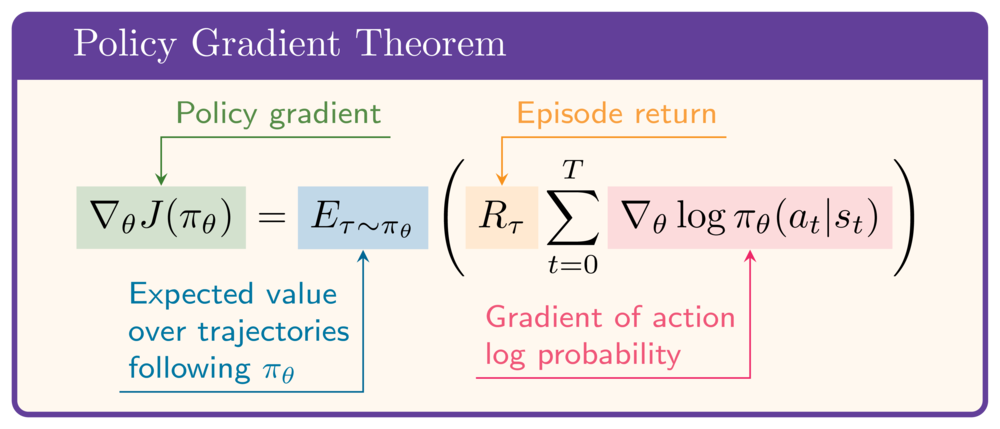


- Multiply it by the sum of the gradients of the log probabilities of the selected actions

- Intuition: nudge $\theta$ in directions that increase the probability of all actions taken in a 'good' (high-return) episode
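Written out in the notation used here, the steps above correspond to the standard form of the theorem. $T$ denotes the last step of the trajectory $\tau$ and is introduced only for this formula:

$$\nabla_\theta J(\pi_\theta) = \mathbb{E}_{\tau \sim \pi_\theta}\left[ R_\tau \sum_{t=0}^{T} \nabla_\theta \log \pi_\theta(a_t \mid s_t) \right]$$

The next block is a minimal sketch of a single-trajectory update based on this expression, the kind of step used by REINFORCE. It is not presented as this course's implementation. The small network, the random states standing in for environment observations, and the return `R_tau = 3.0` are all hypothetical. PyTorch optimizers minimize, so the sketch negates the objective to perform gradient ascent.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical small discrete policy: 4-dimensional states, 2 actions
policy = nn.Sequential(nn.Linear(4, 16), nn.ReLU(), nn.Linear(16, 2), nn.Softmax(dim=-1))
optimizer = torch.optim.Adam(policy.parameters(), lr=1e-2)

# One hypothetical trajectory: random states stand in for environment observations
states = torch.randn(5, 4)
log_probs = []
for state in states:
    dist = torch.distributions.Categorical(policy(state))
    action = dist.sample()
    log_probs.append(dist.log_prob(action))  # log pi_theta(a_t | s_t)

R_tau = 3.0  # hypothetical return observed for this trajectory

# Single-sample estimate of -grad J: -R_tau times the sum of log-probabilities
loss = -R_tau * torch.stack(log_probs).sum()
optimizer.zero_grad()
loss.backward()
optimizer.step()
```

With a positive `R_tau`, the step pushes $\theta$ toward making every action taken in this trajectory more likely, matching the intuition in the last bullet.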
