Proximal policy optimization

Deep Reinforcement Learning in Python

Timothée Carayol

Principal Machine Learning Engineer, Komment

A2C

- A2C policy updates:

  - Based on volatile estimates

  - Can be large and unstable

  - May harm performance

PPO

- PPO sets limits on the size of each policy update

- Improves stability

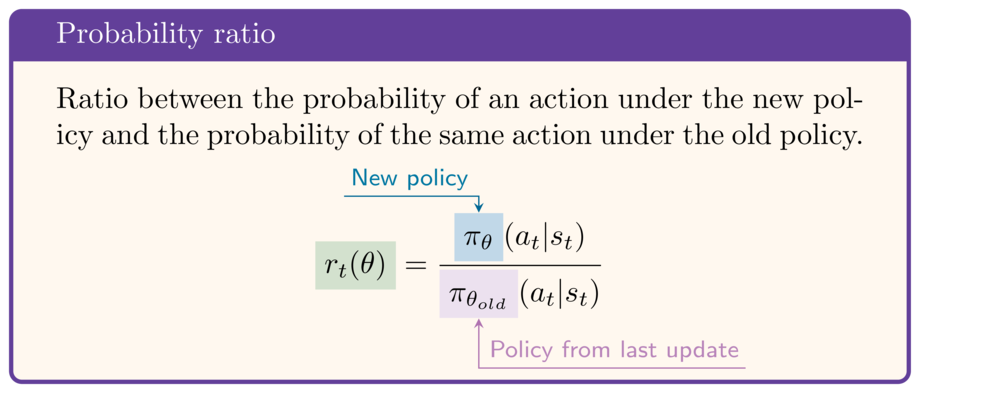

The probability ratio

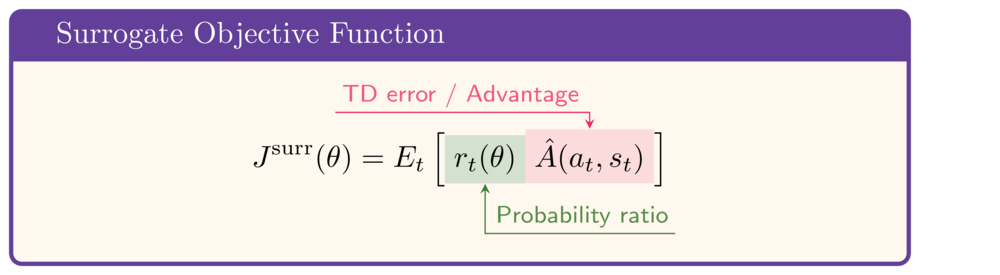

- PPO's main innovation: a new objective function

- At its core:

- How much more likely is action $a_t$ with $\theta$ than with $\theta_{old}$?

    ratio = action_log_prob.exp() / old_action_log_prob.exp().detach()
    # Or equivalently
    ratio = torch.exp(action_log_prob - old_action_log_prob.detach())

Detach the denominator to prevent gradient propagation through the old policy.
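As a minimal sketch, the two forms of the ratio and the effect of detaching can be checked on scalar tensors. The log-probability values below are made up for illustration, and `requires_grad=True` on the old log-probability is only there to show that no gradient reaches it.

```python
import math
import torch

# Hypothetical log-probabilities of the same action under the new and old policy
action_log_prob = torch.tensor(-1.0, requires_grad=True)
old_action_log_prob = torch.tensor(-1.2, requires_grad=True)

# Form 1: ratio of probabilities
ratio_div = action_log_prob.exp() / old_action_log_prob.exp().detach()
# Form 2: exponential of the difference of log-probabilities
ratio_sub = torch.exp(action_log_prob - old_action_log_prob.detach())

# Both forms give the same number (up to floating-point error)
print(math.isclose(ratio_div.item(), ratio_sub.item(), rel_tol=1e-5))

# Backpropagate through the ratio
ratio_sub.backward()
print(action_log_prob.grad)      # gradient reaches the new policy's log-probability
print(old_action_log_prob.grad)  # None: the detached denominator receives no gradient
```

The subtraction form is often preferred in practice because it avoids dividing two potentially very small probabilities.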

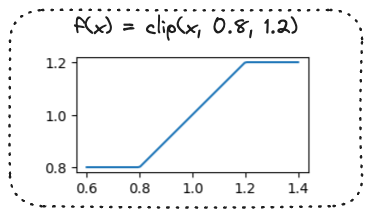

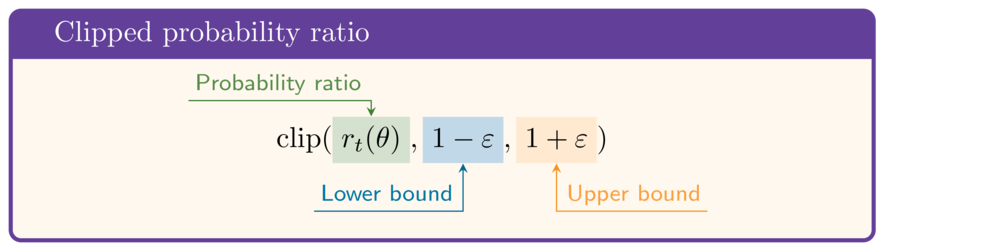

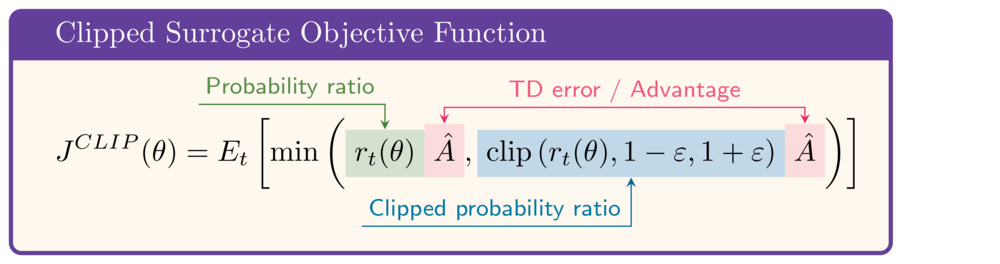

Clipping the probability ratio

- Clip function:

    clipped_ratio = torch.clamp(ratio, 1-epsilon, 1+epsilon)
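To illustrate the clip on both sides of the interval, here is a small sketch applied to a handful of illustrative ratios (the values are assumptions chosen to fall below, inside, and above the range), with `epsilon = 0.2`.

```python
import torch

epsilon = 0.2
# Illustrative ratios: two below 1, one at 1, two above 1
ratio = torch.tensor([0.5, 0.9, 1.0, 1.25, 2.0])

# Values outside [1 - epsilon, 1 + epsilon] are pulled back to the nearest bound
clipped_ratio = torch.clamp(ratio, 1 - epsilon, 1 + epsilon)
print(clipped_ratio)  # values: 0.8, 0.9, 1.0, 1.2, 1.2
```

Ratios already inside the interval pass through unchanged; only the extremes are affected.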

The calculate_ratios function

    import torch

    def calculate_ratios(action_log_prob, action_log_prob_old, epsilon):
        # Convert log-probabilities to probabilities
        prob = action_log_prob.exp()
        prob_old = action_log_prob_old.exp()
        # No gradient should flow through the old policy
        prob_old_detached = prob_old.detach()
        ratio = prob / prob_old_detached
        clipped_ratio = torch.clamp(ratio, 1-epsilon, 1+epsilon)
        return (ratio, clipped_ratio)

Example with epsilon = .2:

    Ratio: tensor(1.25)
    Clipped ratio: tensor(1.20)

The PPO objective function

    surr1 = ratio * td_error.detach()
    surr2 = clipped_ratio * td_error.detach()
    objective = torch.min(surr1, surr2)

- Surrogate with clipped ratio:

$$\mathrm{clip}(r_t(\theta),1-\varepsilon,1+\varepsilon)\hat{A}$$

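- PPO clipped surrogate objective function:

$$\min\left(r_t(\theta)\hat{A},\ \mathrm{clip}(r_t(\theta),1-\varepsilon,1+\varepsilon)\hat{A}\right)$$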

- More stable than A2C
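How the minimum of the two surrogates behaves may be easier to see with a few hand-picked numbers. The sketch below assumes `epsilon = 0.2` and uses made-up ratios and advantages covering the four sign and direction combinations; it is an illustration, not part of the original slides.

```python
import torch

epsilon = 0.2
# Hypothetical cases: ratio above or below the clip range, advantage positive or negative
ratio = torch.tensor([1.5, 1.5, 0.5, 0.5])
advantage = torch.tensor([1.0, -1.0, 1.0, -1.0])

clipped_ratio = torch.clamp(ratio, 1 - epsilon, 1 + epsilon)
surr1 = ratio * advantage          # 1.5, -1.5, 0.5, -0.5
surr2 = clipped_ratio * advantage  # 1.2, -1.2, 0.8, -0.8

# The element-wise minimum keeps the more pessimistic of the two surrogates
objective = torch.min(surr1, surr2)  # 1.2, -1.5, 0.5, -0.8
print(objective)
```

In the first and last cases the clipped surrogate is selected, so the objective stops rewarding further movement of the ratio in that direction. In the other two cases the unclipped surrogate is kept, so the objective is never more optimistic than the unclipped one.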

PPO loss calculation

    def calculate_losses(critic_network, action_log_prob, action_log_prob_old,
                         reward, state, next_state, done):
        # calculate TD error (same as A2C)
        # gamma and epsilon are expected to be defined in the enclosing scope
        value = critic_network(state)
        next_value = critic_network(next_state)
        td_target = reward + gamma * next_value * (1-done)
        td_error = td_target - value
        ...
        # clipped surrogate objective; td_error is detached for the actor
        ratio, clipped_ratio = calculate_ratios(action_log_prob, action_log_prob_old, epsilon)
        surr1 = ratio * td_error.detach()
        surr2 = clipped_ratio * td_error.detach()
        objective = torch.min(surr1, surr2)
        # maximizing the objective means minimizing its negative
        actor_loss = -objective
        critic_loss = td_error ** 2
        return actor_loss, critic_loss
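As a rough sketch of how these losses could be used for a single update, the example below wires them to a small actor and critic. Everything outside `calculate_ratios` and `calculate_losses` is an assumption made for illustration: the network sizes, the 4-dimensional state with 2 actions, the hyperparameters, the Adam optimizers, and the random transition.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
gamma, epsilon = 0.99, 0.2  # assumed hyperparameters

def calculate_ratios(action_log_prob, action_log_prob_old, epsilon):
    ratio = torch.exp(action_log_prob - action_log_prob_old.detach())
    clipped_ratio = torch.clamp(ratio, 1 - epsilon, 1 + epsilon)
    return ratio, clipped_ratio

def calculate_losses(critic_network, action_log_prob, action_log_prob_old,
                     reward, state, next_state, done):
    value = critic_network(state)
    next_value = critic_network(next_state)
    td_target = reward + gamma * next_value * (1 - done)
    td_error = td_target - value
    ratio, clipped_ratio = calculate_ratios(
        action_log_prob, action_log_prob_old, epsilon)
    surr1 = ratio * td_error.detach()
    surr2 = clipped_ratio * td_error.detach()
    actor_loss = -torch.min(surr1, surr2)
    critic_loss = td_error ** 2
    return actor_loss, critic_loss

# Hypothetical networks: 4-dimensional state, 2 discrete actions
actor = nn.Sequential(nn.Linear(4, 16), nn.ReLU(), nn.Linear(16, 2))
critic = nn.Sequential(nn.Linear(4, 16), nn.ReLU(), nn.Linear(16, 1))
actor_optimizer = torch.optim.Adam(actor.parameters(), lr=1e-3)
critic_optimizer = torch.optim.Adam(critic.parameters(), lr=1e-3)

# A made-up transition (state, reward, next_state, done)
state, next_state = torch.randn(4), torch.randn(4)
reward, done = 1.0, 0

# Sample an action and record its log-probability under the current policy
dist = torch.distributions.Categorical(logits=actor(state))
action = dist.sample()
action_log_prob = dist.log_prob(action)
# Before the first update the old policy equals the current one, so the ratio is 1
action_log_prob_old = action_log_prob.detach()

actor_loss, critic_loss = calculate_losses(
    critic, action_log_prob, action_log_prob_old,
    reward, state, next_state, done)

# Each loss is a one-element tensor; sum() reduces it to a scalar for backward()
actor_optimizer.zero_grad()
actor_loss.sum().backward()
actor_optimizer.step()

critic_optimizer.zero_grad()
critic_loss.sum().backward()
critic_optimizer.step()
```

Because `td_error` is detached in the surrogates, the actor update does not touch the critic's parameters, and the two backward passes use separate computation graphs.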

Let's practice!
