Transforming features for better clusterings

Unsupervised Learning in Python

Benjamin Wilson

Director of Research at lateral.io

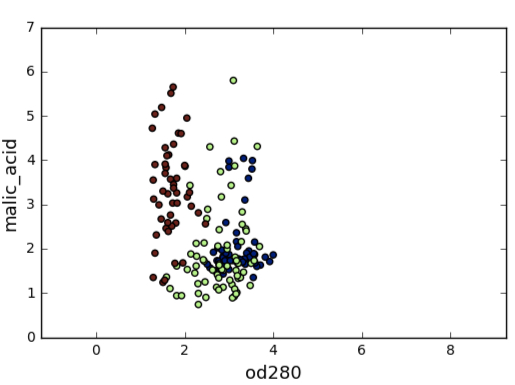

Piedmont wines dataset

178 samples from 3 distinct varieties of red wine: Barolo, Grignolino and Barbera

Features measure chemical composition, e.g., alcohol content

Also visual properties, like "color intensity"
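If you want to follow along without the course's own files, one option is scikit-learn's bundled copy of the UCI wine data. It also has 178 samples and 3 classes, and its class sizes (59, 71 and 48) match the Barolo, Grignolino and Barbera column totals in the crosstabs of this section. This is a minimal sketch under one loud assumption: the mapping of `class_0`, `class_1`, `class_2` to Barolo, Grignolino, Barbera (the `VARIETY_NAMES` array) is mine, not the original's.

```python
import numpy as np
from sklearn.datasets import load_wine

# Bundled copy of the UCI wine data (no download needed)
wine = load_wine()
samples = wine.data  # NumPy array, shape (n_samples, n_features)

# ASSUMED mapping from class index to variety name (hypothetical, for illustration)
VARIETY_NAMES = np.array(["Barolo", "Grignolino", "Barbera"])
varieties = VARIETY_NAMES[wine.target]

print(samples.shape)
print(wine.feature_names[:2])
```

The later sketches reuse this setup, so each one reloads the data to stay self-contained.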

Clustering the wines

from sklearn.cluster import KMeans

model = KMeans(n_clusters=3)

labels = model.fit_predict(samples)

Clusters vs. varieties

import pandas as pd

df = pd.DataFrame({'labels': labels, 'varieties': varieties})

ct = pd.crosstab(df['labels'], df['varieties'])

print(ct)

| labels \ varieties | Barbera | Barolo | Grignolino |
|---|---|---|---|
| 0 | 29 | 13 | 20 |
| 1 | 0 | 46 | 1 |
| 2 | 19 | 0 | 50 |
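A self-contained sketch of the two steps above (cluster, then cross-tabulate), assuming scikit-learn's bundled wine data and the same hypothetical class-to-variety mapping as before. The `n_init` and `random_state` values are my choices for reproducibility, not the original's.

```python
import numpy as np
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.datasets import load_wine

wine = load_wine()
samples = wine.data
# ASSUMED class-to-variety mapping (hypothetical)
varieties = np.array(["Barolo", "Grignolino", "Barbera"])[wine.target]

# Cluster the raw, unscaled features; fixed seed so reruns agree
model = KMeans(n_clusters=3, n_init=10, random_state=42)
labels = model.fit_predict(samples)

# Rows are cluster labels, columns are varieties
df = pd.DataFrame({"labels": labels, "varieties": varieties})
ct = pd.crosstab(df["labels"], df["varieties"])
print(ct)
```

Cluster numbers are arbitrary, so the same groups may show up in different rows than in the table above.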

Feature variances

The wine features have very different variances!

The variance of a feature measures the spread of its values

| feature | variance |
|---|---|
| alcohol | 0.65 |
| malic_acid | 1.24 |
| ... | ... |
| od280 | 0.50 |
| proline | 99166.71 |

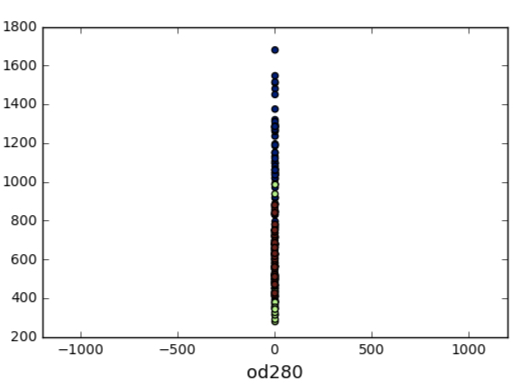
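A quick way to list per-feature variances, sketched with pandas on scikit-learn's bundled wine data (an assumption; the original's exact figures may have been computed differently, so small differences in the last digit are possible).

```python
import pandas as pd
from sklearn.datasets import load_wine

wine = load_wine()
df = pd.DataFrame(wine.data, columns=wine.feature_names)

# pandas' var() computes the sample variance (ddof=1) of each column
variances = df.var()
print(variances.round(2))
```

Proline's variance is orders of magnitude larger than every other feature's, which is what the table above shows.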


StandardScaler

In KMeans: feature variance = feature influence (high-variance features dominate the distance calculations)

StandardScaler transforms each feature to have mean 0 and variance 1

Features are said to be "standardized"
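A minimal sketch of what standardization does, using a made-up two-column array (illustrative values, not the wine data). It checks that StandardScaler matches the per-feature formula z = (x - mean) / std, where std is the population standard deviation, and shows why variance means influence: k-means compares samples by Euclidean distance, so a feature with a huge spread dominates the squared differences until it is scaled.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Toy data (made-up values): column 0 has a small spread, column 1 a huge one
X = np.array([[13.0, 500.0],
              [14.0, 1500.0],
              [12.5, 700.0],
              [13.5, 1100.0]])

# Manual standardization: z = (x - mean) / std, with population std (ddof=0)
manual = (X - X.mean(axis=0)) / X.std(axis=0)
scaled = StandardScaler().fit_transform(X)
print(np.allclose(manual, scaled))  # True

# Per-feature contribution to the squared Euclidean distance between rows 0 and 1
raw_contrib = (X[0] - X[1]) ** 2
scaled_contrib = (scaled[0] - scaled[1]) ** 2
print(raw_contrib)     # raw: column 1 dominates
print(scaled_contrib)  # standardized: contributions are comparable
```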

sklearn StandardScaler

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

scaler.fit(samples)

StandardScaler(copy=True, with_mean=True, with_std=True)

samples_scaled = scaler.transform(samples)
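To confirm the scaled wine features really are standardized, here is a sketch that repeats the steps above on scikit-learn's bundled wine data (an assumption) and inspects the learned statistics stored in `mean_` and `scale_`.

```python
import numpy as np
from sklearn.datasets import load_wine
from sklearn.preprocessing import StandardScaler

samples = load_wine().data

scaler = StandardScaler()
scaler.fit(samples)  # learns per-feature mean_ and scale_ (standard deviation)
samples_scaled = scaler.transform(samples)

# Each standardized feature now has mean ~0 and variance ~1
print(samples_scaled.mean(axis=0).round(6))
print(samples_scaled.var(axis=0).round(6))
```

In scikit-learn 0.23 and later, the fitted scaler is displayed as `StandardScaler()` rather than with all its parameters, because only non-default parameters are shown.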

Similar methods

StandardScaler and KMeans have similar methods

Use fit() / transform() with StandardScaler

Use fit() / predict() with KMeans
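A sketch of the parallel between the two APIs: `fit()` learns something from the data, then `transform()` or `predict()` applies it, including to samples not seen during fitting. The split into `train` and `new_samples` is hypothetical, chosen only for illustration, and the wine data is scikit-learn's bundled copy.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_wine
from sklearn.preprocessing import StandardScaler

samples = load_wine().data
# Hypothetical split: treat the last 10 rows as "new" samples
train, new_samples = samples[:-10], samples[-10:]

# StandardScaler: fit() learns means and std devs, transform() applies them
scaler = StandardScaler()
scaler.fit(train)
train_scaled = scaler.transform(train)
new_scaled = scaler.transform(new_samples)

# KMeans: fit() learns centroids, predict() assigns samples to the nearest one
kmeans = KMeans(n_clusters=3, n_init=10, random_state=42)
kmeans.fit(train_scaled)
new_labels = kmeans.predict(new_scaled)
print(new_labels)
```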

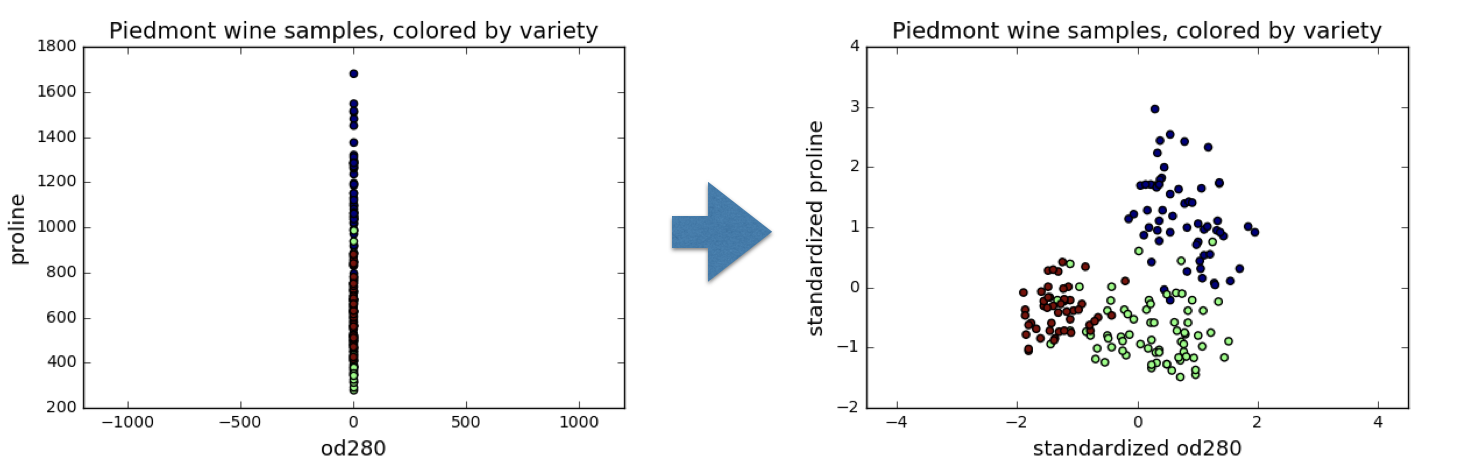

StandardScaler, then KMeans

Need to perform two steps:

StandardScaler, then KMeans

Use sklearn pipeline to combine multiple steps

Data flows from one step into the next
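To see how data flows through a pipeline, this sketch (on scikit-learn's bundled wine data, an assumption) checks that `pipeline.predict()` gives the same labels as calling the scaler's `transform()` and then KMeans' `predict()` by hand. The step names come from `make_pipeline`, which names each step after its class in lowercase.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import load_wine
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

samples = load_wine().data

pipeline = make_pipeline(StandardScaler(),
                         KMeans(n_clusters=3, n_init=10, random_state=42))
pipeline.fit(samples)  # fits the scaler, then fits KMeans on the scaled output

# make_pipeline names each step after its class, in lowercase
scaler = pipeline.named_steps["standardscaler"]
kmeans = pipeline.named_steps["kmeans"]

# predict() runs the scaler's transform(), then KMeans' predict()
manual_labels = kmeans.predict(scaler.transform(samples))
pipeline_labels = pipeline.predict(samples)
print(np.array_equal(manual_labels, pipeline_labels))  # True
```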

Pipelines combine multiple steps

from sklearn.preprocessing import StandardScaler

from sklearn.cluster import KMeans

scaler = StandardScaler()

kmeans = KMeans(n_clusters=3)

from sklearn.pipeline import make_pipeline

pipeline = make_pipeline(scaler, kmeans)

pipeline.fit(samples)

Pipeline(steps=...)

labels = pipeline.predict(samples)

Feature standardization improves clustering

With feature standardization:

| labels \ varieties | Barbera | Barolo | Grignolino |
|---|---|---|---|
| 0 | 0 | 59 | 3 |
| 1 | 48 | 0 | 3 |
| 2 | 0 | 0 | 65 |

Without feature standardization, the clustering was very bad:

| labels \ varieties | Barbera | Barolo | Grignolino |
|---|---|---|---|
| 0 | 29 | 13 | 20 |
| 1 | 0 | 46 | 1 |
| 2 | 19 | 0 | 50 |
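The crosstabs above compare the two clusterings by eye. A sketch that puts a single number on each one, assuming scikit-learn's bundled wine data and swapping in the adjusted Rand index (`adjusted_rand_score`), a metric the original does not use: 1.0 means perfect agreement with the true classes and values near 0 mean chance-level agreement.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_wine
from sklearn.metrics import adjusted_rand_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

wine = load_wine()
samples, target = wine.data, wine.target

def make_kmeans():
    # Fixed seed so both runs are reproducible
    return KMeans(n_clusters=3, n_init=10, random_state=42)

# Without standardization: KMeans on the raw features
raw_labels = make_kmeans().fit_predict(samples)
# With standardization: StandardScaler, then KMeans
scaled_labels = make_pipeline(StandardScaler(), make_kmeans()).fit_predict(samples)

ari_raw = adjusted_rand_score(target, raw_labels)
ari_scaled = adjusted_rand_score(target, scaled_labels)
print(f"without scaling: {ari_raw:.2f}")
print(f"with scaling:    {ari_scaled:.2f}")
```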

sklearn preprocessing steps

StandardScaler is a "preprocessing" step

MaxAbsScaler and Normalizer are other examples
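A sketch of the two other preprocessing steps on a made-up array (illustrative values only). The key difference: MaxAbsScaler works per feature (column), like StandardScaler, while Normalizer works per sample (row).

```python
import numpy as np
from sklearn.preprocessing import MaxAbsScaler, Normalizer

# Toy data (made-up values)
X = np.array([[1.0, -200.0],
              [3.0, 100.0],
              [-2.0, 400.0]])

# MaxAbsScaler: divides each feature (column) by its maximum absolute value
max_abs = MaxAbsScaler().fit_transform(X)
print(np.abs(max_abs).max(axis=0))         # [1. 1.]

# Normalizer: rescales each sample (row) to unit Euclidean (L2) norm
normalized = Normalizer().fit_transform(X)
print(np.linalg.norm(normalized, axis=1))  # [1. 1. 1.]
```

Either can take StandardScaler's place as the first argument to `make_pipeline`; which one suits a dataset is a judgment call rather than a rule.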

Let's practice!
