Understanding model optimization

Introduction to Deep Learning in Python

Dan Becker

Data Scientist and contributor to Keras and TensorFlow libraries

Why optimization is hard

- Simultaneously optimizing 1000s of parameters with complex relationships

- Updates may not improve model meaningfully

- Updates too small (if learning rate is low) or too large (if learning rate is high)
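The effect of the learning rate can be seen on a toy problem. The sketch below is not from the course: it assumes a single parameter `w` and the loss `w**2`, and the helper `gradient_descent` is a hypothetical name chosen for illustration.

```python
def gradient_descent(lr, start=5.0, steps=100):
    """Minimize the toy loss f(w) = w**2 and return the final w."""
    w = start
    for _ in range(steps):
        grad = 2 * w        # derivative of w**2 with respect to w
        w = w - lr * grad   # plain gradient descent update
    return w

results = {}
for lr in [0.000001, 0.01, 1]:
    w_final = gradient_descent(lr)
    results[lr] = w_final
    print(f"lr={lr}: final w = {w_final:.4f}, loss = {w_final ** 2:.4f}")
```

With the smallest rate, `w` barely moves from its starting value. With 0.01 it shrinks steadily toward the minimum at 0. With 1, each update overshoots to the opposite side of the minimum, so the loss never improves.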

Stochastic gradient descent

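# Imports assume the Keras API bundled with TensorFlow
from tensorflow.keras.layers import Dense
from tensorflow.keras.models import Sequential
from tensorflow.keras.optimizers import SGD

# input_shape, predictors and target are assumed to be defined beforehand
def get_new_model(input_shape=input_shape):
    model = Sequential()
    model.add(Dense(100, activation='relu', input_shape=input_shape))
    model.add(Dense(100, activation='relu'))
    model.add(Dense(2, activation='softmax'))
    return model

lr_to_test = [0.000001, 0.01, 1]

# Loop over learning rates
for lr in lr_to_test:
    # Build a fresh, untrained model for each learning rate
    model = get_new_model()
    # Older Keras releases spell this argument `lr`
    my_optimizer = SGD(learning_rate=lr)
    model.compile(optimizer=my_optimizer, loss='categorical_crossentropy')
    model.fit(predictors, target)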

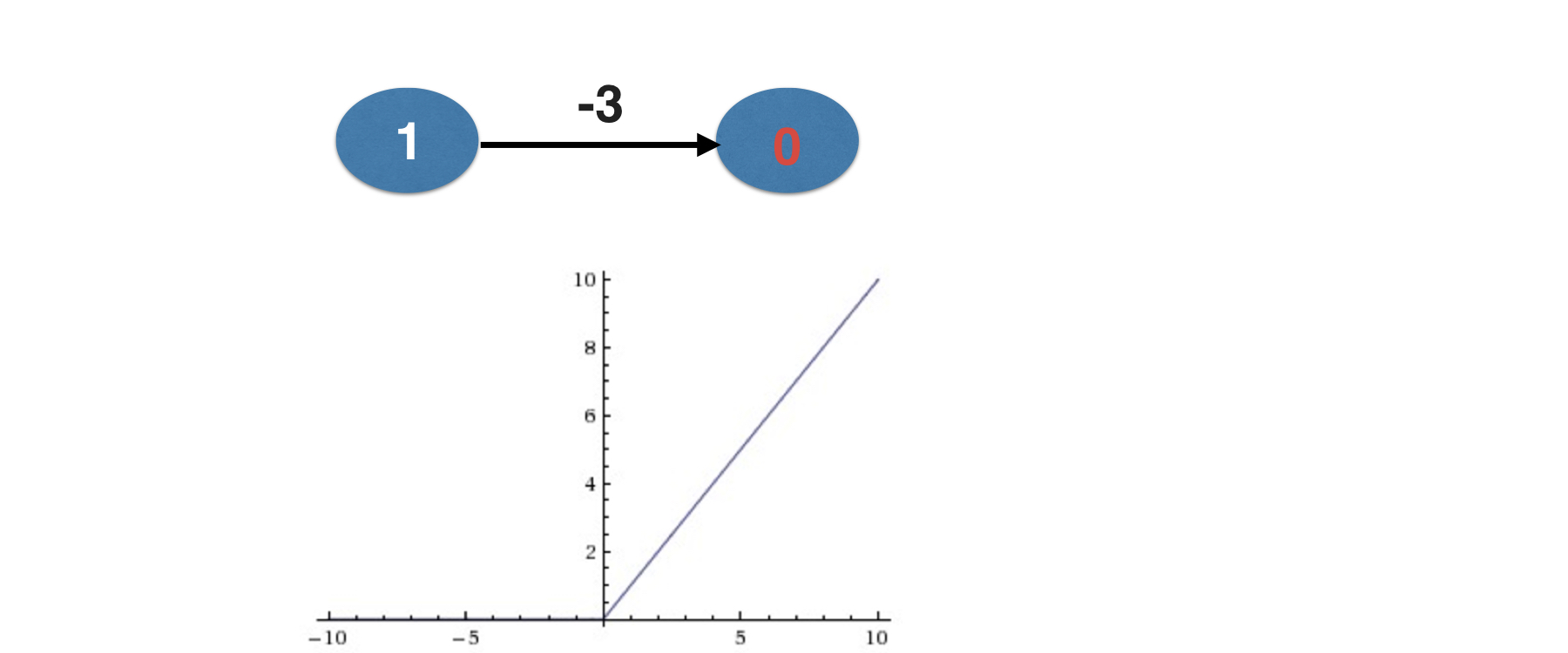

The dying neuron problem

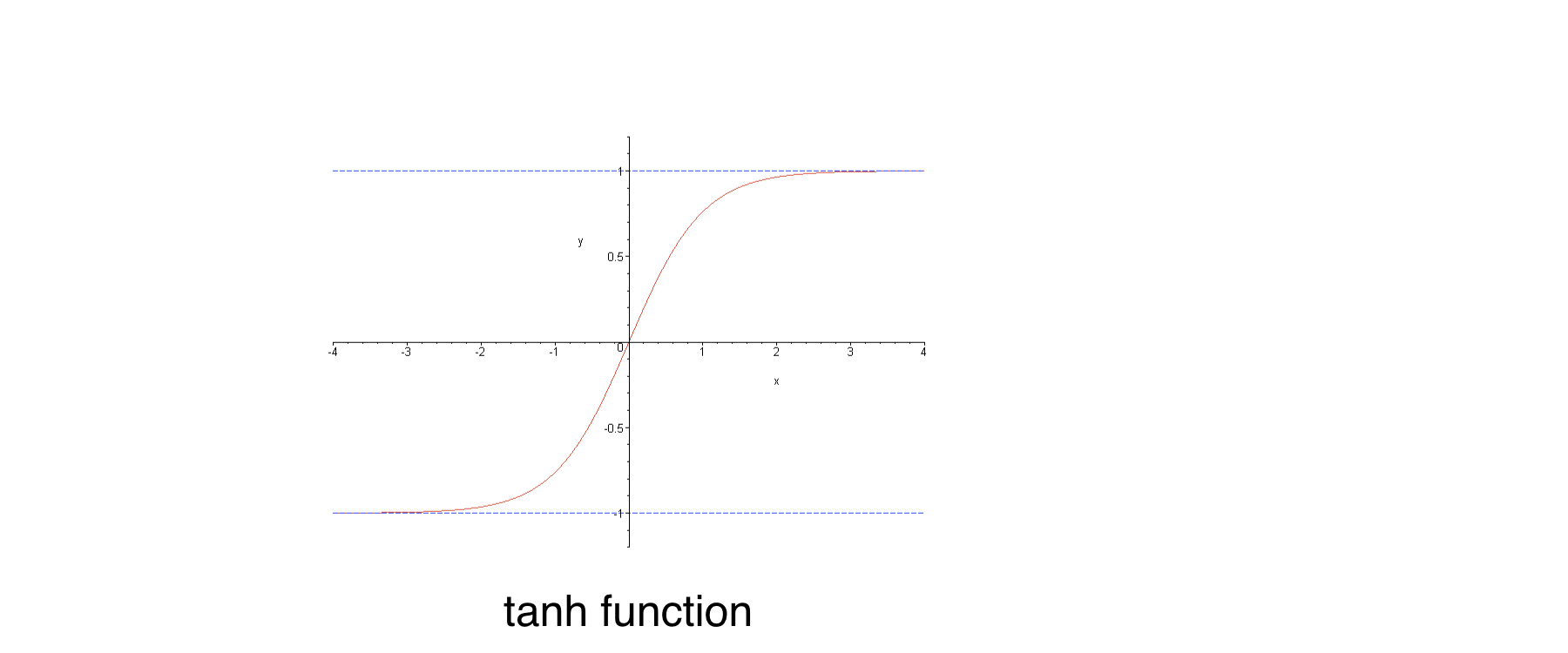
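The slides do not define the problem in text. As commonly described, a ReLU neuron "dies" when its input is negative for every row of data: its output and its slope are both 0, so gradient descent can no longer change its weights. A minimal sketch, assuming a hypothetical single neuron with one weight and a large negative bias:

```python
def relu(z):
    return max(0.0, z)

def relu_slope(z):
    # Slope of ReLU: 1 for positive input, 0 otherwise
    return 1.0 if z > 0 else 0.0

# Hypothetical neuron whose bias pushes every input below zero
weight, bias = 0.5, -10.0
inputs = [0.0, 1.0, 2.0, 3.0]

outputs = [relu(weight * x + bias) for x in inputs]
# Gradient of the output with respect to the weight is slope * x
weight_grads = [relu_slope(weight * x + bias) * x for x in inputs]

print("outputs:     ", outputs)
print("weight grads:", weight_grads)
```

Every output and every gradient is zero, so no update computed from this data can move the weight.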

Vanishing gradients

- Occur when many layers have very small slopes (e.g. because their inputs fall on the flat parts of the tanh curve)

- In deep networks, the weight updates computed by backprop are then close to 0
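The arithmetic behind these two points can be sketched directly. The example below is a simplification, not the course's code: it assumes every layer sits at the same pre-activation value and ignores the weights, so the chain rule reduces to multiplying identical tanh slopes. The helper names are hypothetical.

```python
import math

def tanh_slope(z):
    # Derivative of tanh(z) is 1 - tanh(z)**2
    return 1.0 - math.tanh(z) ** 2

def chained_slope(z, n_layers):
    """Product of n_layers identical tanh slopes (weights ignored)."""
    return tanh_slope(z) ** n_layers

flat = tanh_slope(3.0)    # input on the flat part of the tanh curve
steep = tanh_slope(0.0)   # input at the steepest point of the curve

for n_layers in [1, 5, 10]:
    print(f"{n_layers} layers: chained slope = {chained_slope(3.0, n_layers):.3e}")
```

On the flat part of the curve a single slope is already small, and multiplying it across layers shrinks the gradient toward 0 very quickly.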

Let's practice!
