Introduction to deep Q learning

Deep Reinforcement Learning in Python

Timothée Carayol

Principal Machine Learning Engineer, Komment

What is Deep Q Learning?

Q-Learning refresher

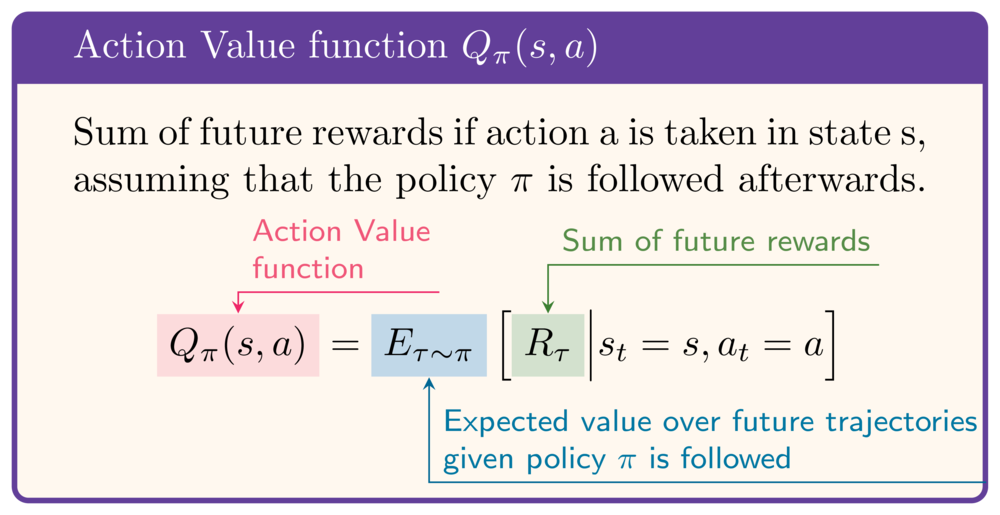

Knowing $Q$ would give us the optimal policy:

$$\pi(s_t) = \arg\max_a Q(s_t, a)$$

Goal of Q-learning: learn $Q$ over time
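As a minimal sketch of how a known $Q$ turns into a policy, assume a tiny discrete problem where $Q$ is stored as a table. The table `Q_TABLE` and its values are hypothetical, invented for illustration. The greedy policy simply picks, in each state, the action with the highest Q-value.

```python
# Hypothetical Q-table: Q_TABLE[state][action] is the estimated value of
# taking `action` in `state` (values invented for illustration).
Q_TABLE = [
    [0.1, 0.5, 0.2],  # state 0
    [0.7, 0.3, 0.0],  # state 1
    [0.2, 0.2, 0.9],  # state 2
]


def greedy_policy(q_table, state):
    """Return argmax_a Q(state, a): the action with the highest Q-value."""
    q_values = q_table[state]
    return max(range(len(q_values)), key=lambda a: q_values[a])


for s in range(len(Q_TABLE)):
    print(f"state {s} -> action {greedy_policy(Q_TABLE, s)}")
```

Ties are broken in favour of the lowest action index, because Python's `max` returns the first maximal element.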

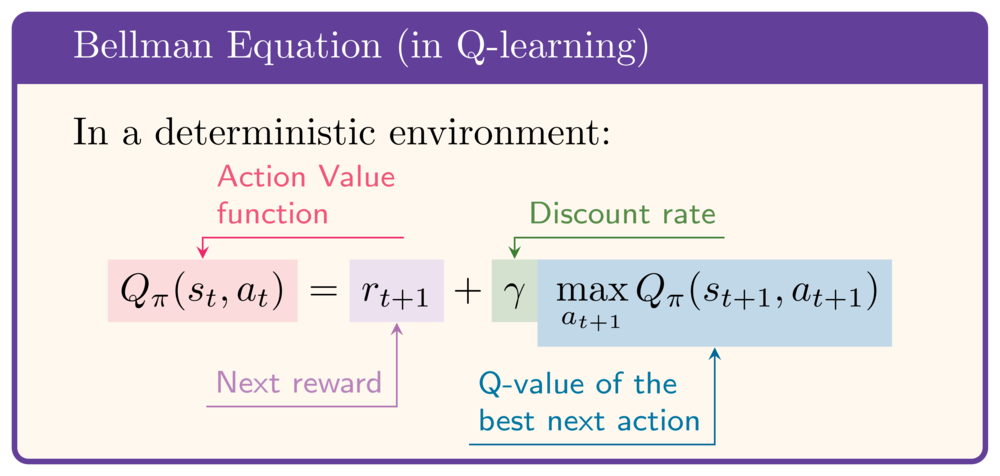

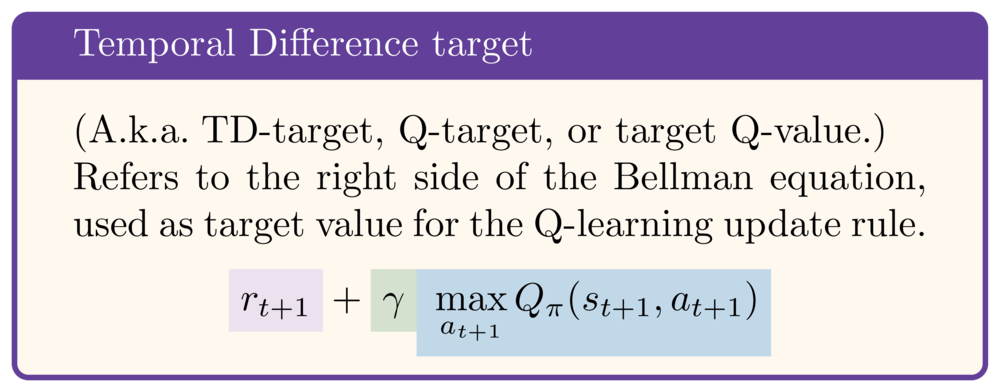

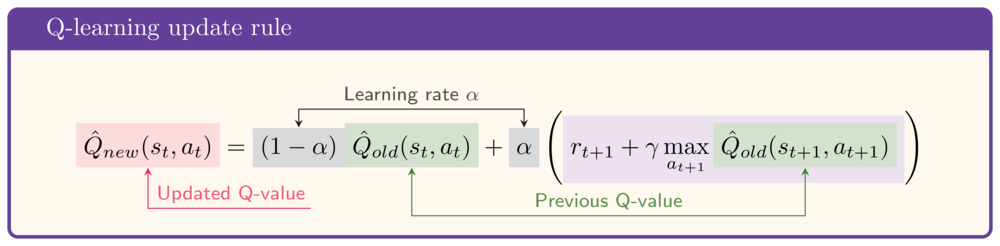

- Bellman equation: a recursive formula for $Q$

- Right-hand side of the Bellman equation: the "TD target"

- Q-learning uses the TD target from the Bellman equation to update its estimate $\hat{Q}$ after each step

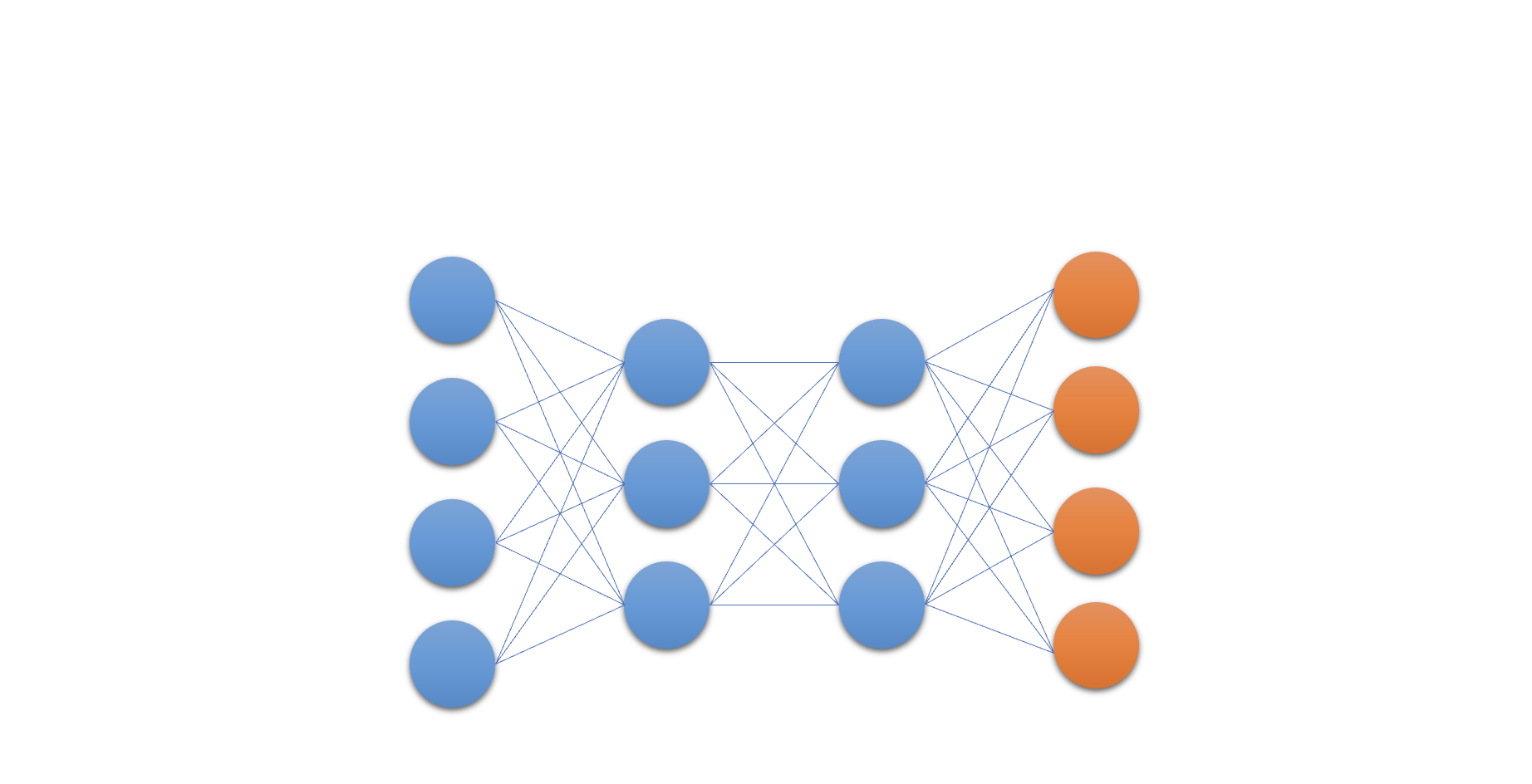
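In its standard tabular form, the Bellman equation reads $Q(s_t, a_t) = r_{t+1} + \gamma \max_a Q(s_{t+1}, a)$, and Q-learning moves its estimate towards the TD target on the right-hand side:

$$\hat{Q}(s_t, a_t) \leftarrow (1 - \alpha)\,\hat{Q}(s_t, a_t) + \alpha\,\big(r_{t+1} + \gamma \max_a \hat{Q}(s_{t+1}, a)\big)$$

The sketch below implements one such update in plain Python. The learning rate `ALPHA`, discount factor `GAMMA`, the dictionary-based table and the transition values are all assumptions for illustration; it also assumes that a terminal state contributes no future value.

```python
from collections import defaultdict

ALPHA = 0.1   # learning rate (assumed value)
GAMMA = 0.99  # discount factor (assumed value)
N_ACTIONS = 2

# Q-hat: maps each state to a list of Q-value estimates, one per action (all start at 0).
q_hat = defaultdict(lambda: [0.0] * N_ACTIONS)


def td_target(reward, next_state, terminated):
    """Right-hand side of the Bellman equation: r + gamma * max_a Q-hat(s', a)."""
    if terminated:
        return reward  # assumption: no future value after a terminal state
    return reward + GAMMA * max(q_hat[next_state])


def q_learning_update(state, action, reward, next_state, terminated=False):
    """Move Q-hat(s, a) a fraction ALPHA of the way towards the TD target."""
    target = td_target(reward, next_state, terminated)
    q_hat[state][action] = (1 - ALPHA) * q_hat[state][action] + ALPHA * target


# One made-up transition: in state "s0", action 1 yields reward 1.0 and leads to "s1".
q_learning_update("s0", 1, 1.0, "s1")
print(q_hat["s0"])  # [0.0, 0.1]
```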

The Q-Network


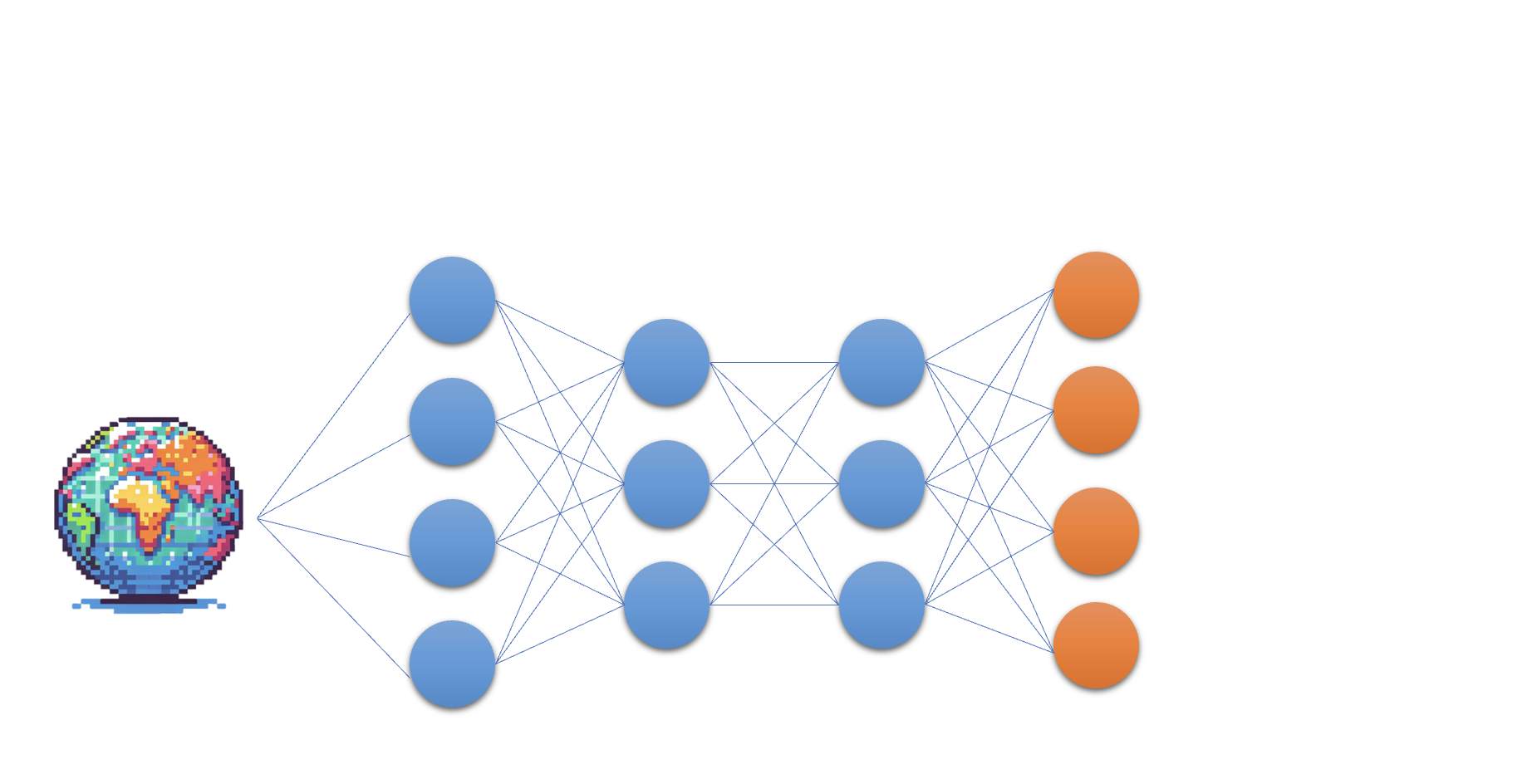


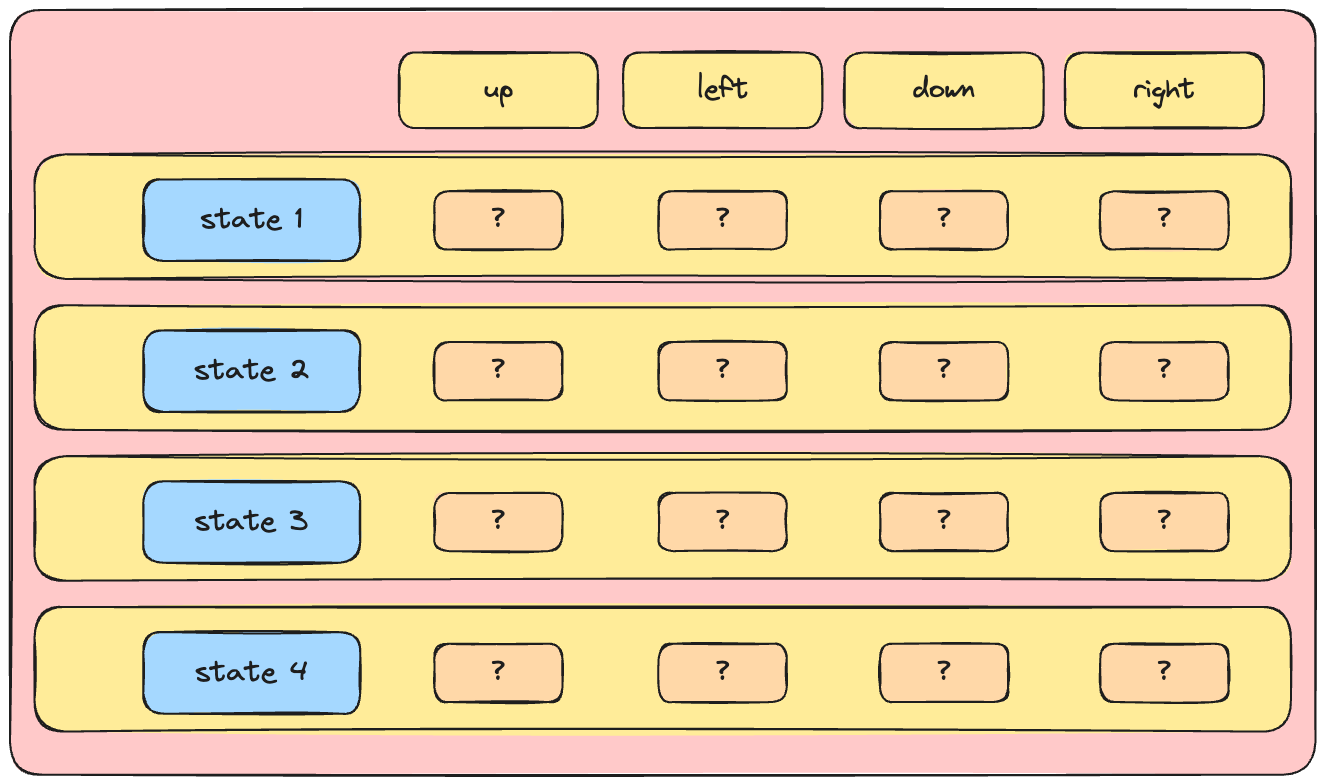

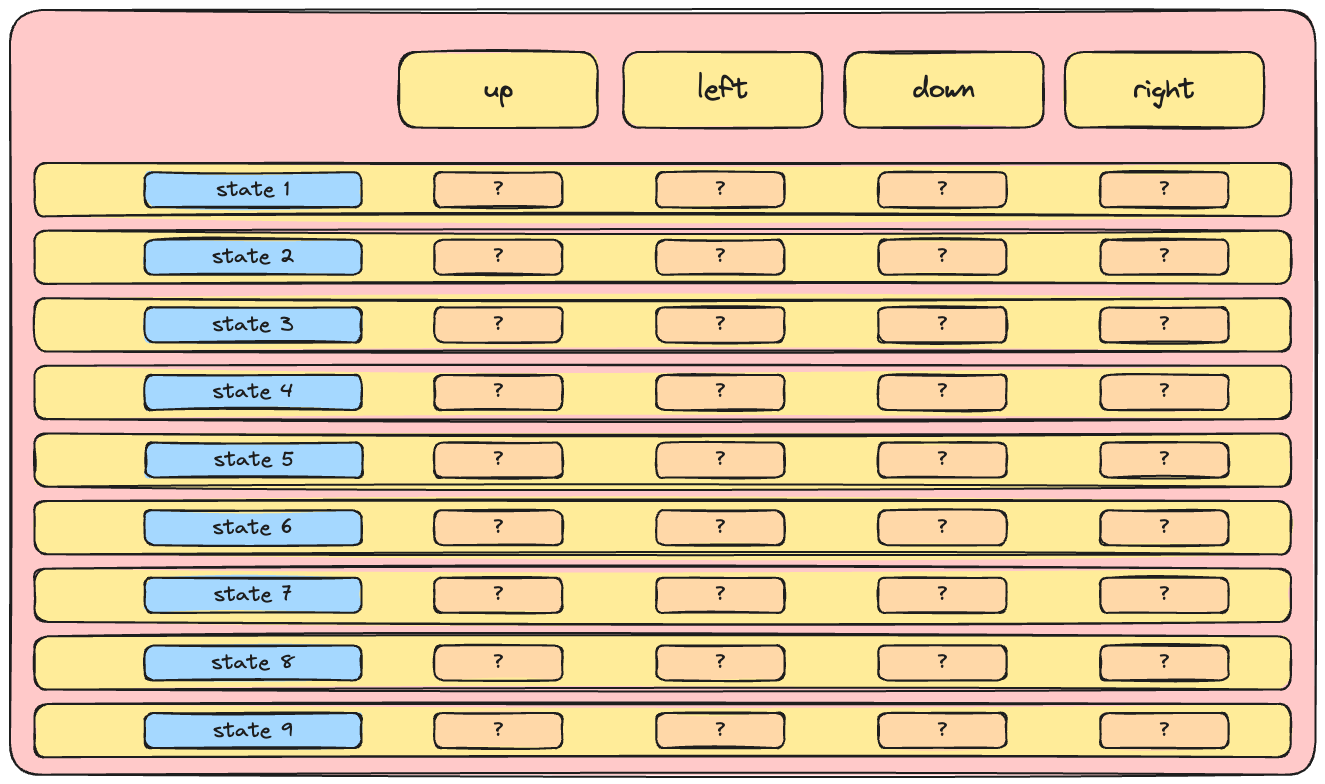

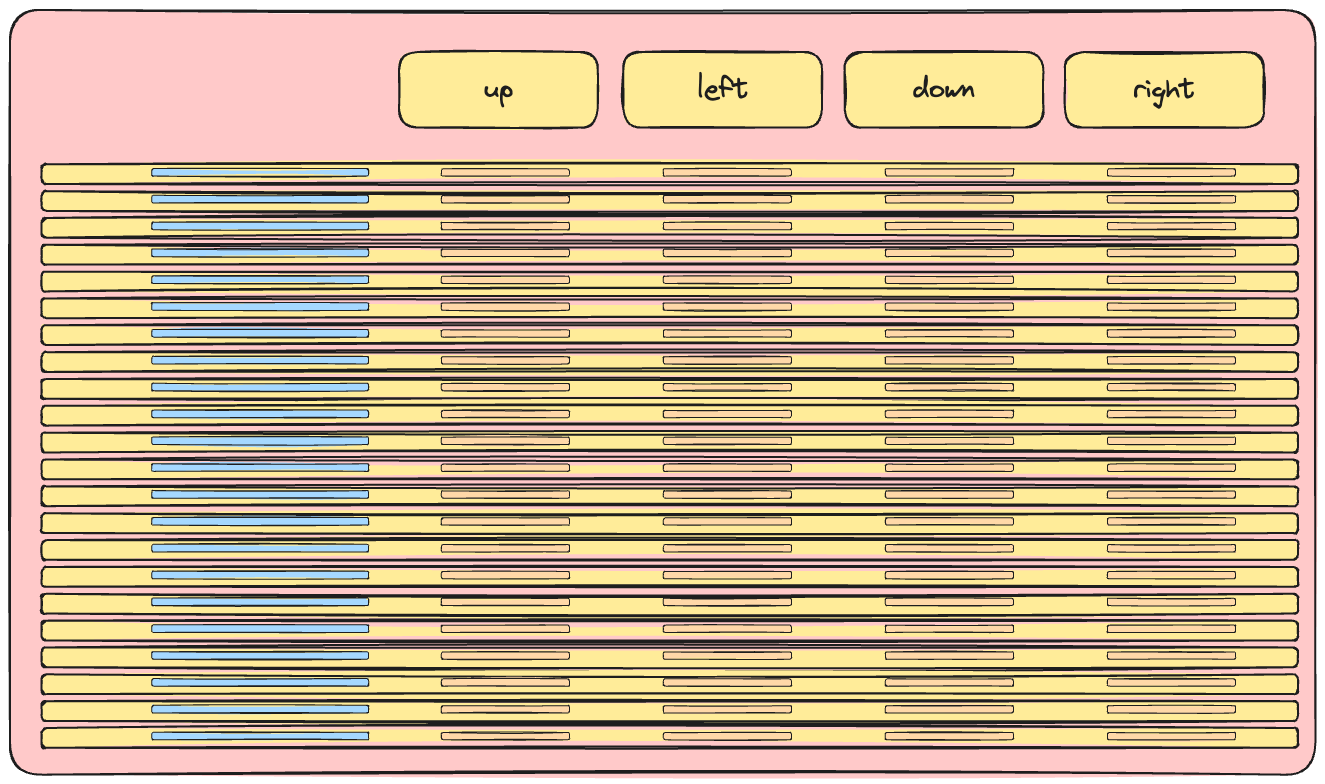

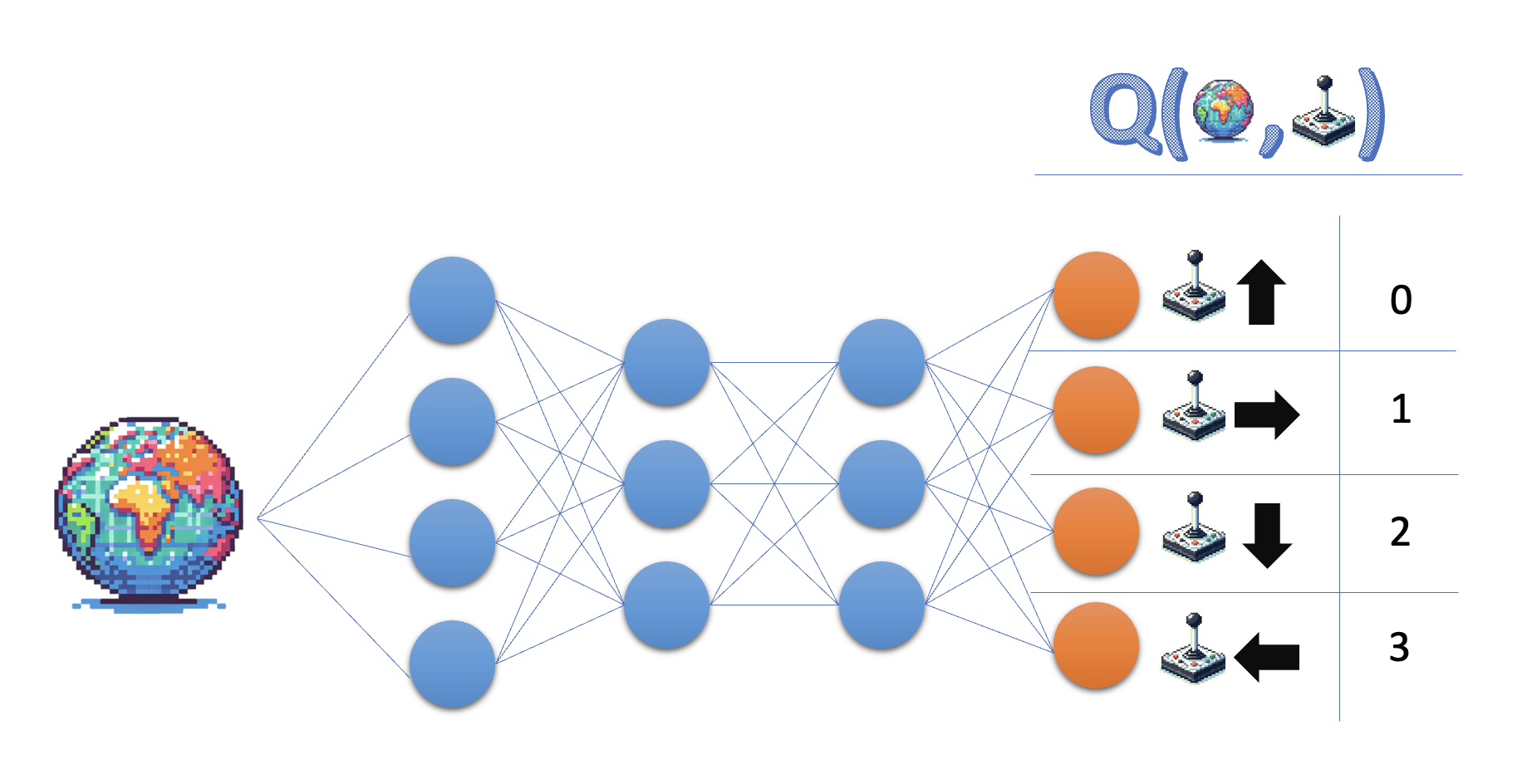

- At the heart of Deep Q Learning: a neural network mapping a state to Q-values, one per action

- A network approximating the action-value function is called a "Q-network"

- Q-networks are commonly used in Deep Q Learning algorithms, such as DQN
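To make "mapping a state to Q-values" concrete, here is a minimal PyTorch sketch. It assumes an 8-dimensional state and 4 discrete actions, a randomly initialized network with a single hidden layer, and a made-up state vector; none of these values come from a trained agent. The network outputs one Q-value per action, and acting greedily means taking the argmax.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # make the random initialization reproducible

STATE_SIZE = 8   # assumed state dimension
ACTION_SIZE = 4  # assumed number of discrete actions

# A small Q-network: state vector in, one Q-value per action out.
q_net = nn.Sequential(
    nn.Linear(STATE_SIZE, 64),
    nn.ReLU(),
    nn.Linear(64, ACTION_SIZE),
)

state = torch.rand(STATE_SIZE)  # a made-up state observation
with torch.no_grad():           # no gradients needed just to pick an action
    q_values = q_net(state)     # shape: (ACTION_SIZE,)

greedy_action = torch.argmax(q_values).item()
print(q_values.shape, greedy_action)
```

Because the network scores all actions in a single forward pass, choosing the greedy action costs one forward pass regardless of the number of actions.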

Implementing the Q-network

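```python
import torch
import torch.nn as nn
import torch.optim as optim


class QNetwork(nn.Module):
    def __init__(self, state_size, action_size):
        super().__init__()
        # Two hidden layers of 64 units; one output per action
        self.fc1 = nn.Linear(state_size, 64)
        self.fc2 = nn.Linear(64, 64)
        self.fc3 = nn.Linear(64, action_size)

    def forward(self, state):
        # Accept lists, NumPy arrays or tensors; nn.Linear expects float32
        x = torch.as_tensor(state, dtype=torch.float32)
        x = torch.relu(self.fc1(x))
        x = torch.relu(self.fc2(x))
        return self.fc3(x)  # raw Q-values, no activation on the output


# 8-dimensional state, 4 possible actions
q_network = QNetwork(8, 4)
optimizer = optim.Adam(q_network.parameters(), lr=0.0001)
```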

- Input dimension: determined by the size of the state (8 here)

- Output dimension: determined by the number of possible actions (4 here)

In this example:

- 2 hidden layers with 64 nodes each

- ReLU activation function
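As a quick sanity check of this architecture (8 inputs, two hidden layers of 64 units, 4 outputs), the sketch below rebuilds the same layer sizes with `nn.Sequential`, counts the trainable parameters and runs a batch of states through it. The batch of 32 random states is an assumption for illustration. Each `nn.Linear(n_in, n_out)` layer holds `n_in * n_out` weights plus `n_out` biases.

```python
import torch
import torch.nn as nn

STATE_SIZE, ACTION_SIZE, HIDDEN = 8, 4, 64

# Same layer sizes as QNetwork(8, 4): 8 -> 64 -> 64 -> 4 with ReLU in between.
net = nn.Sequential(
    nn.Linear(STATE_SIZE, HIDDEN), nn.ReLU(),
    nn.Linear(HIDDEN, HIDDEN), nn.ReLU(),
    nn.Linear(HIDDEN, ACTION_SIZE),
)

# Each Linear(n_in, n_out) has n_in * n_out weights and n_out biases.
n_params = sum(p.numel() for p in net.parameters() if p.requires_grad)
print(n_params)  # 576 + 4160 + 260 = 4996

# A batch of 32 made-up states -> 32 rows of 4 Q-values.
batch = torch.rand(32, STATE_SIZE)
print(net(batch).shape)  # torch.Size([32, 4])
```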

Let's practice!
