Data transformations in Databricks

Databricks Concepts

Kevin Barlow

Data Practitioner

SQL for data engineering

SQL

- Familiar for Database Administrators (DBAs)

- Great for standard manipulations

- Execute pre-defined UDFs

```sql
-- Creating a new table in SQL
CREATE TABLE table_name
USING delta
AS (
  SELECT *
  FROM source_table
  WHERE date >= '2023-01-01'
)
```
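You can try the create-table-as-select (CTAS) pattern above without a Databricks workspace. The sketch below runs it against Python's built-in `sqlite3` module. SQLite is only a stand-in engine here. It has no `USING delta` clause, so that line is dropped. The sample rows are hypothetical. The point is that the new table's schema and rows both come from the `SELECT`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical source data; ISO-8601 date strings compare correctly as plain text.
conn.execute("CREATE TABLE source_table (id INTEGER, date TEXT)")
conn.executemany(
    "INSERT INTO source_table VALUES (?, ?)",
    [(1, "2022-12-31"), (2, "2023-01-01"), (3, "2023-06-15")],
)

# CTAS: create table_name from the query result (no USING delta in SQLite).
conn.execute(
    "CREATE TABLE table_name AS "
    "SELECT * FROM source_table WHERE date >= '2023-01-01'"
)

rows = conn.execute("SELECT id, date FROM table_name ORDER BY id").fetchall()
print(rows)  # [(2, '2023-01-01'), (3, '2023-06-15')]
```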

Other languages for data engineering

Python, R, Scala

- Familiar for software engineers

- Standard and complex transformations

- Use and define custom functions

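```python
# Creating a new table in PySpark
# (spark is the SparkSession that Databricks notebooks provide)
from pyspark.sql.functions import col

(spark.read
    .table('source_table')
    .filter(col('date') >= '2023-01-01')
    .write
    .saveAsTable('table_name'))
```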

Common transformations

Schema manipulation

- Add and remove columns

- Redefine columns

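```python
# PySpark
from pyspark.sql.functions import col

(df
    .withColumn('newCol', ...)  # first argument is the column name as a string; ... is the new column's expression
    .drop(col('oldCol')))
```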
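Here is a plain-Python sketch of what `withColumn` and `drop` do at the row level, with each row treated as a dict. It only illustrates the semantics and is not how Spark executes them. The helper names `with_column` and `drop_column` and the sample columns are hypothetical. One behavior explains why `withColumn` also covers "redefine columns": if the name already exists, Spark replaces that column instead of adding a second one.

```python
from typing import Any, Callable

Row = dict[str, Any]


def with_column(rows: list[Row], name: str, fn: Callable[[Row], Any]) -> list[Row]:
    """Hypothetical helper: add column `name`, or overwrite it if it already exists."""
    return [{**row, name: fn(row)} for row in rows]


def drop_column(rows: list[Row], name: str) -> list[Row]:
    """Hypothetical helper: remove column `name`; rows without it are unchanged."""
    return [{k: v for k, v in row.items() if k != name} for row in rows]


rows = [
    {"id": 1, "price": 10, "oldCol": "x"},
    {"id": 2, "price": 4, "oldCol": "y"},
]
rows = with_column(rows, "newCol", lambda r: r["price"] * 2)  # add a column
rows = with_column(rows, "price", lambda r: r["price"] + 1)   # redefine an existing column
rows = drop_column(rows, "oldCol")                            # remove a column
print(rows)
# [{'id': 1, 'price': 11, 'newCol': 20}, {'id': 2, 'price': 5, 'newCol': 8}]
```

Dropping a column that does not exist leaves the rows unchanged. Spark's `drop` behaves the same way when given a column name that is not in the schema.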

Filtering

- Reduce DataFrame to subset of data

- Pass multiple criteria

```python
# PySpark
from pyspark.sql.functions import col

(df
    .filter(col('date') >= target_date)
    .filter(col('id').isNotNull()))  # chained filters are combined with AND
```
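Chaining two `filter` calls keeps the same rows as a single filter whose conditions are joined with AND. In PySpark that single filter would be `(col('date') >= target_date) & col('id').isNotNull()`. The plain-Python sketch below checks this on hypothetical rows. It also mirrors how SQL treats a comparison against a null: the row does not pass the filter.

```python
from datetime import date

# Hypothetical sample rows; None plays the role of a SQL null.
rows = [
    {"id": 1, "date": date(2023, 3, 1)},
    {"id": None, "date": date(2023, 4, 1)},
    {"id": 3, "date": date(2022, 12, 1)},
    {"id": 4, "date": None},
]
target_date = date(2023, 1, 1)


def on_or_after(row):
    # A null date never satisfies the comparison, so the row is filtered out.
    return row["date"] is not None and row["date"] >= target_date


def has_id(row):
    return row["id"] is not None


# Two chained filters ...
chained = [r for r in rows if on_or_after(r)]
chained = [r for r in chained if has_id(r)]

# ... keep the same rows as one filter with both conditions ANDed together.
combined = [r for r in rows if on_or_after(r) and has_id(r)]

print([r["id"] for r in chained])  # [1]
```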

Common transformations (continued)

Nested data

- Array or struct data

- Expand or contract

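```python
from pyspark.sql.functions import col, explode, flatten

# explode: one output row per array element (wide to long)
df.select(explode(col('arrayCol')))

# flatten: merge an array of arrays into a single array
df.select(flatten(col('items')))
```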
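The plain-Python sketch below shows what `explode` and `flatten` do to the data. The helpers are hypothetical stand-ins for the Spark functions. Two simplifications apply. First, the `explode` helper replaces the array column with its elements, the way `df.withColumn('items', explode(col('items')))` would. Second, the `flatten` helper ignores Spark's null handling.

```python
from itertools import chain


def explode(rows, col_name):
    """Hypothetical helper: emit one row per element of the array in `col_name`.

    Rows whose array is empty or null produce no output rows, matching Spark's
    explode (explode_outer would keep them with a null element).
    """
    out = []
    for row in rows:
        for element in row.get(col_name) or []:
            out.append({**row, col_name: element})
    return out


def flatten(nested):
    """Hypothetical helper: collapse an array of arrays into one array, keeping order."""
    return list(chain.from_iterable(nested))


orders = [
    {"order": "A", "items": ["pen", "ink"]},
    {"order": "B", "items": []},
    {"order": "C", "items": ["pad"]},
]
print(explode(orders, "items"))
# [{'order': 'A', 'items': 'pen'}, {'order': 'A', 'items': 'ink'}, {'order': 'C', 'items': 'pad'}]
print(flatten([[1, 2], [3], []]))  # [1, 2, 3]
```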

Aggregation

- Group data based on columns

- Calculate data summarizations

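```python
from pyspark.sql import functions as F

(df
    .groupBy(F.col('region'))
    .agg(F.sum(F.col('sales'))))  # Spark's sum, not Python's built-in sum
```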
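The grouping above computes one total per region. The plain-Python sketch below (the `sum_by` helper and the sample rows are hypothetical) shows the same result. It follows SQL `SUM` semantics as Spark applies them: null values are skipped, and a group whose values are all null totals to null rather than 0.

```python
def sum_by(rows, key, value):
    """Hypothetical helper: total `value` per distinct `key`, skipping nulls."""
    totals = {}
    for row in rows:
        group, amount = row[key], row[value]
        current = totals.get(group)
        if amount is None:
            # Register the group, but leave its total null until a value arrives.
            totals.setdefault(group, None)
        else:
            totals[group] = amount if current is None else current + amount
    return totals


sales = [
    {"region": "EU", "sales": 100},
    {"region": "US", "sales": 250},
    {"region": "EU", "sales": 50},
    {"region": "APAC", "sales": None},
]
totals = sum_by(sales, "region", "sales")
print(totals)  # {'EU': 150, 'US': 250, 'APAC': None}
```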

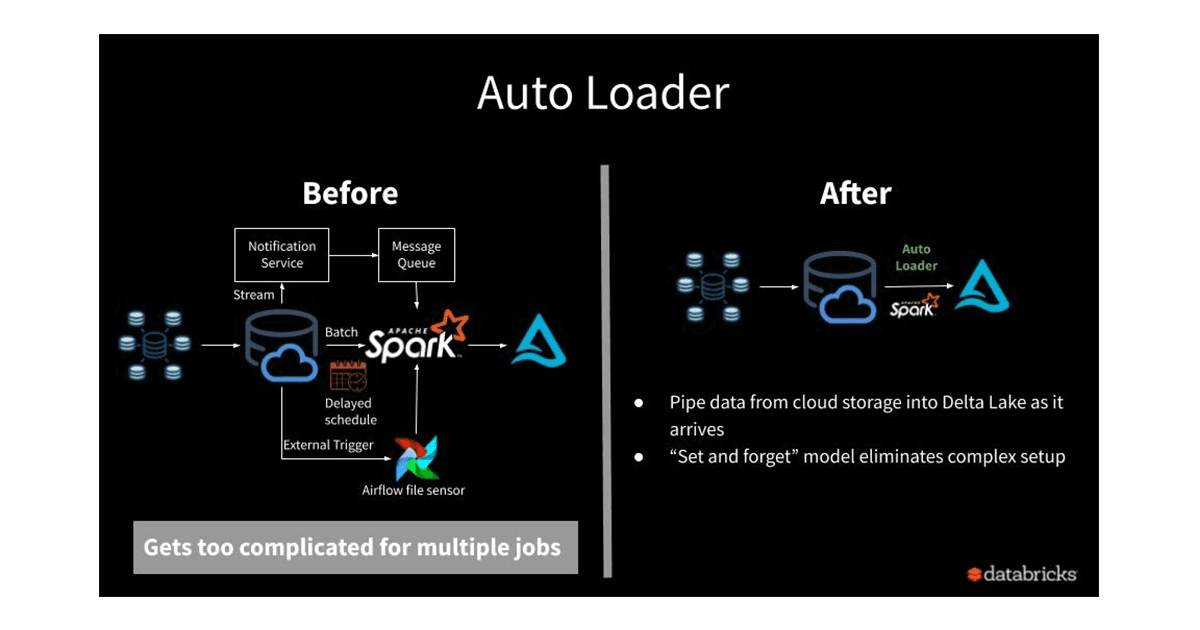

Auto Loader

Auto Loader processes new data files as they land in a data lake.

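- Incremental processing: only files that arrived since the last run are read; files already ingested are tracked in the stream's checkpoint

- Efficient processing: can use cloud file-notification services instead of repeatedly listing the whole directory

- Automatic: no manual bookkeeping of which files have already been loaded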

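```python
(spark.readStream
    .format("cloudFiles")
    .option("cloudFiles.format", "json")
    # Required when Auto Loader infers the schema; schema_path is a placeholder like file_path
    .option("cloudFiles.schemaLocation", schema_path)
    .load(file_path))
```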

1 https://www.databricks.com/blog/2020/02/24/introducing-databricks-ingest-easy-data-ingestion-into-delta-lake.html
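The incremental behavior described above can be approximated in plain Python. The sketch uses a hypothetical `IncrementalJsonReader` class. It remembers which files it has already ingested and returns records only from files that are new since the last call. This is a toy model of the idea, not Auto Loader's implementation. Auto Loader keeps this state in the stream's checkpoint and can use file notifications, while the toy relists the directory and holds the state in an in-memory set.

```python
import json
import os
import tempfile


class IncrementalJsonReader:
    """Hypothetical toy reader: ingest each JSON Lines file in a directory exactly once."""

    def __init__(self, directory):
        self.directory = directory
        self.seen = set()  # stand-in for the checkpointed list of ingested files

    def read_new(self):
        records = []
        for name in sorted(os.listdir(self.directory)):
            if name.endswith(".json") and name not in self.seen:
                with open(os.path.join(self.directory, name)) as f:
                    records.extend(json.loads(line) for line in f if line.strip())
                self.seen.add(name)
        return records


landing = tempfile.mkdtemp()  # hypothetical landing folder in place of file_path


def land(name, rows):
    """Simulate a new file arriving in the landing folder."""
    with open(os.path.join(landing, name), "w") as f:
        f.write("\n".join(json.dumps(r) for r in rows))


reader = IncrementalJsonReader(landing)
land("batch1.json", [{"id": 1}, {"id": 2}])
first = reader.read_new()
land("batch2.json", [{"id": 3}])
second = reader.read_new()  # batch1.json is not read again
third = reader.read_new()   # nothing new has landed
print(first, second, third)  # [{'id': 1}, {'id': 2}] [{'id': 3}] []
```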

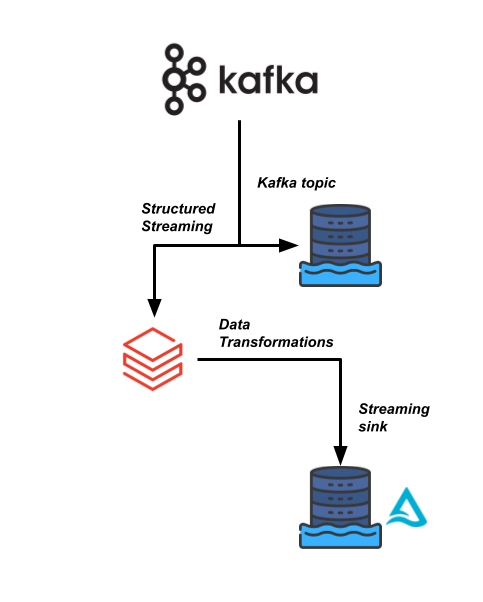

Structured Streaming

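```python
(spark.readStream
    .format("kafka")
    .option("kafka.bootstrap.servers", "<host:port>")  # required by the Kafka source
    .option("subscribe", "<topic>")
    .load()
    # Kafka delivers key and value as binary; in practice, parse value into columns before joining
    .join(table_df, on="<id>", how="left")
    .writeStream
    .format("kafka")
    .option("kafka.bootstrap.servers", "<host:port>")
    .option("topic", "<topic>")  # the sink expects a value column (key is optional)
    .option("checkpointLocation", "<checkpoint-path>")
    .start())
```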
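The pipeline above joins each batch of incoming stream records against a static table (`table_df`). The plain-Python sketch below shows the left-join part. Every event is kept, and events with no matching key get nulls for the table's columns. The `left_join_batch` helper and the sample data are hypothetical. The sketch also assumes the join key is unique in the static table, whereas Spark would emit one row per match.

```python
def left_join_batch(batch, table, key):
    """Hypothetical helper: left-join one micro-batch of events to a static table."""
    lookup = {row[key]: row for row in table}  # assumes `key` is unique in `table`
    table_cols = [c for c in (table[0] if table else {}) if c != key]
    joined = []
    for event in batch:
        match = lookup.get(event[key], {})
        # Unmatched events still appear, with None for every table column.
        joined.append({**event, **{c: match.get(c) for c in table_cols}})
    return joined


customers = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Lin"}]
micro_batches = [
    [{"id": 1, "amount": 5}],
    [{"id": 3, "amount": 7}, {"id": 2, "amount": 1}],
]
results = [left_join_batch(b, customers, "id") for b in micro_batches]
for r in results:
    print(r)
# [{'id': 1, 'amount': 5, 'name': 'Ada'}]
# [{'id': 3, 'amount': 7, 'name': None}, {'id': 2, 'amount': 1, 'name': 'Lin'}]
```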

Let's practice!
