Metrics for language tasks: ROUGE, METEOR, EM

Introduction to LLMs in Python

Jasmin Ludolf

Senior Data Science Content Developer, DataCamp

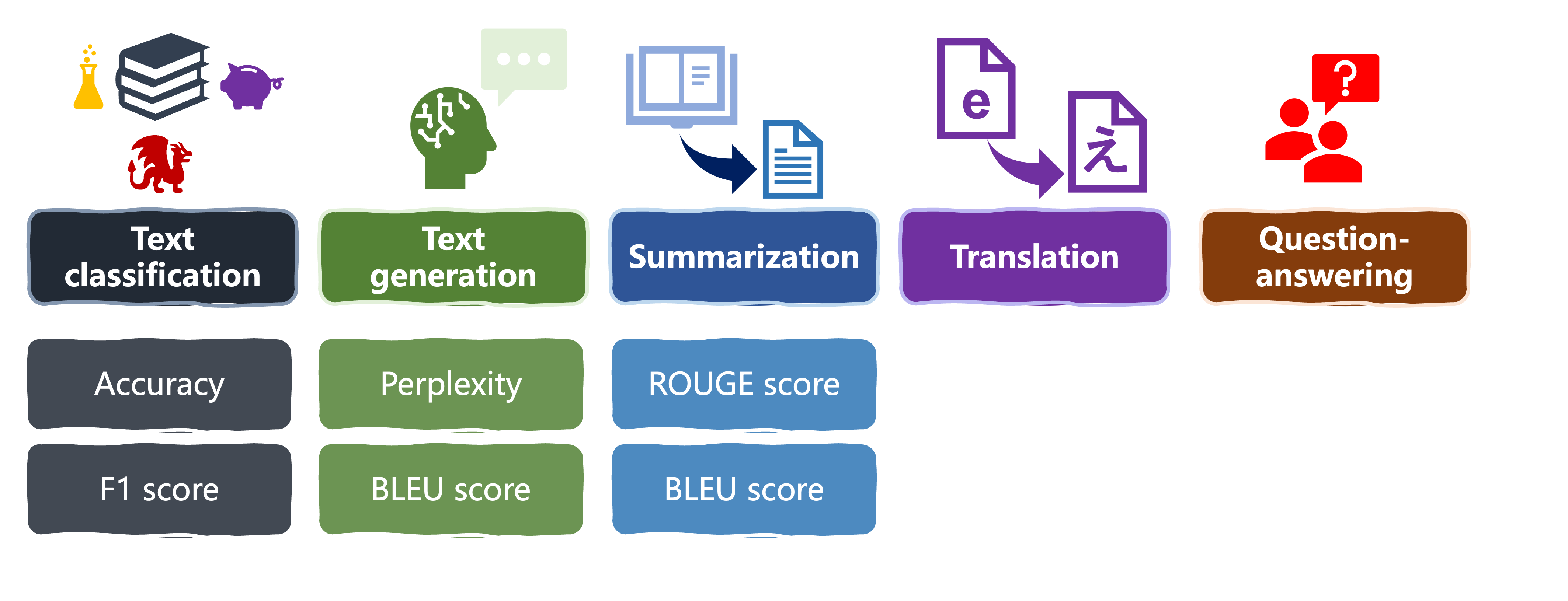

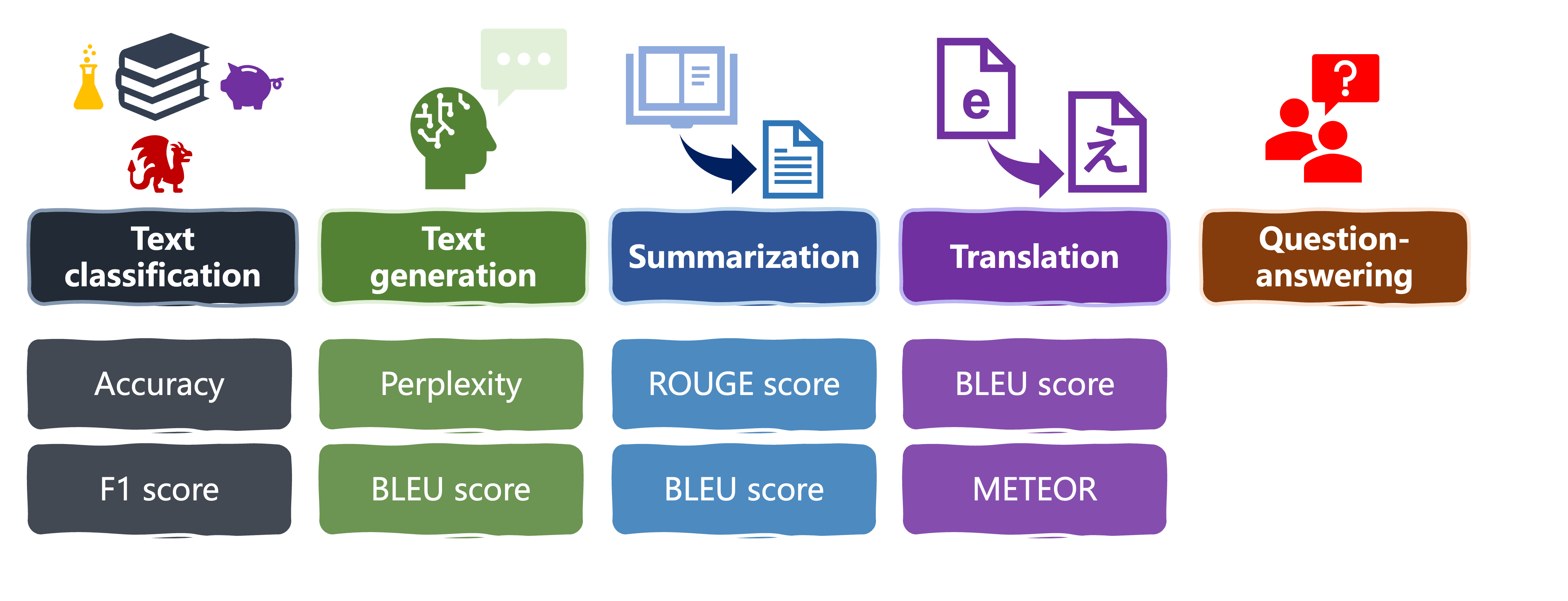

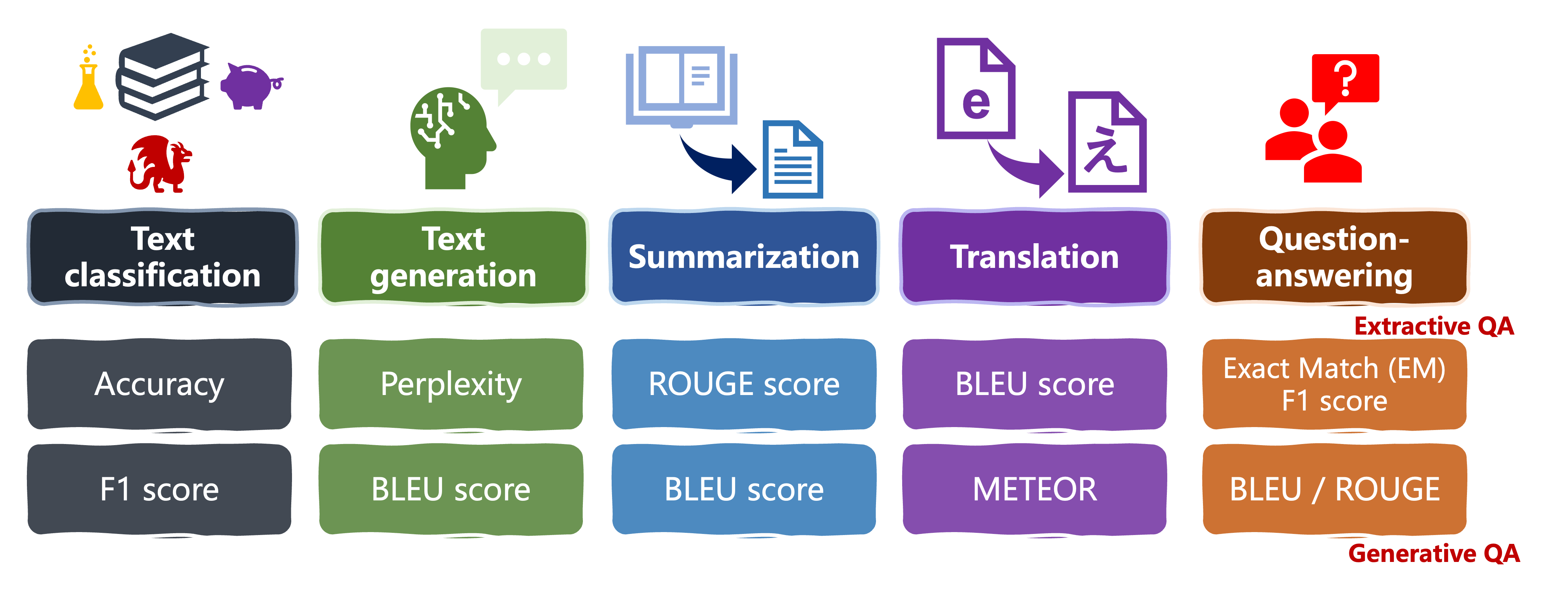

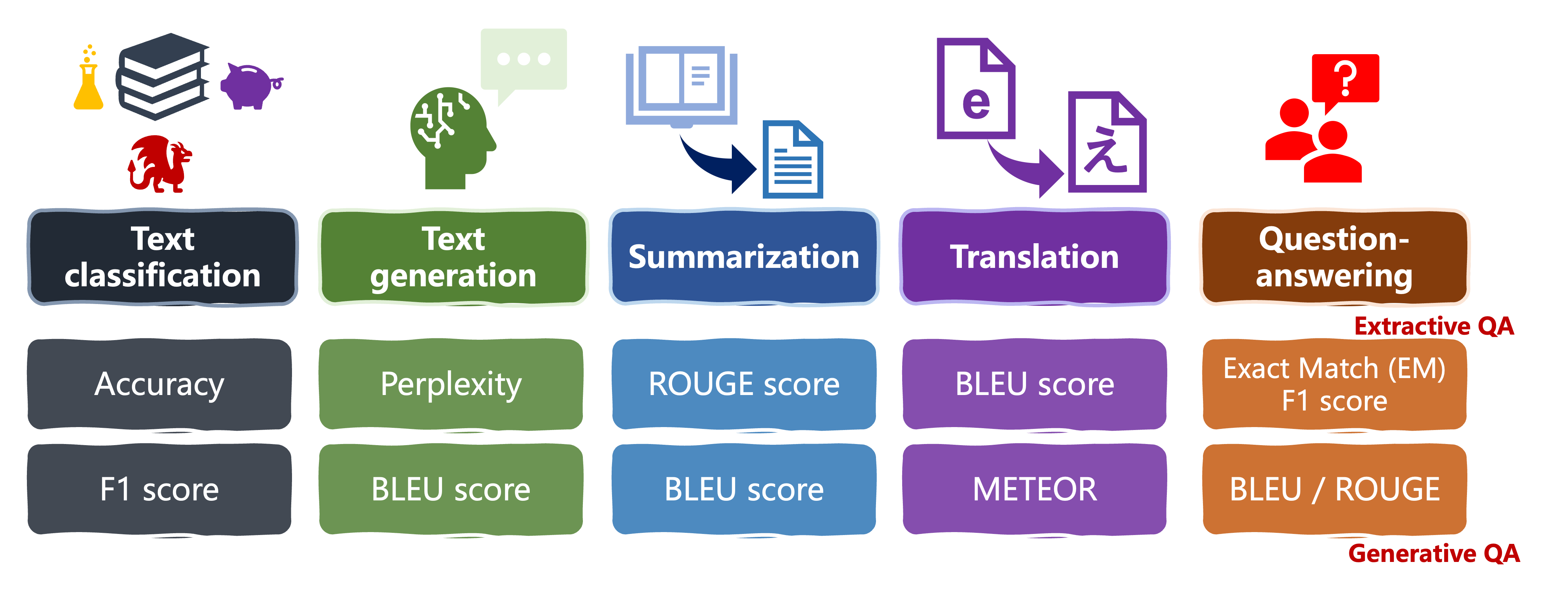

LLM tasks and metrics


ROUGE

- ROUGE: measures similarity between a generated summary and reference summaries

- Looks at overlapping n-grams between the two

- predictions: LLM outputs

- references: human-provided summaries
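
The n-gram overlap idea can be sketched in plain Python. This is a simplified illustration, not the `evaluate` implementation: it assumes lowercased whitespace tokenization and a single reference, and the helper names `ngrams` and `rouge_n` and the toy sentences are hypothetical.

```python
from collections import Counter

def ngrams(tokens, n):
    """Return the list of n-grams (as tuples) found in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def rouge_n(prediction, reference, n=1):
    """Simplified ROUGE-N F1 score based on clipped n-gram overlap."""
    pred_counts = Counter(ngrams(prediction.lower().split(), n))
    ref_counts = Counter(ngrams(reference.lower().split(), n))
    # Counter intersection keeps the minimum count of each shared n-gram
    overlap = sum((pred_counts & ref_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(pred_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)

# Toy example (assumed sentences, not from the slides)
toy_prediction = "the cat sat on the mat"
toy_reference = "the cat lay on the mat"
rouge1_toy = rouge_n(toy_prediction, toy_reference, n=1)
rouge2_toy = rouge_n(toy_prediction, toy_reference, n=2)
print("unigram overlap F1:", rouge1_toy)
print("bigram overlap F1:", rouge2_toy)
```

Changing one word breaks two bigrams but only one unigram, so the bigram score drops faster than the unigram score.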

ROUGE

import evaluate

rouge = evaluate.load("rouge")

predictions = ["""as we learn more about the frequency and size distribution of

exoplanets, we are discovering that terrestrial planets are exceedingly common."""]

references = ["""The more we learn about the frequency and size distribution of

exoplanets, the more confident we are that they are exceedingly common."""]

ROUGE scores:

- rouge1: unigram overlap

- rouge2: bigram overlap

- rougeL: longest common subsequence (LCS) shared by prediction and reference

ROUGE outputs


- Scores range from 0 to 1: a higher score indicates higher similarity

results = rouge.compute(predictions=predictions,

references=references)

print(results)

{'rouge1': 0.7441860465116279,

'rouge2': 0.4878048780487805,

'rougeL': 0.6976744186046512,

'rougeLsum': 0.6976744186046512}
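
To see why rougeL can differ from rouge1, here is a minimal sketch of a longest-common-subsequence score. It is an illustration under assumptions (lowercased whitespace tokens, one reference, F1 of LCS-based precision and recall), not the library's code; `lcs_length` and `rouge_l` are hypothetical helper names.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    # dp[i][j] holds the LCS length of a[:i] and b[:j]
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, tok_a in enumerate(a, start=1):
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                dp[i][j] = dp[i - 1][j - 1] + 1
            else:
                dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])
    return dp[len(a)][len(b)]

def rouge_l(prediction, reference):
    """Simplified ROUGE-L F1 score built on the LCS length."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    lcs = lcs_length(pred_tokens, ref_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(pred_tokens)
    recall = lcs / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

# Same words, different order: every unigram matches, but the LCS is short
in_order = rouge_l("the cat sat on the mat", "the cat lay on the mat")
reordered = rouge_l("apples and oranges", "oranges and apples")
print("in order:", in_order)
print("reordered:", reordered)
```

Because a subsequence must preserve order, the reordered pair scores low even though it shares every word with its reference.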

METEOR

- METEOR: incorporates more linguistic features than ROUGE, such as word variations (stems), similar meanings (synonyms), and word order

bleu = evaluate.load("bleu")

meteor = evaluate.load("meteor")

pred = ["He thought it right and necessary to become a knight-errant, roaming the world in armor, seeking adventures and practicing the deeds he had read about in chivalric tales."]

ref = ["He believed it was proper and essential to transform into a knight-errant, traveling the world in armor, pursuing adventures, and enacting the heroic deeds he had encountered in tales of chivalry."]

METEOR

results_bleu = bleu.compute(predictions=pred, references=ref)

results_meteor = meteor.compute(predictions=pred, references=ref)

print("Bleu: ", results_bleu['bleu'])

print("Meteor: ", results_meteor['meteor'])

Bleu: 0.19088841781992524

Meteor: 0.5350702240481536

Scores range from 0 to 1: higher is better
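
The word-order component of METEOR can be illustrated with a heavily simplified sketch. It assumes exact word matches only (no stemming or synonym matching), a greedy left-to-right alignment, and illustrative values for `alpha`, `beta`, and `gamma`; the function name `simple_meteor` is hypothetical and its scores will not match the `evaluate` metric.

```python
def simple_meteor(prediction, reference, alpha=0.9, beta=3.0, gamma=0.5):
    """Toy METEOR-style score: recall-weighted F-mean times an order penalty."""
    pred = prediction.lower().split()
    ref = reference.lower().split()

    # Greedy alignment: each prediction token takes the first unused
    # identical reference token (assumption: exact matches only)
    used = set()
    alignment = []  # pairs of (prediction index, reference index)
    for i, tok in enumerate(pred):
        for j, ref_tok in enumerate(ref):
            if j not in used and tok == ref_tok:
                used.add(j)
                alignment.append((i, j))
                break

    matches = len(alignment)
    if matches == 0:
        return 0.0

    precision = matches / len(pred)
    recall = matches / len(ref)
    # alpha close to 1 weights recall more heavily than precision
    f_mean = precision * recall / (alpha * precision + (1 - alpha) * recall)

    # A chunk is a run of matches that are adjacent in both texts;
    # more chunks means the matched words are more scrambled
    chunks = 1
    for (i_prev, j_prev), (i_cur, j_cur) in zip(alignment, alignment[1:]):
        if i_cur != i_prev + 1 or j_cur != j_prev + 1:
            chunks += 1
    penalty = gamma * (chunks / matches) ** beta
    return f_mean * (1 - penalty)

same_order = simple_meteor("the cat sat", "the cat sat")
scrambled = simple_meteor("sat cat the", "the cat sat")
print("same order:", same_order)
print("scrambled:", scrambled)
```

Both predictions contain exactly the reference words, but the scrambled one splits into more chunks and is penalized for it.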

Question answering

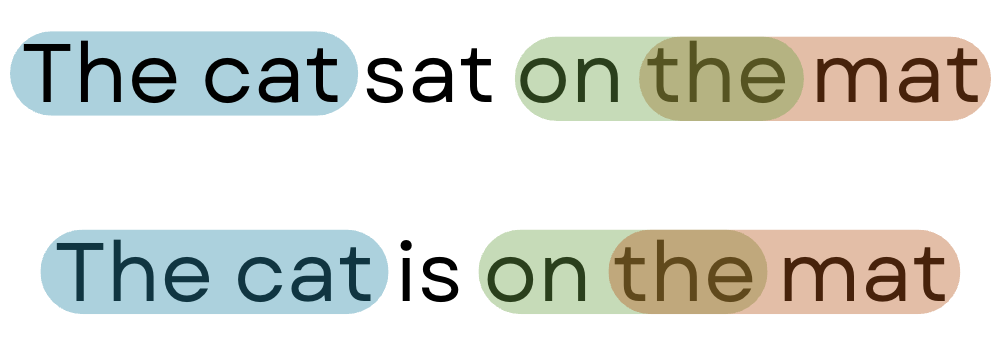

Exact Match (EM)

- Exact Match (EM): 1 if an LLM's output exactly matches its reference answer, 0 otherwise

- Normally used in conjunction with F1 score
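
EM gives no credit for partially correct answers, which is why it is often paired with F1. The sketch below shows one common way a token-level F1 is computed for question answering. It assumes lowercased whitespace tokenization with no further normalization; `token_f1` and the toy answers are hypothetical, not part of the `evaluate` library.

```python
from collections import Counter

def token_f1(prediction, reference):
    """Token-level F1: harmonic mean of token precision and recall."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    # Shared tokens, counted with multiplicity
    common = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if common == 0:
        return 0.0
    precision = common / len(pred_tokens)
    recall = common / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

# Toy answers (assumed): the prediction contains the answer plus extra words
toy_answer = "in Paris France"
toy_gold = "Paris"
em_score = int(toy_answer == toy_gold)
f1_score = token_f1(toy_answer, toy_gold)
print("EM:", em_score)
print("F1:", f1_score)
```

Here EM is 0 because the strings differ, while F1 still rewards the overlapping token.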

import evaluate

exact_match = evaluate.load("exact_match")

predictions = ["The cat sat on the mat.",

"Theaters are great.",

"Like comparing oranges and apples."]

references = ["The cat sat on the mat?",

"Theaters are great.",

"Like comparing apples and oranges."]

results = exact_match.compute(

references=references, predictions=predictions)

print(results)

{'exact_match': 0.3333333333333333}
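
The result above can be reproduced by hand. This is a minimal sketch that assumes plain string equality with no normalization; `exact_match_rate` is a hypothetical helper, not the library function.

```python
def exact_match_rate(predictions, references):
    """Fraction of predictions that are character-for-character identical."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have equal length")
    if not predictions:
        return 0.0
    per_item = [int(p == r) for p, r in zip(predictions, references)]
    return sum(per_item) / len(per_item)

predictions = ["The cat sat on the mat.",
               "Theaters are great.",
               "Like comparing oranges and apples."]
references = ["The cat sat on the mat?",
              "Theaters are great.",
              "Like comparing apples and oranges."]

# Per-item view: only the second pair is identical
per_item = [int(p == r) for p, r in zip(predictions, references)]
score = exact_match_rate(predictions, references)
print(per_item)
print(score)
```

The first pair differs only in its final punctuation mark and the third only in word order, yet both count as misses.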

Let's practice!
