Fine-tuning approaches

Introduction to LLMs in Python

Jasmin Ludolf

Senior Data Science Content Developer, DataCamp

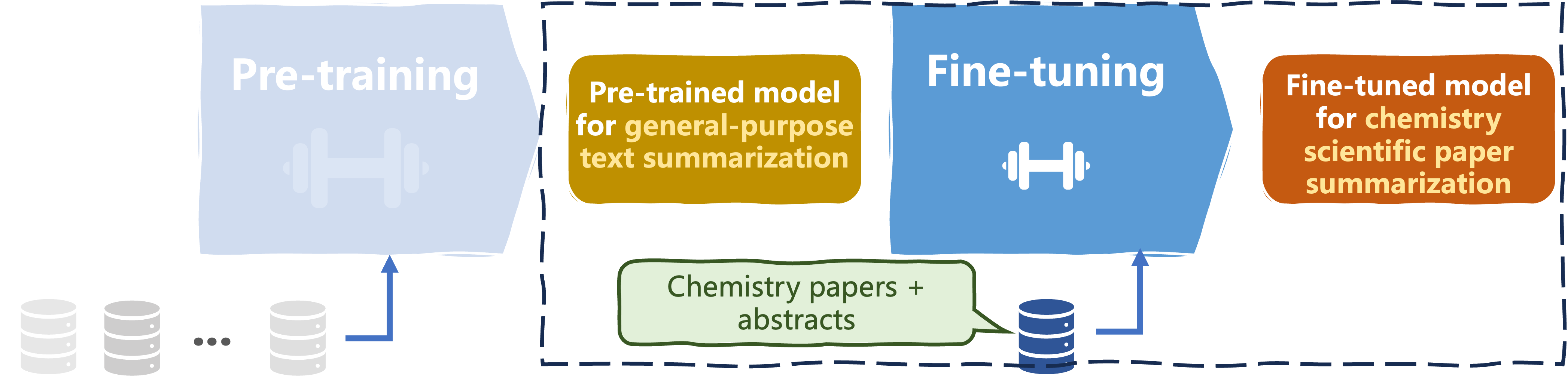

Fine-tuning

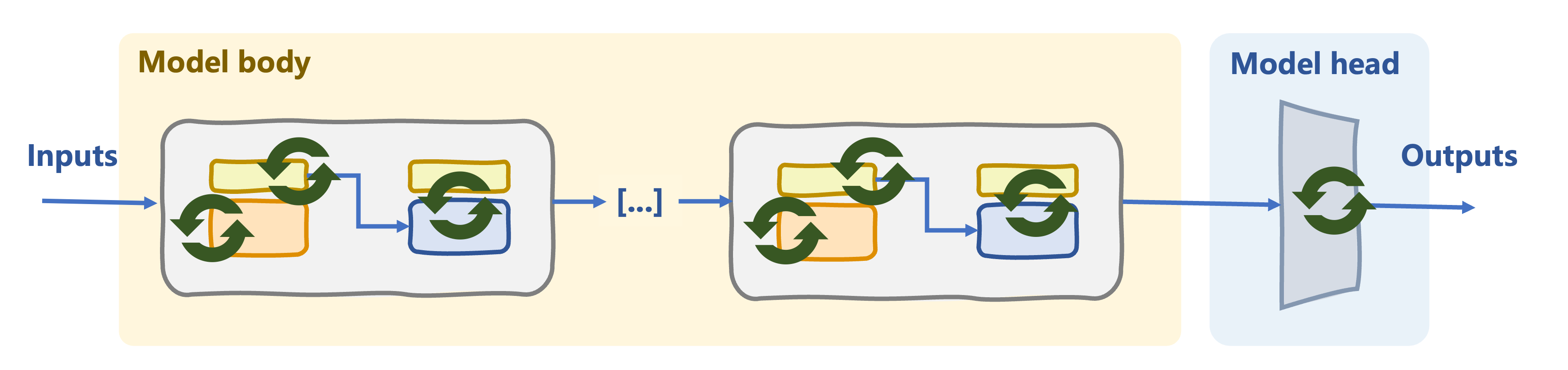

Full fine-tuning

- All of the model's weights are updated

- Computationally expensive
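A minimal PyTorch sketch of full fine-tuning. It assumes a small hypothetical `nn.Sequential` network as a stand-in for a pre-trained model, random placeholder data, and AdamW as the optimizer. The key point is that every parameter is left trainable and handed to the optimizer, which is why this approach is the most expensive.

```python
import torch
from torch import nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical stand-in for a pre-trained model (not a real LLM).
model = nn.Sequential(
    nn.Linear(16, 32),  # "pre-trained" body
    nn.ReLU(),
    nn.Linear(32, 2),   # task head (2 classes)
)

# Full fine-tuning: every parameter stays trainable (already the PyTorch
# default, shown explicitly here) and is passed to the optimizer.
for param in model.parameters():
    param.requires_grad = True
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3)

# One training step on a random placeholder batch.
inputs = torch.randn(8, 16)
labels = torch.randint(0, 2, (8,))
loss = F.cross_entropy(model(inputs), labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()

# Count how many parameters the optimizer can update.
trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
total = sum(p.numel() for p in model.parameters())
print(f"Trainable parameters: {trainable} / {total}")
```

With a real LLM, the same pattern means computing gradients and optimizer state for billions of weights.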

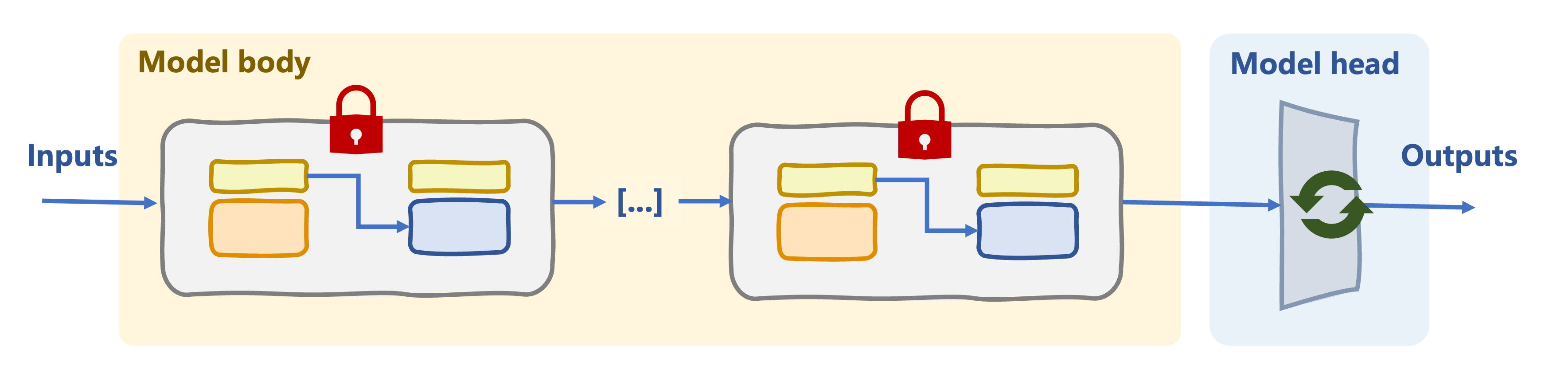

Partial fine-tuning

- Some layers are frozen (their weights stay fixed)

- Only task-specific layers are updated
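A minimal PyTorch sketch of partial fine-tuning, under the same assumptions as a toy setup: a hypothetical small network stands in for the pre-trained model, the data is random, and AdamW is an arbitrary choice. The lower layer is frozen with `requires_grad = False`, and only the task-specific head is given to the optimizer.

```python
import torch
from torch import nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical stand-in for a pre-trained model.
model = nn.Sequential(
    nn.Linear(16, 32),  # body: will be frozen
    nn.ReLU(),
    nn.Linear(32, 2),   # task-specific head: will be updated
)

# Freeze everything, then unfreeze only the task-specific head.
for param in model.parameters():
    param.requires_grad = False
for param in model[2].parameters():
    param.requires_grad = True

# Give the optimizer only the trainable parameters.
optimizer = torch.optim.AdamW(
    [p for p in model.parameters() if p.requires_grad], lr=1e-3
)

# Snapshot weights to compare after one step.
frozen_before = model[0].weight.detach().clone()
head_before = model[2].weight.detach().clone()

# One training step on a random placeholder batch.
inputs = torch.randn(8, 16)
labels = torch.randint(0, 2, (8,))
loss = F.cross_entropy(model(inputs), labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()

print(torch.equal(model[0].weight, frozen_before))  # True: frozen layer unchanged
print(torch.equal(model[2].weight, head_before))    # False: head was updated
```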

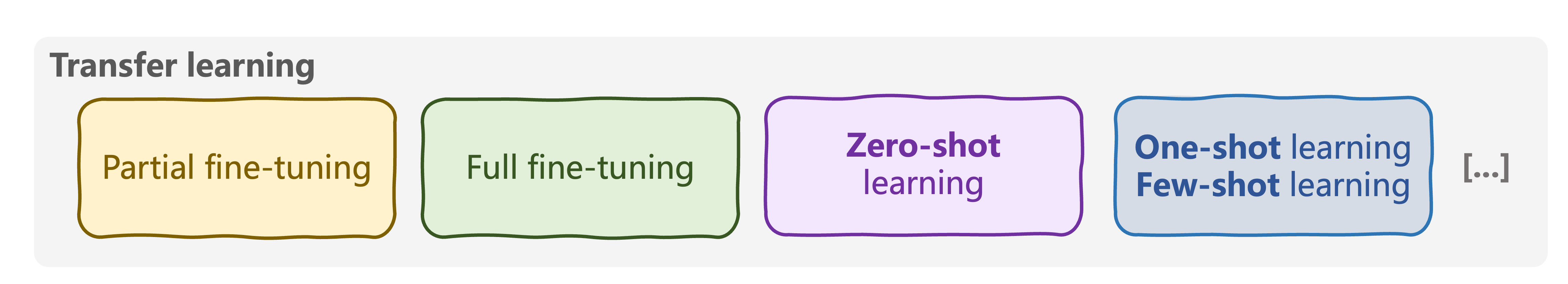

Transfer learning

- A pre-trained model is adapted to a different but related task

- Transfers knowledge learned in one domain to a related one
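A minimal PyTorch sketch of the transfer-learning idea. It assumes a hypothetical small network "pre-trained" on a 2-class source task; its body is reused, and a fresh 3-class head is attached for a related target task. The task sizes are illustrative only.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical model "pre-trained" on a source task with 2 classes.
source_model = nn.Sequential(
    nn.Linear(16, 32),
    nn.ReLU(),
    nn.Linear(32, 2),
)

# Reuse the learned body (slicing nn.Sequential keeps the same modules)
# and attach a new, randomly initialized head for a 3-class target task.
body = source_model[:2]
target_model = nn.Sequential(body, nn.Linear(32, 3))

# The body's weights are carried over, not copied or re-initialized.
print(target_model[0][0].weight is source_model[0].weight)  # True
print(target_model(torch.randn(4, 16)).shape)               # torch.Size([4, 3])
```

The adapted model can then be trained with either full or partial fine-tuning, depending on whether the reused body is frozen.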

N-shot learning

- Zero-shot learning: no examples

- One-shot learning: one example

- Few-shot learning: several examples
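In N-shot learning, the "examples" are labeled demonstrations placed in the prompt, so N is simply how many of them the prompt contains. A minimal plain-Python sketch; the helper `build_prompt` and the two extra demonstration sentences are hypothetical, chosen for illustration.

```python
def build_prompt(examples, query, instruction):
    """Build an n-shot prompt, where n = len(examples) labeled demonstrations."""
    lines = [instruction]
    for text, label in examples:
        lines.append(f'Text: "{text}" Sentiment: {label}')
    # The query ends with an empty label for the model to fill in.
    lines.append(f'Text: "{query}" Sentiment:')
    return "\n".join(lines)


instruction = "Classify the sentiment of this sentence as either Positive or Negative."
demos = [
    ("I'm feeling great today!", "Positive"),
    ("This movie was a waste of time.", "Negative"),  # hypothetical example
    ("What a wonderful surprise!", "Positive"),        # hypothetical example
]
query = "The weather today is lovely."

zero_shot = build_prompt([], query, instruction)        # no examples
one_shot = build_prompt(demos[:1], query, instruction)  # one example
few_shot = build_prompt(demos, query, instruction)      # several examples
print(one_shot)
```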

One-shot learning

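```python
from transformers import pipeline

# Sentiment classifier fine-tuned on SST-2 (labels: POSITIVE / NEGATIVE)
classifier = pipeline(task="sentiment-analysis", model="distilbert-base-uncased-finetuned-sst-2-english")

# One-shot prompt: one labeled example, then the text to classify
input_text = """
Classify the sentiment of this sentence as either Positive or Negative.
Example:
Text: "I'm feeling great today!" Sentiment: Positive
Text: "The weather today is lovely." Sentiment:
"""

# Note: this model is a classifier, not a text generator. It assigns a single
# label to the whole prompt (example included) rather than learning from the
# example, so genuine one-shot prompting requires a generative LLM.
result = classifier(input_text, truncation=True)
print(result[0]["label"])
```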

POSITIVE

Let's practice!
