Preparing for fine-tuning

Introduction to LLMs in Python

Jasmin Ludolf

Senior Data Science Content Developer, DataCamp

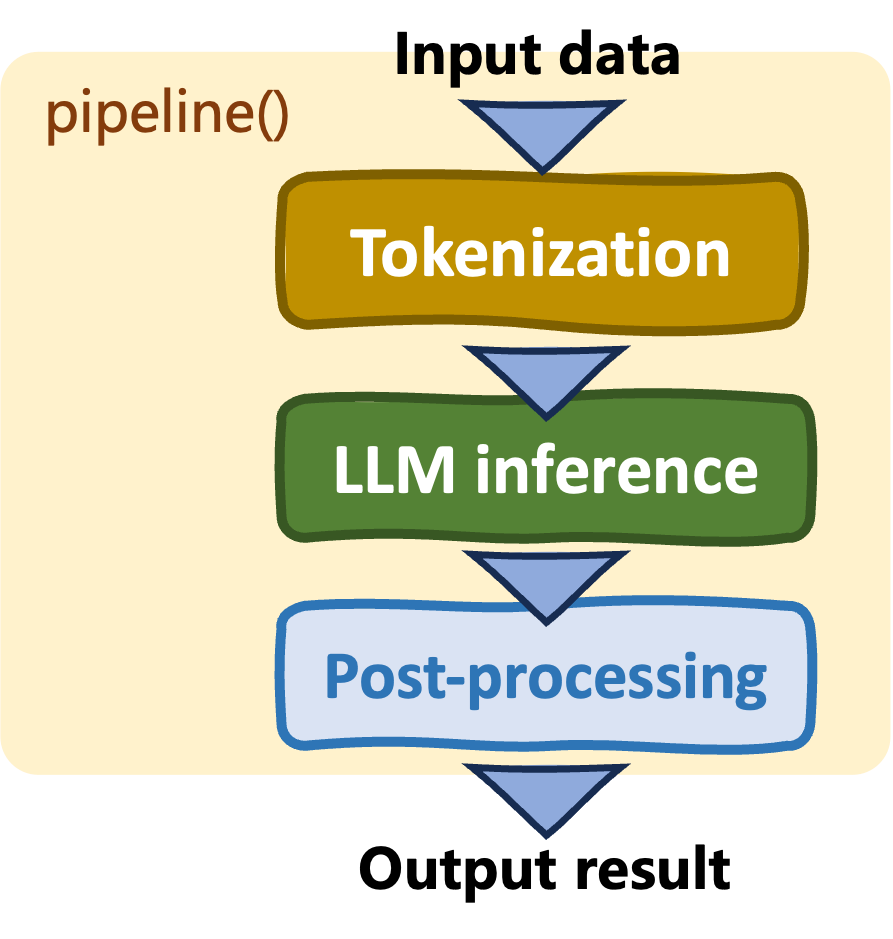

Pipelines and auto classes

Pipelines: pipeline()

- Streamlines tasks

- Automatic model and tokenizer selection

- Limited control

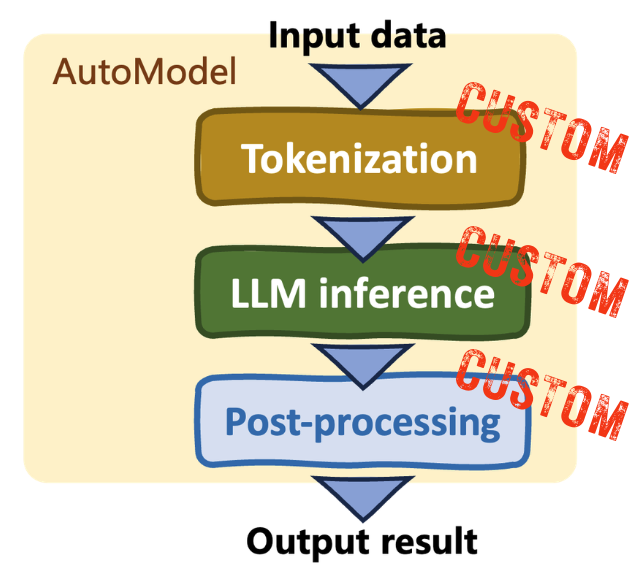

Auto classes (AutoModel class)

- Customization

- Manual adjustments

- Supports fine-tuning

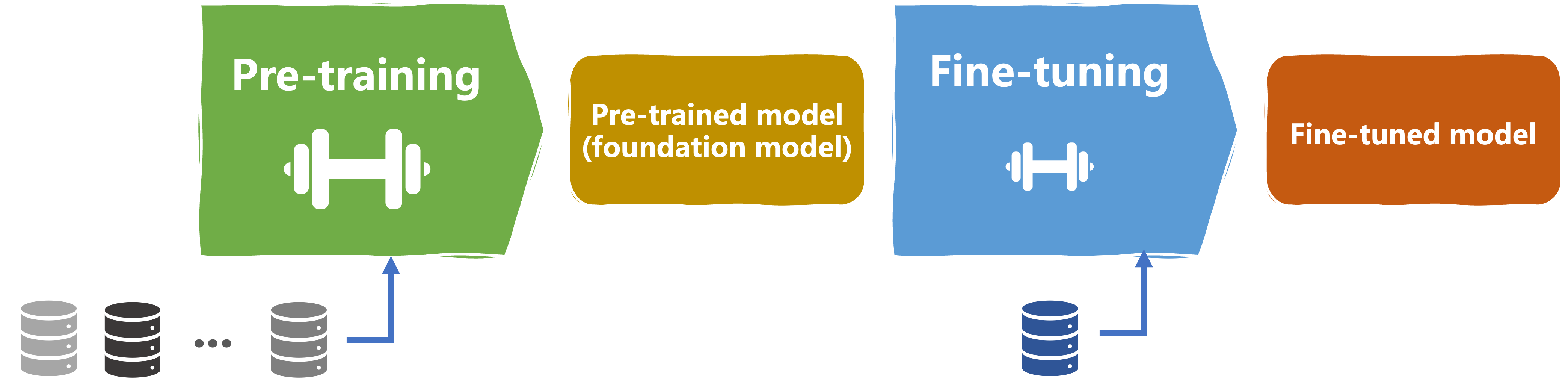
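The contrast between the two approaches can be sketched as follows. This is an illustration, not the course's own code: the checkpoint name is an assumption (a publicly available sentiment model, not mentioned in the slides), and both helper functions are hypothetical names introduced here. Imports are deferred into the functions so that defining them does not load any model.

```python
# Assumed checkpoint for illustration only; any sequence-classification
# checkpoint from the Hugging Face Hub could be substituted.
CHECKPOINT = "distilbert-base-uncased-finetuned-sst-2-english"


def classify_with_pipeline(texts):
    """pipeline(): one call wires up tokenizer, model, and post-processing."""
    from transformers import pipeline

    classifier = pipeline("text-classification", model=CHECKPOINT)
    # Each result is a dict with "label" and "score" keys.
    return [result["label"] for result in classifier(texts)]


def classify_with_auto_classes(texts):
    """Auto classes: every step is explicit, so each one can be customized."""
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(CHECKPOINT)
    model = AutoModelForSequenceClassification.from_pretrained(CHECKPOINT)

    # Manual tokenization: padding and truncation are under our control.
    inputs = tokenizer(texts, return_tensors="pt", padding=True, truncation=True)

    # Manual forward pass without gradient tracking (inference only).
    with torch.no_grad():
        logits = model(**inputs).logits

    # Manual post-processing: pick the highest-scoring class per text.
    predicted_ids = logits.argmax(dim=-1).tolist()
    return [model.config.id2label[i] for i in predicted_ids]
```

Calling either function with a list of review strings should return one label per review. The second version is longer, but the tokenizer and model objects it creates are the ones a fine-tuning loop would work with.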

LLM lifecycle

Pre-training

- Broad data

- Learn general patterns

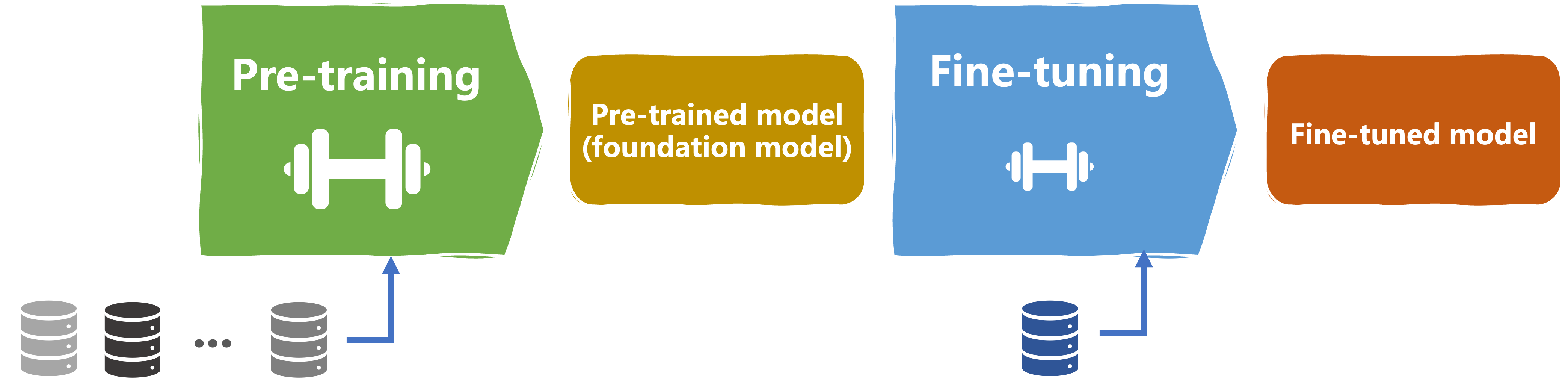

Fine-tuning

- Domain-specific data

- Specialized tasks

Loading a dataset for fine-tuning

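    from datasets import load_dataset

    # Load the IMDB training split and keep one quarter of it
    train_data = load_dataset("imdb", split="train")
    train_data = train_data.shard(num_shards=4, index=0)

    # Load the IMDB test split and keep one quarter of it
    test_data = load_dataset("imdb", split="test")
    test_data = test_data.shard(num_shards=4, index=0)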

- load_dataset(): loads a dataset from the Hugging Face Hub

- imdb: review classification
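To make the effect of shard(num_shards=4, index=0) concrete, here is a minimal pure-Python sketch of which row indices one shard could contain. The helper shard_indices is hypothetical and only mimics the idea; whether the real library returns a contiguous or a strided shard by default appears to depend on the installed version of datasets, so both layouts are shown.

```python
def shard_indices(n_rows, num_shards, index, contiguous=True):
    """Return the row indices that shard number `index` would hold.

    Illustrative helper, not part of the datasets library.
    """
    if contiguous:
        # Split rows into consecutive blocks; the first `mod` shards
        # receive one extra row when n_rows is not evenly divisible.
        div, mod = divmod(n_rows, num_shards)
        start = div * index + min(index, mod)
        end = start + div + (1 if index < mod else 0)
        return list(range(start, end))
    # Strided layout: take every num_shards-th row, starting at `index`.
    return list(range(index, n_rows, num_shards))


contiguous_shard = shard_indices(10, num_shards=4, index=0)
strided_shard = shard_indices(10, num_shards=4, index=0, contiguous=False)
print(contiguous_shard)  # [0, 1, 2]
print(strided_shard)     # [0, 4, 8]
```

Either way, each shard holds roughly a quarter of the rows, which keeps tokenization and training fast while experimenting.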

Auto classes

    from transformers import AutoModel, AutoTokenizer
    from transformers import AutoModelForSequenceClassification

    # Load a pre-trained model with a classification head, plus its tokenizer
    model = AutoModelForSequenceClassification.from_pretrained("bert-base-uncased")
    tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

Tokenization

    from transformers import AutoTokenizer, AutoModelForSequenceClassification
    from datasets import load_dataset

    train_data = load_dataset("imdb", split="train")
    train_data = train_data.shard(num_shards=4, index=0)
    test_data = load_dataset("imdb", split="test")
    test_data = test_data.shard(num_shards=4, index=0)

    model = AutoModelForSequenceClassification.from_pretrained("bert-base-uncased")
    tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

    # Tokenize the data
    tokenized_training_data = tokenizer(train_data["text"], return_tensors="pt",
                                        padding=True, truncation=True, max_length=64)
    tokenized_test_data = tokenizer(test_data["text"], return_tensors="pt",
                                    padding=True, truncation=True, max_length=64)
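What padding=True, truncation=True, and max_length do to a batch can be sketched in plain Python. This is a simplified illustration under stated assumptions: pad_and_truncate is a hypothetical helper, the token ids are made up, a pad id of 0 is assumed, and the real tokenizer additionally preserves special tokens when it truncates.

```python
def pad_and_truncate(sequences, max_length, pad_id=0):
    """Truncate each sequence to max_length, then pad to the longest one."""
    # truncation=True: cut anything beyond max_length tokens.
    truncated = [seq[:max_length] for seq in sequences]

    # padding=True: pad up to the longest sequence in this batch.
    longest = max(len(seq) for seq in truncated)
    input_ids = [seq + [pad_id] * (longest - len(seq)) for seq in truncated]

    # The attention mask marks real tokens with 1 and padding with 0.
    attention_mask = [
        [1] * len(seq) + [0] * (longest - len(seq)) for seq in truncated
    ]
    return {"input_ids": input_ids, "attention_mask": attention_mask}


# Two made-up token id sequences of different lengths.
batch = [[101, 7592, 102], [101, 1045, 2293, 2023, 3185, 102]]
encoded = pad_and_truncate(batch, max_length=4)
print(encoded["input_ids"])       # [[101, 7592, 102, 0], [101, 1045, 2293, 2023]]
print(encoded["attention_mask"])  # [[1, 1, 1, 0], [1, 1, 1, 1]]
```

After this step every row has the same length, which is what allows the batch to be stacked into a single tensor with return_tensors="pt".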

Tokenization output

    print(tokenized_training_data)

    {'input_ids': tensor([[ 101, 1045, 12524, 1045, 2572, 8025, 1011, 3756,
             2013, 2026, 2678, 3573, 2138, 1997, 2035, 1996, 6704, 2008, 5129, 2009,
             2043, 2009, 2001, 2034, 2207, 1999, 3476, 1012, 1045, 2036, ...

Tokenizing row by row

    def tokenize_function(text_data):
        return tokenizer(text_data["text"], return_tensors="pt", padding=True,
                         truncation=True, max_length=64)

    # Tokenize in batches
    tokenized_in_batches = train_data.map(tokenize_function, batched=True)

    # Tokenize row by row
    tokenized_by_row = train_data.map(tokenize_function, batched=False)

    Dataset({
        features: ['text', 'label', 'input_ids', 'token_type_ids', 'attention_mask'],
        num_rows: 1563
    })
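The difference between batched=True and batched=False comes down to what the mapped function receives: a dictionary of lists covering many rows, or a dictionary for a single row. The sketch below imitates that behaviour in plain Python. toy_map and toy_tokenize are hypothetical stand-ins, not the datasets or transformers APIs, and the batch size of 2 is chosen only to keep the example small.

```python
def toy_tokenize(example):
    """Whitespace tokenizer that pads to the longest text in one call."""
    texts = example["text"]
    single = isinstance(texts, str)
    if single:
        texts = [texts]
    tokens = [text.split() for text in texts]
    longest = max(len(t) for t in tokens)
    padded = [t + ["[PAD]"] * (longest - len(t)) for t in tokens]
    return {"tokens": padded[0] if single else padded}


def toy_map(rows, fn, batched, batch_size=2):
    """Apply fn to rows either one batch at a time or one row at a time."""
    out = []
    if batched:
        for start in range(0, len(rows), batch_size):
            chunk = rows[start:start + batch_size]
            # The function sees a dict of lists covering the whole chunk.
            result = fn({"text": [row["text"] for row in chunk]})
            for i, row in enumerate(chunk):
                out.append({**row, **{k: v[i] for k, v in result.items()}})
    else:
        for row in rows:
            # The function sees a single row, so there is nothing to pad against.
            out.append({**row, **fn(row)})
    return out


rows = [
    {"text": "great film"},
    {"text": "not my favourite film at all"},
    {"text": "bad"},
]
batched_lengths = [len(r["tokens"]) for r in toy_map(rows, toy_tokenize, batched=True)]
row_lengths = [len(r["tokens"]) for r in toy_map(rows, toy_tokenize, batched=False)]
print(batched_lengths)  # [6, 6, 1]
print(row_lengths)      # [2, 6, 1]
```

Rows processed in the same batch end up padded to a shared length, while rows processed individually keep their own lengths. Batched mapping is usually faster because the tokenizer handles many texts per call.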

Subword tokenization

- Common in modern tokenizers

- Words split into meaningful sub-parts
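As a rough illustration of how a word is split into sub-parts, here is a sketch of greedy longest-match-first splitting in the style of WordPiece, the scheme used by BERT tokenizers. The vocabulary is made up for this example and the function name is hypothetical; a real tokenizer uses a learned vocabulary with tens of thousands of entries.

```python
def wordpiece_split(word, vocab, unk_token="[UNK]"):
    """Split one word into subword pieces by greedy longest match."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                # Pieces that continue a word carry a "##" prefix.
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            # No piece fits at this position: the whole word is unknown.
            return [unk_token]
        pieces.append(match)
        start = end
    return pieces


# Made-up vocabulary for illustration only.
toy_vocab = {"token", "##ization", "##izer", "un", "##believ", "##able"}

print(wordpiece_split("tokenization", toy_vocab))  # ['token', '##ization']
print(wordpiece_split("unbelievable", toy_vocab))  # ['un', '##believ', '##able']
print(wordpiece_split("xyz", toy_vocab))           # ['[UNK]']
```

Because rare words are assembled from common pieces, the vocabulary stays small while most words can still be represented.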


Let's practice!
