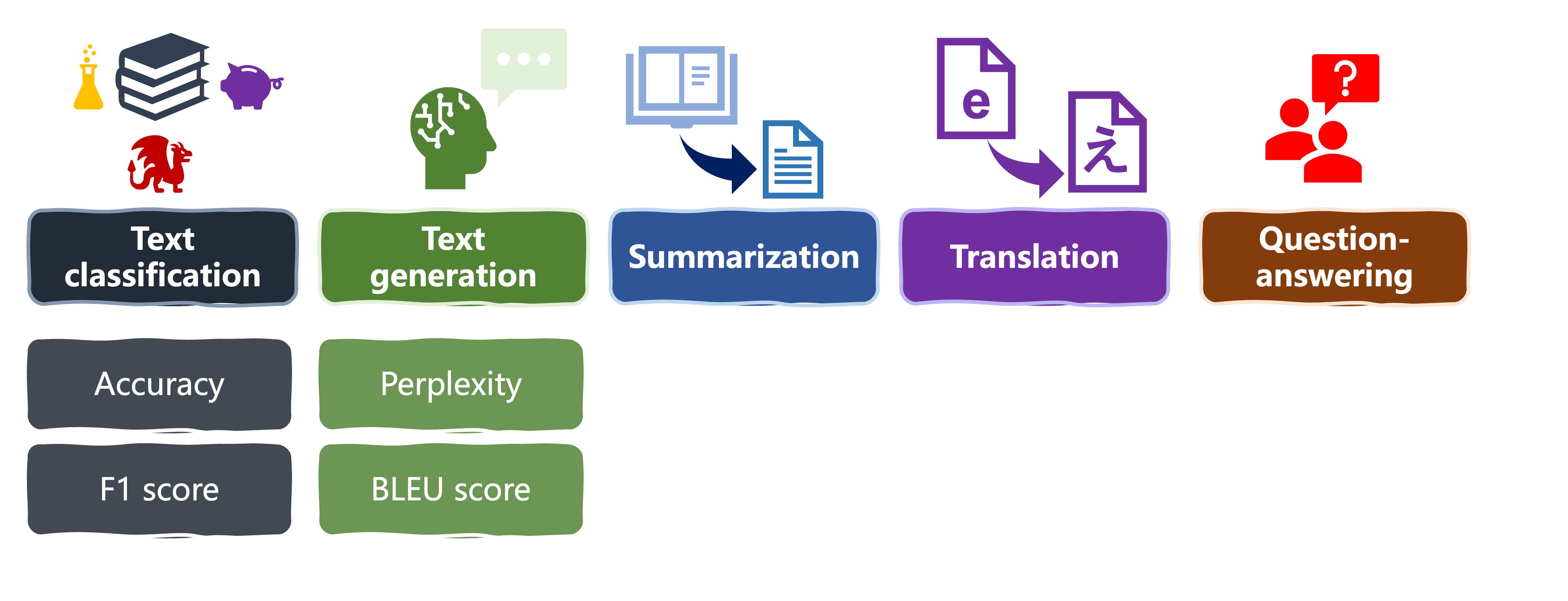

Metrics for language tasks: perplexity and BLEU

Introduction to LLMs in Python

Jasmin Ludolf

Senior Data Science Content Developer, DataCamp

LLM tasks and metrics

Perplexity

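- Measures how well a model predicts each next token of a text, i.e. how accurately and confidently it does so

- Lower perplexity = higher confidence (the model assigns higher probability to the tokens that actually come next)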
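Perplexity is the exponential of the average negative log-probability the model assigns to each actual next token: PPL = exp(-(1/N) Σ log p(w_i | w_<i)). Below is a minimal sketch in plain Python. It assumes you already have the per-token probabilities, and the numbers are made up for illustration. A uniform guess over V tokens gives a perplexity of exactly V, which is why perplexity is often read as the model's "effective number of choices" per token.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)

# Hypothetical probabilities a confident model gives to the actual next tokens
confident = perplexity([0.9, 0.8, 0.95, 0.85])
# Hypothetical probabilities from a hesitant model for the same tokens
hesitant = perplexity([0.2, 0.1, 0.3, 0.25])
# Uniform guessing over 4 possible tokens gives perplexity 4
uniform = perplexity([1 / 4] * 4)

print(round(confident, 3), round(hesitant, 3), round(uniform, 3))
```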

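```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# Assumes GPT-2, the same model scored by the perplexity metric below
tokenizer = AutoTokenizer.from_pretrained("gpt2")
model = AutoModelForCausalLM.from_pretrained("gpt2")

input_text = "Latest research findings in Antarctica show"

# Encode the prompt, generate text and decode it
input_text_ids = tokenizer.encode(input_text, return_tensors="pt")
output = model.generate(input_text_ids, max_length=20)
generated_text = tokenizer.decode(output[0], skip_special_tokens=True)
# Example generated text:
# "Latest research findings in Antarctica show that the ice sheet is melting faster than previously thought."
```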

Perplexity output

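```python
import evaluate

perplexity = evaluate.load("perplexity", module_type="metric")
# predictions expects a list of strings; one perplexity is returned per string
results = perplexity.compute(predictions=[generated_text], model_id="gpt2")
print(results)
```

The output below was produced by passing `generated_text` as a bare string, which the metric treats as a sequence of single characters and scores one by one. With `[generated_text]` you get a single perplexity for the whole sentence, so your numbers will differ.

```
{'perplexities': [245.63299560546875, 520.3106079101562, ....],
 'mean_perplexity': 2867.7229790460497}
```

```python
print(results["mean_perplexity"])
```

```
2867.7229790460497
```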

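- Compare to baseline results (e.g., another model scored on the same text); absolute perplexity values depend on the model, tokenizer and text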

BLEU

Measures translation quality by comparing the n-gram overlap between generated text and human references

- Predictions: the LLM's outputs

- References: human-written reference texts
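BLEU starts from clipped (modified) n-gram precision. This is the share of the prediction's n-grams that also appear in a reference, where each n-gram is credited at most as many times as it occurs in a single reference. Below is a minimal sketch in plain Python that assumes simple whitespace tokenization. `evaluate`'s BLEU applies its own tokenizer, so its lengths and counts can differ. `ngrams` and `modified_precision` are hypothetical helper names, not library functions.

```python
from collections import Counter

def ngrams(tokens, n):
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def modified_precision(prediction, references, n):
    """Clipped n-gram precision: each predicted n-gram counts at most as often
    as it appears in any single reference."""
    pred_counts = ngrams(prediction, n)
    max_ref_counts = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref_counts[gram] = max(max_ref_counts[gram], count)
    clipped = sum(min(count, max_ref_counts[gram]) for gram, count in pred_counts.items())
    total = sum(pred_counts.values())
    return clipped / total if total else 0.0

# Whitespace tokenization is a simplifying assumption
prediction = ("Latest research findings in Antarctica show that the ice sheet "
              "is melting faster than previously thought.").split()
references = [
    "Latest research findings in Antarctica show significant ice loss due to climate change.".split(),
    ("Latest research findings in Antarctica show that the ice sheet "
     "is melting faster than previously thought.").split(),
]

# 1- to 4-gram precisions; the prediction matches the second reference exactly
precisions = [modified_precision(prediction, references, n) for n in range(1, 5)]
print(precisions)  # [1.0, 1.0, 1.0, 1.0]

# Clipping: "the" appears once in the reference, so only 1 of 3 is credited
print(modified_precision("the the the".split(), [["the", "cat"]], 1))
```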

```python
import evaluate

bleu = evaluate.load("bleu")

input_text = "Latest research findings in Antarctica show"
# One prediction, scored against two human references
references = [["Latest research findings in Antarctica show significant ice loss due to climate change.",
               "Latest research findings in Antarctica show that the ice sheet is melting faster than previously thought."]]
generated_text = "Latest research findings in Antarctica show that the ice sheet is melting faster than previously thought."
```

BLEU output

```python
results = bleu.compute(predictions=[generated_text], references=references)
print(results)
```

```
{'bleu': 1.0,
 'precisions': [1.0, 1.0, 1.0, 1.0],
 'brevity_penalty': 1.0,
 'length_ratio': 1.2142857142857142,
 'translation_length': 17,
 'reference_length': 14}
```

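- 0-1 score: closer to 1 = higher similarity to the references (1.0 here, because the generated text matches the second reference word for word)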
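The reported `bleu` value combines the other fields. It is the geometric mean of the n-gram `precisions`, multiplied by a `brevity_penalty` that only applies when `translation_length` is shorter than `reference_length`. Here `length_ratio` (17 / 14 ≈ 1.21) is above 1, so the penalty is 1.0. Below is a minimal sketch of that combination. It assumes the standard BLEU formula with uniform weights over 1- to 4-grams and no smoothing. `brevity_penalty` and `bleu_from_parts` are hypothetical names, not part of `evaluate`.

```python
import math

def brevity_penalty(translation_length, reference_length):
    """1.0 if the output is longer than the reference, else exp(1 - r/c)."""
    if translation_length == 0:
        return 0.0
    ratio = translation_length / reference_length
    if ratio > 1.0:
        return 1.0
    return math.exp(1 - 1 / ratio)

def bleu_from_parts(precisions, translation_length, reference_length):
    """BLEU = brevity penalty x geometric mean of the n-gram precisions."""
    if min(precisions) == 0:
        return 0.0  # without smoothing, any zero precision gives BLEU 0
    geo_mean = math.exp(sum(math.log(p) for p in precisions) / len(precisions))
    return geo_mean * brevity_penalty(translation_length, reference_length)

# Values taken from the results dictionary above
print(bleu_from_parts([1.0, 1.0, 1.0, 1.0], 17, 14))  # 1.0
# The same precisions with a shorter output are penalized
print(bleu_from_parts([1.0, 1.0, 1.0, 1.0], 10, 14))
```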

