Understanding the transformer

Introduction to LLMs in Python

Jasmin Ludolf

Senior Data Science Content Developer, DataCamp

What is a transformer?

- A deep learning architecture
- Processes, understands, and generates text
- Used in most LLMs
- Handles long text sequences in parallel

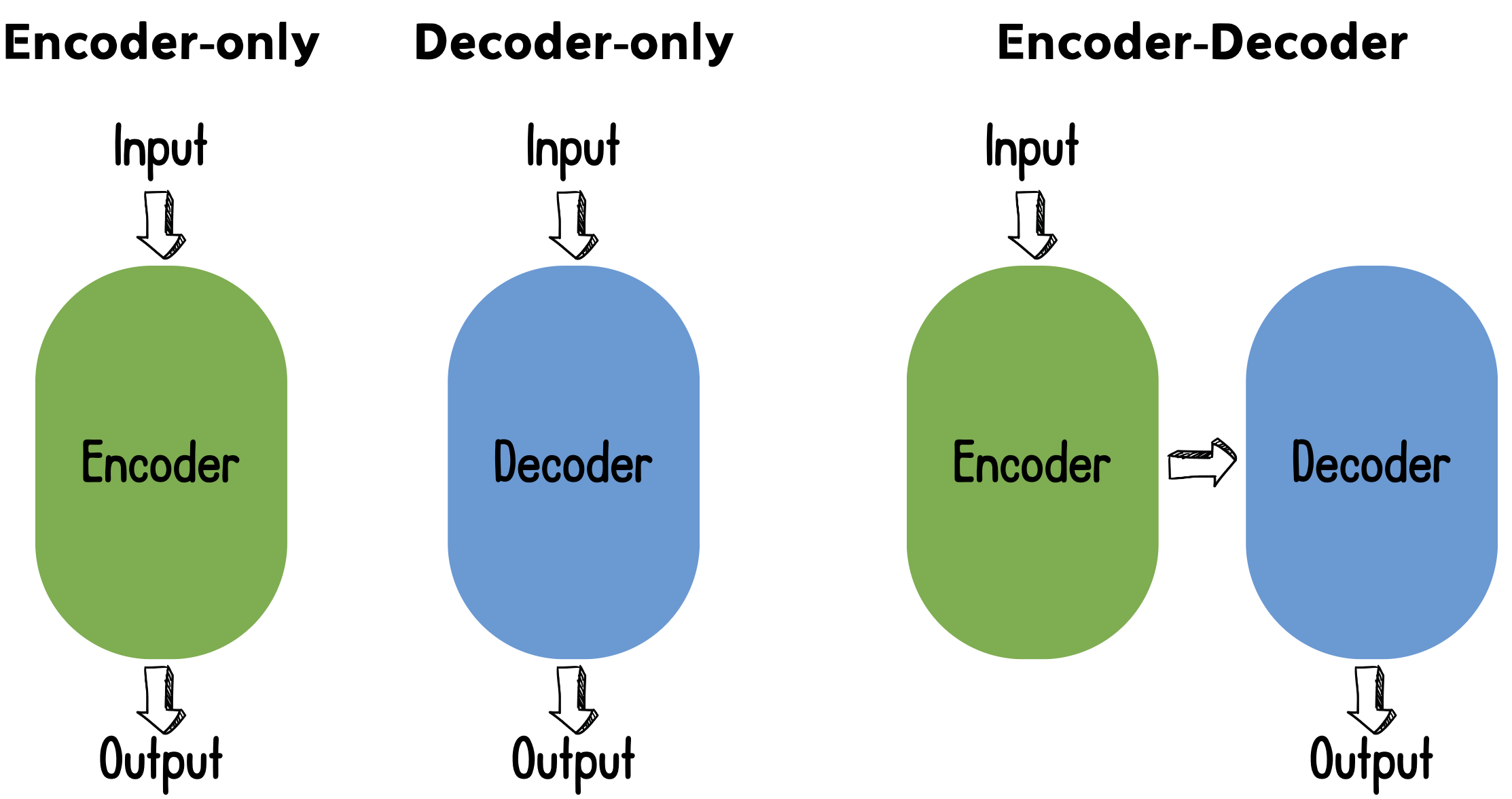
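Processing "in parallel" is easiest to see in self-attention, the core operation inside a transformer layer. Below is a minimal single-head sketch in NumPy. It assumes random weights in place of learned ones and leaves out masking, multiple heads, and the rest of the layer. `self_attention` is an illustrative name, not a library function. Every position is handled by the same few matrix multiplications instead of a loop over tokens.

```python
import numpy as np


def self_attention(x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray, w_v: np.ndarray) -> np.ndarray:
    """Single-head scaled dot-product self-attention over a whole sequence at once.

    x has shape (seq_len, d_model); each w_* has shape (d_model, d_head).
    """
    q = x @ w_q  # queries for every position, computed in one matrix multiply
    k = x @ w_k  # keys for every position
    v = x @ w_v  # values for every position
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (seq_len, seq_len): every pair of positions
    scores = scores - scores.max(axis=-1, keepdims=True)  # subtract row max for stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax over each row
    return weights @ v  # (seq_len, d_head): one output vector per position


rng = np.random.default_rng(0)
seq_len, d_model, d_head = 5, 8, 4
x = rng.normal(size=(seq_len, d_model))  # stand-in for 5 token embeddings
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))
out = self_attention(x, w_q, w_k, w_v)
print(out.shape)  # (5, 4)
```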

Transformer architectures

- Find the architecture details in the Hugging Face model card

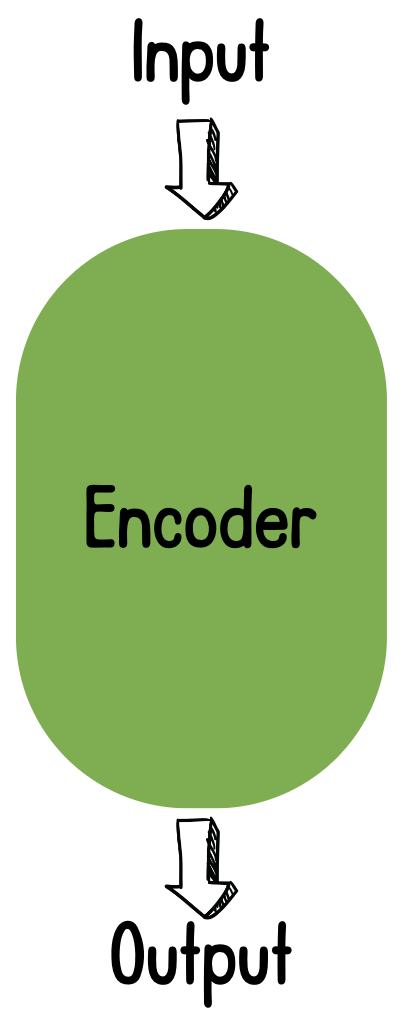

Encoder-only

- Understanding the input text
- No sequential (token-by-token) output
- Common tasks:
  - Text classification
  - Sentiment analysis
  - Extractive question-answering (extract or label)
- BERT models
  - Example: "distilbert-base-uncased-distilled-squad"
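"Extractive" means the answer is a span copied from the input text. Hugging Face question-answering models built on encoders such as DistilBERT output a start score and an end score for every context token, and the answer is the highest-scoring valid span. The NumPy sketch below shows only that selection step, using made-up scores. `best_answer_span` and its `max_answer_len` limit are hypothetical, and the real pipeline adds tokenization and long-context handling on top.

```python
import numpy as np


def best_answer_span(start_logits, end_logits, max_answer_len=15):
    """Return (start, end) token indices of the highest-scoring answer span.

    A span is valid only if start <= end and it is at most max_answer_len tokens long.
    """
    start_logits = np.asarray(start_logits, dtype=float)
    end_logits = np.asarray(end_logits, dtype=float)
    n = len(start_logits)
    # scores[i, j] = score of the span that starts at token i and ends at token j
    scores = start_logits[:, None] + end_logits[None, :]
    # Rule out spans that end before they start or are longer than max_answer_len
    i, j = np.indices((n, n))
    valid = (j >= i) & (j - i < max_answer_len)
    scores = np.where(valid, scores, -np.inf)
    start, end = np.unravel_index(np.argmax(scores), scores.shape)
    return int(start), int(end)


# Hypothetical scores for a 6-token context (not real model output)
tokens = ["the", "tower", "is", "in", "central", "paris"]
start_logits = [0.1, 0.2, 0.0, 0.1, 3.0, 0.5]
end_logits = [0.0, 0.1, 0.2, 0.0, 0.2, 4.0]
start, end = best_answer_span(start_logits, end_logits)
print(" ".join(tokens[start:end + 1]))  # central paris
```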


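```python
from transformers import pipeline

# No task given: it is inferred from the model's Hub metadata (fill-mask here)
llm = pipeline(model="bert-base-uncased")

print(llm.model)
```

```
BertForMaskedLM(
  (bert): ...
    (encoder): BertEncoder(
      ...
```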

```python
print(llm.model.config)
```

```
BertConfig {
  ...
  "architectures": [
    "BertForMaskedLM"
  ],
  ...
```


```python
print(llm.model.config.is_decoder)
```

```
False
```

- Alternatively, check `llm.model.config.is_encoder_decoder`, which is also `False` for an encoder-only model like BERT

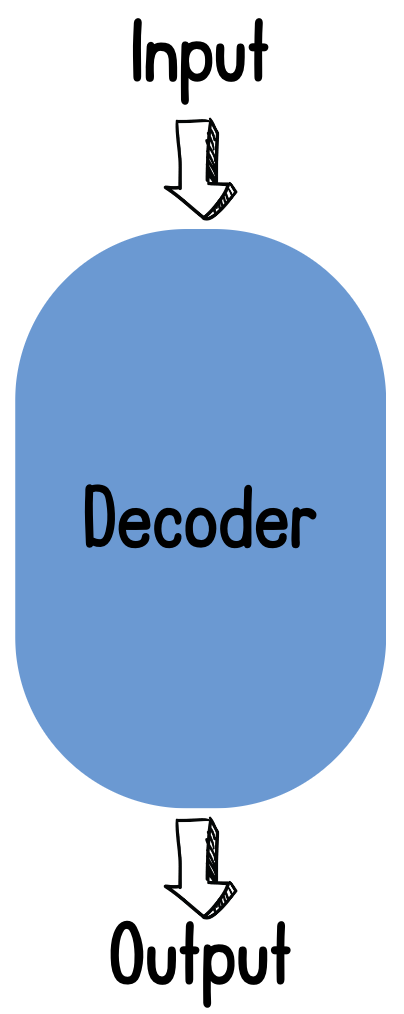
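The attribute checks in this lesson can be folded into one helper. This is a heuristic sketch, not a Hugging Face API: `architecture_type` is a hypothetical function that works on a plain config dict. It trusts `is_encoder_decoder` when that is set, and otherwise assumes that class names ending in `LMHeadModel` or `ForCausalLM` indicate a decoder-only model (the GPT-2 config in this lesson reports `is_decoder` as `False`, so that flag alone is not enough).

```python
def architecture_type(config: dict) -> str:
    """Heuristically label a config dict as encoder-only, decoder-only, or encoder-decoder."""
    # An explicit encoder-decoder flag settles it (e.g. Marian, T5, BART)
    if config.get("is_encoder_decoder", False):
        return "encoder-decoder"
    # Assumption: decoder-only checkpoints usually list a causal LM head class
    architectures = config.get("architectures") or []
    if any(name.endswith(("LMHeadModel", "ForCausalLM")) for name in architectures):
        return "decoder-only"
    # Anything else is treated as encoder-only (e.g. BertForMaskedLM)
    return "encoder-only"


# Trimmed-down config dicts modeled on the printouts in this lesson
configs = {
    "bert-base-uncased": {"architectures": ["BertForMaskedLM"], "is_decoder": False},
    "gpt2": {"architectures": ["GPT2LMHeadModel"], "is_decoder": False},
    "Helsinki-NLP/opus-mt-es-en": {"is_encoder_decoder": True, "encoder_attention_heads": 8},
}

for name, cfg in configs.items():
    print(f"{name}: {architecture_type(cfg)}")
```

With a loaded pipeline, `architecture_type(llm.model.config.to_dict())` would apply the same check, since Hugging Face config objects provide a `to_dict()` method.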

Decoder-only

- Focus shifts to the output
- Common tasks:
  - Text generation
  - Generative question-answering (sentence(s) or paragraph(s))
- GPT models
  - Example: "gpt-3.5-turbo" (an OpenAI model, used through the OpenAI API rather than Hugging Face)
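Decoder-only models generate output sequentially: each new token is chosen from scores over the vocabulary and then appended to the input for the next step. The pure-Python sketch below shows greedy decoding with a toy lookup table. `greedy_generate`, `toy_scores`, and `TOY_TABLE` are hypothetical stand-ins for a real model such as GPT-2, and greedy search is only one of several decoding strategies.

```python
def greedy_generate(next_token_scores, prompt, max_new_tokens=5, eos="<eos>"):
    """Generate tokens one at a time, feeding each choice back in as input.

    `next_token_scores(tokens)` stands in for a decoder-only model: it maps the
    tokens so far to a dict of {candidate_token: score}.
    """
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)
        best = max(scores, key=scores.get)  # greedy: take the highest-scoring token
        if best == eos:
            break
        tokens.append(best)  # the new token becomes part of the next input
    return tokens


# Toy "model": looks only at the last token (a real model uses the whole prefix)
TOY_TABLE = {
    "the": {"cat": 0.6, "dog": 0.3, "<eos>": 0.1},
    "cat": {"sat": 0.7, "<eos>": 0.3},
    "sat": {"down": 0.8, "<eos>": 0.2},
    "down": {"<eos>": 0.9, "the": 0.1},
}


def toy_scores(tokens):
    return TOY_TABLE.get(tokens[-1], {"<eos>": 1.0})


print(" ".join(greedy_generate(toy_scores, ["the"])))  # the cat sat down
```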


```python
from transformers import pipeline

llm = pipeline(model="gpt2")

print(llm.model.config)
```

```
GPT2Config {
  ...
  "architectures": [
    "GPT2LMHeadModel"
  ],
  ...
  "task_specific_params": {
    "text-generation": {
      ...
```

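```python
print(llm.model.config.is_decoder)
```

```
False
```

- GPT-2 is decoder-only, yet `is_decoder` is `False`: the flag defaults to `False` and GPT-2 does not set it. In Hugging Face configs it mainly matters when an encoder-style model such as BERT is run as a decoder, so the architecture name (`GPT2LMHeadModel`) and the `"text-generation"` entry in `task_specific_params` are better clues here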

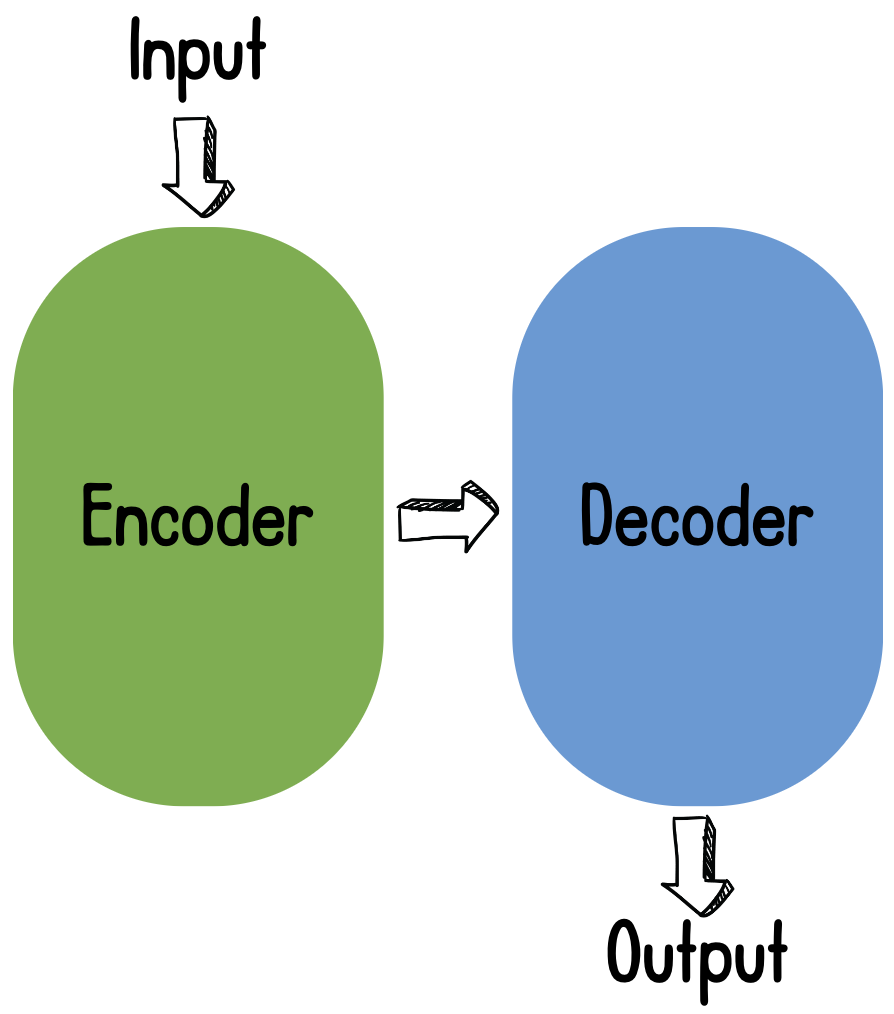

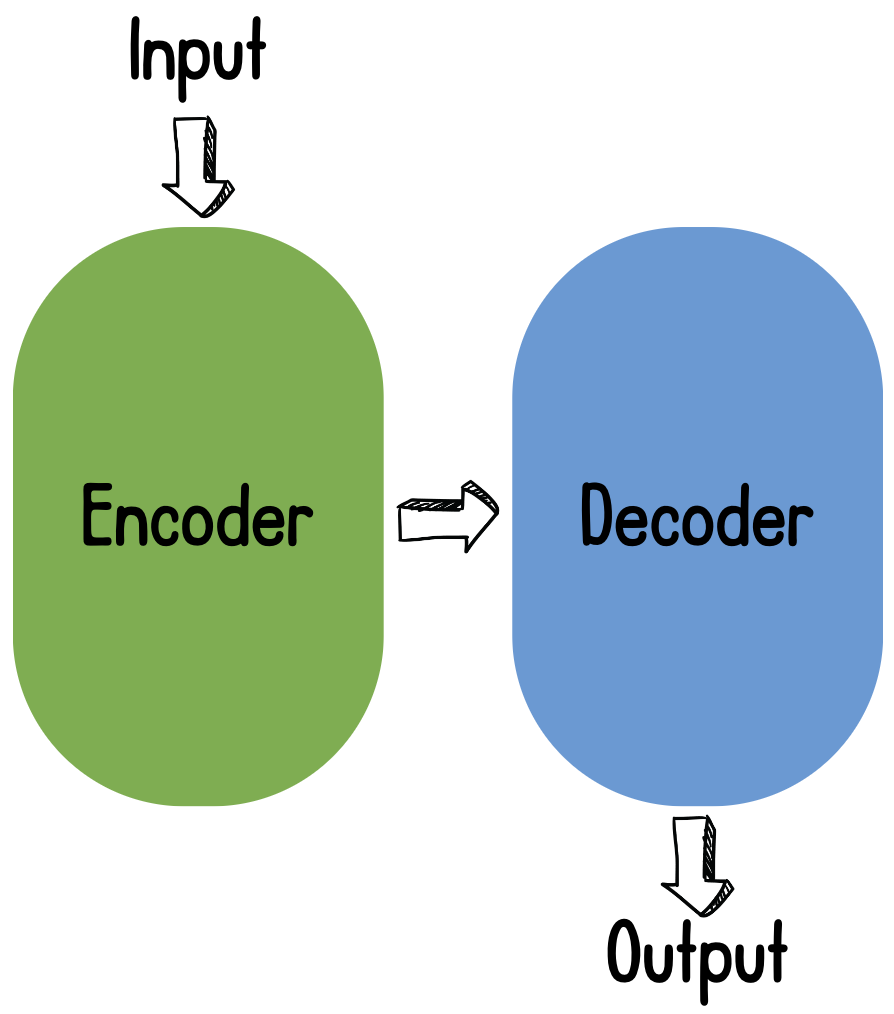

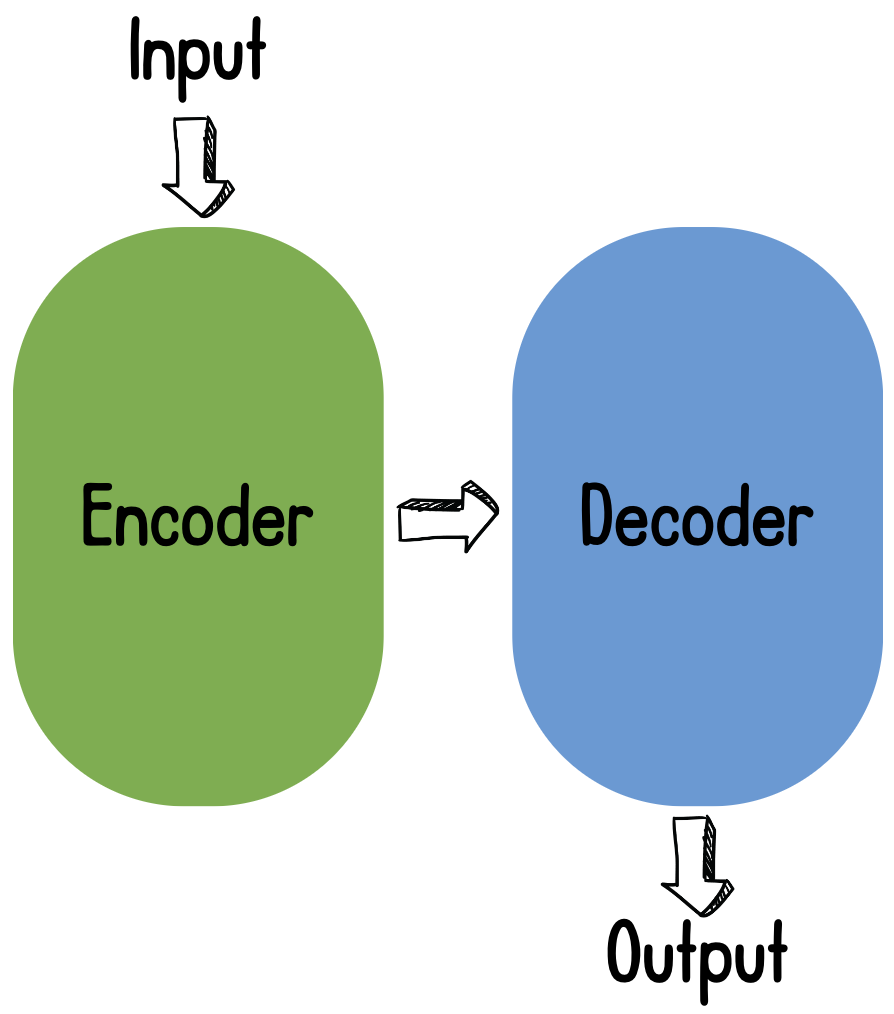
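What makes self-attention behave like a decoder is a causal mask: each position may attend only to itself and earlier positions, so the model cannot look at tokens it has not generated yet. Below is a minimal NumPy sketch that applies such a mask to precomputed attention scores; `causal_attention_weights` is an illustrative name, not a library function. For BERT-style models in Hugging Face, switching on a mask like this is roughly what `is_decoder=True` does.

```python
import numpy as np


def causal_attention_weights(scores: np.ndarray) -> np.ndarray:
    """Softmax over attention scores with a causal (look-back-only) mask.

    scores has shape (seq_len, seq_len); entry [i, j] is how much position i
    would attend to position j before masking.
    """
    seq_len = scores.shape[0]
    # True above the diagonal: position j lies in the future relative to position i
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    masked = np.where(future, -np.inf, scores)
    masked = masked - masked.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(masked)  # exp(-inf) == 0, so future positions get zero weight
    return weights / weights.sum(axis=-1, keepdims=True)


# With equal scores, position i spreads its attention evenly over positions 0..i
w = causal_attention_weights(np.zeros((4, 4)))
print(np.round(w, 2))
```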

Encoder-decoder

- Understand and process the input and output
- Common tasks:
  - Translation
  - Summarization
- T5, BART models
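In an encoder-decoder model, the encoder reads the whole input once, and the decoder consults those encoded states through cross-attention: queries come from the decoder, keys and values come from the encoder. The NumPy sketch below assumes a single head, random weights, and small illustrative sizes (not Marian's real dimensions); `cross_attention` and `softmax` are defined here, not imported from a library.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    x = x - x.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def cross_attention(decoder_states, encoder_states, w_q, w_k, w_v):
    """Decoder positions (queries) attend over encoder outputs (keys and values)."""
    q = decoder_states @ w_q  # (tgt_len, d_head): one query per output position
    k = encoder_states @ w_k  # (src_len, d_head): one key per input position
    v = encoder_states @ w_v  # (src_len, d_head)
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]))  # (tgt_len, src_len)
    return weights @ v, weights


rng = np.random.default_rng(0)
d_model, d_head = 8, 4
encoder_states = rng.normal(size=(6, d_model))  # e.g. 6 encoded source (Spanish) tokens
decoder_states = rng.normal(size=(3, d_model))  # 3 target (English) tokens so far
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))
context, weights = cross_attention(decoder_states, encoder_states, w_q, w_k, w_v)
print(context.shape, weights.shape)  # (3, 4) (3, 6)
```

Each row of `weights` shows how one output position distributes its attention over the six input positions.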


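```python
from transformers import pipeline

# Spanish-to-English translation model (Marian architecture)
llm = pipeline(model="Helsinki-NLP/opus-mt-es-en")

print(llm.model)
```

```
MarianMTModel(
  ...
    (encoder): MarianEncoder(
      ...
    (decoder): MarianDecoder(
      ...
```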


```python
print(llm.model.config)
```

```
MarianConfig {
  ...
  "decoder_attention_heads": 8,
  ...
  "encoder_attention_heads": 8,
  ...
  "is_encoder_decoder": true,
  ...
```

```python
print(llm.model.config.is_encoder_decoder)
```

```
True
```

Let's practice!
