Data Ingestion and Scheduling in Dataflows Gen 2

Data Ingestion and Semantic Models with Microsoft Fabric

Alex Kuntz

Head of Cloud Curriculum, DataCamp

Data Destination Connections and Table Options

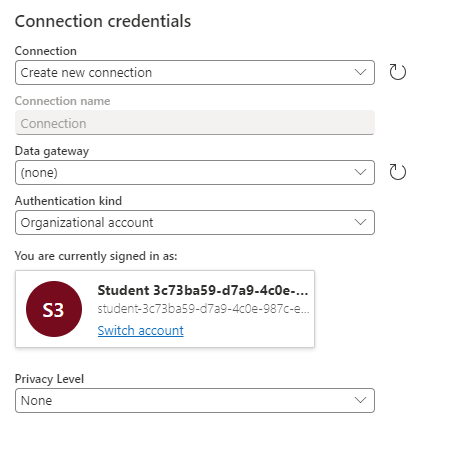

Configuring Connection to Data Destination

- Create new or use an existing connection based on the sink selected.

Create New or Use Existing Table

- New Table: Automatically recreated if deleted

- Existing Table: Not recreated if deleted

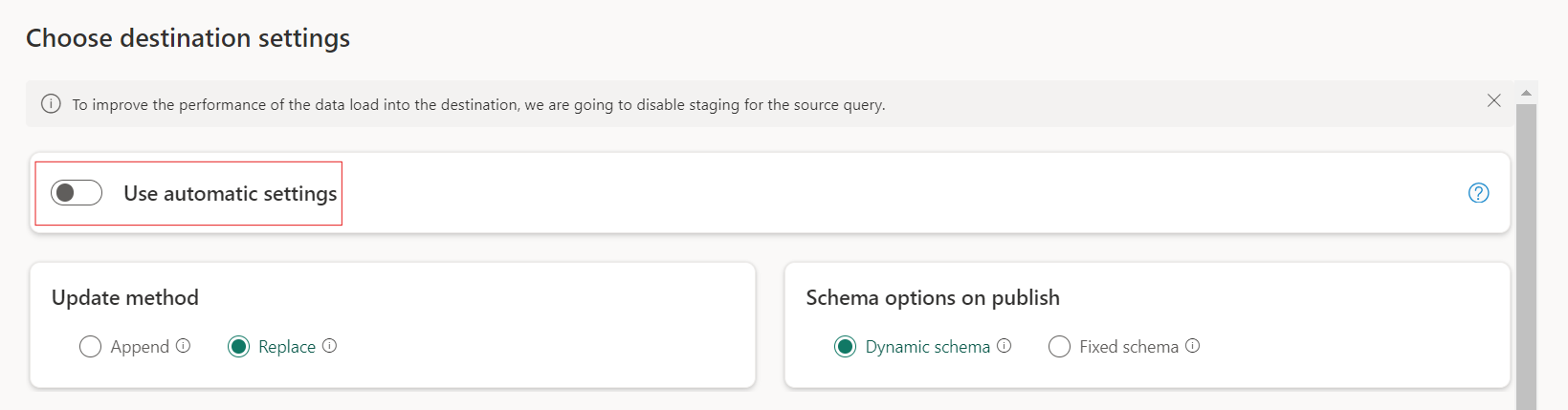

Managed Settings

- Automatic settings on by default

- Update Method: Data fully replaced with each refresh

- Managed Mapping: Automatic adjustments for schema changes

- Table Recreated: Table dropped and recreated during refresh

Manual Settings

Manual Settings for Data Destination

- Disable automatic settings for full control

- Modify column mapping, exclude unnecessary columns
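As a rough illustration of what manual column mapping amounts to, the sketch below renames source columns to destination names and drops anything left unmapped. It is plain Python, not Fabric's API; the function `map_columns` and the column names are hypothetical.

```python
def map_columns(row, column_mapping):
    """Keep only the mapped source columns and rename them to destination names."""
    return {dest: row[src] for src, dest in column_mapping.items()}


# Hypothetical mapping: source column name -> destination column name.
# "InternalNote" is not listed, so it is excluded from the destination.
mapping = {"CustomerID": "customer_id", "Total": "order_total"}
row = {"CustomerID": 7, "Total": 19.5, "InternalNote": "n/a"}

mapped = map_columns(row, mapping)
print(mapped)
```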

Update Methods

- Replace: Old data is removed and replaced

- Append: New data is added to the existing data
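The difference between the two update methods can be sketched in a few lines of plain Python. This only models the semantics described above; the `refresh` function is a hypothetical helper, not part of Fabric.

```python
def refresh(existing_rows, incoming_rows, update_method="replace"):
    """Return the destination rows after one refresh."""
    if update_method == "replace":
        # Old data is removed; only the new output remains.
        return list(incoming_rows)
    if update_method == "append":
        # New output is added after the rows already in the table.
        return list(existing_rows) + list(incoming_rows)
    raise ValueError(f"unknown update method: {update_method!r}")


destination = [{"id": 1}, {"id": 2}]
batch = [{"id": 3}]

replaced = refresh(destination, batch, "replace")
appended = refresh(destination, batch, "append")
print(replaced)  # [{'id': 3}]
print(appended)  # [{'id': 1}, {'id': 2}, {'id': 3}]
```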

Schema Options

- Dynamic Schema: Allows schema changes, but the table is dropped and recreated on refresh

- Fixed Schema: No schema changes; keeps relationships intact
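A minimal sketch of the two schema options, again in plain Python rather than any Fabric API. The function `apply_schema_option` is hypothetical, and raising an error on a schema mismatch is an assumption used here to model "no schema changes"; the actual behavior in Fabric may differ.

```python
def apply_schema_option(table_columns, query_columns, schema_option="fixed"):
    """Return (resulting_columns, table_recreated) for one refresh."""
    if schema_option == "dynamic":
        # The table is dropped and recreated with the query's current columns.
        return list(query_columns), True
    if schema_option == "fixed":
        # Assumption: a schema change is rejected instead of being applied.
        if list(query_columns) != list(table_columns):
            raise ValueError("schema change not allowed with a fixed schema")
        # The table itself is kept, so relationships stay intact.
        return list(table_columns), False
    raise ValueError(f"unknown schema option: {schema_option!r}")


table = ["id", "name"]
query = ["id", "name", "email"]

dynamic_result = apply_schema_option(table, query, "dynamic")
fixed_result = apply_schema_option(table, table, "fixed")
print(dynamic_result)
print(fixed_result)
```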

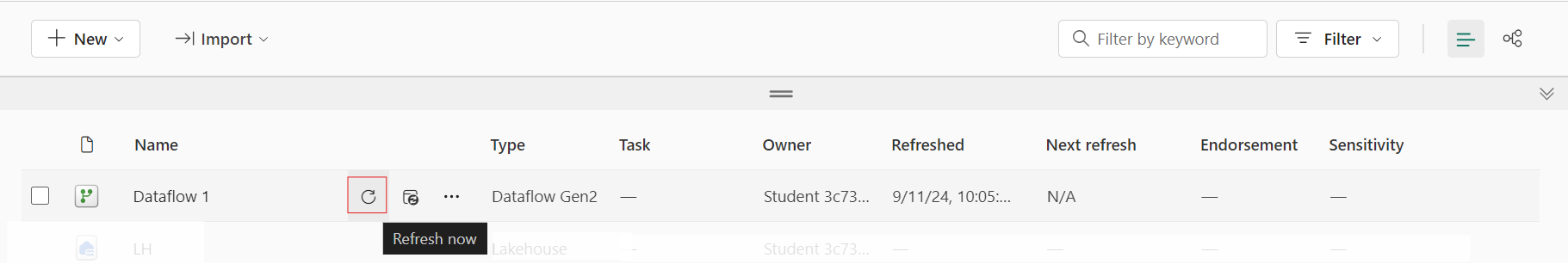

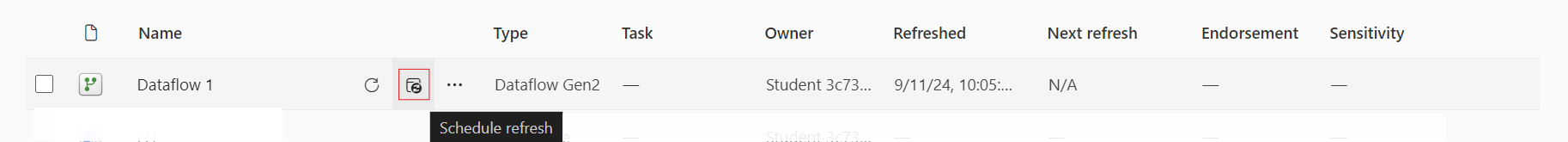

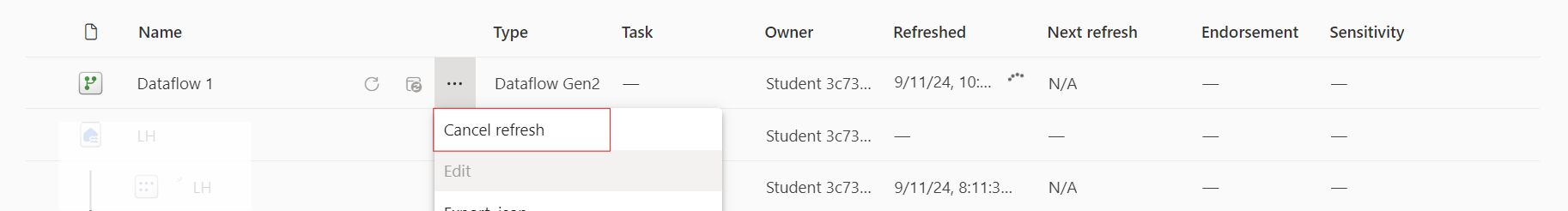

Managing Dataflow Refreshes

Dataflow refreshes apply transformations to keep destination data up to date

- On-Demand Refresh: Manually triggered or via pipeline

- Scheduled Refresh: Set refresh frequency (up to 48 times/day)

- Cancel Refresh: Stop a refresh while it is in progress
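To put the 48-per-day limit in perspective: spread evenly, 48 refreshes a day is one every 30 minutes. The sketch below computes evenly spaced times for a chosen frequency. It is only arithmetic in plain Python; `evenly_spaced_refresh_times` is a hypothetical helper, and an actual schedule is configured in the dataflow's settings, not in code like this.

```python
from datetime import datetime, timedelta


def evenly_spaced_refresh_times(refreshes_per_day, max_per_day=48):
    """Return HH:MM strings for refreshes spread evenly over one day."""
    if not 1 <= refreshes_per_day <= max_per_day:
        raise ValueError(f"refreshes_per_day must be between 1 and {max_per_day}")
    interval = timedelta(days=1) / refreshes_per_day
    midnight = datetime(2000, 1, 1)  # arbitrary date; only the time of day is used
    return [
        (midnight + i * interval).strftime("%H:%M")
        for i in range(refreshes_per_day)
    ]


times = evenly_spaced_refresh_times(48)
print(len(times))   # 48
print(times[:3])    # ['00:00', '00:30', '01:00']
```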

Let's practice!
