Advanced splitting methods

Retrieval Augmented Generation (RAG) with LangChain

Meri Nova

Machine Learning Engineer

Limitations of our current splitting strategies

🤦 Splits are naive (not context-aware)

- They ignore the context of the surrounding text

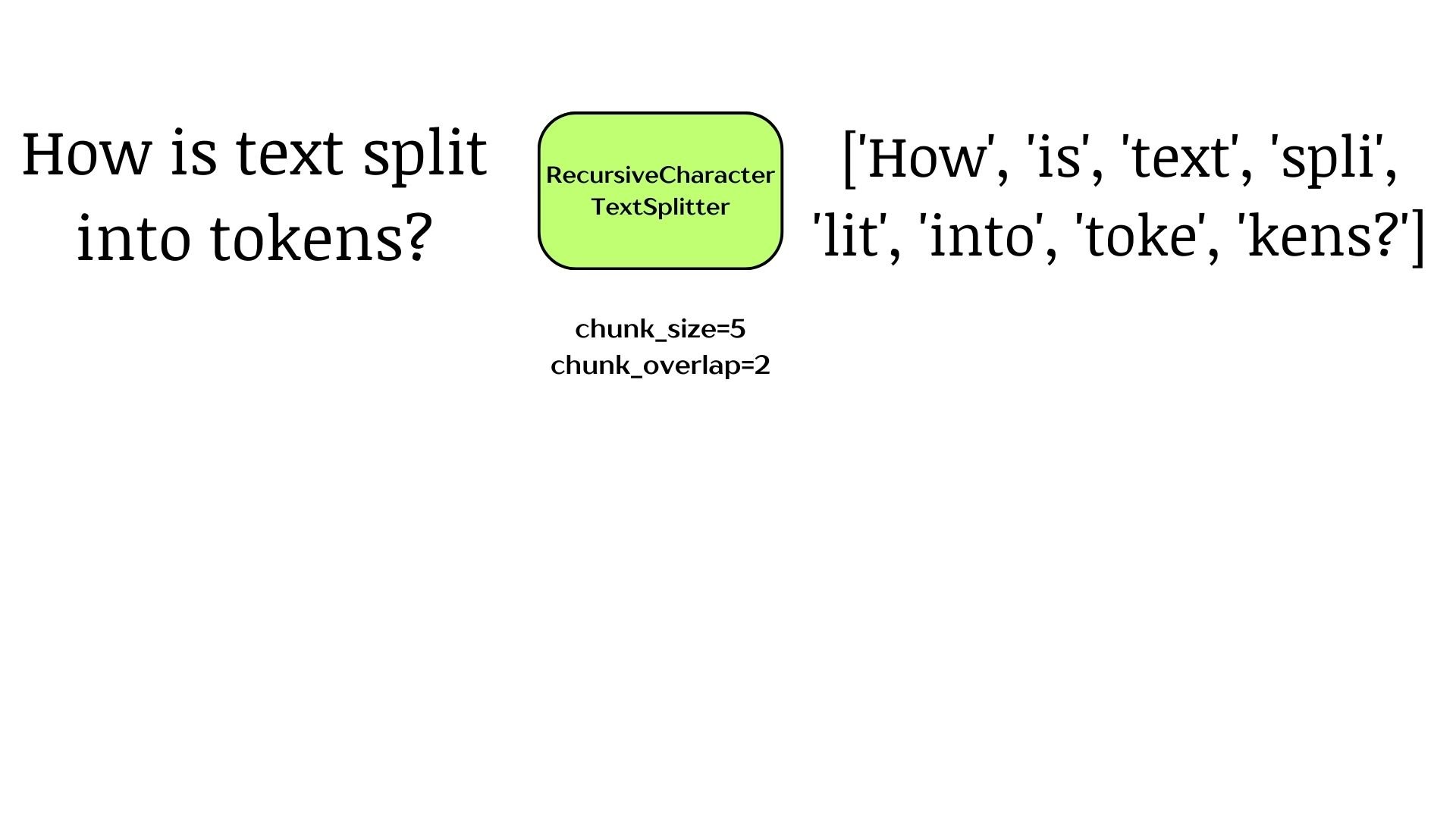

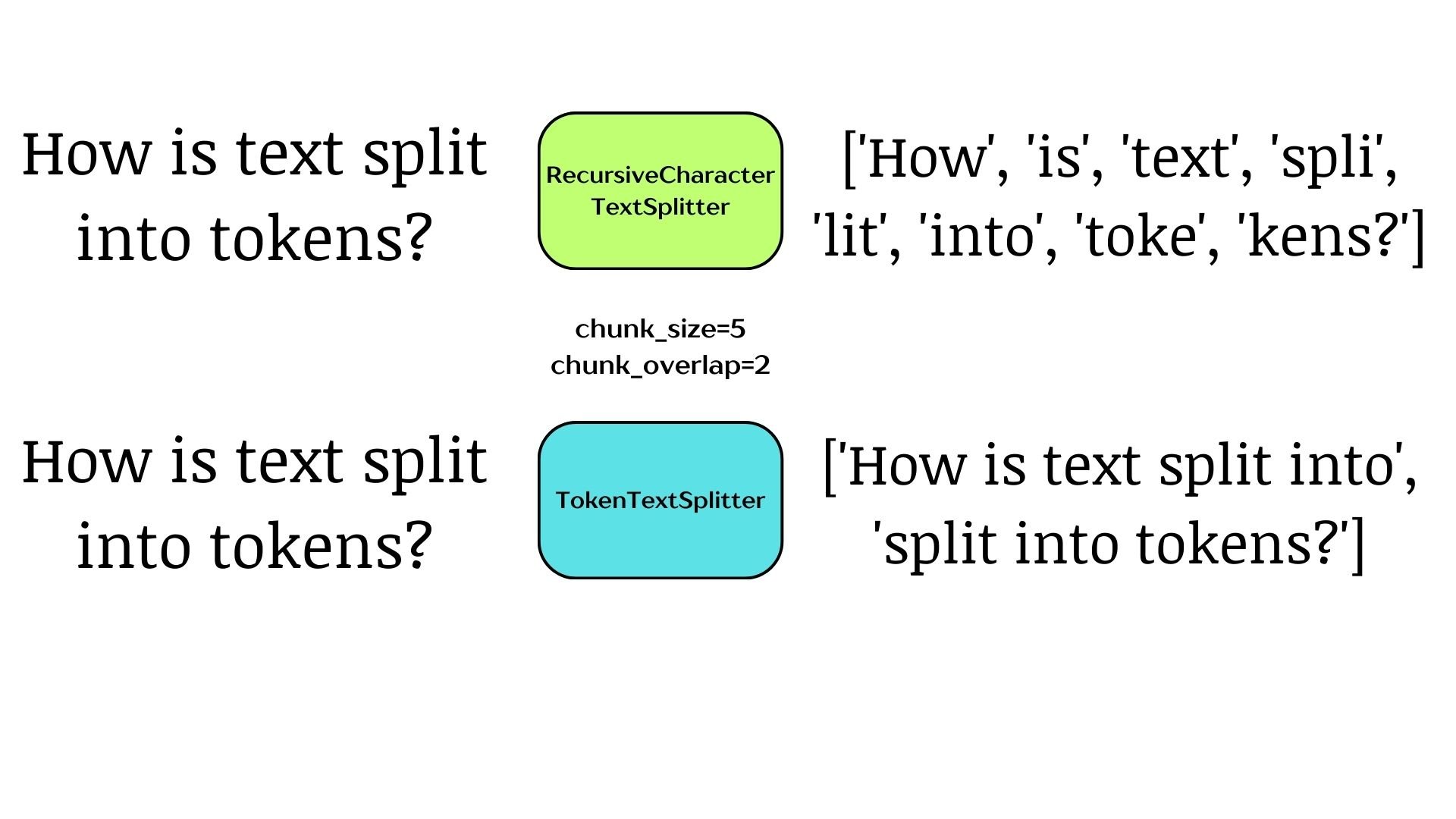

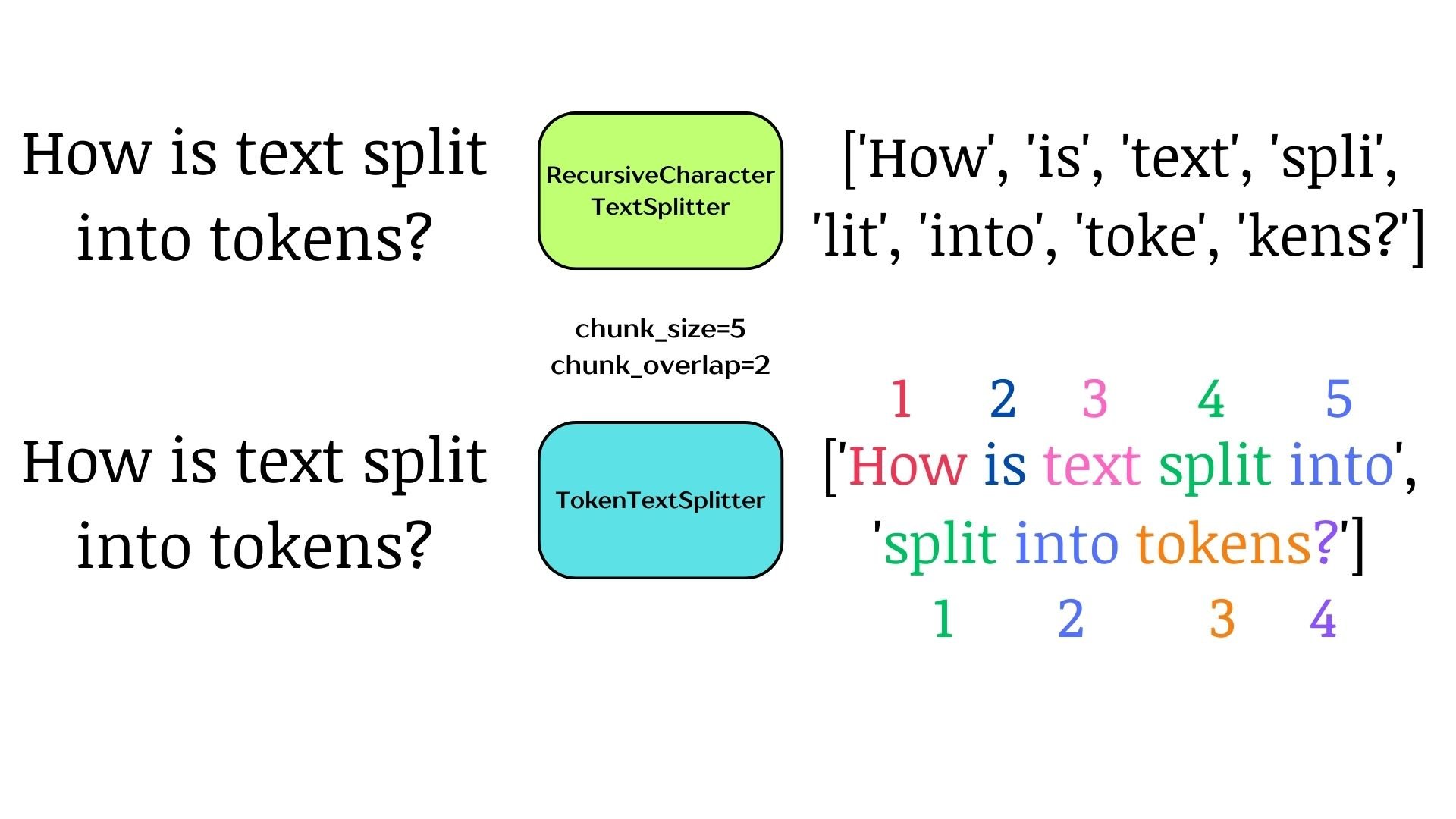

🖇 Splits are made on characters, not tokens

- Models process text as tokens, not characters

- Chunks sized by character count risk exceeding the model's context window

→ SemanticChunker

→ TokenTextSplitter
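To see why a character count is a poor proxy for what a model consumes, the minimal sketch below compares character and token counts for two strings of equal length. The `toy_tokenize` function is a hypothetical stand-in (one token per word or punctuation mark), not a real model tokenizer; real tokenizers use learned subword vocabularies, so actual counts will differ.

```python
import re

def toy_tokenize(text: str) -> list[str]:
    """Hypothetical tokenizer: each word or punctuation mark is one token."""
    return re.findall(r"\w+|[^\w\s]", text)

# Two strings with the same number of characters...
prose = "aaaa bbbb cccc dddd"
symbols = "a, b; c: d! e? f. g"

# ...but very different token counts under the toy tokenizer
for chunk in (prose, symbols):
    print(f"{len(chunk)} chars -> {len(toy_tokenize(chunk))} tokens")
# 19 chars -> 4 tokens
# 19 chars -> 13 tokens
```

A chunk size expressed in characters therefore gives no firm guarantee about how many tokens a chunk will use.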

Splitting on tokens

    import tiktoken
    from langchain_text_splitters import TokenTextSplitter

    example_string = "Mary had a little lamb, it's fleece was white as snow."

    # Use the tokenizer encoding that matches the target model
    encoding = tiktoken.encoding_for_model('gpt-4o-mini')

    # chunk_size and chunk_overlap are measured in tokens, not characters
    splitter = TokenTextSplitter(encoding_name=encoding.name,
                                 chunk_size=10,
                                 chunk_overlap=2)

    chunks = splitter.split_text(example_string)

    for i, chunk in enumerate(chunks):
        print(f"Chunk {i+1}:\n{chunk}\n")

    Chunk 1:
    Mary had a little lamb, it's fleece

    Chunk 2:
    fleece was white as snow.

    for i, chunk in enumerate(chunks):
        print(f"Chunk {i+1}:\nNo. tokens: {len(encoding.encode(chunk))}\n{chunk}\n")

    Chunk 1:
    No. tokens: 10
    Mary had a little lamb, it's fleece was

    Chunk 2:
    No. tokens: 6
    fleece was white as snow.
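The sliding-window idea behind token-based splitting can be sketched in a few lines: take `chunk_size` tokens, then move the window forward by `chunk_size - chunk_overlap` tokens so that consecutive chunks share the overlap. This is an illustration under stated assumptions rather than the library's implementation: `split_on_tokens` is a hypothetical helper, and whitespace splitting stands in for a real tokenizer.

```python
def split_on_tokens(tokens: list[str], chunk_size: int, chunk_overlap: int) -> list[list[str]]:
    """Slide a window of chunk_size tokens, stepping by chunk_size - chunk_overlap."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    start = 0
    while start < len(tokens):
        end = min(start + chunk_size, len(tokens))
        chunks.append(tokens[start:end])
        if end == len(tokens):  # the window reached the end of the text
            break
        start += step
    return chunks

# Assumption: whitespace splitting stands in for a real tokenizer
tokens = "Mary had a little lamb its fleece was white as snow".split()

for i, chunk in enumerate(split_on_tokens(tokens, chunk_size=5, chunk_overlap=2)):
    print(f"Chunk {i+1} ({len(chunk)} tokens): {' '.join(chunk)}")
# Chunk 1 (5 tokens): Mary had a little lamb
# Chunk 2 (5 tokens): little lamb its fleece was
# Chunk 3 (5 tokens): fleece was white as snow
```

Because sizes are counted in tokens, no chunk can exceed `chunk_size` tokens, which is what makes it possible to stay within a context window.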

Semantic splitting

    from langchain_openai import OpenAIEmbeddings
    from langchain_experimental.text_splitter import SemanticChunker

    embeddings = OpenAIEmbeddings(api_key="...", model='text-embedding-3-small')

    semantic_splitter = SemanticChunker(
        embeddings=embeddings,
        breakpoint_threshold_type="gradient",
        breakpoint_threshold_amount=0.8
    )

1 https://api.python.langchain.com/en/latest/text_splitter/langchain_experimental.text_splitter.SemanticChunker.html

    chunks = semantic_splitter.split_documents(data)
    print(chunks[0])

    page_content='Retrieval-Augmented Generation for\nKnowledge-Intensive NLP Tasks\nPatrick Lewis,
    Ethan Perez,\nAleksandra Piktus, Fabio Petroni, Vladimir Karpukhin, Naman Goyal, Heinrich
    Küttler,\nMike Lewis, Wen-tau Yih, Tim Rocktäschel, Sebastian Riedel, Douwe Kiela\nFacebook AI
    Research; University College London;New York University;\[email protected]\nAbstract\nLarge
    pre-trained language models have been shown to store factual knowledge\nin their parameters,
    and achieve state-of-the-art results when fine-tuned on down-\nstream NLP tasks. However, their
    ability to access and precisely manipulate knowl-\nedge is still limited, and hence on
    knowledge-intensive tasks, their performance\nlags behind task-specific architectures.'
    metadata={'source': 'rag_paper.pdf', 'page': 0}
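The general idea behind semantic splitting can be sketched without any external services: embed consecutive sentences, measure how far apart neighbouring sentences are, and start a new chunk where the distance is large. This is a simplified illustration, not the `SemanticChunker` algorithm. `toy_embed` is a hypothetical bag-of-words stand-in for a real embedding model, `semantic_split` is a hypothetical helper, and the fixed `threshold` is an assumption; the library's threshold types, such as `"gradient"`, determine breakpoints differently.

```python
import math
import re
from collections import Counter

def toy_embed(sentence: str) -> Counter:
    """Hypothetical embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"\w+", sentence.lower()))

def cosine_distance(a: Counter, b: Counter) -> float:
    """Return 1 - cosine similarity; 1.0 means no shared words."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return 1.0 - dot / norm if norm else 1.0

def semantic_split(sentences: list[str], threshold: float) -> list[str]:
    """Start a new chunk wherever neighbouring sentences are further apart than threshold."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    for prev, curr in zip(sentences, sentences[1:]):
        if cosine_distance(toy_embed(prev), toy_embed(curr)) > threshold:
            chunks.append([curr])      # topic shift: open a new chunk
        else:
            chunks[-1].append(curr)    # same topic: extend the current chunk
    return [" ".join(chunk) for chunk in chunks]

sentences = [
    "RAG retrieves documents for the model.",
    "The model reads the retrieved documents.",
    "Bananas are rich in potassium.",
    "Potassium in bananas supports muscles.",
]
for chunk in semantic_split(sentences, threshold=0.8):
    print(chunk)
# RAG retrieves documents for the model. The model reads the retrieved documents.
# Bananas are rich in potassium. Potassium in bananas supports muscles.
```

With a real embedding model, sentences on the same topic sit close together even when they share no words, which a bag-of-words vector cannot capture.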

Let's practice!
