Introduction to RAG evaluation

Retrieval Augmented Generation (RAG) with LangChain

Meri Nova

Machine Learning Engineer

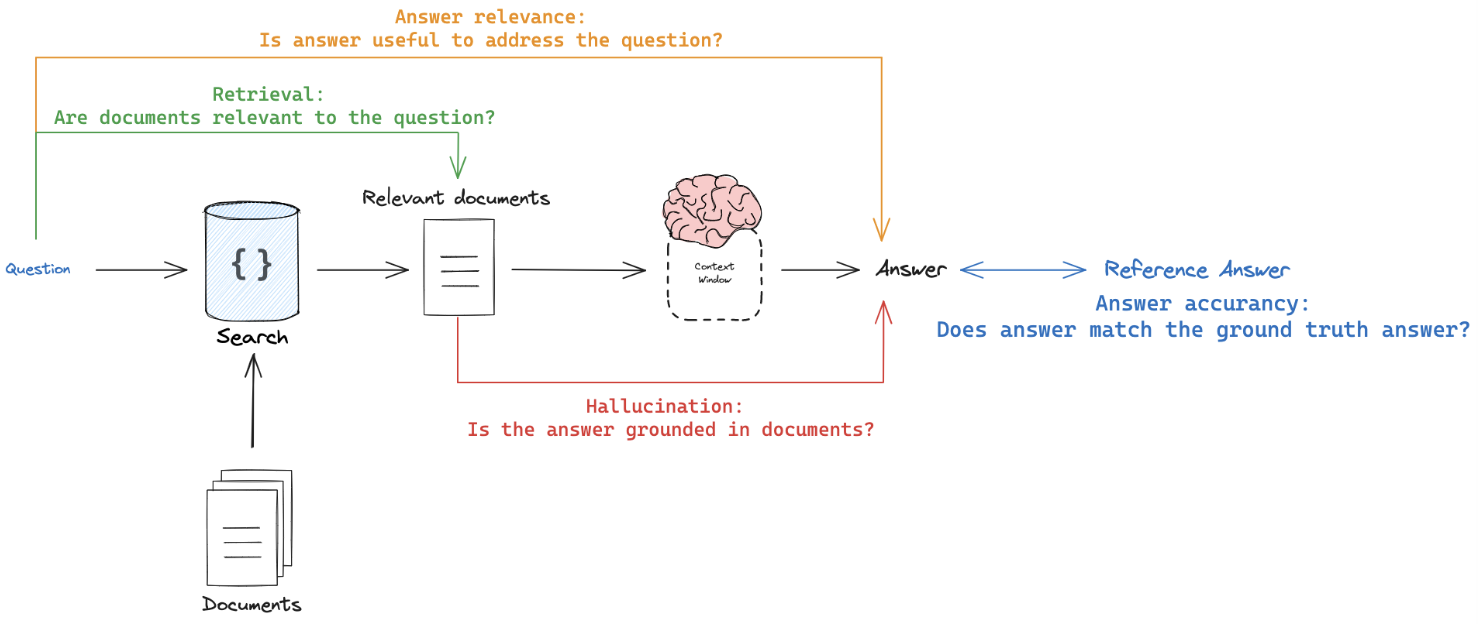

Types of RAG evaluation

Image credit: LangSmith

Output accuracy: string evaluation

query = "What are the main components of RAG architecture?"

predicted_answer = "Training and encoding"

ref_answer = "Retrieval and Generation"


prompt_template = """You are an expert professor specialized in grading students' answers to questions.

You are grading the following question:{query}

Here is the real answer:{answer}

You are grading the following predicted answer:{result}

Respond with CORRECT or INCORRECT:

Grade:"""

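from langchain_core.prompts import PromptTemplate
from langchain_openai import ChatOpenAI

# Wrap the grading instructions in a template with three placeholders
prompt = PromptTemplate(
    input_variables=["query", "answer", "result"],
    template=prompt_template
)

# temperature=0 keeps the grader's verdicts as repeatable as possible
eval_llm = ChatOpenAI(temperature=0, model="gpt-4o-mini", openai_api_key='...')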
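To check what the grading model will actually receive, the template can be filled in by hand. The sketch below uses plain `str.format`, which substitutes the same `{query}`, `{answer}`, and `{result}` placeholders; it assumes the default f-string-style template format and makes no model call.

```python
# Same grading template as above, filled with plain str.format (no LLM call).
prompt_template = """You are an expert professor specialized in grading students' answers to questions.
You are grading the following question:{query}
Here is the real answer:{answer}
You are grading the following predicted answer:{result}
Respond with CORRECT or INCORRECT:
Grade:"""

filled_prompt = prompt_template.format(
    query="What are the main components of RAG architecture?",
    answer="Retrieval and Generation",  # reference answer
    result="Training and encoding",     # model's predicted answer
)
print(filled_prompt)
```

Printing the filled prompt is a cheap way to spot a swapped reference and prediction before spending tokens on grading.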


from langsmith.evaluation import LangChainStringEvaluator

qa_evaluator = LangChainStringEvaluator(
    "qa",
    config={
        "llm": eval_llm,
        "prompt": prompt  # the PromptTemplate defined above
    }
)

# Grade the predicted answer against the reference answer for this query
score = qa_evaluator.evaluator.evaluate_strings(
    prediction=predicted_answer,
    reference=ref_answer,
    input=query
)


print(f"Score: {score}")

Score: {'reasoning': 'INCORRECT', 'value': 'INCORRECT', 'score': 0}
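The result dictionary pairs the grader's verdict with a numeric score. As a rough illustration of that mapping, here is a minimal sketch with a hypothetical helper, `parse_grade`; it is not the parser LangChain uses internally, only an assumption about how a CORRECT/INCORRECT reply could be turned into the fields shown above.

```python
def parse_grade(text: str) -> dict:
    """Map a grader reply to a result dict (hypothetical helper, not LangChain's parser)."""
    cleaned = text.strip()
    # Split into whole words and drop trailing punctuation such as "CORRECT."
    words = [w.strip(".,:;!") for w in cleaned.upper().split()]
    # Compare whole words: "INCORRECT" contains "CORRECT" as a substring,
    # so a naive `"CORRECT" in text` check would mark every answer correct.
    if "INCORRECT" in words:
        value, score = "INCORRECT", 0
    elif "CORRECT" in words:
        value, score = "CORRECT", 1
    else:
        value, score = None, None  # reply did not follow the instructions
    return {"reasoning": cleaned, "value": value, "score": score}

print(parse_grade("INCORRECT"))
```

Averaging the `score` field over many question and answer pairs gives a simple accuracy figure for the whole application.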


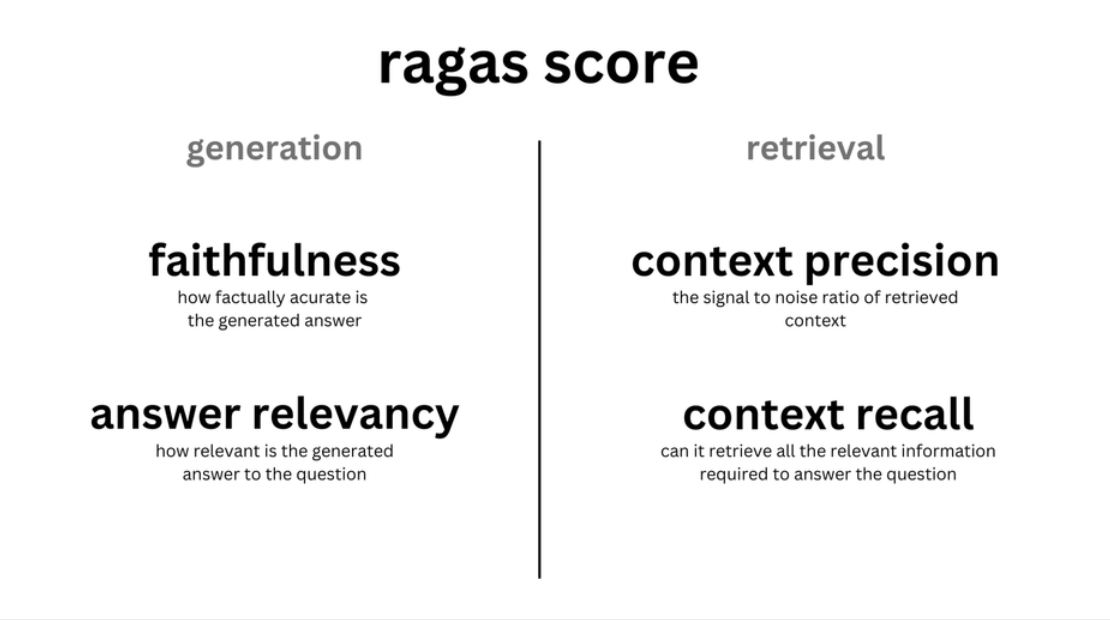

Ragas framework

Image credit: Ragas

Faithfulness

- Does the generated output faithfully represent the context?

$$ \text{Faithfulness} = \frac{\text{No. of claims made that can be inferred from the context}}{\text{Total no. of claims}} $$

- Normalized to the range 0 to 1
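The ratio above can be worked through with a few lines of Python. This is a minimal sketch: in Ragas the claims are extracted and judged by an LLM, whereas here the per-claim verdicts are hard-coded, hypothetical values, and `faithfulness_score` is an illustrative name rather than a library function.

```python
def faithfulness_score(claim_supported: list[bool]) -> float:
    """Fraction of claims in the answer that can be inferred from the context."""
    if not claim_supported:
        raise ValueError("the answer must contain at least one claim")
    return sum(claim_supported) / len(claim_supported)

# Hypothetical verdicts for an answer broken into four claims:
# three are supported by the retrieved context, one is not.
verdicts = [True, True, False, True]
print(faithfulness_score(verdicts))  # 0.75
```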

Evaluating faithfulness

from langchain_openai import ChatOpenAI, OpenAIEmbeddings
from ragas.integrations.langchain import EvaluatorChain
from ragas.metrics import faithfulness

llm = ChatOpenAI(model="gpt-4o-mini", api_key="...")
embeddings = OpenAIEmbeddings(model="text-embedding-3-small", api_key="...")

faithfulness_chain = EvaluatorChain(
    metric=faithfulness,
    llm=llm,
    embeddings=embeddings
)


eval_result = faithfulness_chain({
    "question": "How does the RAG model improve question answering with LLMs?",
    "answer": "The RAG model improves question answering by combining the retrieval of documents...",
    "contexts": [
        "The RAG model integrates document retrieval with LLMs by first retrieving relevant passages...",
        "By incorporating retrieval mechanisms, RAG leverages external knowledge sources, allowing the...",
    ]
})

print(eval_result)

'faithfulness': 1.0

Context precision

- How relevant are the retrieved documents to the query?

- Normalized to the range 0 to 1, where 1 = highly relevant

from ragas.metrics import context_precision

llm = ChatOpenAI(model="gpt-4o-mini", api_key="...")
embeddings = OpenAIEmbeddings(model="text-embedding-3-small", api_key="...")

context_precision_chain = EvaluatorChain(
    metric=context_precision,
    llm=llm,
    embeddings=embeddings
)

Evaluating context precision

eval_result = context_precision_chain({
    "question": "How does the RAG model improve question answering with large language models?",
    "ground_truth": "The RAG model improves question answering by combining the retrieval of...",
    "contexts": [
        "The RAG model integrates document retrieval with LLMs by first retrieving...",
        "By incorporating retrieval mechanisms, RAG leverages external knowledge sources...",
    ]
})

print(f"Context Precision: {eval_result['context_precision']}")

Context Precision: 0.99999999995
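One way to read a score like this: Ragas documents context precision as the mean of precision@k over the ranks that hold a relevant chunk. The sketch below is a simplified reimplementation under that definition, not the library's code. The per-chunk relevance verdicts are hard-coded instead of judged by an LLM, `context_precision_score` is a hypothetical name, and the small `eps` added to the denominator to avoid division by zero is an assumption. Under that assumption, two relevant chunks yield a value just below 1 rather than exactly 1.

```python
def context_precision_score(relevant: list[int], eps: float = 1e-10) -> float:
    """Mean precision@k over the ranks whose chunk is relevant (1) rather than not (0)."""
    numerator = 0.0
    hits = 0  # number of relevant chunks seen up to rank k
    for k, verdict in enumerate(relevant, start=1):
        hits += verdict
        numerator += (hits / k) * verdict  # precision@k counts only at relevant ranks
    # eps (an assumption) keeps the division defined when nothing is relevant
    return numerator / (sum(relevant) + eps)

print(context_precision_score([1, 1]))  # both retrieved chunks relevant: just below 1
print(context_precision_score([0, 1]))  # relevant chunk ranked second: about 0.5
```

Because precision@k is weighted by rank, the metric rewards retrievers that place relevant chunks first, not just ones that include them somewhere.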

Let's practice!
