Building an LCEL retrieval chain

Retrieval Augmented Generation (RAG) with LangChain

Meri Nova

Machine Learning Engineer

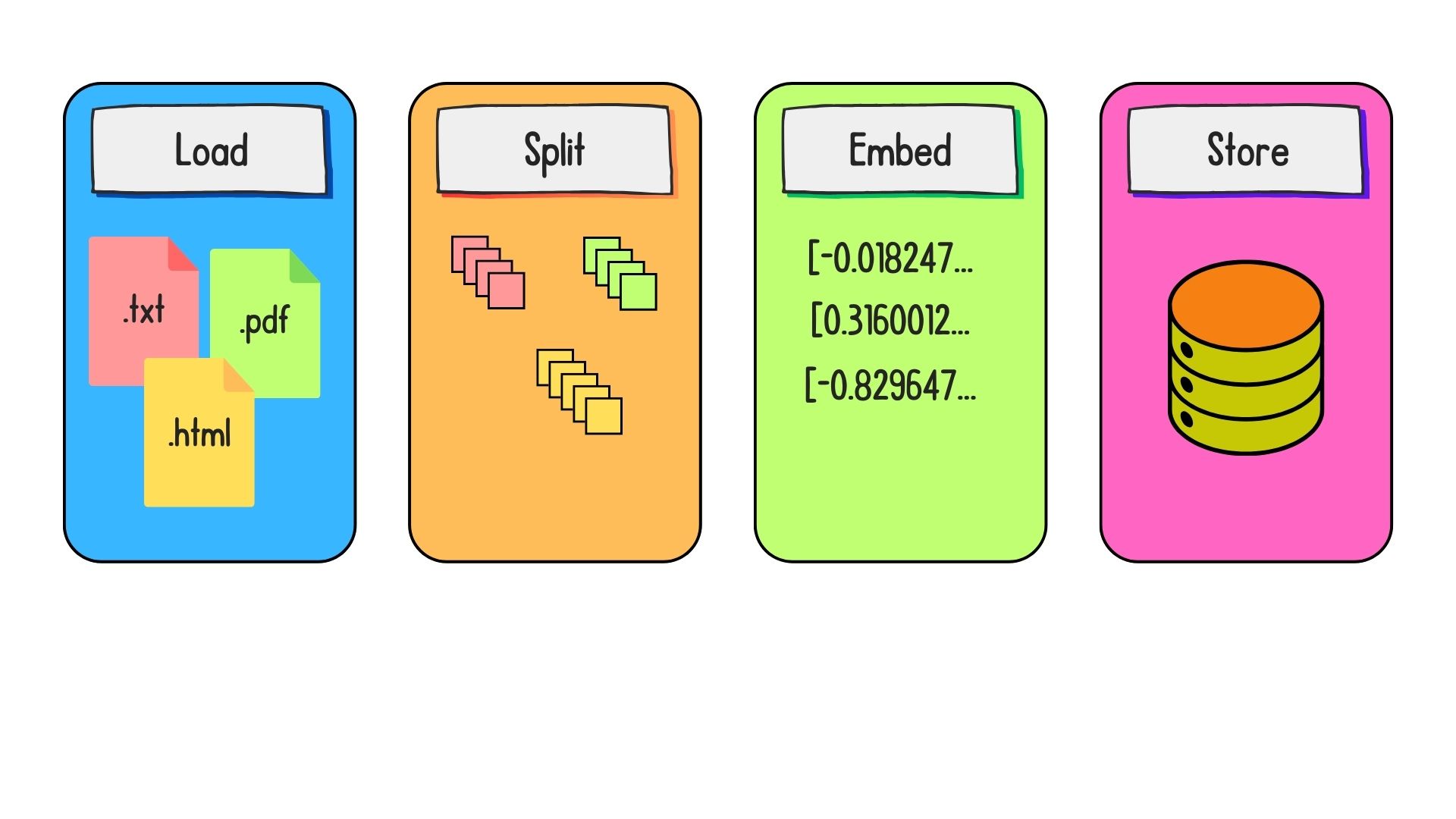

Preparing data for retrieval

Introduction to LCEL for RAG

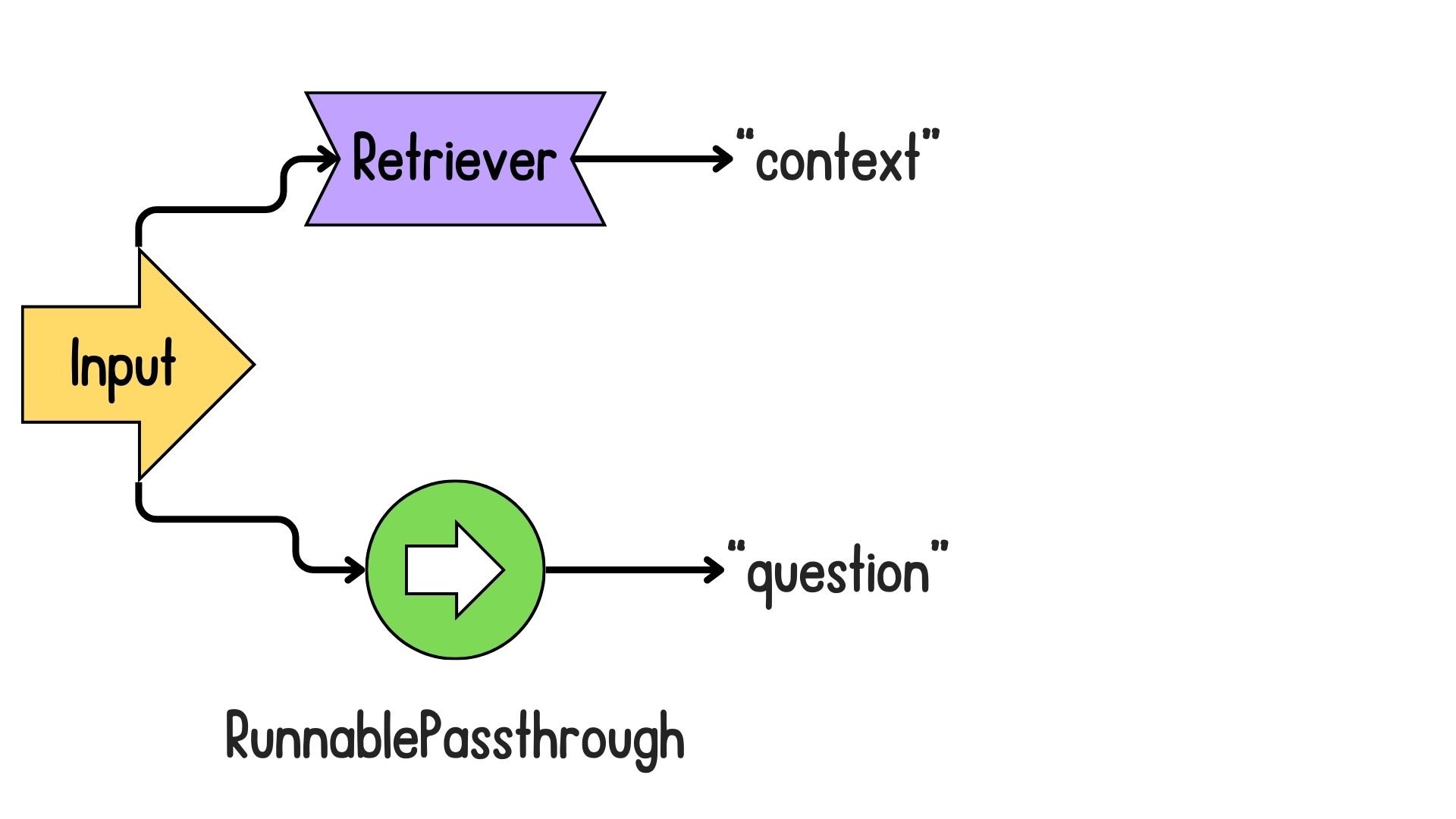

Instantiating a retriever

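# Assumes the langchain-chroma package; chunks and embedding_model come from the data preparation step
from langchain_chroma import Chroma

vector_store = Chroma.from_documents(
    documents=chunks,
    embedding=embedding_model
)

# Return the 2 chunks most similar to each query
retriever = vector_store.as_retriever(
    search_type="similarity",
    search_kwargs={"k": 2}
)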
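To make search_type="similarity" with k=2 more concrete, here is a minimal pure-Python sketch of top-k similarity ranking. It is a conceptual illustration only, not Chroma's implementation: the helper names (cosine_similarity, top_k_similar), the choice of cosine similarity, and the 3-dimensional toy vectors are all assumptions made for this example. The metric a real vector store uses depends on how it is configured.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k_similar(query_vector, chunk_vectors, k=2):
    """Return the indices of the k chunk vectors most similar to the query."""
    ranked = sorted(
        range(len(chunk_vectors)),
        key=lambda i: cosine_similarity(query_vector, chunk_vectors[i]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical "embeddings" for four chunks (made-up numbers, not real model output).
toy_chunk_vectors = [
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
toy_query_vector = [1.0, 0.05, 0.0]

# With k=2, only the two closest chunks are kept, mirroring search_kwargs={"k": 2}.
top_indices = top_k_similar(toy_query_vector, toy_chunk_vectors, k=2)
print(top_indices)
```

A larger k gives the model more context to draw on, at the cost of a longer prompt and a higher chance of including loosely related chunks.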

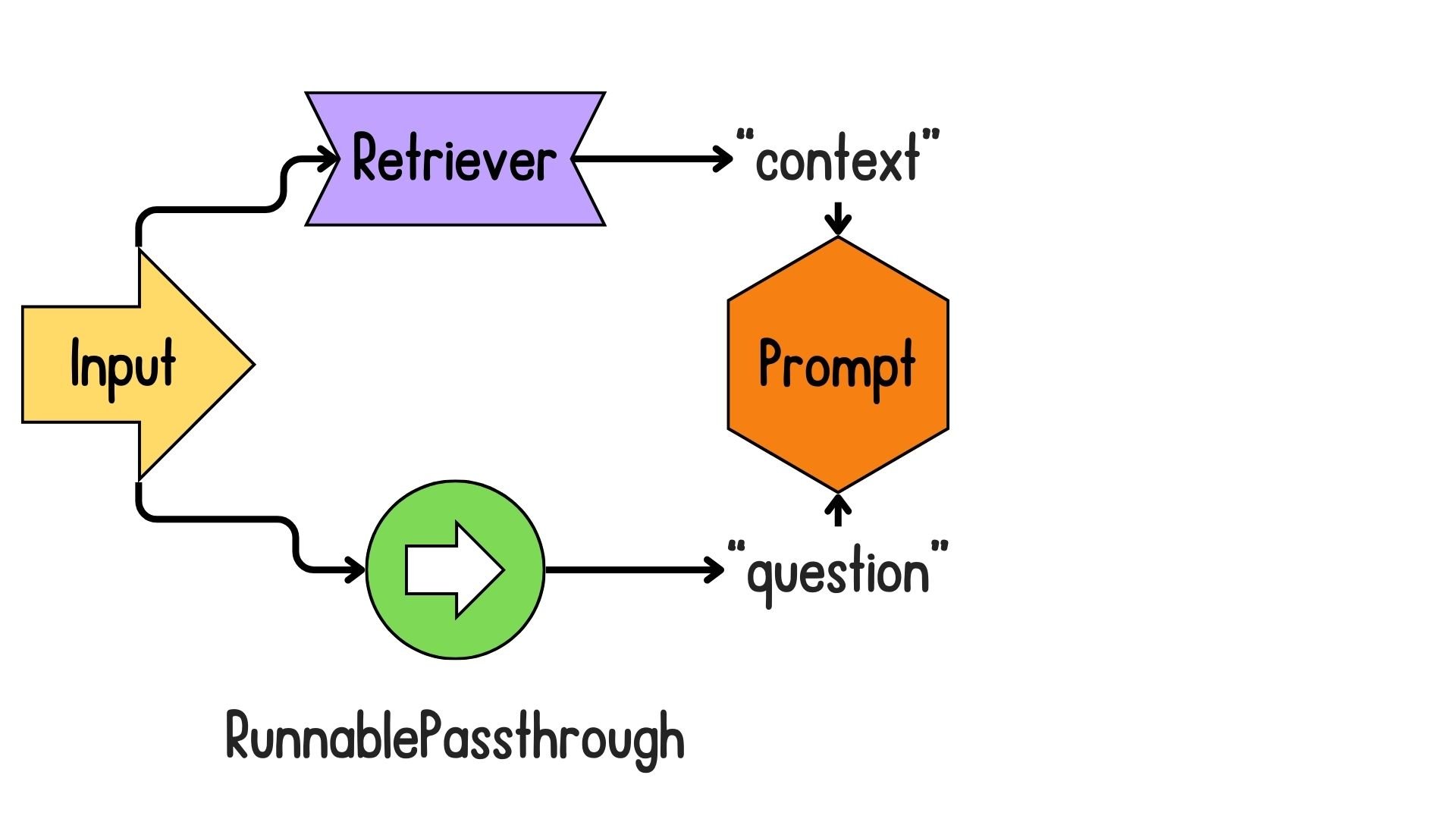

Creating a prompt template

from langchain_core.prompts import ChatPromptTemplate

prompt = ChatPromptTemplate.from_template("""
Use the following pieces of context to answer the question at the end.
If you don't know the answer, say that you don't know.
Context: {context}
Question: {question}
""")

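from langchain_openai import ChatOpenAI

# temperature=0 keeps the answers as deterministic as possible
llm = ChatOpenAI(model="gpt-4o-mini", api_key="...", temperature=0)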
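The template has two placeholders, {context} and {question}, which are filled in each time the chain runs. The sketch below imitates that substitution with plain str.format so the final text sent to the model is easy to see. It is a simplification: ChatPromptTemplate produces chat messages rather than a bare string, and the fill_prompt helper, the way chunks are joined, and the toy chunks are assumptions made for illustration.

```python
TEMPLATE = """Use the following pieces of context to answer the question at the end.
If you don't know the answer, say that you don't know.
Context: {context}
Question: {question}
"""


def fill_prompt(context_chunks, question):
    """Join the retrieved chunks and substitute both placeholders."""
    context = "\n\n".join(context_chunks)
    return TEMPLATE.format(context=context, question=question)


# Hypothetical retrieved chunks, invented for this example.
toy_chunks = ["Chunk one text.", "Chunk two text."]

filled = fill_prompt(toy_chunks, "What is this about?")
print(filled)
```

Keeping the "say that you don't know" instruction in the template is what discourages the model from answering beyond the retrieved context.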

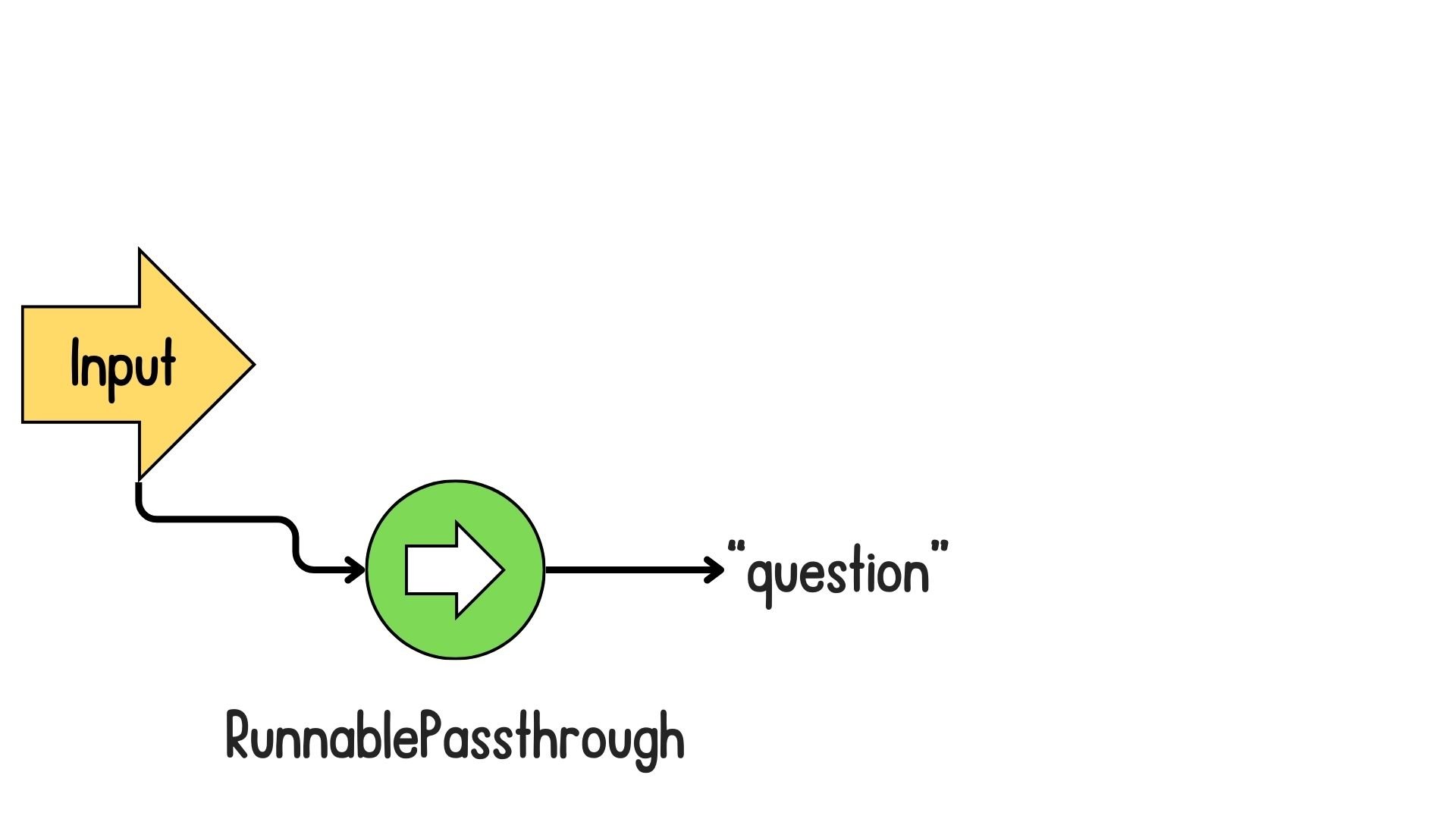

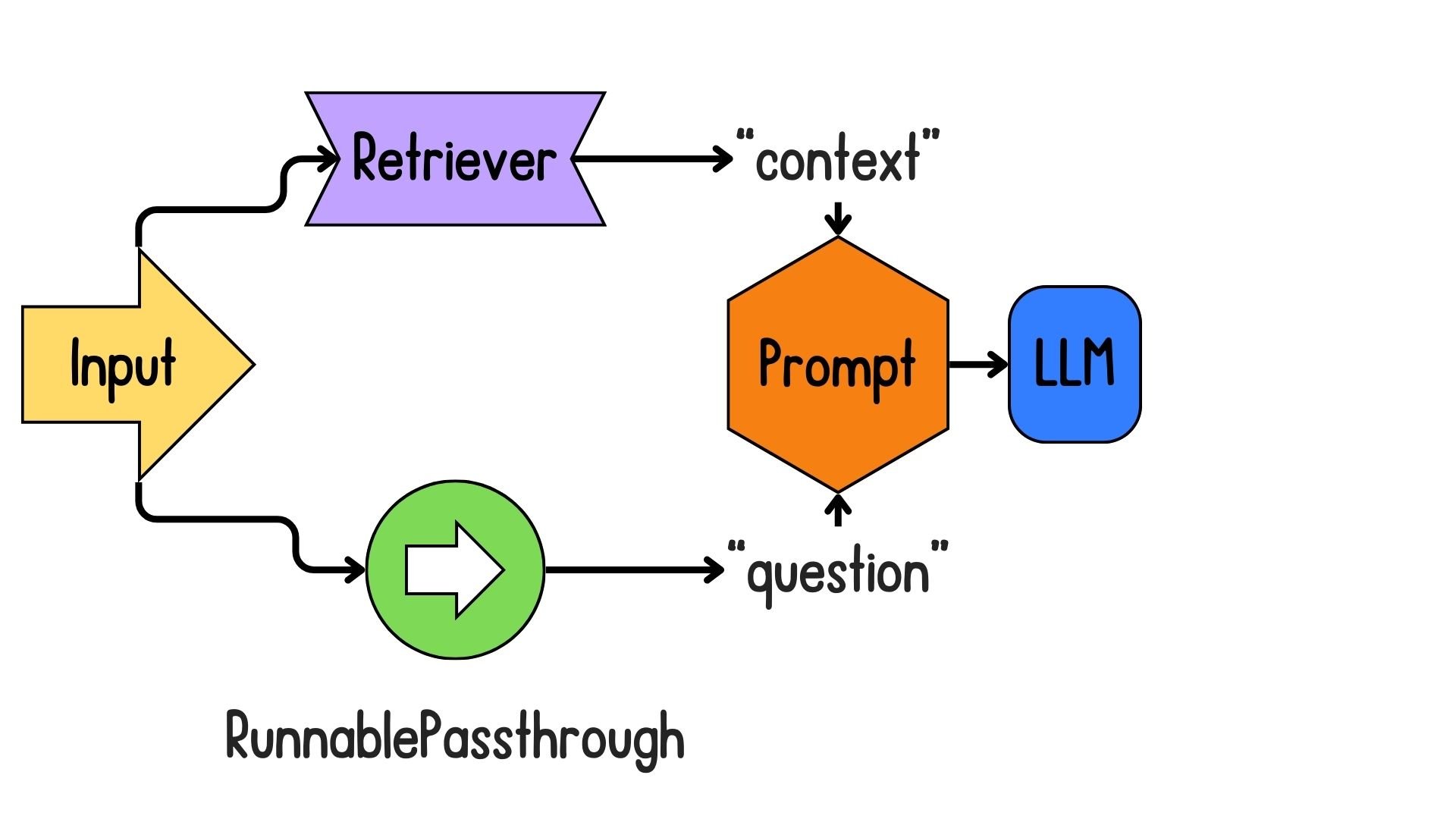

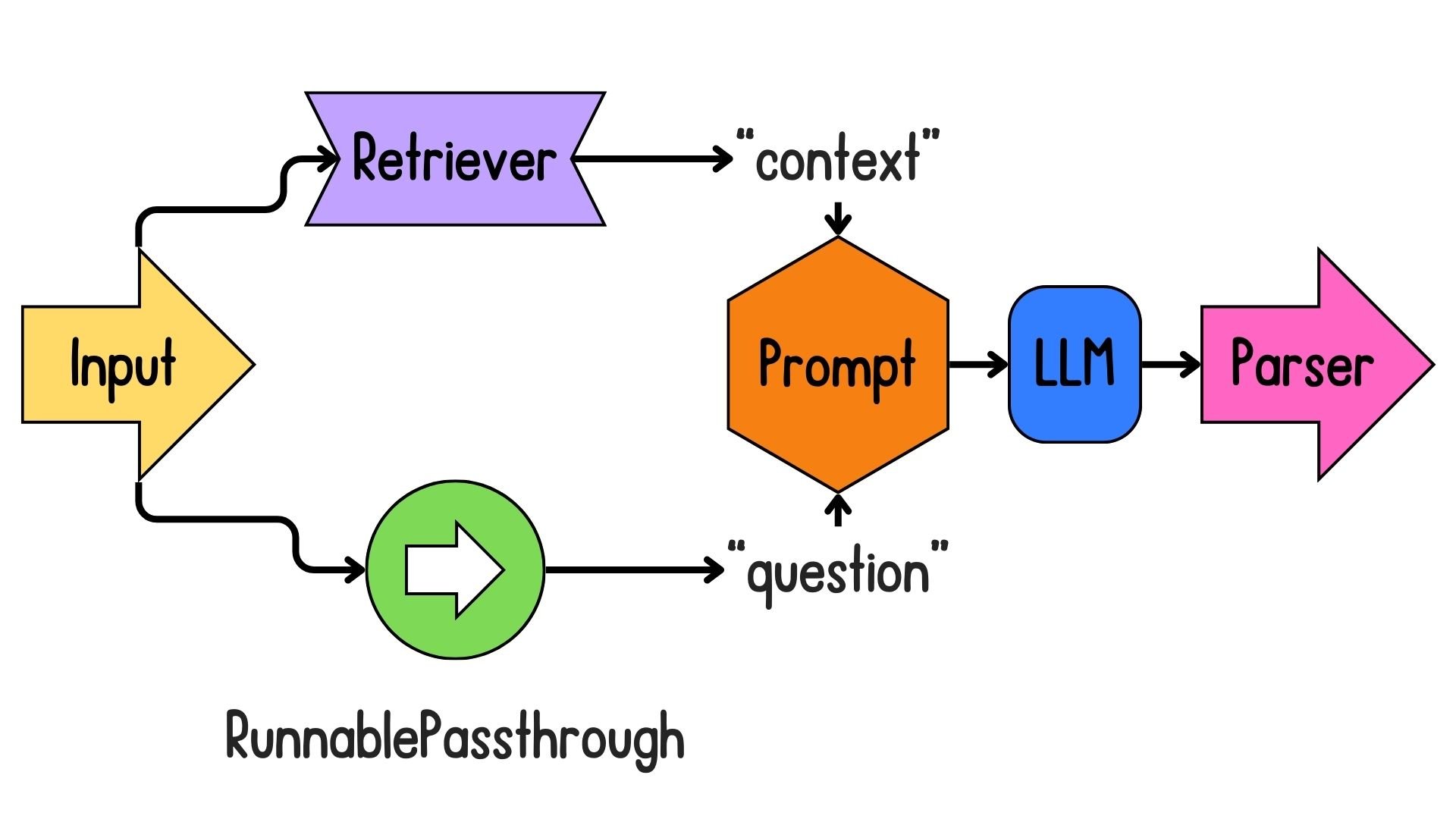

Building an LCEL retrieval chain

from langchain_core.runnables import RunnablePassthrough
from langchain_core.output_parsers import StrOutputParser

chain = (
    {"context": retriever, "question": RunnablePassthrough()}
    | prompt
    | llm
    | StrOutputParser()
)
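The pipe syntax can look like magic at first, so here is a toy, pure-Python sketch of the idea behind it: each step exposes invoke, the | operator chains steps left to right, and a dict of steps sends the same input to every value. This is not LangChain's implementation. The Step class, the as_step helper, and all the toy_ components are hypothetical stand-ins written only to show the data flow.

```python
class Step:
    """A toy stand-in for a runnable: wraps a function and supports `|`."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    def __or__(self, other):
        # `a | b` builds a new step that runs a, then feeds its output to b.
        next_step = as_step(other)
        return Step(lambda value: next_step.invoke(self.invoke(value)))

    def __ror__(self, other):
        # Handles `{"key": step} | step`, where the left operand is a plain dict.
        return as_step(other) | self


def as_step(obj):
    """Return obj if it is a Step; turn a dict of steps into one fan-out step."""
    if isinstance(obj, Step):
        return obj
    if isinstance(obj, dict):
        # Every value receives the same input; results are collected by key.
        return Step(
            lambda value: {key: as_step(s).invoke(value) for key, s in obj.items()}
        )
    raise TypeError(f"Cannot use {type(obj).__name__} as a step")


# Toy components (assumptions): no real retriever, prompt, or model is involved.
toy_retriever = Step(lambda q: f"[chunks relevant to: {q}]")
toy_passthrough = Step(lambda q: q)
toy_prompt = Step(lambda d: f"Context: {d['context']}\nQuestion: {d['question']}")
toy_llm = Step(lambda p: f"  (model reply to)\n{p}  ")
toy_parser = Step(lambda s: s.strip())

toy_chain = (
    {"context": toy_retriever, "question": toy_passthrough}
    | toy_prompt
    | toy_llm
    | toy_parser
)

answer = toy_chain.invoke("What is RAG?")
print(answer)
```

In this toy version the single input string reaches both the retriever and the passthrough, which is how the question ends up in the prompt twice: once as the search query and once verbatim.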

Invoking the retrieval chain

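# Pass the question as a plain string: the same input goes to the retriever
# (as the search query) and through RunnablePassthrough into {question}
result = chain.invoke("What are the key findings or results presented in the paper?")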

print(result)

- Top Performance: RAG models set new records on open-domain question answering tasks...

- Better Generation: RAG models produce more specific, diverse, and factual language...

- Dynamic Knowledge Use: The non-parametric memory allows RAG models to access and ...

Let's practice!
